Covering text, localization, and segmentation tasks: BAAI and CUHK jointly propose the first multi-functional 3D medical multimodal large model

Author | Fan Bai, The Chinese University of Hong Kong

Editor | ScienceAI

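Recently, The Chinese University of Hong Kong (CUHK) and the Beijing Academy of Artificial Intelligence (BAAI) jointly proposed the M3D series of work, comprising M3D-Data, M3D-LaMed, and M3D-Bench, which advances 3D medical image analysis across datasets, models, and evaluation.

(1) M3D-Data is currently the largest 3D medical image dataset. It comprises four sub-datasets: M3D-Cap (120K 3D image-text pairs), M3D-VQA (510K question-answer pairs), M3D-Seg (150K 3D masks), and M3D-RefSeg (3K reasoning segmentation samples).

(2) M3D-LaMed is currently the most versatile 3D medical multimodal large model. It handles three categories of medical analysis tasks: text (disease diagnosis, image retrieval, visual question answering, report generation, and so on), localization (object detection, visual grounding, and so on), and segmentation (semantic segmentation, referring segmentation, reasoning segmentation, and so on).

(3) M3D-Bench comprehensively and automatically evaluates 8 tasks spanning text, localization, and segmentation, and provides manually verified test data.

We first released the dataset, model, and code in April 2024.

Recently, we released a smaller and stronger model, M3D-LaMed-Phi-3-4B, and added an online demo for everyone to try.

For the latest progress, please follow updates to the GitHub repository. If you have any questions or suggestions, feel free to contact us. We welcome discussion and support for our work.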

- Paper: https://arxiv.org/abs/2404.00578

- Code: https://github.com/BAAI-DCAI/M3D

- Model: https://huggingface.co/GoodBaiBai88/M3D-LaMed-Phi-3-4B

- Dataset: https://github.com/BAAI-DCAI/M3D?tab=readme-ov-file#data

- Online demo: https://baai.rpailab.xyz/

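What can we offer researchers working on medical images?

- M3D-Data, the largest 3D medical multimodal dataset;

- M3D-Seg, which integrates almost all open-source 3D medical segmentation datasets, 25 in total;

- M3D-LaMed, the most versatile 3D medical multimodal large model, supporting text, localization, and segmentation. It comes with a clean, simple code framework, so researchers can easily modify the settings of each module;

- M3D-CLIP, an image-text contrastive learning model that we trained on the M3D-Cap 3D image-text pairs. We release its pretrained vision weights, 3DViT;

- M3D-Bench, a comprehensive and clear evaluation protocol with code.

- All resources covered in this article are fully open. We hope they help researchers jointly advance 3D medical image analysis.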

Online demo video.

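Medical image analysis is essential to clinical diagnosis and treatment, and multimodal large language models (MLLMs) increasingly support it. However, previous research has focused mainly on 2D medical images. 3D images carry richer spatial information, yet they remain insufficiently studied and explored.

This work aims to advance 3D medical image analysis with MLLMs. To this end, we present M3D-Data, a large-scale 3D multimodal medical dataset containing 120K image-text pairs and 662K instruction-response pairs, tailored to a range of 3D medical tasks such as image-text retrieval, report generation, visual question answering, localization, and segmentation.

In addition, we propose M3D-LaMed, a versatile multimodal large language model for 3D medical image analysis.

We also introduce M3D-Bench, a new 3D multimodal medical benchmark that supports automatic evaluation across eight tasks. Comprehensive evaluation shows that our method is a robust model for 3D medical image analysis and outperforms existing solutions. All code, data, and models are publicly available at the links listed above.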

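Dataset

M3D-Data comprises 4 sub-datasets: M3D-Cap (image-text pairs), M3D-VQA (visual question-answer pairs), M3D-RefSeg (reasoning segmentation), and M3D-Seg (an integration of 25 3D segmentation datasets).

Dataset statistics.

Distribution of the M3D-VQA dataset. The question types mainly cover five common categories of 3D image questions: plane, phase, organ, abnormality, and location.

We integrated almost all open-source 3D medical segmentation datasets, 25 in total, to form M3D-Seg. The dataset can be used for semantic segmentation, reasoning segmentation, referring segmentation, and the corresponding detection and localization tasks.

M3D-Seg.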

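Model

The architecture of M3D-LaMed is shown in the figure below. (a) The 3D image encoder is pretrained on image-text data with a cross-modal contrastive learning loss and can be applied directly to image-text retrieval. (b) In M3D-LaMed, a 3D medical image is fed into the pretrained 3D image encoder and an efficient 3D spatial pooling perceiver. The resulting visual tokens are inserted into the LLM, and the output [SEG] token serves as a prompt that drives the segmentation module.

M3D-LaMed model architecture.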
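The cross-modal contrastive pretraining described in (a) can be illustrated with a CLIP-style symmetric loss. The following is a minimal pure-Python sketch, assuming a symmetric InfoNCE objective over a batch of paired image and text embeddings. The function names and the temperature of 0.07 are illustrative assumptions and are not taken from the M3D code.

```python
import math


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def normalize(v):
    """Scale a vector to unit L2 norm."""
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]


def cross_entropy_diagonal(logits):
    """Mean cross-entropy where the correct class for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)  # subtract the max for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def clip_style_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric contrastive loss: pair i of images and texts is the positive."""
    imgs = [normalize(v) for v in image_embs]
    txts = [normalize(v) for v in text_embs]
    # Similarity matrix: rows are images, columns are texts.
    logits = [[dot(i, t) / temperature for t in txts] for i in imgs]
    logits_t = [list(col) for col in zip(*logits)]  # rows are texts
    image_to_text = cross_entropy_diagonal(logits)
    text_to_image = cross_entropy_diagonal(logits_t)
    return 0.5 * (image_to_text + text_to_image)


# Toy embeddings (made up): aligned pairs give a much lower loss than swapped pairs.
aligned = clip_style_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
swapped = clip_style_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
print(aligned < swapped)  # True
```

Minimizing a loss of this kind pulls each image embedding toward its paired text and away from the other texts in the batch, which is why an encoder trained this way can be used for retrieval without further fine-tuning.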

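Experiments

Image-text retrieval

In 3D image-text retrieval, the model matches images and texts from a dataset according to their similarity. This typically involves two tasks: text-to-image retrieval (TR) and image-to-text retrieval (IR).

Because no suitable baseline exists, we applied PMC-CLIP, a representative 2D medical model, to 3D image-text retrieval. We found that, lacking spatial information, it is hardly comparable to the 3D image-text retrieval model.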
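Similarity-based retrieval of this kind is commonly scored with Recall@K. The sketch below is a hedged illustration, assuming cosine similarity between embeddings and assuming that query i is paired with gallery item i. The function names and the toy embeddings are made up for the example and do not come from M3D-Bench.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    num = sum(a * b for a, b in zip(u, v))
    den = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return num / den


def recall_at_k(query_embs, gallery_embs, k):
    """Fraction of queries whose paired gallery item (same index) ranks in the top k."""
    hits = 0
    for qi, query in enumerate(query_embs):
        scores = [cosine(query, g) for g in gallery_embs]
        # Gallery indices sorted from most to least similar.
        ranked = sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)
        if qi in ranked[:k]:
            hits += 1
    return hits / len(query_embs)


# Toy text queries and image gallery (values are illustrative only).
text_embs = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
image_embs = [[1.0, 0.1, 0.0], [0.2, 0.1, 1.0], [0.0, 0.3, 1.0]]

for k in (1, 2, 3):
    print(k, recall_at_k(text_embs, image_embs, k))
```

Swapping the roles of the two embedding lists turns the same function from text-to-image into image-to-text retrieval.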

Report generation

In report generation, the model produces a text report from the information it extracts from a 3D medical image.

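Closed-ended visual question answering

In closed-ended visual question answering, the model is given a closed set of candidate answers, such as A, B, C, and D, and must select the correct one.

We found that, in the medical domain, M3D-LaMed outperforms the general-purpose GPT-4V.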
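Because the answer space is closed, this task can be scored by plain accuracy over the selected option letters. The sketch below is one possible way to do that, assuming model outputs begin with an option letter such as "B", "b. Liver", or "(C)". The helper `extract_choice` and its parsing rule are hypothetical and are not part of M3D-Bench.

```python
import re


def extract_choice(text):
    """Return the leading option letter (A-D) of a model output, or None if absent."""
    match = re.match(r"\s*\(?([A-Da-d])\)?(?:[\s.:,)]|$)", text)
    return match.group(1).upper() if match else None


def closed_ended_accuracy(predictions, answers):
    """Fraction of predictions whose extracted letter equals the gold letter."""
    correct = 0
    for pred, gold in zip(predictions, answers):
        if extract_choice(pred) == gold.upper():
            correct += 1
    return correct / len(answers)


# Made-up model outputs and gold answers.
preds = ["B", "a. Axial", "(C)", "Liver"]
golds = ["B", "A", "D", "B"]
print(closed_ended_accuracy(preds, golds))  # 0.5
```

An output that does not start with an option letter, such as "Liver" above, counts as wrong under this rule. A real evaluation script might instead map free-text answers back to the candidate options.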

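Open-ended visual question answering

In open-ended visual question answering, the model generates a free-form answer without any answer hints or candidates.

We found that, in the medical domain, M3D-LaMed outperforms the general-purpose GPT-4V. Note, however, that GPT-4V currently restricts its answers to medical questions.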

Localization

Localization is crucial in vision-language tasks, especially those that take boxes as input or produce them as output. Output-box tasks, such as referring expression comprehension (REC), aim to locate a target object in an image based on a referring expression. In contrast, input-box tasks, such as referring expression generation (REG), require the model to generate a description of a specific region given an image and a bounding box.
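For output-box tasks, a predicted 3D box is usually compared with the ground-truth box through intersection over union (IoU). The text above does not say which metric was used, so the following is only a sketch of a common choice, assuming axis-aligned boxes given as (x1, y1, z1, x2, y2, z2). The function names are illustrative.

```python
def box_volume(box):
    """Volume of an axis-aligned box (x1, y1, z1, x2, y2, z2); zero if degenerate."""
    x1, y1, z1, x2, y2, z2 = box
    return max(0.0, x2 - x1) * max(0.0, y2 - y1) * max(0.0, z2 - z1)


def iou_3d(box_a, box_b):
    """Intersection over union of two axis-aligned 3D boxes."""
    # The overlap along each axis is clamped at zero when the boxes are disjoint.
    dx = max(0.0, min(box_a[3], box_b[3]) - max(box_a[0], box_b[0]))
    dy = max(0.0, min(box_a[4], box_b[4]) - max(box_a[1], box_b[1]))
    dz = max(0.0, min(box_a[5], box_b[5]) - max(box_a[2], box_b[2]))
    intersection = dx * dy * dz
    union = box_volume(box_a) + box_volume(box_b) - intersection
    return intersection / union if union > 0 else 0.0


predicted = (0, 0, 0, 2, 2, 2)
ground_truth = (1, 1, 1, 3, 3, 3)
print(round(iou_3d(predicted, ground_truth), 4))  # 0.0667
```

A prediction is then often counted as correct when its IoU exceeds a chosen threshold. The threshold is a design choice and is not specified here.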

Segmentation

Segmentation is crucial in 3D medical image analysis because it both recognizes and localizes structures. To handle different kinds of text prompts, segmentation is divided into semantic segmentation and referring expression segmentation. In semantic segmentation, the model generates segmentation masks from semantic labels. Referring expression segmentation requires segmenting the target described by a natural language expression, which demands a degree of understanding and reasoning from the model.
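The segmentation results reported later in this article use the Dice score. As a reminder of how that metric works, here is a minimal pure-Python sketch for binary 3D masks stored as nested lists. The function names, and the convention of returning 1.0 when both masks are empty, are assumptions made for this illustration.

```python
def flatten(mask):
    """Flatten an arbitrarily nested list of 0/1 voxels into a flat list."""
    if isinstance(mask, list):
        flat = []
        for item in mask:
            flat.extend(flatten(item))
        return flat
    return [mask]


def dice_score(pred_mask, target_mask):
    """Dice = 2 * |P ∩ T| / (|P| + |T|) for binary masks of the same shape."""
    pred = flatten(pred_mask)
    target = flatten(target_mask)
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in target if t)
    if total == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return 2.0 * intersection / total


# Two tiny 2x2x2 volumes: each has two foreground voxels, one of them shared.
pred = [[[1, 1], [0, 0]], [[0, 0], [0, 0]]]
target = [[[1, 0], [0, 0]], [[1, 0], [0, 0]]]
print(dice_score(pred, target))  # 0.5
```

Dice ranges from 0 (no overlap) to 1 (identical masks), and for multi-class semantic segmentation it is typically computed per class and then averaged.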

Case Study of Out-of-Distribution (OOD) Questions

We tested M3D-LaMed on OOD conversations, in which none of the questions are related to our training data. We found that M3D-LaMed generalizes well and produces reasonable answers to OOD questions rather than gibberish. In each conversation, the avatar and questions on the left come from the user, and the avatar and answers on the right come from M3D-LaMed.

The model has strong reasoning and generalization capabilities.

Our newly trained, smaller M3D-LaMed-Phi-3-4B model performs better, and everyone is welcome to use it: GoodBaiBai88/M3D-LaMed-Phi-3-4B on Hugging Face.

Report generation test results

Closed-ended VQA test results

Semantic segmentation Dice results evaluated on TotalSegmentator

Summary

Our M3D series of studies promotes the use of MLLMs for 3D medical image analysis. Specifically, we build a large-scale 3D multimodal medical dataset, M3D-Data, which contains 120K 3D image-text pairs and 662K instruction-response pairs tailored to 3D medical tasks. We also propose M3D-LaMed, a general model that handles image-text retrieval, report generation, visual question answering, localization, and segmentation. Finally, we introduce a comprehensive benchmark, M3D-Bench, which is carefully designed for eight tasks.

Our approach lays a solid foundation for MLLM to understand the vision and language of 3D medical scenes. Our data, code, and models will facilitate further exploration and application of 3D medical MLLM in future research. We hope that our work can be helpful to researchers in the field, and everyone is welcome to use and discuss it.

The above is the detailed content of Covering text, positioning and segmentation tasks, Zhiyuan and Hong Kong Chinese jointly proposed the first multi-functional 3D medical multi-modal large model. For more information, please follow other related articles on the PHP Chinese website!

7 Powerful AI Prompts Every Project Manager Needs To Master NowMay 08, 2025 am 11:39 AM

7 Powerful AI Prompts Every Project Manager Needs To Master NowMay 08, 2025 am 11:39 AMGenerative AI, exemplified by chatbots like ChatGPT, offers project managers powerful tools to streamline workflows and ensure projects stay on schedule and within budget. However, effective use hinges on crafting the right prompts. Precise, detail

Defining The Ill-Defined Meaning Of Elusive AGI Via The Helpful Assistance Of AI ItselfMay 08, 2025 am 11:37 AM

Defining The Ill-Defined Meaning Of Elusive AGI Via The Helpful Assistance Of AI ItselfMay 08, 2025 am 11:37 AMThe challenge of defining Artificial General Intelligence (AGI) is significant. Claims of AGI progress often lack a clear benchmark, with definitions tailored to fit pre-determined research directions. This article explores a novel approach to defin

IBM Think 2025 Showcases Watsonx.data's Role In Generative AIMay 08, 2025 am 11:32 AM

IBM Think 2025 Showcases Watsonx.data's Role In Generative AIMay 08, 2025 am 11:32 AMIBM Watsonx.data: Streamlining the Enterprise AI Data Stack IBM positions watsonx.data as a pivotal platform for enterprises aiming to accelerate the delivery of precise and scalable generative AI solutions. This is achieved by simplifying the compl

The Rise of the Humanoid Robotic Machines Is Nearing.May 08, 2025 am 11:29 AM

The Rise of the Humanoid Robotic Machines Is Nearing.May 08, 2025 am 11:29 AMThe rapid advancements in robotics, fueled by breakthroughs in AI and materials science, are poised to usher in a new era of humanoid robots. For years, industrial automation has been the primary focus, but the capabilities of robots are rapidly exp

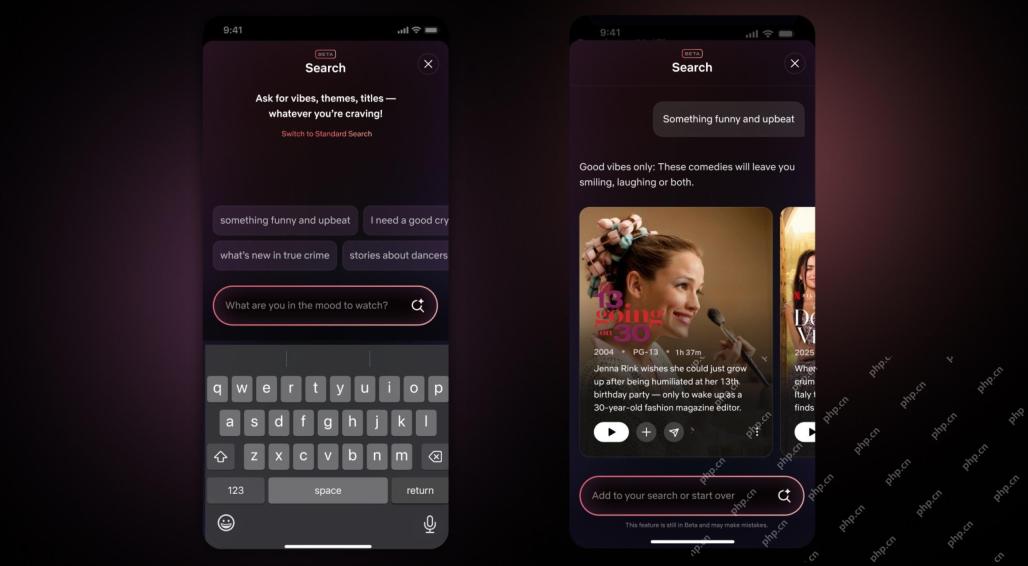

Netflix Revamps Interface — Debuting AI Search Tools And TikTok-Like DesignMay 08, 2025 am 11:25 AM

Netflix Revamps Interface — Debuting AI Search Tools And TikTok-Like DesignMay 08, 2025 am 11:25 AMThe biggest update of Netflix interface in a decade: smarter, more personalized, embracing diverse content Netflix announced its largest revamp of its user interface in a decade, not only a new look, but also adds more information about each show, and introduces smarter AI search tools that can understand vague concepts such as "ambient" and more flexible structures to better demonstrate the company's interest in emerging video games, live events, sports events and other new types of content. To keep up with the trend, the new vertical video component on mobile will make it easier for fans to scroll through trailers and clips, watch the full show or share content with others. This reminds you of the infinite scrolling and very successful short video website Ti

Long Before AGI: Three AI Milestones That Will Challenge YouMay 08, 2025 am 11:24 AM

Long Before AGI: Three AI Milestones That Will Challenge YouMay 08, 2025 am 11:24 AMThe growing discussion of general intelligence (AGI) in artificial intelligence has prompted many to think about what happens when artificial intelligence surpasses human intelligence. Whether this moment is close or far away depends on who you ask, but I don’t think it’s the most important milestone we should focus on. Which earlier AI milestones will affect everyone? What milestones have been achieved? Here are three things I think have happened. Artificial intelligence surpasses human weaknesses In the 2022 movie "Social Dilemma", Tristan Harris of the Center for Humane Technology pointed out that artificial intelligence has surpassed human weaknesses. What does this mean? This means that artificial intelligence has been able to use humans

Venkat Achanta On TransUnion's Platform Transformation And AI AmbitionMay 08, 2025 am 11:23 AM

Venkat Achanta On TransUnion's Platform Transformation And AI AmbitionMay 08, 2025 am 11:23 AMTransUnion's CTO, Ranganath Achanta, spearheaded a significant technological transformation since joining the company following its Neustar acquisition in late 2021. His leadership of over 7,000 associates across various departments has focused on u

When Trust In AI Leaps Up, Productivity FollowsMay 08, 2025 am 11:11 AM

When Trust In AI Leaps Up, Productivity FollowsMay 08, 2025 am 11:11 AMBuilding trust is paramount for successful AI adoption in business. This is especially true given the human element within business processes. Employees, like anyone else, harbor concerns about AI and its implementation. Deloitte researchers are sc

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SublimeText3 Linux new version

SublimeText3 Linux latest version

Dreamweaver Mac version

Visual web development tools

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.