MIT's latest masterpiece: using GPT-3.5 to solve the problem of time series anomaly detection


Paper title: Large language models can be zero-shot anomaly detectors for time series?

Download address: https://arxiv.org/pdf/2405.14755v1

1. Overall introduction

This article, from MIT, performs time series anomaly detection with LLMs (such as GPT-3.5-turbo and Mistral). The core lies in the design of the pipeline, which is divided into two main parts:

Time series data processing: the original time series is converted into input the LLM can understand, through discretization and related steps;

LLM-based anomaly detection pipelines: two prompt-based pipelines are designed. The first is a prompting method that asks the LLM directly where the anomalies are, and the model returns the indices of the anomalous positions; the second is a forecasting-based method in which the LLM forecasts the time series and anomalies are located from the difference between the predicted and actual values.


2. Time series data processing

To adapt time series to LLM input, the article converts the time series into a sequence of digits, and these digits serve as the LLM input. The key is to retain as much of the original time series information as possible while keeping the input as short as possible.

First, the minimum value is subtracted from the entire original time series so that no negative values remain, since a negative sign would occupy an extra token. The decimal point of every value is then shifted to the right by the same number of places, and each value is kept to a fixed number of digits (for example, 3 decimal places). Because GPT limits the maximum input length, the paper uses a sliding-window strategy that splits the original sequence into overlapping subsequences, which are then fed to the LLM.
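The preprocessing steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' code: the function names `scale_and_quantize` and `rolling_windows` and the `window_size`/`step` parameters are hypothetical, and `decimals=3` simply mirrors the 3-digit example in the text.

```python
import numpy as np

# Hypothetical helpers: names and parameters are illustrative assumptions.


def scale_and_quantize(values, decimals=3):
    """Shift the series so its minimum is 0 (no '-' signs to tokenize), then move
    the decimal point `decimals` places to the right and round to integers."""
    x = np.asarray(values, dtype=float)
    shifted = x - x.min()
    return np.rint(shifted * 10**decimals).astype(int)


def rolling_windows(series, window_size, step):
    """Split a sequence into overlapping windows, returned as (start, window) pairs.

    Overlap comes from using step < window_size; if the last regular window
    stops short of the end, one extra window aligned to the end is added."""
    n = len(series)
    if n <= window_size:
        return [(0, series)]
    starts = list(range(0, n - window_size + 1, step))
    if starts[-1] + window_size < n:
        starts.append(n - window_size)
    return [(s, series[s:s + window_size]) for s in starts]
```

Choosing `step` smaller than `window_size` gives the overlapping subsequences described above, so most time steps are seen by more than one window; the extra end-aligned window keeps the tail of the series from being dropped.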

Because LLM tokenizers differ, a number may be split into tokens inconsistently. To prevent this, a space is inserted between the digits of each number in the text, forcing the digits to be separated. Later experiments also showed that adding spaces works better than not adding them. The following example shows the processing result:

[Figure: example of a processed time series]
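One possible way to render the quantized integers as text with a space between digits, together with the inverse needed to read numbers back from a model reply, is sketched below. The comma separator and the helper names `encode_values`/`decode_values` are assumptions for illustration; the exact format used in the paper may differ.

```python
def encode_values(values, sep=","):
    """Render integers as text with a space between every digit.

    The separator between values is an assumption (',' here)."""
    return sep.join(" ".join(str(v)) for v in values)


def decode_values(text, sep=","):
    """Inverse of encode_values: remove the spaces and parse each field as an int.

    Fields that are not pure digits (e.g. a truncated model reply) are skipped."""
    out = []
    for field in text.split(sep):
        digits = field.replace(" ", "").strip()
        if digits.isdecimal():
            out.append(int(digits))
    return out
```

For example, `encode_values([0, 750, 1500])` produces the string `0,7 5 0,1 5 0 0`, so each digit can be tokenized on its own rather than as part of a multi-digit chunk.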

Different data processing methods produce different results on different LLMs, as shown in the figure below.

[Figure: results of different data processing methods on different LLMs]

3. Anomaly detection pipelines

The article proposes two LLM-based anomaly detection pipelines. The first, PROMPTER, turns the anomaly detection problem into a prompt, feeds it to the LLM, and lets the model give the answer directly; the second, DETECTOR, has the LLM forecast the time series and then identifies anomalous points from the difference between the forecasts and the actual values.


PROMPTER: The following table shows how the prompt was iterated in the article. Starting from the simplest prompt, the authors repeatedly identified problems in the answers returned by the LLM and refined the prompt accordingly; after five iterations they arrived at the final prompt. With this prompt, the model directly outputs the indices of the anomalous positions.

[Table: the five prompt iterations used to build PROMPTER]
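For PROMPTER, the LLM's free-text answer has to be turned back into positions in the original series. The sketch below assumes a made-up prompt template (`PROMPT_TEMPLATE` is hypothetical and is not the paper's final prompt), extracts the integers in a reply as window-local indices, and shifts them by each window's start offset. The parser is deliberately naive: a reply such as "there are 2 anomalies" would also yield index 2, which is the kind of output problem that motivates iterating on the prompt.

```python
import re

# HYPOTHETICAL prompt for illustration only; it is NOT the paper's final prompt.
PROMPT_TEMPLATE = (
    "Below is a sequence of values separated by commas.\n"
    "List only the indices of anomalous values, separated by commas.\n"
    "Sequence: {sequence}\n"
    "Answer:"
)


def build_prompt(encoded_window: str) -> str:
    """Fill the (hypothetical) template with one encoded window."""
    return PROMPT_TEMPLATE.format(sequence=encoded_window)


def parse_indices(reply: str, window_len: int) -> list[int]:
    """Extract window-local indices from a free-text reply.

    Naive on purpose: every run of ASCII digits is read as an index,
    and indices outside the window are discarded."""
    found = {int(tok) for tok in re.findall(r"[0-9]+", reply)}
    return sorted(i for i in found if i < window_len)


def merge_window_indices(per_window: list[tuple[int, list[int]]]) -> list[int]:
    """Shift local indices by each window's start offset and take the union."""
    return sorted({start + i for start, idxs in per_window for i in idxs})
```

Taking the union across windows is the simplest merge rule; requiring an index to be flagged by several overlapping windows would be a stricter alternative.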

DETECTOR: A large body of prior work already uses LLMs for time series forecasting. The time series processed as described above can be fed directly to the LLM to generate forecasts. The multiple forecasts produced for the same time step by different windows are combined by taking their median, and the difference between the forecast and the actual value is then used as the basis for anomaly detection.
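The DETECTOR post-processing can be sketched as: collect every forecast made for a time step by the overlapping windows, take their median, and score each step by its absolute forecast error. The function names are hypothetical, and the mean + k * std threshold in `flag_anomalies` is an illustrative assumption; the text above says only that the prediction error is the basis for detection, not how it is thresholded.

```python
import numpy as np


def aggregate_forecasts(n, window_forecasts):
    """Median of all forecasts that cover each time step.

    window_forecasts: list of (start, predictions), where predictions[k] is the
    forecast for time step start + k. Steps with no forecast become NaN."""
    buckets = [[] for _ in range(n)]
    for start, preds in window_forecasts:
        for k, p in enumerate(preds):
            t = start + k
            if 0 <= t < n:
                buckets[t].append(p)
    return np.array([np.median(b) if b else np.nan for b in buckets])


def flag_anomalies(actual, predicted, k=3.0):
    """Absolute error per step; flag steps whose error exceeds mean + k * std.

    The mean + k * std rule is an assumption, not the paper's scoring method."""
    err = np.abs(np.asarray(actual, dtype=float) - np.asarray(predicted, dtype=float))
    valid = ~np.isnan(err)
    threshold = err[valid].mean() + k * err[valid].std()
    return np.flatnonzero(valid & (err > threshold)), err
```

Using the median rather than the mean keeps one wildly wrong forecast from a single window from dominating the combined prediction for that step.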

4. Experimental results

Through experimental comparison, the article finds that the LLM-based anomaly detection methods outperform Transformer-based anomaly detection models by 12.5%. However, AER (AER: Auto-Encoder with Regression for Time Series Anomaly Detection), the most effective deep-learning-based anomaly detection method, is still 30% better than the LLM-based methods. In addition, the DETECTOR pipeline performs better than the PROMPTER pipeline.


The article also visualizes the anomaly detection process of the LLM, as shown below.

[Figure: visualization of the LLM anomaly detection process]

The above is the detailed content of MIT's latest masterpiece: using GPT-3.5 to solve the problem of time series anomaly detection. For more information, please follow other related articles on the PHP Chinese website!

7 Powerful AI Prompts Every Project Manager Needs To Master NowMay 08, 2025 am 11:39 AM

7 Powerful AI Prompts Every Project Manager Needs To Master NowMay 08, 2025 am 11:39 AMGenerative AI, exemplified by chatbots like ChatGPT, offers project managers powerful tools to streamline workflows and ensure projects stay on schedule and within budget. However, effective use hinges on crafting the right prompts. Precise, detail

Defining The Ill-Defined Meaning Of Elusive AGI Via The Helpful Assistance Of AI ItselfMay 08, 2025 am 11:37 AM

Defining The Ill-Defined Meaning Of Elusive AGI Via The Helpful Assistance Of AI ItselfMay 08, 2025 am 11:37 AMThe challenge of defining Artificial General Intelligence (AGI) is significant. Claims of AGI progress often lack a clear benchmark, with definitions tailored to fit pre-determined research directions. This article explores a novel approach to defin

IBM Think 2025 Showcases Watsonx.data's Role In Generative AIMay 08, 2025 am 11:32 AM

IBM Think 2025 Showcases Watsonx.data's Role In Generative AIMay 08, 2025 am 11:32 AMIBM Watsonx.data: Streamlining the Enterprise AI Data Stack IBM positions watsonx.data as a pivotal platform for enterprises aiming to accelerate the delivery of precise and scalable generative AI solutions. This is achieved by simplifying the compl

The Rise of the Humanoid Robotic Machines Is Nearing.May 08, 2025 am 11:29 AM

The Rise of the Humanoid Robotic Machines Is Nearing.May 08, 2025 am 11:29 AMThe rapid advancements in robotics, fueled by breakthroughs in AI and materials science, are poised to usher in a new era of humanoid robots. For years, industrial automation has been the primary focus, but the capabilities of robots are rapidly exp

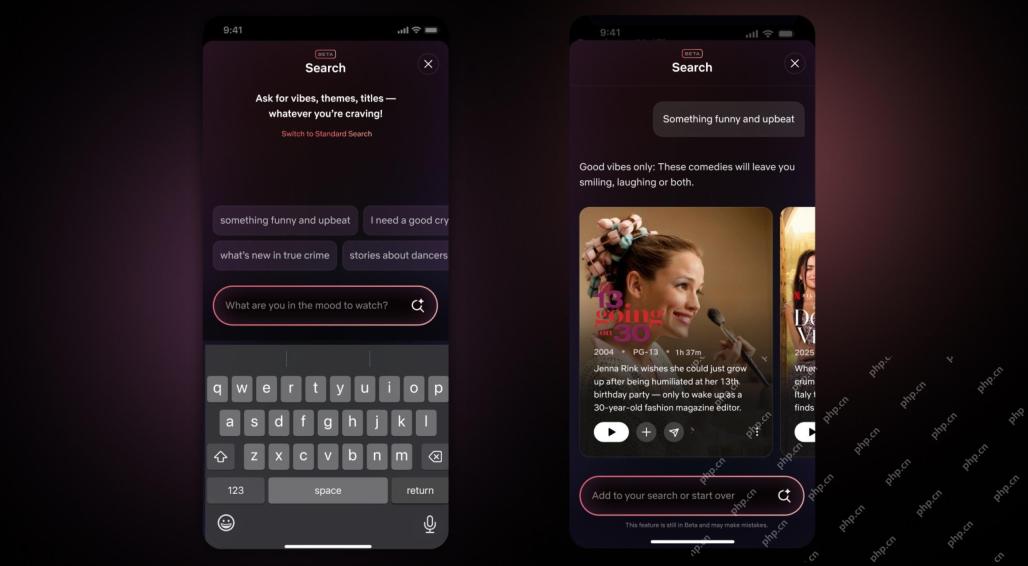

Netflix Revamps Interface — Debuting AI Search Tools And TikTok-Like DesignMay 08, 2025 am 11:25 AM

Netflix Revamps Interface — Debuting AI Search Tools And TikTok-Like DesignMay 08, 2025 am 11:25 AMThe biggest update of Netflix interface in a decade: smarter, more personalized, embracing diverse content Netflix announced its largest revamp of its user interface in a decade, not only a new look, but also adds more information about each show, and introduces smarter AI search tools that can understand vague concepts such as "ambient" and more flexible structures to better demonstrate the company's interest in emerging video games, live events, sports events and other new types of content. To keep up with the trend, the new vertical video component on mobile will make it easier for fans to scroll through trailers and clips, watch the full show or share content with others. This reminds you of the infinite scrolling and very successful short video website Ti

Long Before AGI: Three AI Milestones That Will Challenge YouMay 08, 2025 am 11:24 AM

Long Before AGI: Three AI Milestones That Will Challenge YouMay 08, 2025 am 11:24 AMThe growing discussion of general intelligence (AGI) in artificial intelligence has prompted many to think about what happens when artificial intelligence surpasses human intelligence. Whether this moment is close or far away depends on who you ask, but I don’t think it’s the most important milestone we should focus on. Which earlier AI milestones will affect everyone? What milestones have been achieved? Here are three things I think have happened. Artificial intelligence surpasses human weaknesses In the 2022 movie "Social Dilemma", Tristan Harris of the Center for Humane Technology pointed out that artificial intelligence has surpassed human weaknesses. What does this mean? This means that artificial intelligence has been able to use humans

Venkat Achanta On TransUnion's Platform Transformation And AI AmbitionMay 08, 2025 am 11:23 AM

Venkat Achanta On TransUnion's Platform Transformation And AI AmbitionMay 08, 2025 am 11:23 AMTransUnion's CTO, Ranganath Achanta, spearheaded a significant technological transformation since joining the company following its Neustar acquisition in late 2021. His leadership of over 7,000 associates across various departments has focused on u

When Trust In AI Leaps Up, Productivity FollowsMay 08, 2025 am 11:11 AM

When Trust In AI Leaps Up, Productivity FollowsMay 08, 2025 am 11:11 AMBuilding trust is paramount for successful AI adoption in business. This is especially true given the human element within business processes. Employees, like anyone else, harbor concerns about AI and its implementation. Deloitte researchers are sc

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

PhpStorm Mac version

The latest (2018.2.1) professional PHP integrated development tool

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

Notepad++7.3.1

Easy-to-use and free code editor