Front-end SEO—detailed explanation

When we type keywords into a search box and click search, we get a list of results. Behind that simple interaction, the search engine does a great deal of work.

A search engine site such as Baidu keeps a very large database in the background. It stores a huge number of keywords, and each keyword maps to many URLs. These URLs are downloaded and collected bit by bit from the vast Internet by Baidu's programs, which are called "search engine spiders" or "web crawlers". These hard-working "spiders" crawl the Internet every day, following one link to the next, downloading the content, analyzing and refining it, and extracting the keywords. If the "spider" decides that the content is not yet in the database and is useful to users, it is stored in the database. If, on the other hand, the "spider" decides it is spam or duplicate information, it discards it and keeps crawling for the latest useful information to save for users to search. When a user searches, the URLs related to the keyword are retrieved and shown to the visitor.

One keyword corresponds to multiple URLs, so there is a ranking problem: the URL that best matches the keyword is ranked first. While a "spider" crawls page content and extracts keywords, there is one more question: can the "spider" understand the page? If the site content is Flash or JS, the "spider" cannot understand it and gets confused, so even well-chosen keywords are useless. Conversely, if the site content is written in the spider's own language, the "spider" can understand it, and that language is SEO.

Full name: Search Engine Optimization. SEO has existed for as long as there have been search engines.

Purpose: optimization done to increase the number of a site's pages that appear in a search engine's natural (organic) results and to improve their ranking. In short, we hope that search engines such as Baidu will index more pages of our carefully built website, and that the website will rank near the top when people search.

Categories: white hat SEO and black hat SEO. White hat SEO improves and standardizes website design, making the site friendlier to both search engines and users, so that the site earns reasonable traffic from search engines. Search engines encourage and support it. Black hat SEO exploits and amplifies flaws in search engine policies to gain more visits. Most of this behavior deceives search engines, and search engine companies generally do not support or encourage it. This article focuses on white hat SEO, so what can white hat SEO do?

1. Carefully set the title, keywords, and description of the website to reflect the positioning of the website and let search engines understand what the website does;

2. Optimize website content: match the content to the keywords and increase keyword density;

3. Set up the robots.txt file properly on the website;

4. Generate a search engine friendly site map;

5. Add external links and promote the site on other websites.
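As a rough illustration of items 3 and 4, the sketch below builds a minimal robots.txt and sitemap.xml using only Python's standard library. The function names, the `/admin/` path, and the `example.com` URLs are placeholders invented for this example; a real site would choose its own rules and URL list.

```python
from xml.sax.saxutils import escape


def build_robots_txt(disallowed_paths, sitemap_url):
    """Return robots.txt text that admits every crawler except on the listed paths."""
    lines = ["User-agent: *"]
    if disallowed_paths:
        lines += [f"Disallow: {path}" for path in disallowed_paths]
    else:
        lines.append("Disallow:")  # an empty Disallow means nothing is blocked
    lines.append(f"Sitemap: {sitemap_url}")
    return "\n".join(lines) + "\n"


def build_sitemap_xml(page_urls):
    """Return a minimal sitemap.xml document listing the given page URLs."""
    entries = "".join(
        f"  <url><loc>{escape(url)}</loc></url>\n" for url in page_urls
    )
    return (
        '<?xml version="1.0" encoding="UTF-8"?>\n'
        '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">\n'
        f"{entries}"
        "</urlset>\n"
    )


if __name__ == "__main__":
    # Hypothetical site: block the admin area, advertise the sitemap.
    print(build_robots_txt(["/admin/"], "https://example.com/sitemap.xml"))
    print(build_sitemap_xml(["https://example.com/", "https://example.com/fruit"]))
```

Both files are plain text served from the site root, so generating them from the same list of pages that drives the site's navigation keeps them from drifting out of date.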

Through the website's structural layout design and web page code optimization, the front-end page can be understood by both browser users and "spiders".

Generally speaking, the fewer levels a website's structure has, the easier it is for "spiders" to crawl and the more likely its pages are to be indexed. For small and medium-sized websites, once the directory structure goes deeper than three levels, "spiders" become unwilling to crawl further down: "What if I get lost in the dark?" In addition, according to relevant surveys, a visitor who has not found the information they need after three clicks is likely to leave. A three-level directory structure is therefore also a user experience requirement. To achieve it, we need to do the following:

1. Control the number of homepage links

The homepage is the page with the highest weight on the website. If the homepage has too few links, there is no "bridge", so the "spider" cannot crawl down to the inner pages, which directly reduces the number of pages that get indexed. However, the homepage should not have too many links either. With too many links, few of them are substantive, which hurts the user experience, dilutes the weight of the homepage, and leads to poor indexing results.

Therefore, for small and medium-sized enterprise websites, it is recommended that the homepage have no more than 100 links. These can include page navigation, bottom navigation, anchor text links, and so on. Note that the links should be built on a good user experience and on guiding users to the information they need.
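One way to keep an eye on the link budget described above is to count the `<a href>` elements in the rendered homepage. The following is a minimal sketch using Python's built-in `html.parser`; the class and function names are made up for this example, and the limit of 100 is simply the figure recommended in the text.

```python
from html.parser import HTMLParser

MAX_HOMEPAGE_LINKS = 100  # the limit recommended in the text above


class LinkCounter(HTMLParser):
    """Collect the href of every <a> tag that has one."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def count_links(html):
    """Return how many <a> tags with an href appear in the HTML string."""
    parser = LinkCounter()
    parser.feed(html)
    parser.close()
    return len(parser.hrefs)


def within_link_budget(html, limit=MAX_HOMEPAGE_LINKS):
    """True when the page has at most `limit` links."""
    return count_links(html) <= limit


if __name__ == "__main__":
    sample = '<nav><a href="/fruit">Fruit</a> <a href="/about">About</a></nav>'
    print(count_links(sample), within_link_budget(sample))
```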

2. Flatten the directory hierarchy, and try to let the "spider" reach any internal page of the website within 3 jumps. An example of a flat directory structure: "Plant" --> "Fruit" --> "Apple", "Orange", "Banana". Bananas can be found after passing through three levels.
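The "within 3 jumps" rule can be checked with a breadth-first search over the site's internal links. The sketch below assumes the link graph is already available as a dictionary that maps each page to the pages it links to; the paths, including the deliberately too-deep `/recipes` page, are hypothetical.

```python
from collections import deque


def click_depths(links, home):
    """Breadth-first search: fewest clicks needed to reach each page from `home`."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def pages_too_deep(links, home, max_clicks=3):
    """Return the reachable pages that need more than `max_clicks` clicks."""
    depths = click_depths(links, home)
    return sorted(page for page, depth in depths.items() if depth > max_clicks)


if __name__ == "__main__":
    site = {
        "/": ["/plant"],
        "/plant": ["/plant/fruit"],
        "/plant/fruit": ["/plant/fruit/apple", "/plant/fruit/banana"],
        "/plant/fruit/banana": ["/plant/fruit/banana/recipes"],
    }
    print(click_depths(site, "/")["/plant/fruit/banana"])
    print(pages_too_deep(site, "/"))
```

Pages that never appear in the result of `click_depths` are not reachable from the homepage at all, which is worth checking separately.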

3. Navigation optimization

Navigation should be text-based as far as possible. It can be paired with image navigation, but the image code must then be optimized: `<img>` tags must include the "alt" and "title" attributes to tell search engines what the navigation item is, so that users can still see the prompt text even when the image fails to display.

Secondly, breadcrumb navigation should be added to every web page. Benefits: from a user experience perspective, it shows users where they are and where the current page sits within the whole website, helps them quickly grasp how the site is organized, gives them a better sense of location, and provides a way back to each level, which makes the site easier to operate. For "spiders", it makes the website structure clear and adds a large number of internal links, which makes crawling easier and reduces the bounce rate.
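A breadcrumb is just an ordered trail of links ending at the current page. As a minimal sketch, the helper below renders such a trail as HTML; the function name, the `breadcrumb` class name, and the URLs are assumptions made for this example.

```python
from html import escape


def render_breadcrumb(trail):
    """Render (label, url) pairs as a breadcrumb; the last entry is the current page."""
    parts = []
    for index, (label, url) in enumerate(trail):
        is_current = index == len(trail) - 1
        if is_current:
            # The current page is shown as plain text rather than a link.
            parts.append(f"<span>{escape(label)}</span>")
        else:
            parts.append(f'<a href="{escape(url)}">{escape(label)}</a>')
    return '<nav class="breadcrumb">' + " &gt; ".join(parts) + "</nav>"


if __name__ == "__main__":
    print(render_breadcrumb([
        ("Home", "/"),
        ("Fruit", "/fruit"),
        ("Banana", "/fruit/banana"),
    ]))
```

Every ancestor in the trail becomes a plain text link, which is what gives crawlers the extra internal links mentioned above.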

4. Structural layout of the website: details that cannot be ignored

Page header: logo and main navigation, as well as user information.

Main body of the page: the main content on the left, including breadcrumb navigation and the text; popular articles and related articles on the right. Benefits: this retains visitors and keeps them on the site longer. For "spiders", these articles are related links, which strengthens the relevance of the page and can also increase its weight.

Bottom of the page: copyright information and friendly links.

Special note: how to write paging navigation. Recommended form: "Home page 1 2 3 4 5 6 7 8 9 drop-down box", so that the "spider" can jump straight to a page by its number, and the drop-down box lets the user pick a page to jump to directly. The form "Home Page Next Page Last Page" is not recommended. Especially when there are very many pages, the "spider" has to follow "Next Page" many times to reach a given page, which tires it out and makes it likely to give up.
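The recommended paging form can be sketched as a small renderer that emits a "Home" link, a window of up to nine numbered links around the current page, and a drop-down of all pages. The `/list` path and the `page` query parameter are hypothetical, and wrapping the drop-down in a GET form is just one assumption about how the jump could work without scripts.

```python
from html import escape


def page_window(current, total, size=9):
    """Return up to `size` consecutive page numbers that include `current`."""
    start = max(1, min(current - size // 2, total - size + 1))
    end = min(total, start + size - 1)
    return list(range(start, end + 1))


def render_pagination(current, total, path="/list"):
    """Render 'Home 1 2 3 ...' links followed by a drop-down of every page."""

    def url(page):
        return escape(f"{path}?page={page}")

    items = [f'<a href="{url(1)}">Home</a>']
    for page in page_window(current, total):
        if page == current:
            items.append(f"<strong>{page}</strong>")  # current page, not a link
        else:
            items.append(f'<a href="{url(page)}">{page}</a>')
    options = "".join(f"<option>{page}</option>" for page in range(1, total + 1))
    items.append(
        f'<form action="{escape(path)}" method="get">'
        f'<select name="page">{options}</select><button>Go</button></form>'
    )
    return " ".join(items)


if __name__ == "__main__":
    print(page_window(10, 20))
    print(render_pagination(2, 3))
```

The numbered links are ordinary anchors, so a crawler can reach any page in the window in one hop; the drop-down is mainly a convenience for human visitors.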

5. Control the size of the page, reduce HTTP requests, and improve the loading speed of the website.

A page should ideally not exceed 100 KB. If it is too large, it loads slowly. When loading is very slow, the user experience suffers and visitors do not stay, and once a request times out, the "spider" leaves as well.
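A simple check for this rule might measure the size of the HTML document and count the external resources it references, as a rough proxy for the number of HTTP requests. The sketch below reads the "100 KB" guideline as 100 * 1024 bytes and counts only images, external scripts, and stylesheets; both choices are assumptions for illustration, and the names are invented.

```python
from html.parser import HTMLParser

MAX_PAGE_BYTES = 100 * 1024  # the text's 100 KB guideline, read as 100 * 1024 bytes


class ResourceCounter(HTMLParser):
    """Collect URLs of images, external scripts, and stylesheets."""

    def __init__(self):
        super().__init__()
        self.resources = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in ("img", "script") and attrs.get("src"):
            self.resources.append(attrs["src"])
        elif tag == "link" and attrs.get("rel") == "stylesheet" and attrs.get("href"):
            self.resources.append(attrs["href"])


def page_weight(html, limit=MAX_PAGE_BYTES):
    """Report the HTML size in bytes, referenced resources, and the size verdict."""
    counter = ResourceCounter()
    counter.feed(html)
    counter.close()
    size = len(html.encode("utf-8"))
    return {"bytes": size, "requests": len(counter.resources), "ok": size <= limit}


if __name__ == "__main__":
    sample = (
        '<link rel="stylesheet" href="site.css">'
        '<script src="site.js"></script>'
        '<img src="logo.png" alt="Logo">'
    )
    print(page_weight(sample))
```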

1. `<title>` tag: emphasize only the key points. Put important keywords near the front, do not repeat keywords, and try to give every page a different `<title>`.

2. `<meta>` keywords tag: list only a few important keywords for the page, and be careful not to over-stuff them.

3. `<meta>` description tag: the web page description. It should be a high-level summary of the page content. It must not be too long or over-stuffed with keywords, and each page should have a different one.

4. Tags in `<body>`: keep the code semantic. Use the appropriate tag in the appropriate place, so that the right tag does the right job and the page is clear to both source code readers and "spiders". For example, h1-h6 are used for headings.

5. `<a>` tag: for links within the site, add a "title" attribute that describes the link so that visitors and "spiders" know what it is. For external links that point to other websites, add the rel="nofollow" attribute to tell the "spider" not to follow them, because once the "spider" follows an external link, it will not come back.

6. Use the h1 tag for the main title of the text: the "spider" treats it as the most important heading. If you do not like the default h1 style, override it with CSS. Try to use h1 for the main title and h2 for subtitles, and do not use heading tags casually elsewhere.

7. `<br>` line break tag: use it only for line breaks within text content

8. Tables should use the `<caption>` table title tag

9. Images should be described using the "alt" attribute

10. `<strong>` and `<em>` tags: use them when emphasis is needed. The `<strong>` tag is highly valued by search engines; it can highlight keywords and mark important content. The emphasis given by the `<em>` tag is second only to that of `<strong>`.

11. Do not use special symbols such as `&nbsp;` to indent text; set indentation with CSS instead. Likewise, do not use the `&copy;` entity for the copyright symbol. You can type © directly, for example with a Chinese pinyin input method by spelling "banquan" and choosing candidate 5.

12. Make clever use of CSS layout to put the HTML code of important content first. Content that appears first is considered the most important, and the "spider" reads it first when extracting keywords.

13. Do not use JS to output important content, because the "spider" will not recognize it

14. Use iframes as little as possible, because "spiders" generally do not read their content

15. Use display: none with caution: for text content that you do not want to show, set z-index or position it outside the visible area of the browser instead, because search engines filter out content inside display: none.

16. Keep simplifying the code

17. If JS code manipulates the DOM, place it just before the closing `</body>` tag, after the HTML content.
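Several of the rules in this list can be checked mechanically. The sketch below is one possible audit built on Python's `html.parser`: it looks for a `<title>`, a meta description, exactly one `<h1>`, images without "alt", and external links without rel="nofollow". The class name, the message wording, and the `example.com` host are assumptions for this example, and the checks cover only a subset of the list.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class SeoAudit(HTMLParser):
    """Collect a few on-page SEO problems while parsing one HTML document."""

    def __init__(self, site_host):
        super().__init__()
        self.site_host = site_host
        self.has_title = False
        self.has_description = False
        self.h1_count = 0
        self.problems = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self.has_title = True
        elif tag == "meta":
            if attrs.get("name") == "description" and attrs.get("content"):
                self.has_description = True
        elif tag == "h1":
            self.h1_count += 1
        elif tag == "img" and not attrs.get("alt"):
            self.problems.append(f"img without alt: {attrs.get('src')}")
        elif tag == "a":
            href = attrs.get("href") or ""
            host = urlparse(href).netloc  # empty for relative (internal) links
            rel = (attrs.get("rel") or "").split()
            if host and host != self.site_host and "nofollow" not in rel:
                self.problems.append(f"external link without rel=nofollow: {href}")


def audit(html, site_host):
    """Return a list of human-readable problems found in the page."""
    parser = SeoAudit(site_host)
    parser.feed(html)
    parser.close()
    problems = list(parser.problems)
    if not parser.has_title:
        problems.append("missing <title>")
    if not parser.has_description:
        problems.append("missing meta description")
    if parser.h1_count != 1:
        problems.append(f"expected exactly one <h1>, found {parser.h1_count}")
    return problems


if __name__ == "__main__":
    page = '<body><img src="b.png"><a href="https://other.example/">Other</a></body>'
    for problem in audit(page, "example.com"):
        print(problem)
```

A check like this only reports what is present in the static HTML, which matches the article's point that content produced by JS may be invisible to a "spider".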


The above is the detailed content of Front-end SEO—detailed explanation. For more information, please follow other related articles on the PHP Chinese website!

How does performance differ between Linux and Windows for various tasks?May 14, 2025 am 12:03 AM

How does performance differ between Linux and Windows for various tasks?May 14, 2025 am 12:03 AMLinux performs well in servers and development environments, while Windows performs better in desktop and gaming. 1) Linux's file system performs well when dealing with large numbers of small files. 2) Linux performs excellently in high concurrency and high throughput network scenarios. 3) Linux memory management has more advantages in server environments. 4) Linux is efficient when executing command line and script tasks, while Windows performs better on graphical interfaces and multimedia applications.

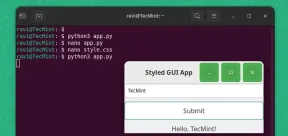

How to Create GUI Applications In Linux Using PyGObjectMay 13, 2025 am 11:09 AM

How to Create GUI Applications In Linux Using PyGObjectMay 13, 2025 am 11:09 AMCreating graphical user interface (GUI) applications is a fantastic way to bring your ideas to life and make your programs more user-friendly. PyGObject is a Python library that allows developers to create GUI applications on Linux desktops using the

How to Install LAMP Stack with PhpMyAdmin in Arch LinuxMay 13, 2025 am 11:01 AM

How to Install LAMP Stack with PhpMyAdmin in Arch LinuxMay 13, 2025 am 11:01 AMArch Linux provides a flexible cutting-edge system environment and is a powerfully suited solution for developing web applications on small non-critical systems because is a completely open source and provides the latest up-to-date releases on kernel

How to Install LEMP (Nginx, PHP, MariaDB) on Arch LinuxMay 13, 2025 am 10:43 AM

How to Install LEMP (Nginx, PHP, MariaDB) on Arch LinuxMay 13, 2025 am 10:43 AMDue to its Rolling Release model which embraces cutting-edge software Arch Linux was not designed and developed to run as a server to provide reliable network services because it requires extra time for maintenance, constant upgrades, and sensible fi

![12 Must-Have Linux Console [Terminal] File Managers](https://img.php.cn/upload/article/001/242/473/174710245395762.png?x-oss-process=image/resize,p_40) 12 Must-Have Linux Console [Terminal] File ManagersMay 13, 2025 am 10:14 AM

12 Must-Have Linux Console [Terminal] File ManagersMay 13, 2025 am 10:14 AMLinux console file managers can be very helpful in day-to-day tasks, when managing files on a local machine, or when connected to a remote one. The visual console representation of the directory helps us quickly perform file/folder operations and sav

qBittorrent: A Powerful Open-Source BitTorrent ClientMay 13, 2025 am 10:12 AM

qBittorrent: A Powerful Open-Source BitTorrent ClientMay 13, 2025 am 10:12 AMqBittorrent is a popular open-source BitTorrent client that allows users to download and share files over the internet. The latest version, qBittorrent 5.0, was released recently and comes packed with new features and improvements. This article will

Setup Nginx Virtual Hosts, phpMyAdmin, and SSL on Arch LinuxMay 13, 2025 am 10:03 AM

Setup Nginx Virtual Hosts, phpMyAdmin, and SSL on Arch LinuxMay 13, 2025 am 10:03 AMThe previous Arch Linux LEMP article just covered basic stuff, from installing network services (Nginx, PHP, MySQL, and PhpMyAdmin) and configuring minimal security required for MySQL server and PhpMyadmin. This topic is strictly related to the forme

Zenity: Building GTK Dialogs in Shell ScriptsMay 13, 2025 am 09:38 AM

Zenity: Building GTK Dialogs in Shell ScriptsMay 13, 2025 am 09:38 AMZenity is a tool that allows you to create graphical dialog boxes in Linux using the command line. It uses GTK , a toolkit for creating graphical user interfaces (GUIs), making it easy to add visual elements to your scripts. Zenity can be extremely u

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

SublimeText3 Chinese version

Chinese version, very easy to use

WebStorm Mac version

Useful JavaScript development tools

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver Mac version

Visual web development tools