
How to apply NLP large models to time series? A summary of the five categories of methods!

- PHPz (forwarded)

- 2024-02-19 23:50:03

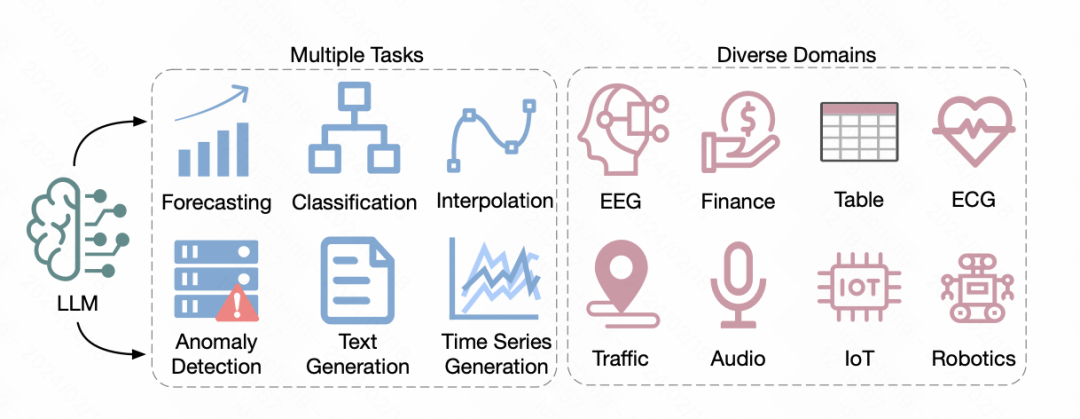

Recently, researchers at the University of California published a survey on applying pre-trained large language models from the field of natural language processing to time series forecasting. The survey groups the existing approaches into five categories of methods. Below, we briefly introduce each of these five categories.


Paper title: Large Language Models for Time Series: A Survey

Download address: https://arxiv.org/pdf/2402.01801.pdf


1. Prompt-based method

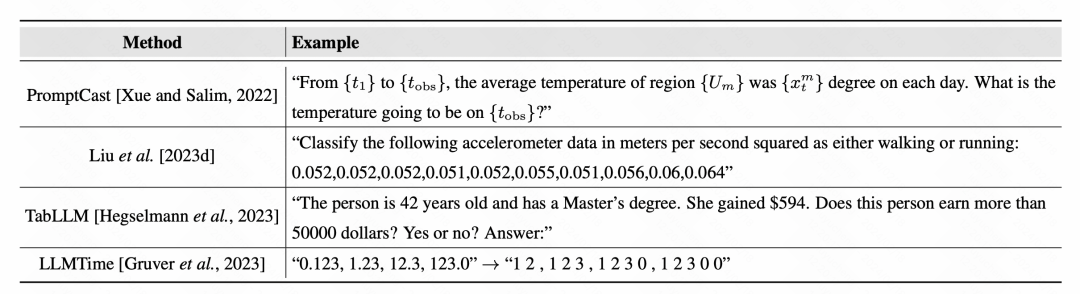

Prompt-based methods let the model produce forecasts for time series data directly. In earlier prompt-based methods, the basic idea was to prepare a prompt text in advance, fill it with time series data, and let the model generate the prediction results. For example, a text describing the time series task is constructed, the time series values are filled in, and the model directly outputs the prediction.


When time series are processed this way, the numbers are treated as part of the text, so how numbers are tokenized has also attracted much attention. Some methods insert spaces between digits so that each digit is tokenized separately, which avoids the tokenizer's vocabulary splitting numbers in inconsistent ways.
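The prompt filling and digit spacing described above can be sketched as follows. This is a minimal illustration, not the format used by any particular paper: the helper names `format_value` and `build_prompt`, the template wording, and the fixed number of decimals are all assumptions.

```python
def format_value(value: float, decimals: int = 1) -> str:
    """Render a number with a space between every character,
    so that a tokenizer sees each digit as its own piece."""
    return " ".join(f"{value:.{decimals}f}")


def build_prompt(history: list[float], horizon: int) -> str:
    """Fill a (hypothetical) text template with the observed values."""
    series = " , ".join(format_value(v) for v in history)
    return (
        f"The following is a time series of {len(history)} values: {series} . "
        f"Predict the next {horizon} values."
    )


prompt = build_prompt([12.5, 13.0, 12.8], horizon=2)
print(prompt)
```

The resulting string would be sent to the language model as-is; parsing the generated digits back into numbers is left out of this sketch.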

2. Discretization

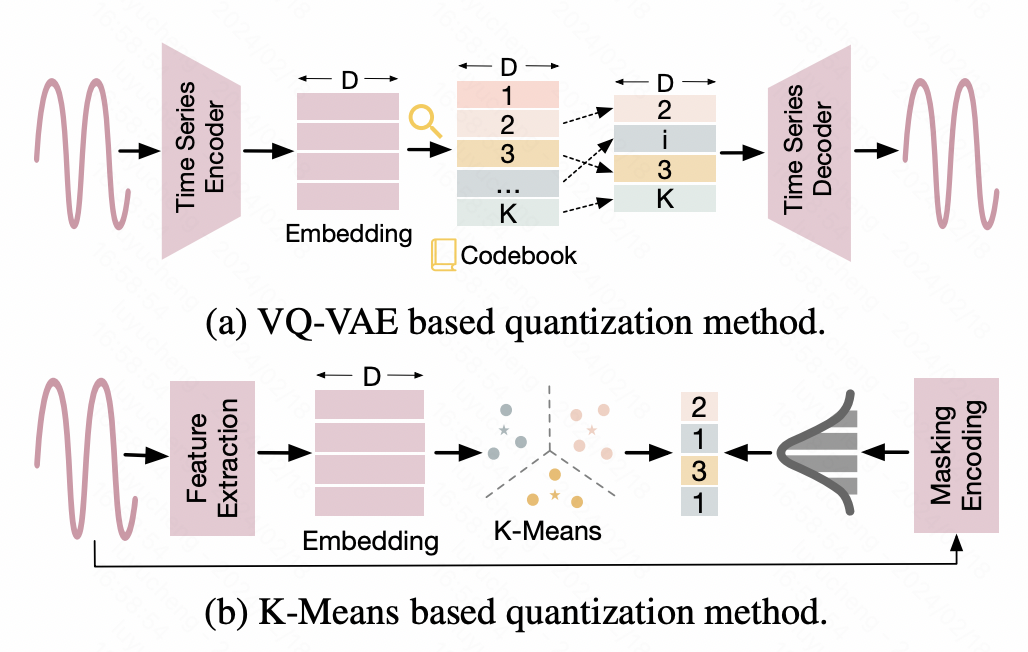

This type of method discretizes the time series, converting continuous values into discrete ids that match the input form of large NLP models. For example, one approach maps time series into discrete representations with the help of the Vector Quantized-Variational AutoEncoder (VQ-VAE). VQ-VAE is an autoencoder structure based on VAE. A VAE maps the original input into a representation vector through the encoder, and then restores the original data through the decoder. VQ-VAE additionally forces the intermediate representation to be discrete by mapping it to entries of a learned codebook (dictionary), and the indices of those entries provide the discrete mapping of the time series data.

Another method is based on K-means, using the centroids produced by K-means to discretize the original time series: each value is replaced by the id of its nearest centroid.

In addition, some work converts time series directly into text. For example, in some financial scenarios, daily price increases, price decreases, and similar information are converted into corresponding letter symbols and used as input to the large NLP model.
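Two of the simpler discretization ideas above, K-means centroids and letter symbols for price movements, can be sketched in plain Python. This is an illustrative sketch under stated assumptions, not the procedure of any specific paper: the function names, the evenly spaced centroid initialisation, and the symbols `U`/`D`/`F` (up/down/flat) are all hypothetical choices. VQ-VAE is not shown because it requires training a neural network.

```python
def kmeans_1d(values, k, iters=50):
    """Tiny 1-D K-means with deterministic, evenly spaced initial centroids."""
    lo, hi = min(values), max(values)
    centroids = [lo + (hi - lo) * (i + 0.5) / k for i in range(k)]
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda i: abs(v - centroids[i]))
            clusters[nearest].append(v)
        # Keep the old centroid if a cluster received no points.
        updated = [
            sum(c) / len(c) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
        if updated == centroids:
            break
        centroids = updated
    return sorted(centroids)


def to_token_ids(values, centroids):
    """Replace each value by the id of its nearest centroid."""
    return [
        min(range(len(centroids)), key=lambda i: abs(v - centroids[i]))
        for v in values
    ]


def movement_symbols(prices):
    """Encode day-over-day moves as letters: U (up), D (down), F (flat)."""
    return "".join(
        "U" if cur > prev else "D" if cur < prev else "F"
        for prev, cur in zip(prices, prices[1:])
    )


series = [1.0, 1.2, 0.9, 5.0, 5.1, 9.8, 10.0]
centroids = kmeans_1d(series, k=3)
token_ids = to_token_ids(series, centroids)
symbols = movement_symbols([10, 11, 11, 9])
print(token_ids, symbols)
```

The token ids can then be treated like word ids in a vocabulary of size `k`, while the symbol string can be passed to the model as ordinary text.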


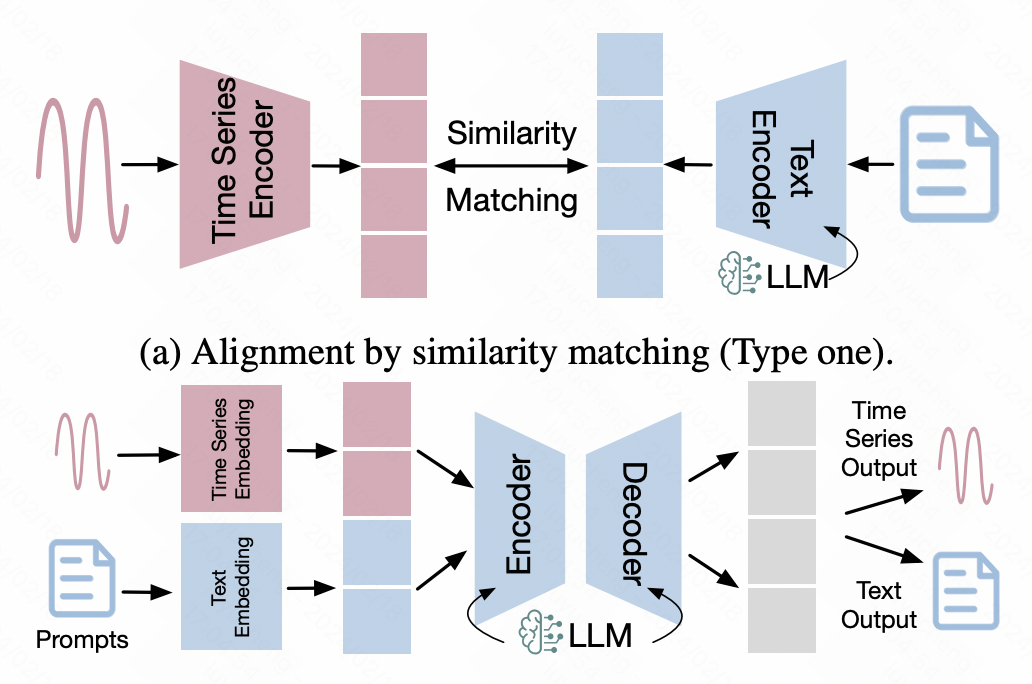

3. Time series-text alignment

This type of method relies on alignment techniques from the multi-modal field to align the representation of the time series to the text space, so that time series data can be fed directly into the large NLP model.

In this type of method, several multi-modal alignment techniques are widely used. The most typical one is multi-modal alignment based on contrastive learning. Similar to CLIP, a time series encoder and a large language model encode the time series and the text, respectively, into representation vectors. Contrastive learning then shortens the distance between positive sample pairs, aligning the representations of time series and text data in the latent space.
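The CLIP-style objective mentioned above can be written down compactly. The sketch below only computes a symmetric contrastive (InfoNCE-style) loss on already computed embeddings, assuming that row `i` of each list forms a positive pair; the function names and the temperature value are assumptions, and the encoders and gradient updates are omitted.

```python
import math


def normalize(vec):
    """Scale a vector to unit length so dot products are cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def cross_entropy_diagonal(logits):
    """Mean cross-entropy where the correct class of row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        peak = max(row)
        log_sum_exp = peak + math.log(sum(math.exp(x - peak) for x in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def clip_style_loss(ts_embs, text_embs, temperature=0.07):
    """Symmetric contrastive loss between time series and text embeddings."""
    ts = [normalize(v) for v in ts_embs]
    tx = [normalize(v) for v in text_embs]
    logits = [
        [sum(a * b for a, b in zip(t, x)) / temperature for x in tx]
        for t in ts
    ]
    transposed = [list(col) for col in zip(*logits)]
    return 0.5 * (cross_entropy_diagonal(logits) + cross_entropy_diagonal(transposed))


ts_embs = [[1.0, 0.0], [0.0, 1.0]]
loss_aligned = clip_style_loss(ts_embs, [[1.0, 0.0], [0.0, 1.0]])
loss_misaligned = clip_style_loss(ts_embs, [[0.0, 1.0], [1.0, 0.0]])
print(loss_aligned, loss_misaligned)
```

Minimizing this loss pulls each time series embedding toward its paired text embedding and away from the other texts in the batch, which is the "shortening the distance between positive pairs" effect described above.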

Another method is fine-tuning on time series data: the NLP large model serves as the backbone, and additional network components are introduced on top of it to adapt to time series data. Among these, parameter-efficient cross-modal fine-tuning methods such as LoRA are relatively common. They freeze most of the backbone's parameters and fine-tune only a small number of them, or introduce a small number of adapter parameters for fine-tuning, to achieve multi-modal alignment.
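To make the "freeze the backbone, train a few extra parameters" idea concrete, here is a minimal sketch of a LoRA-style linear layer in plain Python. The frozen weight `W` is left untouched and a low-rank update `B @ A`, scaled by `alpha / rank`, is added to its output. The class name `LoRALinear`, the initialisation scale, and the toy dimensions are assumptions; a real setup would use a deep learning framework and apply this inside the language model's layers.

```python
import random


def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]


class LoRALinear:
    """Frozen weight W plus a trainable low-rank update (alpha / rank) * B @ A."""

    def __init__(self, weight, rank, alpha=1.0, seed=0):
        self.weight = weight  # frozen backbone weight, shape (out_dim, in_dim)
        self.out_dim, self.in_dim = len(weight), len(weight[0])
        self.rank = rank
        self.scale = alpha / rank
        rng = random.Random(seed)
        # A gets a small random init; B starts at zero so the adapter
        # initially leaves the backbone's output unchanged.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(self.in_dim)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(self.out_dim)]

    def forward(self, x):
        base = matvec(self.weight, x)
        update = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * u for b, u in zip(base, update)]

    def trainable_parameter_count(self):
        return self.rank * self.in_dim + self.out_dim * self.rank


identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
layer = LoRALinear(identity, rank=1)
output = layer.forward([1.0, 2.0, 3.0, 4.0])
print(output, layer.trainable_parameter_count())
```

In this toy case only 8 adapter parameters would be trained against 16 frozen ones; the saving grows quickly as the weight matrices get larger and the rank stays small.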


4. Introducing visual information

This type of method is relatively rare. It usually establishes a connection between time series and visual information, and then brings in the multi-modal capabilities that have been studied in depth for images and text to extract effective features for downstream tasks. For example, ImageBind aligns data from 6 modalities, including time-series-type data, in a unified way, working toward a unified multi-modal large model. Some models in the financial field convert stock prices into chart images, and then use CLIP to align images and text, generating chart-related features for downstream time series tasks.
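The first step of the chart-based approach, turning a price series into an image, can be sketched as a simple rasterization. This is only an assumption about what such a conversion might look like; the function name `series_to_image` and the binary grid format are hypothetical, and the subsequent image encoder (for example CLIP's) is not shown.

```python
def series_to_image(values, height=8):
    """Rasterize a series into a binary grid: one column per time step,
    with row 0 at the top corresponding to the highest value."""
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # avoid division by zero for a constant series
    image = [[0] * len(values) for _ in range(height)]
    for col, v in enumerate(values):
        row = round((hi - v) / span * (height - 1))
        image[row][col] = 1
    return image


image = series_to_image([1.0, 2.0, 3.0], height=3)
for row in image:
    print("".join("#" if pixel else "." for pixel in row))
```

A grid like this can be saved as a picture or fed to a vision model as a single-channel image, after which the image-text alignment described above applies.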

5. Large model tools

This type of method neither modifies the NLP large model nor transforms the time series data to fit the large model. Instead, it directly treats the NLP large model as a tool for solving time series problems. For example, the large model can generate code that performs time series prediction, or it can call open-source APIs to solve time series problems. This type of method leans more toward practical applications.
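One way to picture the "model calls an API" variant is a small dispatcher that executes a tool call produced by the model. Everything in this sketch is hypothetical: the tool names, the JSON call format, and the hard-coded `model_output` string, which stands in for a real language model response.

```python
import json


def naive_forecast(history, horizon):
    """Repeat the last observed value."""
    return [history[-1]] * horizon


def moving_average_forecast(history, horizon, window=3):
    """Repeat the mean of the last `window` observations."""
    recent = history[-window:]
    return [sum(recent) / len(recent)] * horizon


# Registry of forecasting tools the model is allowed to call.
TOOLS = {
    "naive": naive_forecast,
    "moving_average": moving_average_forecast,
}


def run_tool_call(call_json, history):
    """Parse a JSON tool call and run the matching forecasting function."""
    call = json.loads(call_json)
    name = call["tool"]
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](history, **call.get("arguments", {}))


# Stand-in for the text a language model might return when asked to pick a tool.
model_output = '{"tool": "moving_average", "arguments": {"horizon": 2, "window": 2}}'
forecast = run_tool_call(model_output, [10.0, 12.0, 14.0])
print(forecast)
```

The language model never sees the raw numbers as tokens here; it only decides which tool to use and with which arguments, and ordinary code does the forecasting.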

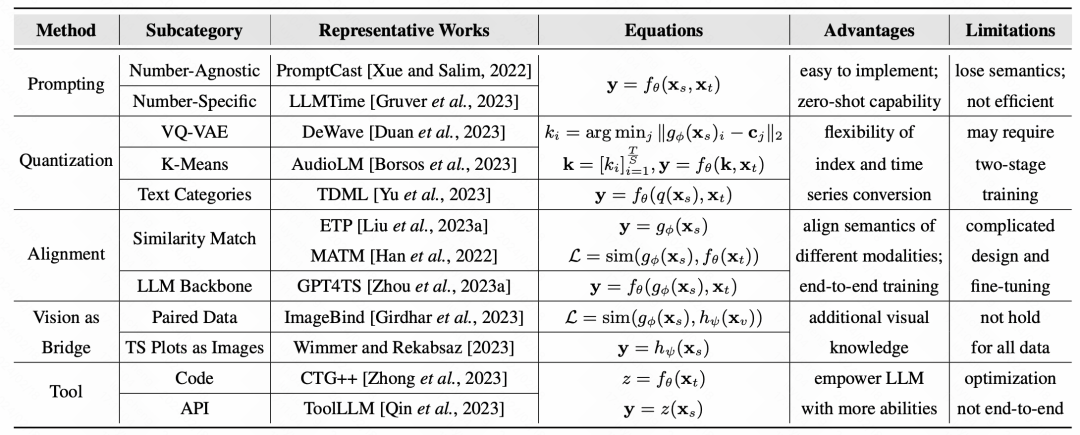

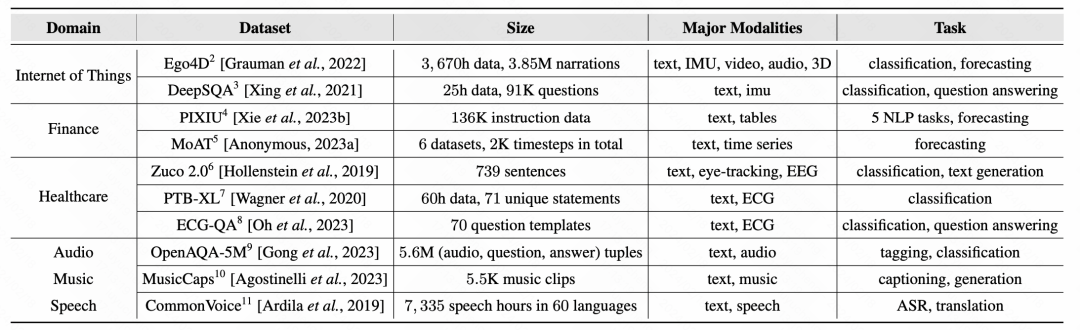

Finally, the survey summarizes the representative works and representative datasets for each category of methods.
