How to deal with non-independent and identically distributed data and common methods

Non-independent and identically distributed (non-IID) data are data whose samples do not satisfy the independent and identically distributed (IID) condition: the samples are not drawn independently from the same distribution. This can hurt the performance of some machine learning algorithms, especially when the distribution is imbalanced or there is correlation between classes.

In machine learning and data science, data are usually assumed to be independent and identically distributed, but real data sets often violate this assumption: samples may be correlated with one another, and they may not follow the same probability distribution. When that happens, the model's performance may suffer. The following strategies can help:

1. Data preprocessing: Cleaning the data, removing outliers, and filling in missing values can help reduce correlation and distribution deviation in the data.
2. Feature selection: Selecting features that are highly correlated with the target variable can reduce the impact of irrelevant features on the model and improve its performance.
3. Feature transformation: Transforming the data, for example with a logarithmic transformation or normalization, can bring the data closer to the independent and identically distributed assumption.
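As a minimal sketch of the feature transformation strategy, the snippet below applies a logarithmic transformation followed by standardization to a skewed feature. The function names and the sample values are illustrative assumptions, not part of the original article, and a transformation like this only reshapes the marginal distribution; it does not by itself remove dependence between samples.

```python
import math
import statistics


def log_transform(values):
    """Compress large values with log(1 + v); assumes every v > -1."""
    return [math.log1p(v) for v in values]


def standardize(values):
    """Rescale values to zero mean and unit (population) standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    return [(v - mean) / std for v in values]


# Hypothetical skewed feature with one very large value.
raw = [1.0, 2.0, 3.0, 5.0, 8.0, 120.0]
scaled = standardize(log_transform(raw))
print([round(v, 3) for v in scaled])
```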

The following are common methods for dealing with non-independent and identically distributed data:

1. Data resampling

Data resampling deals with non-independent and identically distributed data by adjusting the data set itself, for example to rebalance the classes or to reduce the correlation between samples. Commonly used resampling methods include Bootstrap and SMOTE. Bootstrap is sampling with replacement: it generates new data sets by repeatedly drawing random samples from the original one. SMOTE balances the class distribution by generating new synthetic samples from existing minority class samples. These methods can help with sample imbalance and correlation problems and can improve the performance and stability of machine learning algorithms.
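The two resampling ideas can be sketched in plain Python as follows. This is a simplified illustration under stated assumptions: `bootstrap_sample` and `smote_like` are hypothetical helper names, the data are randomly generated, and `smote_like` only mimics the core SMOTE step (interpolating between a minority sample and one of its nearest minority neighbours) rather than reproducing any particular library implementation.

```python
import math
import random


def bootstrap_sample(data, rng):
    """Draw len(data) items from data with replacement."""
    return rng.choices(data, k=len(data))


def smote_like(minority, n_new, k, rng):
    """Create n_new synthetic points, each interpolated between a minority
    sample and one of its k nearest minority-class neighbours."""
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # Nearest neighbours of `base` within the minority class, excluding itself.
        neighbours = sorted(
            (p for p in minority if p is not base),
            key=lambda p: math.dist(base, p),
        )[:k]
        other = rng.choice(neighbours)
        t = rng.random()  # interpolation factor in [0, 1)
        synthetic.append(tuple(b + t * (o - b) for b, o in zip(base, other)))
    return synthetic


rng = random.Random(0)
# Hypothetical imbalanced data set: 20 majority points, 5 minority points.
majority = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(20)]
minority = [(rng.gauss(3, 0.5), rng.gauss(3, 0.5)) for _ in range(5)]

resampled = bootstrap_sample(majority, rng)
balanced_minority = minority + smote_like(minority, n_new=15, k=3, rng=rng)
print(len(resampled), len(balanced_minority))
```

Because each synthetic point lies on the segment between two real minority points, the oversampled class stays inside the region the minority class already occupies.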

2. Distribution adaptation method

The distribution adaptation method adjusts model parameters to fit non-independent and identically distributed data. The adjustment is driven by the distribution of the data itself, so the model can adapt automatically and perform better. Common distribution adaptation methods include transfer learning, domain adaptation, etc.
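One very simple form of adaptation is to align the statistics of a source domain with those of a target domain before training. The sketch below matches the mean and standard deviation of a one-dimensional feature; it is an illustrative assumption about how such alignment might look, not the article's own procedure, and real transfer learning or domain adaptation methods are considerably more involved. The function name and the data are hypothetical.

```python
import math
import statistics


def align_to_target(source, target):
    """Shift and rescale 1-D source values so that their mean and standard
    deviation match those of the target sample (simple moment matching)."""
    s_mean, s_std = statistics.fmean(source), statistics.pstdev(source)
    t_mean, t_std = statistics.fmean(target), statistics.pstdev(target)
    return [(x - s_mean) / s_std * t_std + t_mean for x in source]


# Hypothetical feature measured on different scales in two domains.
source = [1.0, 2.0, 3.0, 4.0, 5.0]
target = [10.0, 14.0, 18.0, 22.0, 26.0]

aligned = align_to_target(source, target)
print([round(v, 6) for v in aligned])
```

A model trained on `aligned` sees inputs on the same scale as the target domain, which is the basic intuition behind feature-alignment approaches.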

3. Multi-task learning method

The multi-task learning method trains a single model on several tasks at the same time and shares model parameters between them. Combining the tasks into one model makes it possible to exploit the correlation between tasks and improve performance. Multi-task learning is often applied to non-independent and identically distributed data because it can pool data sets from different tasks to improve the generalization ability of the model.
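Parameter sharing can be illustrated with a toy model, assuming two linear regression tasks that share one slope but keep their own intercepts. Everything here (the function name, learning rate, epoch count, and data) is a hypothetical choice made for the sketch; practical multi-task models usually share neural network layers rather than a single scalar.

```python
def train_shared_slope(tasks, lr=0.05, epochs=2000):
    """Fit y = w * x + b_t for every task t by gradient descent on the
    pooled mean squared error. The slope w is shared across tasks;
    each task keeps its own intercept b_t."""
    w = 0.0
    biases = [0.0 for _ in tasks]
    n_total = sum(len(xs) for xs, _ in tasks)
    for _ in range(epochs):
        grad_w = 0.0
        grad_b = [0.0 for _ in tasks]
        for t, (xs, ys) in enumerate(tasks):
            for x, y in zip(xs, ys):
                err = w * x + biases[t] - y
                grad_w += 2 * err * x / n_total  # every task updates the shared slope
                grad_b[t] += 2 * err / n_total   # only task t updates its intercept
        w -= lr * grad_w
        biases = [b - lr * g for b, g in zip(biases, grad_b)]
    return w, biases


# Two hypothetical tasks with the same slope (2) but different intercepts (1 and -3).
xs = [0.0, 1.0, 2.0, 3.0]
task_a = (xs, [2 * x + 1 for x in xs])
task_b = (xs, [2 * x - 3 for x in xs])

w, biases = train_shared_slope([task_a, task_b])
print(round(w, 3), [round(b, 3) for b in biases])
```

The shared slope is estimated from the samples of both tasks, which is how pooling related tasks can help when each task alone has little data.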

4. Feature selection method

The feature selection method trains the model on only the most relevant features. Selecting the most relevant features reduces noise and irrelevant information in non-IID data, thereby improving model performance. Feature selection methods include filter methods, wrapper methods, and embedded methods.

5. Ensemble learning method

The ensemble learning method combines multiple models to improve overall performance. Combining different models can reduce the bias and variance of the overall prediction, thereby improving the generalization ability. Ensemble learning methods include Bagging, Boosting, Stacking, etc.
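Of the three, Bagging is the simplest to sketch: train several models on bootstrap samples of the data and let them vote. The example below uses one-dimensional decision stumps as base models; the function names, the toy data, and the choice of 25 models are illustrative assumptions, and Boosting and Stacking are not covered.

```python
import random
from collections import Counter


def fit_stump(sample):
    """Return the threshold t (taken from the sample) that minimises training
    errors for the rule: predict 1 if x >= t, else 0."""
    candidates = sorted({x for x, _ in sample})

    def errors(t):
        return sum((x >= t) != bool(y) for x, y in sample)

    return min(candidates, key=errors)


def bagging_fit(data, n_models, rng):
    """Train one stump per bootstrap sample (drawn with replacement)."""
    return [fit_stump(rng.choices(data, k=len(data))) for _ in range(n_models)]


def bagging_predict(thresholds, x):
    """Majority vote over the predictions of all stumps."""
    votes = Counter(int(x >= t) for t in thresholds)
    return votes.most_common(1)[0][0]


rng = random.Random(42)
# Hypothetical 1-D data set: label 0 for x < 5, label 1 for x >= 5.
data = [(float(x), int(x >= 5)) for x in range(10)]

thresholds = bagging_fit(data, n_models=25, rng=rng)
print(bagging_predict(thresholds, 2.0), bagging_predict(thresholds, 9.0))
```

Each stump sees a slightly different sample, so individual thresholds vary, while the vote across stumps is more stable than any single one.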

The above is the detailed content of How to deal with non-independent and identically distributed data and common methods. For more information, please follow other related articles on the PHP Chinese website!

How You can Register for NVIDIA AI Summit 2024?Apr 16, 2025 am 09:49 AM

How You can Register for NVIDIA AI Summit 2024?Apr 16, 2025 am 09:49 AMThe NVIDIA AI Summit 2024: A Deep Dive into India's AI Revolution Following the Datahack Summit 2024, India gears up for the NVIDIA AI Summit 2024, scheduled for October 23rd-25th at the Jio World Convention Centre in Mumbai. This pivotal event prom

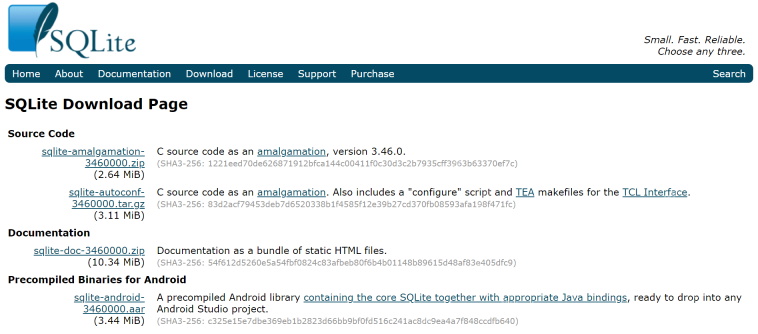

What is SQLite?Apr 16, 2025 am 09:48 AM

What is SQLite?Apr 16, 2025 am 09:48 AMIntroduction Imagine a fast, simple database engine—no configuration needed—that integrates directly into your applications and offers robust SQL support without a server. That's SQLite, widely used in applications and web browsers for its ease of u

Today, I Tried Wordware AI Roast, and It is Hilarious - Analytics VidhyaApr 16, 2025 am 09:37 AM

Today, I Tried Wordware AI Roast, and It is Hilarious - Analytics VidhyaApr 16, 2025 am 09:37 AMGet Roasted by an AI! A Hilarious Dive into Wordware AI YouTube roast videos are hugely popular, but have you ever been roasted by artificial intelligence? I recently experienced the comedic wrath of Wordware AI, and it was a hilariously humbling ex

Top 7 Algorithms for Data Structures in Python - Analytics VidhyaApr 16, 2025 am 09:28 AM

Top 7 Algorithms for Data Structures in Python - Analytics VidhyaApr 16, 2025 am 09:28 AMIntroduction Efficient software development hinges on a strong understanding of algorithms and data structures. Python, known for its ease of use, provides built-in data structures like lists, dictionaries, and sets. However, the true power is unlea

Violin Plots: A Tool for Visualizing Data DistributionsApr 16, 2025 am 09:27 AM

Violin Plots: A Tool for Visualizing Data DistributionsApr 16, 2025 am 09:27 AMViolin Plots: A Powerful Data Visualization Tool This article delves into violin plots, a compelling data visualization technique merging box plots and density plots. We'll explore how these plots unveil data patterns, making them invaluable for dat

Comprehensive Guide to Advanced Python ProgrammingApr 16, 2025 am 09:25 AM

Comprehensive Guide to Advanced Python ProgrammingApr 16, 2025 am 09:25 AMAdvanced Python for Data Scientists: Mastering Classes, Generators, and More This article delves into advanced Python concepts crucial for data scientists, building upon the foundational knowledge of Python's built-in data structures. We'll explore

Guide to Read and Write SQL QueriesApr 16, 2025 am 09:23 AM

Guide to Read and Write SQL QueriesApr 16, 2025 am 09:23 AMSQL Query Interpretation Guide: From Beginner to Mastery Imagine you are solving a puzzle where every SQL query is part of the image, and you are trying to get the complete picture from it. This guide will introduce some practical methods to teach you how to read and write SQL queries. Whether you look at SQL from a beginner's perspective or from a professional programmer's perspective, interpreting SQL queries will help you get answers faster and easier. Start exploring and you will soon realize how SQL usage revolutionizes the way you think about databases. Overview Master the basic structure of SQL query. Interpret various SQL clauses and functions. Analyze and understand complex SQL queries. Efficient debugging and excellent

This Research Paper Won the ICML 2024 Best Paper AwardApr 16, 2025 am 09:21 AM

This Research Paper Won the ICML 2024 Best Paper AwardApr 16, 2025 am 09:21 AMA Groundbreaking Paper on Dataset Diversity in Machine Learning The machine learning (ML) community is abuzz over a recent ICML 2024 Best Paper Award winner that challenges the often-unsubstantiated claims of "diversity" in datasets. Resea

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SublimeText3 Chinese version

Chinese version, very easy to use

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

Zend Studio 13.0.1

Powerful PHP integrated development environment

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

Atom editor mac version download

The most popular open source editor