GPT (Generative Pre-trained Transformer) is a pre-trained language model built on the Transformer architecture, and its main purpose is to generate natural language text. The way GPT follows a prompt is called conditional generation: given some prompt text, GPT generates text related to that prompt.

GPT learns language patterns and semantics through pre-training and then draws on that knowledge when it generates text. During pre-training, GPT is trained on large-scale text data and learns the statistical properties of words, grammatical rules, and semantic relationships. This lets it organize language sensibly, so the text it generates is coherent and readable.

In conditional generation, one or more prompt texts serve as the basis for the generated text. For example, given a question as the prompt, GPT can generate an answer relevant to that question. This approach applies to many natural language processing tasks, such as machine translation, text summarization, and dialogue generation.

1. Basic concepts

Before looking at how the GPT model follows prompts, it helps to understand a few basic concepts.

1. Language model

A language model is a probability model over natural language sequences: given a sequence, the model assigns it a probability. In natural language processing, language models are used in many tasks, including machine translation, speech recognition, and text generation. The main goal of a language model is to predict the probability of the next word or character given the words or characters that came before it. This can be done with statistical methods or with machine learning techniques such as neural networks. Statistical language models are usually n-gram models, which assume that each word depends only on the previous n-1 words. Neural language models, such as recurrent neural networks (RNNs) and Transformer models, can capture longer context, which improves model performance.
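To make the n-gram idea concrete, here is a minimal sketch of a bigram model (n = 2, so each word depends only on the one word before it), assuming simple maximum-likelihood counts with no smoothing. The function names, the `<s>`/`</s>` boundary markers, and the three-sentence corpus are illustrative choices, not part of any particular library.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count bigrams in a list of tokenized sentences (maximum-likelihood, no smoothing)."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = ["<s>"] + sentence + ["</s>"]  # mark sentence start and end
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_prob(counts, prev, word):
    """P(word | prev) = count(prev, word) / count(prev, *)."""
    total = sum(counts[prev].values())
    return counts[prev][word] / total if total else 0.0


def sequence_prob(counts, sentence):
    """Chain rule under the bigram assumption: product of P(w_i | w_{i-1})."""
    tokens = ["<s>"] + sentence + ["</s>"]
    prob = 1.0
    for prev, nxt in zip(tokens, tokens[1:]):
        prob *= next_word_prob(counts, prev, nxt)
    return prob


corpus = [["the", "cat", "sat"], ["the", "dog", "sat"], ["the", "cat", "ran"]]
model = train_bigram(corpus)
print(sequence_prob(model, ["the", "cat", "sat"]))  # 1 * 2/3 * 1/2 * 1, about 0.333
```

Any unseen bigram (for example "cat flew") drives the whole product to zero, which is one reason practical n-gram models add smoothing and why neural models that generalize across contexts perform better.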

2. Pre-training model

A pre-trained model is a model trained without manual labels on large-scale text data. Pre-trained models usually rely on self-supervised learning, which derives the training signal from the context within the text itself to learn language representations. Well-known examples such as BERT, RoBERTa, and GPT have performed well across a wide range of natural language processing tasks.
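Self-supervised learning means the labels come from the text itself. The sketch below shows the next-token variant used by GPT-style models (BERT instead predicts masked-out tokens): every position in raw text yields a training example whose target is simply the token that follows. The function name and the `context_size` window are illustrative assumptions; real pipelines work on subword token IDs rather than whitespace-split words.

```python
def next_token_pairs(tokens, context_size):
    """Turn raw text into (context, target) training examples.

    No human labels are needed: the target at each position is the token
    that follows the context window in the original text.
    """
    pairs = []
    for i in range(1, len(tokens)):
        context = tokens[max(0, i - context_size):i]  # at most context_size preceding tokens
        pairs.append((context, tokens[i]))
    return pairs


text = "language models learn from raw text"
for context, target in next_token_pairs(text.split(), context_size=3):
    print(context, "->", target)
```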

3. Transformer model

The Transformer is a neural network model based on the self-attention mechanism, proposed by researchers at Google in 2017. It achieved strong results on tasks such as machine translation. Its core idea is to use multi-head attention to capture contextual information across the input sequence.
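The building block of multi-head attention is scaled dot-product attention, softmax(QK^T / sqrt(d_k))V. Below is a minimal single-head sketch in plain Python lists, assuming the queries, keys, and values are already given; a real Transformer computes them with learned linear projections, runs several such heads in parallel, and concatenates their outputs. The optional `causal` flag (a parameter introduced here for illustration) blocks each position from attending to later positions, as in a GPT-style decoder.

```python
import math


def softmax(scores):
    """Numerically stable softmax; -inf scores get zero weight."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values, causal=False):
    """softmax(Q K^T / sqrt(d_k)) V for lists of vectors, one vector per position."""
    d_k = len(keys[0])
    outputs = []
    for i, q in enumerate(queries):
        scores = []
        for j, k in enumerate(keys):
            if causal and j > i:
                scores.append(float("-inf"))  # position i may not look ahead
            else:
                scores.append(sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k))
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        outputs.append([sum(w * v[c] for w, v in zip(weights, values))
                        for c in range(len(values[0]))])
    return outputs


x = [[1.0, 0.0], [0.0, 1.0]]  # two toy token vectors, used as Q, K and V (self-attention)
print(scaled_dot_product_attention(x, x, x, causal=True))
```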

2. GPT model

The GPT model is a pre-trained language model proposed by OpenAI in 2018 and built on the Transformer architecture. Its training is divided into two stages. The first stage is self-supervised learning on large-scale text data to learn language representations. The second stage is fine-tuning on specific tasks, such as text generation or sentiment analysis. GPT performs well on text generation tasks and can produce natural, fluent text.

3. Conditional generation

In the GPT model, conditional generation means generating text related to a given prompt. In practice, the prompt is usually a few keywords, phrases, or sentences that guide the model toward text that meets the requirements. Conditional generation underlies common natural language generation tasks such as dialogue generation and article summarization.
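Written as a formula, conditional generation with a prompt x and generated tokens y_1, ..., y_T is usually expressed with the chain rule: the model never predicts the whole output at once, only the next token given the prompt and everything generated so far. The symbols here are introduced for illustration.

$$
P(y_1, \dots, y_T \mid x) = \prod_{t=1}^{T} P\left(y_t \mid x, y_1, \dots, y_{t-1}\right)
$$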

4. How the GPT model follows the prompts

When the GPT model generates text, it uses the input text sequence to predict a probability distribution over the next word, then samples from that distribution to produce the next word. In conditional generation, the prompt text and the text to be generated are concatenated into one complete sequence that serves as the input. Here are two common ways GPT models follow prompts.
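The "predict a distribution, then sample" step can be sketched as follows, assuming the model has already produced a raw score (logit) per candidate word. The `temperature` parameter is a common decoding knob added here for illustration: values below 1 sharpen the distribution toward the top word, values above 1 flatten it. The scores in `logits` are made up.

```python
import math
import random


def sample_next_word(logits, temperature=1.0, rng=random):
    """Turn model scores into a probability distribution and sample one word."""
    words = list(logits)
    scaled = [logits[w] / temperature for w in words]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]
    choice = rng.choices(words, weights=probs, k=1)[0]
    return choice, dict(zip(words, probs))


logits = {"cat": 2.0, "dog": 1.0, "car": -1.0}  # hypothetical scores for one step
word, probs = sample_next_word(logits, temperature=0.8, rng=random.Random(0))
print(word, probs)
```

Passing a seeded `random.Random` makes the sample reproducible, which is convenient for testing.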

1. Prefix matching

Prefix matching is a simple and effective method: the prompt text is placed in front of the text to be generated, forming one complete sequence as the input. During training, the model learns to generate subsequent text from the preceding text; at generation time, it produces text related to the prompt based on the prompt text. The drawback of prefix matching is that the position and length of the prompt must be specified manually, which limits flexibility.
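A minimal sketch of prefix-style generation: the prompt tokens start the sequence, and every generated token is appended so that it becomes part of the next step's input. `toy_model` is a hypothetical stand-in for GPT with a hand-written table; unlike a real model, which attends over the whole sequence, it only looks at the last token. Greedy decoding (always the most likely token) is used instead of sampling so the output is reproducible.

```python
def toy_model(sequence):
    """Hypothetical stand-in for GPT: returns a next-token distribution.

    A real model would condition on the whole sequence; this toy only looks
    at the last token so the example stays small and deterministic.
    """
    table = {
        "capital": {"of": 0.9, "city": 0.1},
        "of": {"france": 0.6, "spain": 0.4},
        "france": {"is": 1.0},
        "is": {"paris": 0.8, "lyon": 0.2},
        "paris": {"<eos>": 1.0},
    }
    return table.get(sequence[-1], {"<eos>": 1.0})


def generate_with_prefix(prompt_tokens, model, max_new_tokens=10):
    sequence = list(prompt_tokens)  # the prompt is the prefix of the input
    start = len(sequence)
    for _ in range(max_new_tokens):
        dist = model(sequence)  # condition on prompt + everything generated so far
        next_token = max(dist, key=dist.get)  # greedy choice
        if next_token == "<eos>":
            break
        sequence.append(next_token)  # the new token is fed back as input
    return sequence[start:]


print(generate_with_prefix(["the", "capital"], toy_model))  # ['of', 'france', 'is', 'paris']
```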

2. Conditional input

Conditional input is a more flexible method: the prompt text is treated as a conditional input and is fed into the model together with the generated text at every time step. During training, the model learns to generate text that meets the requirements given the prompt. At generation time, the content and position of the prompt can be specified freely to produce text related to it. The advantage of conditional input is its flexibility: it can be adapted to specific application scenarios.
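The calling pattern described above can be sketched like this: instead of being merged into the token sequence, the condition is passed as a separate argument alongside the generation history on every step. `toy_conditional_model` is hypothetical and only lists the condition's keywords joined by "and"; it illustrates how the condition is supplied, not how GPT is implemented internally.

```python
def toy_conditional_model(condition, history):
    """Hypothetical stand-in: lists the condition keywords separated by 'and'."""
    remaining = [w for w in condition if w not in history]
    if not remaining:
        return "<eos>"
    if history and history[-1] != "and":
        return "and"
    return remaining[0]


def generate_with_condition(condition, model, max_new_tokens=10):
    history = []
    for _ in range(max_new_tokens):
        token = model(condition, history)  # condition re-supplied at every time step
        if token == "<eos>":
            break
        history.append(token)
    return history


print(generate_with_condition(["sunny", "warm"], toy_conditional_model))  # ['sunny', 'and', 'warm']
```

Because the condition is its own argument, changing it (for example to `["warm", "sunny"]`) changes the output without editing any part of the generated sequence.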

The above is the detailed content of How does the GPT model follow the prompts and guidance?. For more information, please follow other related articles on the PHP Chinese website!

One Prompt Can Bypass Every Major LLM's SafeguardsApr 25, 2025 am 11:16 AM

One Prompt Can Bypass Every Major LLM's SafeguardsApr 25, 2025 am 11:16 AMHiddenLayer's groundbreaking research exposes a critical vulnerability in leading Large Language Models (LLMs). Their findings reveal a universal bypass technique, dubbed "Policy Puppetry," capable of circumventing nearly all major LLMs' s

5 Mistakes Most Businesses Will Make This Year With SustainabilityApr 25, 2025 am 11:15 AM

5 Mistakes Most Businesses Will Make This Year With SustainabilityApr 25, 2025 am 11:15 AMThe push for environmental responsibility and waste reduction is fundamentally altering how businesses operate. This transformation affects product development, manufacturing processes, customer relations, partner selection, and the adoption of new

H20 Chip Ban Jolts China AI Firms, But They've Long Braced For ImpactApr 25, 2025 am 11:12 AM

H20 Chip Ban Jolts China AI Firms, But They've Long Braced For ImpactApr 25, 2025 am 11:12 AMThe recent restrictions on advanced AI hardware highlight the escalating geopolitical competition for AI dominance, exposing China's reliance on foreign semiconductor technology. In 2024, China imported a massive $385 billion worth of semiconductor

If OpenAI Buys Chrome, AI May Rule The Browser WarsApr 25, 2025 am 11:11 AM

If OpenAI Buys Chrome, AI May Rule The Browser WarsApr 25, 2025 am 11:11 AMThe potential forced divestiture of Chrome from Google has ignited intense debate within the tech industry. The prospect of OpenAI acquiring the leading browser, boasting a 65% global market share, raises significant questions about the future of th

How AI Can Solve Retail Media's Growing PainsApr 25, 2025 am 11:10 AM

How AI Can Solve Retail Media's Growing PainsApr 25, 2025 am 11:10 AMRetail media's growth is slowing, despite outpacing overall advertising growth. This maturation phase presents challenges, including ecosystem fragmentation, rising costs, measurement issues, and integration complexities. However, artificial intell

'AI Is Us, And It's More Than Us'Apr 25, 2025 am 11:09 AM

'AI Is Us, And It's More Than Us'Apr 25, 2025 am 11:09 AMAn old radio crackles with static amidst a collection of flickering and inert screens. This precarious pile of electronics, easily destabilized, forms the core of "The E-Waste Land," one of six installations in the immersive exhibition, &qu

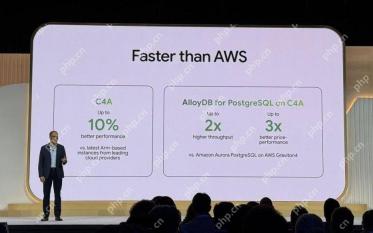

Google Cloud Gets More Serious About Infrastructure At Next 2025Apr 25, 2025 am 11:08 AM

Google Cloud Gets More Serious About Infrastructure At Next 2025Apr 25, 2025 am 11:08 AMGoogle Cloud's Next 2025: A Focus on Infrastructure, Connectivity, and AI Google Cloud's Next 2025 conference showcased numerous advancements, too many to fully detail here. For in-depth analyses of specific announcements, refer to articles by my

Talking Baby AI Meme, Arcana's $5.5 Million AI Movie Pipeline, IR's Secret Backers RevealedApr 25, 2025 am 11:07 AM

Talking Baby AI Meme, Arcana's $5.5 Million AI Movie Pipeline, IR's Secret Backers RevealedApr 25, 2025 am 11:07 AMThis week in AI and XR: A wave of AI-powered creativity is sweeping through media and entertainment, from music generation to film production. Let's dive into the headlines. AI-Generated Content's Growing Impact: Technology consultant Shelly Palme

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

SublimeText3 Mac version

God-level code editing software (SublimeText3)

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft