
In-depth understanding of charts: ChartLlama, a heavyweight open-source chart model from Tencent, Nanyang Technological University and others


Multimodal large models have demonstrated strong performance in image understanding. However, existing multimodal models still leave room for improvement on the chart understanding and generation tasks that come up frequently in everyday work.

Although current state-of-the-art chart understanding models perform well on simple test sets, they lack strong language understanding and output abilities, so they cannot handle more complex question-answering tasks. Meanwhile, multimodal large models built on large language models also perform unsatisfactorily, mainly because they lack chart training samples. These problems have seriously held back the progress of multimodal models on chart understanding and generation tasks.

Recently, Tencent, Nanyang Technological University and Southeast University proposed ChartLlama. The research team built a high-quality chart dataset and trained a multimodal large language model focused on chart understanding and generation. By combining language processing, chart generation and other capabilities, ChartLlama offers a powerful tool for researchers and practitioners.

Paper address: https://arxiv.org/abs/2311.16483

Home page address: https://tingxueronghua.github.io/ChartLlama/

The ChartLlama team designed a diversified data collection strategy: GPT-4 generates data with specified topics, distributions and trends, which keeps the dataset diverse. The team then combined open-source plotting libraries with GPT-4's programming ability to write precise plotting code, producing accurate graphical representations of the data. Finally, GPT-4 was used to describe each chart's content and generate question-answer pairs, yielding rich and varied training samples for every chart so that the trained model can understand charts thoroughly.
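The data-generation idea can be made concrete with a small sketch. ChartLlama asks GPT-4 for the data; here a seeded random generator stands in for GPT-4, and the `ChartSpec` fields, the linear-trend-plus-noise model and the helper names are illustrative assumptions rather than the authors' code. What it shows is that data generated from a known spec has known properties, such as the direction of its trend, which can later be checked or turned into questions.

```python
import random
from dataclasses import dataclass


@dataclass
class ChartSpec:
    """Hypothetical spec mirroring the attributes given to GPT-4."""
    topic: str          # e.g. "monthly smartphone sales"
    labels: list[str]   # x-axis categories
    trend: str          # "increasing", "decreasing" or "flat"
    noise: float        # std-dev of the Gaussian noise added to each point


def make_chart_data(spec: ChartSpec, seed: int = 0) -> dict[str, float]:
    """Stand-in for GPT-4 data generation: a linear trend plus Gaussian noise."""
    rng = random.Random(seed)  # seeded so each sample is reproducible
    slope = {"increasing": 5.0, "decreasing": -5.0, "flat": 0.0}[spec.trend]
    base = 100.0
    return {
        label: round(base + slope * i + rng.gauss(0.0, spec.noise), 1)
        for i, label in enumerate(spec.labels)
    }


def fitted_slope(values: list[float]) -> float:
    """Least-squares slope over x = 0..n-1, used to confirm the requested trend."""
    n = len(values)
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    num = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(values))
    den = sum((x - x_mean) ** 2 for x in range(n))
    return num / den
```

For example, `make_chart_data(ChartSpec("monthly sales", ["Jan", "Feb", "Mar", "Apr"], "increasing", 1.0))` returns four labelled values; the noise is small relative to the slope, so the fitted slope comes out positive and a question such as "Is the trend increasing?" has a reliable answer.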

In chart understanding, traditional models can only handle simple questions, such as reading off values, and cannot answer more complex ones. They struggle to follow long instructions and often make mistakes on questions that involve mathematical operations. ChartLlama effectively avoids these problems. A detailed comparison follows:

Beyond the traditional tasks, the research team also defined several new tasks, three of which involve chart generation. The paper gives examples:

Examples of chart reconstruction and chart editing from a given chart and instructions


Example of generating a chart from instructions and raw data

ChartLlama performs well on a range of benchmark datasets, reaching state-of-the-art results while requiring less training data. Its flexible data generation and collection approach greatly expands the chart types and task types covered in chart understanding and generation, advancing the field.

Method Overview

ChartLlama uses a flexible data collection method that leverages GPT-4's strong language and programming abilities to create a rich multimodal chart dataset.

ChartLlama’s data collection consists of three main phases:

- Chart data generation: ChartLlama not only collects data from traditional sources but also uses GPT-4 to generate synthetic data. By supplying specific attributes such as topic, distribution and trend, GPT-4 is guided to produce diverse and balanced chart data. Because the characteristics of the generated data are known in advance, instruction data can be constructed more flexibly and in more varied forms.

- Chart generation: Next, GPT-4's programming ability is used to write plotting scripts with open-source libraries (such as Matplotlib), based on the generated data and the libraries' function documentation; running these scripts produces a series of carefully rendered charts. Because chart rendering relies entirely on open-source tools, the method can generate many more chart types for training. Existing datasets such as ChartQA support only three chart types, whereas the dataset built for ChartLlama covers 10 chart types and can be extended further.

- Instruction data generation: Beyond rendering charts, ChartLlama uses GPT-4 to describe chart content and construct varied question-answer data, so that the trained model can fully understand the charts. The resulting instruction-tuning corpus combines narrative text, question-answer pairs, and the source or modified code of the charts. Previous datasets support only 1 to 3 chart understanding tasks, whereas ChartLlama supports up to 10 chart understanding and generation tasks, which better helps train large vision-language models to understand the information in charts.
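The last two phases can also be sketched. Because the data behind each chart is known, answers to questions, including ones that need arithmetic, can be computed instead of guessed, and the plotting script itself can serve as the target for chart-to-code samples. In ChartLlama both come from GPT-4; the template functions below (`make_qa_pairs`, `make_plot_script`) are hypothetical stand-ins, and the question wording is an assumption. The Matplotlib script is emitted as text, so the sketch runs without Matplotlib installed.

```python
import json


def make_qa_pairs(title: str, data: dict[str, float]) -> list[dict[str, str]]:
    """Hypothetical template QA pairs whose answers are computed from the data."""
    labels = list(data)
    top = max(labels, key=lambda k: data[k])
    low = min(labels, key=lambda k: data[k])
    first, last = labels[0], labels[-1]
    return [
        {"question": f"In the chart '{title}', which category has the highest value?",
         "answer": top},
        # Arithmetic question: the answer is exact because the data is known.
        {"question": "What is the difference between the highest and lowest values?",
         "answer": str(round(data[top] - data[low], 1))},
        {"question": f"By how much does the value change from {first} to {last}?",
         "answer": str(round(data[last] - data[first], 1))},
    ]


def make_plot_script(title: str, data: dict[str, float], kind: str = "bar") -> str:
    """Emit (not run) a Matplotlib script that would render the chart."""
    if kind not in ("bar", "line"):
        raise ValueError(f"unsupported chart type: {kind}")
    call = "plt.bar(labels, values)" if kind == "bar" else "plt.plot(labels, values, marker='o')"
    return "\n".join([
        "import matplotlib.pyplot as plt",
        f"labels = {json.dumps(list(data))}",           # JSON lists are valid Python literals here
        f"values = {json.dumps(list(data.values()))}",
        call,
        f"plt.title({json.dumps(title)})",
        "plt.savefig('chart.png')",
    ])
```

Under the same assumptions, a chart-editing sample could pair the `kind="bar"` script with an instruction such as "turn this into a line chart" and use the `kind="line"` script as the target.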

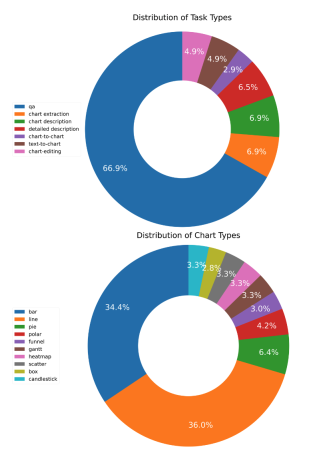

Following these steps, ChartLlama built a dataset covering multiple tasks and multiple chart types. The proportions of each task type and chart type in the full dataset are as follows:

Please refer to the original paper for more detailed explanations.

Experimental results

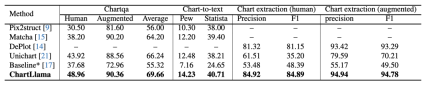

On both traditional and new tasks, ChartLlama achieves the best performance. The traditional tasks are chart question answering, chart summarization and structured data extraction from charts. ChartLlama was compared with previous state-of-the-art models; the results are shown below:

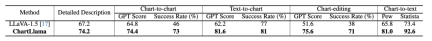

The researchers also evaluated ChartLlama's capabilities on the new tasks, including generating chart code, summarizing charts and editing charts. They built test sets for these tasks and compared ChartLlama with LLaVA-1.5, at the time the strongest open-source vision-language model. The results are as follows:

The research team also tested ChartLlama's question-answering accuracy on a variety of chart types and compared it with the previous SOTA model, UniChart, and with the proposed baseline model. The results are as follows:

Overall, ChartLlama not only pushes the boundaries of multimodal learning but also provides a more accurate and efficient tool for chart understanding and generation. Whether in academic writing or business presentations, ChartLlama can make understanding and creating charts more intuitive and efficient, marking an important step forward in generating and interpreting complex visual data.

Interested readers can refer to the original paper for more details.
