
A new breakthrough in AI simultaneous interpretation, Google releases Translatotron 3 model: it can bypass the text conversion step

- WBOY

- 2023-12-02 17:17:13

Google issued a press release today officially introducing its new artificial intelligence model, Translatotron 3. The model requires no parallel speech data and performs speech-to-speech translation (S2ST) for simultaneous interpretation.

Google launched the first Translatotron S2ST system in 2019 and released the second version in July 2021. In a paper published on May 27, 2023, Google said it is using new methods to train Translatotron 3.

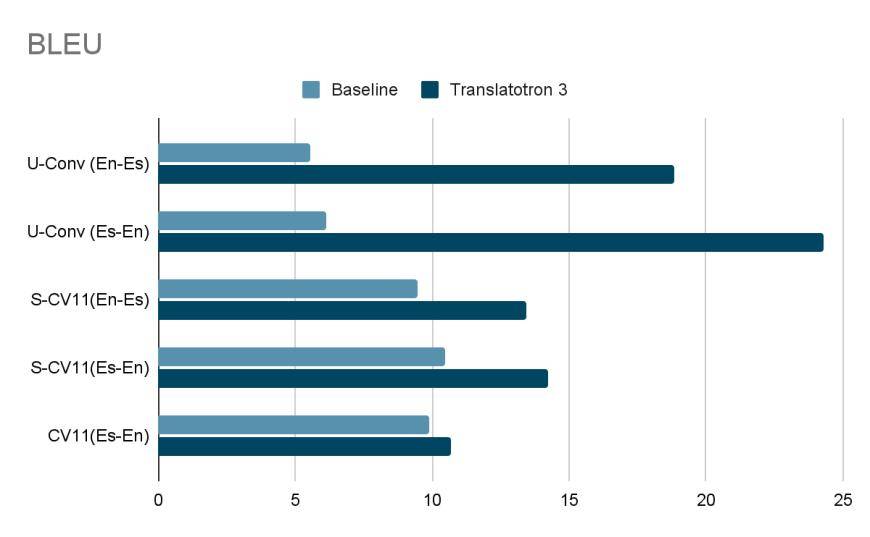

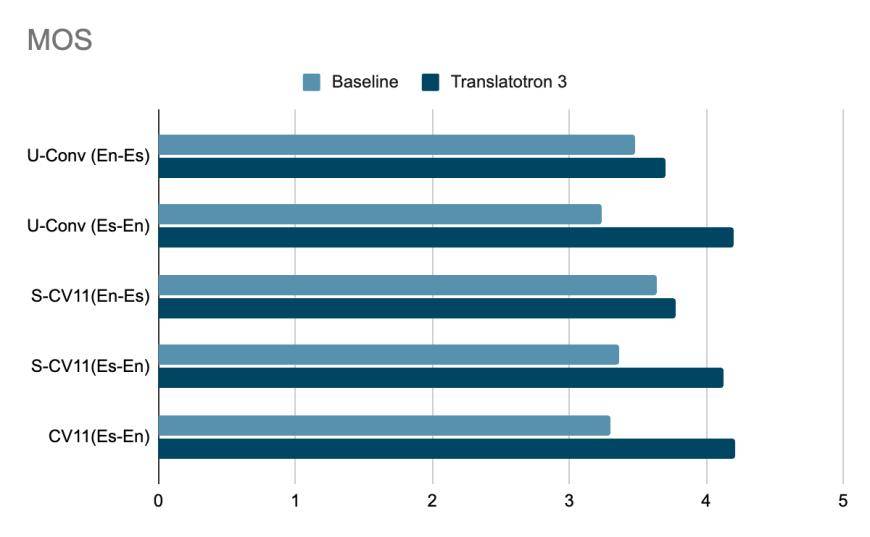

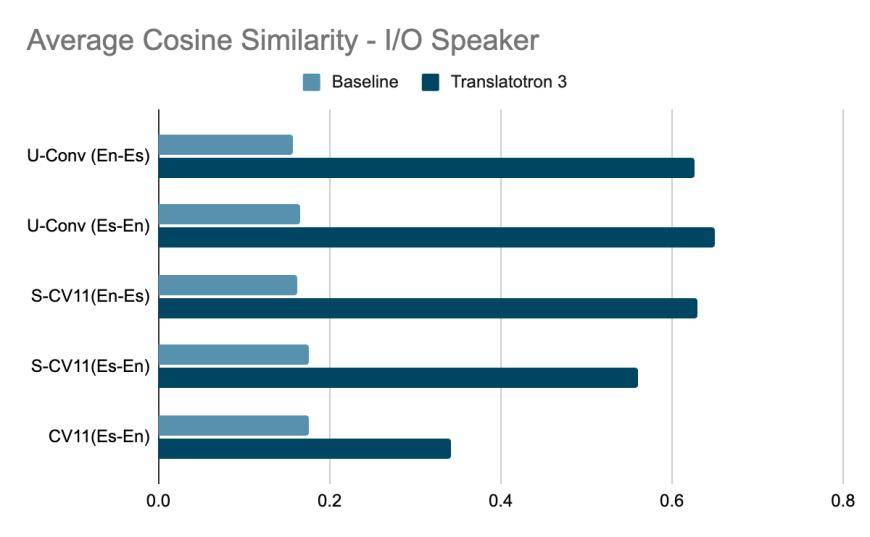

According to the researchers, Translatotron 2 already delivers excellent translation quality, speech robustness, and speech naturalness, while Translatotron 3 is "the first fully unsupervised end-to-end model of direct speech-to-speech translation".

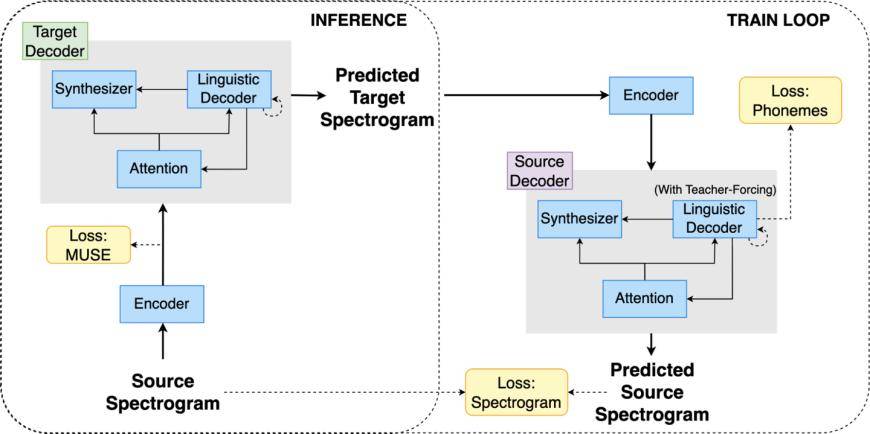

Traditionally, S2ST has been handled by a cascade of automatic speech recognition, machine translation, and text-to-speech synthesis. Translatotron 3 instead adopts a novel end-to-end architecture that maps source-language speech directly to target-language speech without relying on an intermediate text representation.
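To make the contrast concrete, here is a minimal Python sketch of the two pipeline shapes. It is not Google's code: the `Audio` type, the function names, and the toy stub components are all assumptions made up for illustration, and the stubs perform no real recognition, translation, or synthesis.

```python
from typing import Callable, List

# Hypothetical stand-in for a waveform; real systems use richer audio types.
Audio = List[float]


def cascade_s2st(
    audio: Audio,
    asr: Callable[[Audio], str],
    mt: Callable[[str], str],
    tts: Callable[[str], Audio],
) -> Audio:
    """Cascade: speech -> source text -> target text -> speech."""
    source_text = asr(audio)       # automatic speech recognition
    target_text = mt(source_text)  # machine translation
    return tts(target_text)        # text-to-speech synthesis


def direct_s2st(audio: Audio, model: Callable[[Audio], Audio]) -> Audio:
    """Direct: a single model maps source speech to target speech, with no text step."""
    return model(audio)


# Toy stubs (assumptions) so the sketch runs end to end.
def toy_asr(audio: Audio) -> str:
    return "hola"


def toy_mt(text: str) -> str:
    return {"hola": "hello"}.get(text, text)


def toy_tts(text: str) -> Audio:
    return [float(len(text))]


def toy_direct(audio: Audio) -> Audio:
    return [5.0]


cascade_out = cascade_s2st([0.1, 0.2], toy_asr, toy_mt, toy_tts)
direct_out = direct_s2st([0.1, 0.2], toy_direct)
```

In the cascade, the speech passes through two text representations before it becomes audio again, so each stage can add its own errors. In the direct form there is no text hand-off at all.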

The Translatotron 3 model could also be used to create tools to help people with language impairments, or to develop more engaging and effective personalized language learning tools.

Source: IT Home
