Jia Yangqing, Alibaba's former chief AI scientist, speaks out again: "magic modification" is unnecessary in open source

- 王林 (Wang Lin), reposted

- 2023-11-17 13:43:06

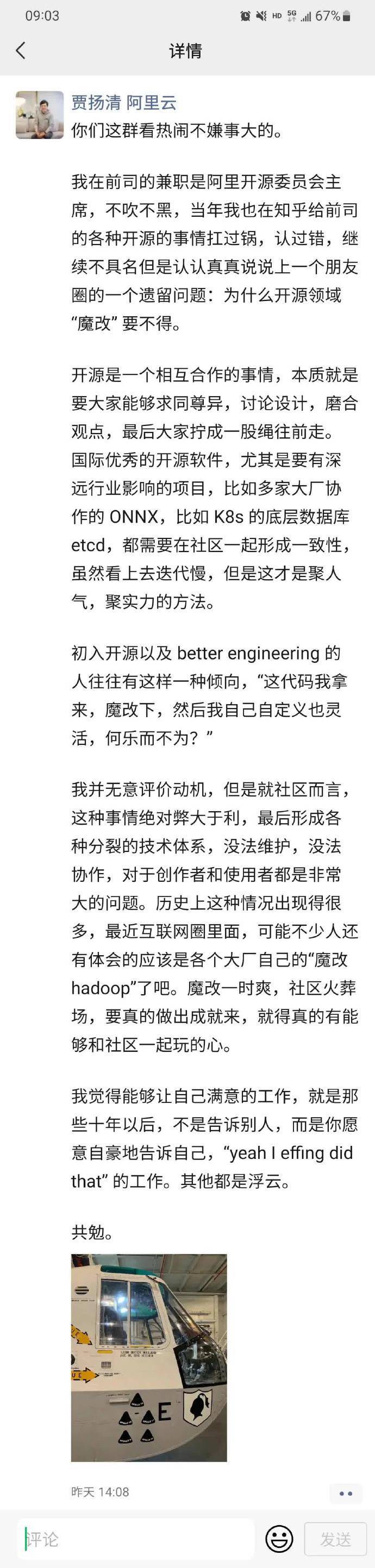

Recently, Jia Yangqing, Alibaba's former chief AI scientist, complained that a new Chinese large model actually uses the LLaMA architecture and merely renames a few variables in the code, sparking heated discussion online. After the model's developers responded to the accusation and published details of their training process, Jia Yangqing posted again, explaining why "magic modification" (heavily tweaking someone else's open-source work and presenting it as new) is unnecessary in the open-source field.

He added that although "magic modification" may bring short-term satisfaction, anyone who truly wants to succeed in the community must be genuinely willing to work with it.
