How to implement FMCW radar position recognition elegantly (IROS2023)

Hello everyone, my name is Yuan Jianhao, and I am very happy to come to the Heart of Autonomous Driving platform to share our work on radar position recognition at IROS2023.

Localization using Frequency Modulated Continuous Wave (FMCW) radar is attracting increasing interest because of its inherent resistance to challenging environments. However, complex artifacts of the radar measurement process require appropriate uncertainty estimates to ensure the safe and reliable application of this promising sensor modality. In this work, we propose a multi-session map management system that builds an “optimal” map for further localization, based on variance properties learned in the embedding space. Using the same variance properties, we also propose a new method to introspectively reject localization queries that are likely to be incorrect. To this end, we apply robust noise-aware metric learning that both exploits short-timescale variations in radar data along the driving path (for data augmentation) and predicts the downstream uncertainty in metric-space-based place recognition. We demonstrate the effectiveness of our approach through extensive cross-validation testing on the Oxford Radar RobotCar and MulRan datasets. Here, we surpass the current state of the art in radar place recognition, as well as other uncertainty-aware methods, using only a single nearest-neighbor query. We also show a performance increase in a difficult test environment when rejecting queries based on uncertainty, something we did not observe with competing uncertainty-aware place recognition systems.

Off the Radar’s starting point

Position identification and localization are important tasks in the field of robotics and autonomous systems, as they enable the system to understand and navigate its environment. Traditional vision-based location recognition methods are often susceptible to changes in environmental conditions, such as lighting, weather, and occlusion, resulting in performance degradation. To address this issue, there is increasing interest in using FMCW radar as a robust sensor alternative under such adverse conditions.

Existing work has demonstrated the effectiveness of hand-crafted and learning-based feature extraction methods for FMCW radar position recognition. Despite this success, the deployment of these methods in safety-critical applications such as autonomous driving is still limited by the lack of calibrated uncertainty estimates. In this area, the following points need to be considered:

- Safety requires uncertainty estimates that are well calibrated against the false positive rate, to enable introspective rejection;

- Real-time deployment requires fast inference based on single-scan uncertainty;

- Repeated route traversal in long-term autonomy requires online continuous map maintenance.

Although VAEs are commonly used for generative tasks, their probabilistic latent space can serve as an effective metric space representation for location recognition and allows prior assumptions about the data noise distribution, which also provides a normalized aleatoric uncertainty estimate. Therefore, in this paper, in order to achieve reliable and safe deployment of FMCW radar in autonomous driving, we utilize a variational contrastive learning framework and propose a unified uncertainty-based radar position identification method.

System Process Overview


In the offline stage, we use a variational contrastive learning framework to learn a latent embedding space with estimated uncertainty, such that radar scans from similar topological locations are close to each other and scans from dissimilar locations are far apart. In the online stage, we develop two uncertainty-based mechanisms to handle continuously collected radar scans for inference and map construction. For repeated traversals of the same route, we actively maintain an integrated map dictionary by replacing highly uncertain scans with more certain scans. For query scans with low uncertainty, we retrieve matching map scans from the dictionary based on metric space distance. In contrast, we reject predictions for scans with high uncertainty.

Introduction to the method of Off the Radar

This article introduces a variational contrastive learning framework for radar position identification that describes the uncertainty in position identification. The main contributions include:

- An uncertainty-aware contrastive learning framework.

- An introspective query mechanism based on calibrated uncertainty estimates.

- Online recursive map maintenance for changing environments.

Variational Contrastive Learning

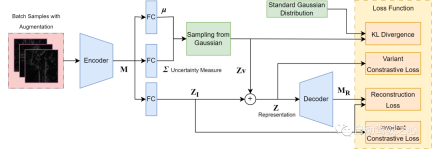

Overview of the variational contrastive learning framework, based on [^Lin2018dvml]. An encoder-decoder structure learns a metric space with two reparameterized parts: a deterministic embedding for recognition and a set of parameters modeling a multivariate Gaussian distribution, with its variance as the uncertainty measure. The overall learning is driven by both reconstruction and contrastive losses to ensure informative and discriminative latent representations of radar scans.

The work in this part is both a key enabler of our core contributions and a novel integration of deep variational metric learning with radar position recognition, as well as a new way to represent uncertainty in position recognition. As shown in the figure, we adopt a structure that decomposes the radar scan embedding into a noise-induced variable part, which captures the variance from sources of uncertainty unrelated to the prediction, and a semantically invariant part, which represents the essential characteristics of the scene. The variable part is later sampled from a prior isotropic multivariate Gaussian distribution and added to the invariant part to form the overall representation. The variance output is used directly as an uncertainty measure. We assume that the main source of uncertainty is aleatoric uncertainty in model predictions, caused by inherent ambiguity and randomness in the data. For radar scans in particular, this can be due to speckle noise, saturation, and temporary occlusions. Standard metric learning methods, regardless of the chosen loss function, tend to force identical embeddings between pairs of positive examples while ignoring the potential variance between them. However, this may cause the model to be insensitive to small features and to overfit the training distribution. Therefore, to model the noise variance, we use the additional probabilistic variance output in the structure to estimate the aleatoric uncertainty. To build such a noise-aware representation of radar perception, we use four loss functions to guide the overall training.

1) Invariant contrastive loss on the deterministic representation (Z_I), which separates task-irrelevant noise from radar semantics so that the invariant embedding contains sufficient causal information; and

2) Variable contrastive loss on the overall representation (Z), which establishes a meaningful metric space.

Both contrastive losses take the same form, computed over a batch that consists of m samples and their temporally proximal frame augmentations with synthetic rotation (the "rotation" strategy, which is simply rotational augmentation for rotation invariance). Our goal is to maximize the probability that an augmented sample is recognized as the original instance, while minimizing the probability of the reverse case. In this form, the embedding is (Z_I) for loss 1) and (Z) for loss 2).

3) Kullback–Leibler (KL) divergence between the learned Gaussian distribution and the standard isotropic multivariate Gaussian distribution, which is our prior assumption about the data noise. This ensures the same noise distribution across all samples and provides a static reference for the absolute value of the variance output.

4) Reconstruction loss between the extracted feature map (M) and the decoder output (M_R), which forces the overall representation (Z) to contain enough information from the original radar scan for reconstruction. However, we reconstruct only a lower-dimensional feature map rather than the radar scan at pixel level, to reduce the computational cost of decoding.
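The variable part and losses 3) and 4) can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: parameterizing the variable part by a mean `mu` and a log-variance `log_var`, and using mean squared error for the reconstruction term, are assumptions borrowed from common VAE practice, and all function names are hypothetical.

```python
import numpy as np

def sample_overall_embedding(z_invariant, mu, log_var, rng):
    """Form the overall representation Z = Z_I + sampled variable part.

    ASSUMPTION: the variable part is drawn with the usual reparameterization
    trick, mu + sigma * eps with eps ~ N(0, I).
    """
    eps = rng.standard_normal(mu.shape)
    z_variable = mu + np.exp(0.5 * log_var) * eps
    return z_invariant + z_variable

def kl_to_standard_normal(mu, log_var):
    """Closed-form KL( N(mu, diag(exp(log_var))) || N(0, I) ), one value per sample."""
    return 0.5 * np.sum(np.exp(log_var) + mu ** 2 - 1.0 - log_var, axis=-1)

def reconstruction_loss(feature_map, decoded_map):
    """ASSUMPTION: mean squared error between feature map M and decoder output M_R."""
    return float(np.mean((feature_map - decoded_map) ** 2))
```

When the predicted distribution already equals the prior (`mu = 0`, `log_var = 0`), the KL term is zero, which is why a variance near 1 can act as a fixed reference point for the uncertainty values.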

While the ordinary VAE structure driven only by KL divergence and reconstruction loss also provides a latent variance, it is considered unreliable for uncertainty estimation because of its well-known posterior collapse and vanishing variance problems. This ineffectiveness is mainly due to the imbalance of the two losses during training: when the KL divergence dominates, the latent space posterior is forced to equal the prior, while when the reconstruction loss dominates, the latent variance is pushed to zero. In our approach, however, we achieve more stable training by introducing the variable contrastive loss as an additional regularizer, where the variance is driven to maintain robust boundaries between cluster centers in the metric space. As a result, we obtain a more reliable latent space variance that reflects the underlying aleatoric uncertainty in radar perception. We choose to demonstrate the benefits of our approach to learning uncertainty in a multi-negative loss setting: state-of-the-art techniques for radar position recognition already use losses with many (i.e., more than two) negative samples, so we build on that basis.
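The batch contrastive objective from items 1) and 2) can be sketched as below. The exact equation is not reproduced in this text, so the sketch assumes an instance-level softmax form. It rewards recognizing each augmented sample as its original instance and penalizes recognizing a different original as that instance. The function name, the cosine normalization, and the `temperature` value are hypothetical.

```python
import numpy as np

def _softmax_rows(logits):
    # Numerically stable softmax over each row.
    shifted = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=1, keepdims=True)

def batch_contrastive_loss(z, z_aug, temperature=0.1):
    """z, z_aug: (m, d) embeddings of m scans and of their augmentations.

    ASSUMPTION: instance-level softmax loss; not confirmed as the paper's form.
    """
    m = z.shape[0]
    z = z / np.linalg.norm(z, axis=1, keepdims=True)
    z_aug = z_aug / np.linalg.norm(z_aug, axis=1, keepdims=True)

    # p_aug[i, k]: probability that augmentation i is recognized as instance k.
    p_aug = _softmax_rows(z_aug @ z.T / temperature)
    p_positive = np.diag(p_aug)

    # p_orig[j, i]: probability that original j is recognized as instance i.
    p_orig = _softmax_rows(z @ z.T / temperature)
    off_diagonal = ~np.eye(m, dtype=bool)

    eps = 1e-12  # guards the logarithms
    loss = -np.sum(np.log(np.clip(p_positive, eps, None)))
    loss -= np.sum(np.log(np.clip(1.0 - p_orig[off_diagonal], eps, None)))
    return float(loss / m)
```

The same function would be applied once to the invariant embeddings (Z_I) and once to the overall embeddings (Z).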

Continuous Map Maintenance

Continuous map maintenance is an important feature of the online system, as we aim to fully utilize the scan data obtained during autonomous vehicle operations, and improve the map recursively. The process of merging new radar scans into a parent map consisting of previously traversed scans is as follows. Each radar scan is represented by a hidden representation and an uncertainty metric. During the merging process, we search for matching positive samples for each new scan with a topological distance below a threshold. If the new scan has lower uncertainty then it is integrated into the parent map and replaces the matching scan, otherwise it is discarded.

Map maintenance diagram: red and green nodes represent radar scans with higher and lower uncertainties respectively. We always maintain a parent map as a positioning reference for each location, consisting only of scans with the lowest uncertainty. Note that the dashed edges represent the parent map's initial state, and the solid edges represent updated versions of the parent map.

By performing the maintenance process iteratively, we can gradually improve the quality of the integrated parent map. Therefore, the maintenance algorithm can serve as an effective online deployment strategy because it continuously exploits multiple experiences of the same route traversal to improve recognition performance while maintaining a constant parent map size, resulting in budgeted computational and storage costs.
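The merge rule described above can be sketched as follows. The `MapScan` container, the use of ground-truth positions to find the matching scan, and the choice to discard a new scan that has no match within the threshold (which keeps the map size constant) are assumptions made for illustration.

```python
from dataclasses import dataclass
import numpy as np

@dataclass
class MapScan:
    position: np.ndarray   # topological position, e.g. GPS/INS (x, y) in metres
    embedding: np.ndarray  # latent representation of the scan
    uncertainty: float     # scalar uncertainty measure

def merge_scan(parent_map, new_scan, distance_threshold):
    """Try to merge new_scan into parent_map in place.

    Returns True if the new scan replaced its matching map scan.
    """
    if not parent_map:
        return False
    distances = [np.linalg.norm(s.position - new_scan.position) for s in parent_map]
    idx = int(np.argmin(distances))
    if distances[idx] > distance_threshold:
        # ASSUMPTION: no matching scan nearby, so the new scan is discarded.
        return False
    if new_scan.uncertainty < parent_map[idx].uncertainty:
        parent_map[idx] = new_scan  # replace the less certain scan
        return True
    return False                    # otherwise discard the new scan
```

Because a scan is only ever replaced, never appended, the parent map keeps a fixed size across repeated traversals.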

Introspection Query

Because the KL divergence term regularizes the latent distribution toward a standard Gaussian, the estimated variance is close to 1 in all dimensions. Therefore, we can fully define the scale and resolution of uncertainty rejection using two hyperparameters, \Delta and N, which together determine the threshold T.

Given a scan with an m-dimensional latent variance, we average over all dimensions to obtain a scalar uncertainty measure.

Prediction Rejection

At inference time, we perform introspective query rejection, where query scans with variances above a defined threshold are rejected for identification. Existing methods, such as STUN and MC Dropout, dynamically divide the uncertainty range of a batch of samples into threshold levels. However, this requires multiple samples during inference and may lead to unstable rejection performance, especially when there are only a small number of samples. In contrast, our static thresholding strategy provides sample-independent threshold levels and provides consistent single-scan uncertainty estimation and rejection. This feature is critical for real-time deployment of location recognition systems, since radar scans are acquired frame by frame while driving.
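A minimal sketch of single-scan introspective rejection, under stated assumptions: the threshold is passed in as a plain number (how it is derived from \Delta and N is not shown here), retrieval uses Euclidean distance in the embedding space, and the function names are hypothetical.

```python
import numpy as np

def scalar_uncertainty(latent_variance):
    """Average the m-dimensional latent variance into one scalar."""
    return float(np.mean(latent_variance))

def introspective_query(query_embedding, latent_variance, map_embeddings, threshold):
    """Return the index of the nearest map scan, or None if the query is rejected.

    ASSUMPTION: a single nearest-neighbour lookup with Euclidean distance.
    """
    if scalar_uncertainty(latent_variance) > threshold:
        return None  # too uncertain: refuse to localize on this scan
    distances = np.linalg.norm(map_embeddings - query_embedding, axis=1)
    return int(np.argmin(distances))
```

The decision depends only on the current scan and a fixed threshold, so it needs no batch of other queries at inference time.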

Experimental details

This article uses two data sets: 1) Oxford Radar RobotCar and 2) MulRan. Both data sets use the CTS350-X Navtech FMCW scanning radar. The radar system operates in the 76 GHz to 77 GHz range and can generate up to 3768 range readings with a resolution of 4.38 cm.

Benchmarks: Recognition performance is benchmarked against several existing methods, including the vanilla VAE, the state-of-the-art radar place recognition method proposed by Gadd et al. (referred to as BCRadar), and the non-learning-based method RingKey (part of ScanContext, without rotation refinement). Additionally, performance is compared with MC Dropout and STUN, which serve as baselines for uncertainty-aware place recognition.

Ablation Study: To evaluate the effectiveness of our proposed introspective query (Q) and map maintenance (M) modules, we performed an ablation study comparing different variants of our approach, denoted OURS(O/M/Q/QM), as follows:

- O: No map maintenance, no introspection query

- M: Only map maintenance

- Q: Introspection query only

- QM: Both map maintenance and introspection query

Specifically, we compared recognition performance between O and M, and uncertainty estimation performance between Q and QM.

Common Settings: To ensure a fair comparison, we adopt a common batch contrastive loss for all contrastive-learning-based methods, so that the loss function is consistent across benchmarks.

Implementation Details

Scan Settings

For all methods, we convert the polar radar scans, with A = 400 azimuths and B = 3768 range bins of size 4.38 cm each, to Cartesian scans with a side length of W = 256 and a bin size of 0.5 m.
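The polar-to-Cartesian conversion can be sketched with a nearest-neighbour lookup. This is an illustration only: the function name, the azimuth convention (angle zero along the +x axis, azimuths evenly spaced over a full turn), and placing the sensor at the image centre are assumptions, and a real pipeline may interpolate instead.

```python
import numpy as np

def polar_to_cartesian(polar, range_res, cart_width, cart_res):
    """Resample a polar scan of shape (azimuths, range bins) onto a square grid.

    range_res: size of one range bin in metres (4.38 cm in the text).
    cart_width, cart_res: output side length in pixels and pixel size in metres.
    """
    num_azimuths, num_bins = polar.shape
    half = (cart_width - 1) / 2.0
    coords = (np.arange(cart_width) - half) * cart_res  # pixel centres in metres
    x, y = np.meshgrid(coords, coords)

    # Range and bearing of every output pixel as seen from the sensor.
    ranges = np.hypot(x, y)
    theta = np.mod(np.arctan2(y, x), 2.0 * np.pi)

    az_idx = np.round(theta / (2.0 * np.pi) * num_azimuths).astype(int) % num_azimuths
    bin_idx = np.round(ranges / range_res).astype(int)

    cart = np.zeros((cart_width, cart_width), dtype=polar.dtype)
    valid = bin_idx < num_bins  # pixels beyond the maximum range stay zero
    cart[valid] = polar[az_idx[valid], bin_idx[valid]]
    return cart
```

With the settings in the text this would be called as `polar_to_cartesian(scan, 0.0438, 256, 0.5)` on a 400 x 3768 array.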

Training hyperparameters

We use VGG-19 [^simonyan2014very] as the backbone feature extractor and use a linear layer to project the extracted features to a lower embedding dimension d=128. We trained all baselines for 10 epochs on Oxford Radar RobotCar and 15 epochs on MulRan, with a learning rate of 1e-5 and a batch size of 8.

Evaluation Metrics

To evaluate location recognition performance, we use the Recall@N (R@N) metric, which measures localization accuracy by checking whether at least one of the N retrieved candidates is close to the ground truth given by GPS/INS. This is particularly important for safety assurance in autonomous driving applications, as it reflects the system's calibration with respect to the false negative rate. We also use Average Precision (AP) to measure the average precision across all recall levels. Finally, we use F-scores with \beta=2/1/0.5, which weight the importance of recall relative to precision, as a comprehensive indicator of overall recognition performance.
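As a hedged illustration of these metrics, the sketch below computes Recall@N from embeddings and ground-truth positions and the standard F-beta score from a precision/recall pair. The function names, the Euclidean retrieval distance, and the `dist_threshold` parameter that defines "close to the ground truth" are assumptions; the text does not state the threshold used.

```python
import numpy as np

def recall_at_n(query_emb, query_pos, map_emb, map_pos, n, dist_threshold):
    """Fraction of queries with at least one of the top-n candidates near the truth."""
    hits = 0
    for q_emb, q_pos in zip(query_emb, query_pos):
        emb_dist = np.linalg.norm(map_emb - q_emb, axis=1)
        top_n = np.argsort(emb_dist)[:n]               # n nearest in embedding space
        geo_dist = np.linalg.norm(map_pos[top_n] - q_pos, axis=1)
        hits += bool(np.any(geo_dist <= dist_threshold))
    return hits / len(query_emb)

def f_beta(precision, recall, beta):
    """F-beta score; beta > 1 weights recall more, beta < 1 weights precision more."""
    denom = beta ** 2 * precision + recall
    if denom == 0:
        return 0.0
    return (1 + beta ** 2) * precision * recall / denom
```

For example, with precision 1.0 and recall 0.5, `f_beta(1.0, 0.5, 2.0)` evaluates to 5/9, lower than the F-1 score of 2/3, because the low recall is weighted more heavily.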

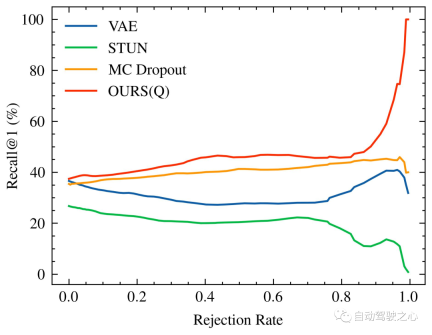

In addition, to evaluate uncertainty estimation performance, we use Recall@RR: we perform introspective query rejection and evaluate Recall@N=1 at different uncertainty threshold levels, rejecting all queries whose scan uncertainty is greater than the threshold. As a result, we reject between 0% and 100% of queries.
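One way to compute a single point of such a curve is sketched below, assuming per-query Recall@1 outcomes and scalar uncertainties are already available. The function name and the returned pair are hypothetical.

```python
import numpy as np

def recall_with_rejection(correct_at_1, uncertainty, threshold):
    """Return (rejection_rate, recall_at_1) over the queries that are kept.

    correct_at_1: boolean per query, True if the top-1 match was correct.
    uncertainty:  scalar uncertainty per query.
    """
    correct = np.asarray(correct_at_1, dtype=bool)
    unc = np.asarray(uncertainty, dtype=float)
    keep = unc <= threshold                 # reject queries above the threshold
    rejection_rate = 1.0 - float(np.mean(keep))
    recall = float(np.mean(correct[keep])) if keep.any() else float("nan")
    return rejection_rate, recall
```

Sweeping the threshold from high to low traces how Recall@1 changes as the rejection rate grows; a well-calibrated uncertainty should make the recall rise.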

Summary of results

Location recognition performance

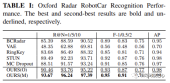

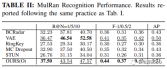

As shown in Table 1 for the Oxford Radar RobotCar experiment, our method achieves the highest performance on all metrics using the metric learning module alone. In particular, for Recall@1, OURS(O) demonstrates the effectiveness of the variance-decoupled representation learned through the variational contrastive learning framework, achieving a recognition performance of over 90.46%. This is further supported by the MulRan results in Table 2, where our method has the best Recall@1, overall F-scores, and AP among all methods. Although VAE outperforms our method on Recall@5/10 in the MulRan experiment, our method achieves the best F-1/0.5/2 and AP in both settings, which shows that it has higher precision and recall and therefore more accurate and robust recognition performance.

Oxford Radar RobotCar recognition performance. The best and second-best results are shown in bold and underlined, respectively.

MulRan recognition performance. The format is the same as above.

In addition, by leveraging continuous map maintenance on Oxford Radar RobotCar, we were able to further improve Recall@1 to 93.67%, exceeding the current state-of-the-art method, STUN, by 4.18%. This further demonstrates the effectiveness of the learned variance as an uncertainty measure and of the uncertainty-based map integration strategy in improving place recognition performance.

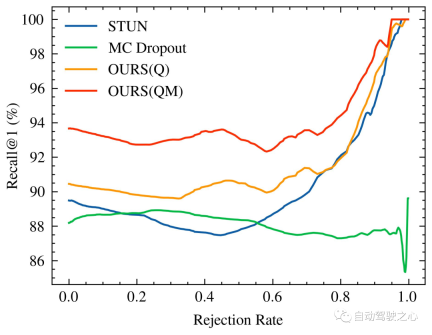

Uncertainty Estimation Performance

Recognition performance, specifically Recall@1, changes as the percentage of rejected uncertain queries increases, as shown in Figure 1 for the Oxford Radar RobotCar experiment and in Figure 2 for the MulRan experiment. Notably, our method is the only one that shows continuously improving recognition performance as the rejection rate of uncertain queries increases in both experimental settings. In the MulRan experiment, OURS(Q) is the only method whose Recall@RR improves steadily as the rejection rate increases. Compared with VAE and STUN, which like our method also estimate model uncertainty, OURS(Q) improves by (1.32/3.02/8.46)% at Recall@RR=0.1/0.2/0.5, while VAE and STUN drop by (3.79/5.24/8.80)% and (2.97/4.16/6.30)% respectively.

Introspective query rejection performance on Oxford Radar RobotCar. Recall@1 increases/decreases as the percentage of rejected uncertain queries increases. Since the performance of VAE is low compared with the other methods (specifically (48.42/48.08/18.48)% at Recall@RR=0.1/0.2/0.5), it is not visualized.

Introspective query rejection performance on MulRan. The format is the same as above.

On the other hand, MC Dropout, which estimates epistemic uncertainty due to data bias and model error, shows a larger increase in Recall@1 in the early stages of the Oxford Radar RobotCar experiment, but its performance is generally lower than ours and it fails to achieve greater improvements as the rejection rate increases further. Finally, comparing OURS(Q) and OURS(QM) in the Oxford Radar RobotCar experiment, we observe that Recall@RR follows a similar pattern of change for both, with a considerable gap between them. This suggests that the introspective query and map maintenance mechanisms contribute independently to the place recognition system, each leveraging the uncertainty measure in an integral way.

Discussion about Off the Radar

Qualitative Analysis and Visualization

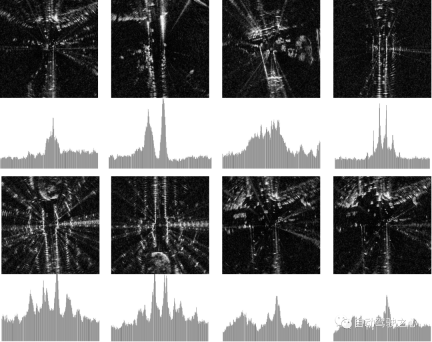

To qualitatively assess the sources of uncertainty in radar perception, we provide a visual comparison of high- and low-uncertainty samples from the two datasets, estimated using our method. As shown, high-uncertainty radar scans typically show heavy motion blur and sparse undetected areas, while low-uncertainty scans typically contain distinct features with stronger intensity in the histogram.

Visualization of radar scans with different uncertainty levels. The four examples on the left are from the Oxford Radar RobotCar dataset, and the four examples on the right are from MulRan. We show the top-10 samples with the highest (top) / lowest (bottom) uncertainty. The radar scans are displayed in Cartesian coordinates with enhanced contrast. The histogram below each image shows the RingKey descriptor features of the intensity extracted across all azimuth angles.

This further supports our hypothesis about the sources of uncertainty in radar perception and serves as qualitative evidence that our uncertainty measure captures this data noise.

Dataset Differences

In our benchmark experiments, we observed considerable differences in recognition performance between the two datasets. We believe the amount of available training data may be a legitimate reason. The Oxford Radar RobotCar training set includes more than 300 km of driving experience, whereas the MulRan dataset includes only about 120 km. However, also consider the performance drop of the RingKey descriptor method. This suggests that there may be inherently indistinguishable features in radar scene perception. For example, we found that environments with sparse open areas often lead to similar scans and suboptimal recognition performance. On this dataset, we show what happens to our system and the various baselines in such high-uncertainty situations.

Original link: https://mp.weixin.qq.com/s/wu7whicFEAuo65kYp4quow
