LLaMA fine-tuning reduces memory requirements by half, Tsinghua proposes 4-bit optimizer

Training and fine-tuning large models places heavy demands on GPU memory, and the optimizer state is one of its main consumers. Recently, the team of Zhu Jun and Chen Jianfei at Tsinghua University proposed a 4-bit optimizer for neural network training, which reduces the memory overhead of model training while achieving accuracy comparable to that of a full-precision optimizer.

The 4-bit optimizer was evaluated on numerous pre-training and fine-tuning tasks and can reduce the memory overhead of fine-tuning LLaMA-7B by up to 57% without loss of accuracy.

Paper: https://arxiv.org/abs/2309.01507

Code: https://github.com/thu-ml/low-bit-optimizers

Memory bottleneck of model training

From GPT-3 and Gopher to LLaMA, it has become an industry consensus that larger models perform better. In contrast, the memory of a single GPU has grown slowly, which makes GPU memory the main bottleneck for large model training. How to train large models with limited GPU memory has become an important problem.

To this end, we first need to clarify where GPU memory is consumed. There are three types of sources:

1. "Data memory", which includes the input data and the activations output by each layer of the neural network; its size is directly affected by the batch size and the image resolution or context length;

2. "Model memory", which includes the model parameters, gradients, and optimizer states; its size is proportional to the number of model parameters;

3. "Temporary memory", which includes temporary buffers and other caches used in GPU kernel computations.

As the size of the model increases, the share of model memory gradually increases, becoming a major bottleneck.

The size of the optimizer state is determined by which optimizer is used. Currently, Transformers are usually trained with AdamW, which needs to store and update two optimizer states during training: the first and second moments. If the number of model parameters is N, then AdamW stores 2N optimizer state values, which is a huge memory overhead.

Take LLaMA-7B as an example. The model has about 7B parameters. If it is fine-tuned with the full-precision (32-bit) AdamW optimizer, the optimizer state occupies approximately 52.2GB of GPU memory. Although the naive SGD optimizer requires no additional state and so avoids this cost, the resulting model quality is difficult to guarantee. Therefore, this article focuses on how to reduce the optimizer state in model memory without compromising optimizer performance.
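The 52.2GB figure can be checked with a few lines of arithmetic. The sketch below assumes a rounded count of 7e9 parameters and reads "GB" as 2^30 bytes; both are assumptions made here to reproduce the number, and the 4-bit estimate ignores the small extra storage for quantization scales.

```python
# Back-of-the-envelope memory for AdamW's optimizer states.
GIB = 2 ** 30  # bytes per binary gigabyte (assumed unit)

def adamw_state_gib(num_params: float, bits_per_value: float) -> float:
    """Memory for the two moment tensors, ignoring quantization metadata."""
    num_state_values = 2 * num_params            # first and second moments
    total_bytes = num_state_values * bits_per_value / 8
    return total_bytes / GIB

n = 7e9                                          # rounded parameter count (assumption)
fp32 = adamw_state_gib(n, 32)
int4 = adamw_state_gib(n, 4)
print(f"32-bit states: {fp32:.1f} GiB")
print(f" 4-bit states: {int4:.1f} GiB")
```

Under these assumptions the states shrink by a factor of eight; the end-to-end saving is smaller because parameters, gradients, and activations are not compressed.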

Methods to save optimizer memory

Currently, in terms of training algorithms, there are three main ways to reduce optimizer memory overhead:

1. Factorization: approximating the optimizer state with a low-rank decomposition;

2. Training only a small number of parameters, which avoids storing most of the optimizer state, as in LoRA;

3. Compression: representing the optimizer state in a low-precision numerical format.

For the compression-based approach, Dettmers et al. (ICLR 2022) proposed 8-bit optimizers for SGD with momentum and AdamW. By using block-wise quantization and a dynamic exponent numerical format, they match the original full-precision optimizers on tasks such as language modeling, image classification, self-supervised learning, and machine translation.

Building on this, this paper further reduces the numerical precision of the optimizer state to 4 bits, proposes quantization methods tailored to the different optimizer states, and arrives at a 4-bit AdamW optimizer. The paper also explores combining compression with low-rank decomposition and proposes a 4-bit Factor optimizer, a hybrid that offers both good performance and better memory efficiency. Both 4-bit optimizers are evaluated on a number of classic tasks, including natural language understanding, image classification, machine translation, and instruction fine-tuning of large models.

On all tasks, the 4-bit optimizer achieves comparable results to the full-precision optimizer while occupying less memory.

Problem setting

Framework of compression-based memory efficient optimizer

First, we need to understand how to introduce compression into commonly used optimizers, which is given by Algorithm 1. Here A is a gradient-based optimizer (such as SGD or AdamW). The optimizer takes the current parameters w, gradient g, and optimizer state s, and outputs the new parameters and optimizer state. In Algorithm 1, the full-precision state s_t is transient, while the low-precision state s̄_t persists in GPU memory. This saves memory because the parameters of a neural network are usually a concatenation of per-layer parameter tensors, so the optimizer update is also performed layer by layer (tensor by tensor). Under Algorithm 1, at most one tensor's optimizer state is held in memory at full precision at any time, while the optimizer states of all other layers remain compressed.
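The decompress, update, compress loop described above can be sketched in plain Python. This is only an illustration of the control flow, not the paper's Algorithm 1 verbatim: `compress` and `decompress` are hypothetical stand-ins (rounding to two decimals instead of real 4-bit quantization), and momentum SGD plays the role of the optimizer A.

```python
from typing import List, Tuple

Vector = List[float]

def compress(state: Vector) -> Vector:
    """Hypothetical stand-in for quantization: keep two decimals."""
    return [round(s, 2) for s in state]

def decompress(state: Vector) -> Vector:
    """Hypothetical stand-in for dequantization."""
    return list(state)

def momentum_sgd(w: Vector, g: Vector, m: Vector,
                 lr: float = 0.1, beta: float = 0.9) -> Tuple[Vector, Vector]:
    """The inner optimizer A: returns new parameters and new state."""
    m_new = [beta * mi + gi for mi, gi in zip(m, g)]
    w_new = [wi - lr * mi for wi, mi in zip(w, m_new)]
    return w_new, m_new

def compressed_step(params: List[Vector], grads: List[Vector],
                    compressed_states: List[Vector]) -> None:
    """Update tensor by tensor; only one state is full precision at a time."""
    for k in range(len(params)):
        s = decompress(compressed_states[k])       # transient full-precision state
        params[k], s = momentum_sgd(params[k], grads[k], s)
        compressed_states[k] = compress(s)         # only the compressed copy persists

params = [[1.0, 2.0], [3.0]]                       # two "layers"
grads = [[0.5, -0.5], [1.0]]
states = [compress([0.0, 0.0]), compress([0.0])]
compressed_step(params, grads, states)
print(params, states)
```

Because the full-precision state `s` is a local variable inside the loop, peak memory holds one decompressed tensor plus the compressed states of every other tensor.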

The main compression method: quantization

Quantization uses low-precision values to represent high-precision data. This article decouples the quantization operation into two parts, normalization and mapping, which makes it easier to design and experiment with new quantization methods. The two operations are applied in sequence to the full-precision data in an element-wise manner. Normalization projects each element of the tensor into the unit interval. Per-tensor normalization divides every element by the largest magnitude in the whole tensor, while block-wise normalization divides it by the largest magnitude within its own fixed-size block.
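Written out for a flattened tensor x with p elements and block size B, the two normalizations can take the following form. The index notation is a reconstruction and should be read as an assumption rather than a quotation from the paper.

```latex
\mathcal{N}_{\text{per-tensor}}(x)_j = \frac{x_j}{\max_{1 \le i \le p} |x_i|},
\qquad
\mathcal{N}_{\text{block-wise}}(x)_j =
  \frac{x_j}{\max\{\, |x_i| : i \text{ lies in the same size-}B\text{ block as } j \,\}}
```

Per-tensor normalization stores one scale for the whole tensor, while block-wise normalization stores one scale per block, which is the source of the extra memory overhead.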

Normalization methods with different granularities differ in their ability to handle outliers and in the additional memory overhead they introduce. The mapping operation maps normalized values to integers that can be represented with low precision. Formally, given a bit width b (that is, each value is represented by b bits after quantization) and a predefined function T that assigns a value in the unit interval to each of the 2^b integer codes, the mapping operation sends each normalized value to the code whose value under T is closest to it.
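In symbols, with n_j denoting a normalized element, the mapping can be written as below. The notation is an assumed reconstruction consistent with the description above.

```latex
T : \{0, 1, \dots, 2^b - 1\} \to [0, 1],
\qquad
\mathcal{M}(n_j) = \operatorname*{arg\,min}_{0 \le i < 2^b} \bigl| n_j - T(i) \bigr|
```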

Therefore, the design of T plays a very important role in reducing quantization error. This article mainly considers the linear mapping and the dynamic exponent mapping. Finally, dequantization applies the inverse operators of mapping and normalization in sequence.
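A minimal sketch of the full round trip, assuming non-negative inputs, block-wise normalization, and a linear table T(i) = i / (2^b - 1). The function names and the tiny block size are illustrative choices, not the library's API; signed data such as the first moment would additionally need a sign bit or a signed table.

```python
from typing import List, Tuple

def linear_table(bits: int) -> List[float]:
    """Linear mapping T: code i -> i / (2^bits - 1), a value in [0, 1]."""
    top = 2 ** bits - 1
    return [i / top for i in range(top + 1)]

def quantize(x: List[float], bits: int = 4,
             block_size: int = 4) -> Tuple[List[int], List[float]]:
    """Block-wise normalization followed by nearest-value mapping."""
    table = linear_table(bits)
    codes: List[int] = []
    scales: List[float] = []
    for start in range(0, len(x), block_size):
        block = x[start:start + block_size]
        scale = max(block) or 1.0      # per-block maximum; 1.0 guards an all-zero block
        scales.append(scale)
        for value in block:
            n = value / scale          # normalization into [0, 1]
            code = min(range(len(table)), key=lambda i: abs(n - table[i]))  # mapping
            codes.append(code)
    return codes, scales

def dequantize(codes: List[int], scales: List[float],
               bits: int = 4, block_size: int = 4) -> List[float]:
    """Inverse mapping (table lookup), then inverse normalization (rescale)."""
    table = linear_table(bits)
    return [table[c] * scales[j // block_size] for j, c in enumerate(codes)]

x = [0.0, 0.2, 0.6, 1.0, 4.0, 12.0, 16.0, 20.0]   # non-negative toy data
codes, scales = quantize(x)
restored = dequantize(codes, scales)
print(codes)
print(scales)
print(restored)
```

Swapping `linear_table` for a different table is all it takes to try another mapping, which is the practical benefit of separating normalization from mapping.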

Compression method of first-order moment

The following describes the quantization methods proposed for the two optimizer states of AdamW (the first and second moments). For the first moment, the quantization method in this paper builds on that of Dettmers et al. (ICLR 2022), which uses block-wise normalization (block size 2048) and dynamic exponent mapping.

In preliminary experiments, we directly reduced the bit width from 8 bits to 4 bits and found that the first moment is very robust to quantization: it achieved matching results on many tasks, although there was a performance loss on some. To further improve performance, we carefully studied the patterns of the first moment and found that a single tensor contains many outliers.

Previous work has studied the outlier patterns of parameters and activations: the distribution of parameters is relatively smooth, while activations exhibit channel-wise outliers. This article found that the distribution of outliers in the optimizer state is more complex, with some tensors having outliers concentrated in fixed rows and others in fixed columns.

Row-first block normalization may encounter difficulties for tensors with column-wise distribution of outliers. Therefore, this paper proposes to use smaller blocks with a block size of 128, which can reduce the quantization error while keeping the additional memory overhead within a controllable range. The figure below shows the quantization error for different block sizes.
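The effect of block size on column-wise outliers can be seen on a toy matrix. The sketch below is illustrative only: it uses a tiny 4x8 matrix with made-up values, a linear 4-bit mapping, and block sizes of 8 and 2 in place of 2048 and 128.

```python
from typing import List

def roundtrip_error(values: List[float], block_size: int, bits: int = 4) -> float:
    """Mean absolute error after block-wise linear quantization of magnitudes."""
    top = 2 ** bits - 1
    total = 0.0
    for start in range(0, len(values), block_size):
        block = values[start:start + block_size]
        scale = max(abs(v) for v in block) or 1.0
        for v in block:
            code = round(abs(v) / scale * top)       # nearest linear level
            total += abs(abs(v) - code / top * scale)
    return total / len(values)

# A matrix stored row by row whose first column holds the outliers.
rows, cols = 4, 8
matrix = [[100.0 if c == 0 else 1.0 + 0.1 * c for c in range(cols)]
          for _ in range(rows)]
flat = [v for row in matrix for v in row]

err_large = roundtrip_error(flat, block_size=8)   # every block spans a full row
err_small = roundtrip_error(flat, block_size=2)   # most blocks avoid the outlier
print(err_large, err_small)
```

With the large block, every block contains one outlier, so all the small entries in that row collapse to the lowest level. With the small block, only the entry sharing a block with the outlier is affected.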

Compression method of second-order moment

Compared with the first moment, the second moment is harder to quantize, and quantizing it can destabilize training. This paper identifies the zero-point problem as the main bottleneck in quantizing the second moment. In addition, it proposes an improved normalization method for ill-conditioned outlier distributions: rank-1 normalization. It also explores factorization of the second moment.

Zero point problem

When quantizing parameters, activations, and gradients, a zero point is often indispensable, and it is also the most frequent value after quantization. However, in Adam's update rule, the size of the update is proportional to the -1/2 power of the second moment, so changes in the range around zero greatly affect the update size and cause instability.

The following figure shows, as histograms, the distribution of the -1/2 power of Adam's second moment before and after quantization, that is, h(v) = 1/(√v + 10^(-6)). If zero is included (figure b), most values are pushed up to 10^6, resulting in large approximation errors. A simple remedy is to remove zero from the dynamic exponent mapping; after doing so (figure c), the approximation of the second moment becomes more accurate. In practice, to make effective use of the limited expressive power of low-precision values, we propose a linear mapping that excludes zero, which achieved good results in experiments.
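A small numeric example makes the zero-point problem concrete. The two tables below are assumptions chosen for illustration: a linear table that contains zero, and a linear table of the form (i + 1) / 2^b that does not. The second-moment values are made up.

```python
import math
from typing import List

EPS = 1e-6

def h(v: float) -> float:
    """h(v) = 1 / (sqrt(v) + eps), the factor that scales Adam's update."""
    return 1.0 / (math.sqrt(v) + EPS)

def nearest(value: float, table: List[float]) -> float:
    """Return the table entry closest to value."""
    return min(table, key=lambda t: abs(value - t))

bits = 4
levels = 2 ** bits
with_zero = [i / (levels - 1) for i in range(levels)]      # 0, 1/15, ..., 1
without_zero = [(i + 1) / levels for i in range(levels)]   # 1/16, 2/16, ..., 1

v_max = 1e-4          # largest second moment in the tensor (made-up)
v = 1e-6              # a small but non-zero entry (made-up)
n = v / v_max         # normalized value, 0.01

q_zero = nearest(n, with_zero) * v_max       # collapses to exactly zero
q_nozero = nearest(n, without_zero) * v_max  # smallest non-zero level

print(h(v), h(q_zero), h(q_nozero))
```

With zero in the table, the small entry is quantized to zero and the update factor jumps by about three orders of magnitude. Without zero, the factor stays within the same order of magnitude as the true value.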

Rank-1 normalization

Based on the complex outlier distributions of the first and second moments, and inspired by the SM3 optimizer, this article proposes a new normalization method named rank-1 normalization. For a non-negative matrix-shaped tensor x∈R^(n×m), its one-dimensional statistics are the maximum of each row and the maximum of each column. Rank-1 normalization then divides each entry by the smaller of its row maximum and its column maximum.
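In symbols, writing r for the row statistics and c for the column statistics, the definitions can be expressed as follows. The symbol names are assumptions of this reconstruction.

```latex
r_i = \max_{1 \le j \le m} x_{i,j}, \qquad
c_j = \max_{1 \le i \le n} x_{i,j}, \qquad
\mathcal{N}_{\text{rank-1}}(x)_{i,j} = \frac{x_{i,j}}{\min\{ r_i,\, c_j \}}
```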

Rank-1 normalization exploits the one-dimensional information of the tensor in a more fine-grained way, so it handles row-wise or column-wise outliers more intelligently and efficiently. In addition, rank-1 normalization generalizes easily to high-dimensional tensors, and as the tensor size increases, the additional memory overhead it incurs is smaller than that of block-wise normalization.
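A minimal sketch of rank-1 normalization on a small matrix, assuming the row-maximum and column-maximum statistics described above. The function name and the toy data are illustrative.

```python
from typing import List, Tuple

Matrix = List[List[float]]

def rank1_normalize(x: Matrix) -> Tuple[Matrix, List[float], List[float]]:
    """Divide each entry by min(row max, column max); x must be non-negative."""
    row_max = [max(row) for row in x]
    col_max = [max(col) for col in zip(*x)]
    normalized: Matrix = []
    for i, row in enumerate(x):
        out_row = []
        for j, value in enumerate(row):
            scale = min(row_max[i], col_max[j])
            # A zero scale implies the entry itself is zero.
            out_row.append(value / scale if scale > 0 else 0.0)
        normalized.append(out_row)
    return normalized, row_max, col_max

# The last column holds outliers.
x = [[1.0, 2.0, 100.0],
     [3.0, 4.0, 200.0],
     [5.0, 6.0, 300.0]]
normalized, row_max, col_max = rank1_normalize(x)
per_tensor = [[v / 300.0 for v in row] for row in x]   # for comparison

print(normalized[0])
print(per_tensor[0])
```

Only n + m scales are stored for an n×m tensor, and the small entries keep far more of the unit interval than under per-tensor normalization, where the outlier column squeezes them toward zero.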

In addition, this article found that the low-rank decomposition of the second moment used in the Adafactor optimizer effectively avoids the zero-point problem, so the combination of low-rank decomposition and quantization was also explored. The figure below shows a series of ablation experiments on the second moment, which confirm that the zero-point problem is the bottleneck in quantizing the second moment and verify the effectiveness of rank-1 normalization and low-rank decomposition.

Experimental results

Based on the observations and methods above, the study proposes two low-precision optimizers, 4-bit AdamW and 4-bit Factor, and compares them with other optimizers, including 8-bit AdamW, Adafactor, and SM3. The evaluation covers a wide range of tasks, including natural language understanding, image classification, machine translation, and instruction fine-tuning of large models. The table below shows the performance of each optimizer on different tasks.

We then tested the memory and computational efficiency of the 4-bit optimizers; the results are shown in the table below. Compared with the 8-bit optimizer, the 4-bit optimizer proposed in this article saves more memory, with a maximum saving of 57.7% in the LLaMA-7B fine-tuning experiment. In addition, we provide a fused-operator version of 4-bit AdamW, which saves memory without affecting computational efficiency. For the instruction fine-tuning task of LLaMA-7B, 4-bit AdamW also speeds up training thanks to reduced cache pressure. Detailed experimental settings and results can be found in the paper.

Replace one line of code to use it in PyTorch

We provide an out-of-the-box 4-bit optimizer: you only need to replace the original optimizer with the 4-bit one. Low-precision versions of Adam and SGD are currently supported. We also provide an interface for modifying quantization parameters to support customized usage scenarios.

import lpmm
optimizer = lpmm.optim.AdamW(model.parameters(), lr=1e-3, betas=(0.9, 0.999))

The above is the detailed content of LLaMA fine-tuning reduces memory requirements by half, Tsinghua proposes 4-bit optimizer. For more information, please follow other related articles on the PHP Chinese website!

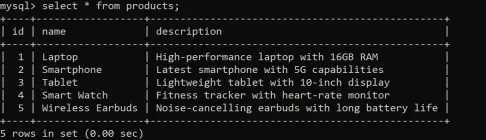

How to Use Aliases in SQL? - Analytics VidhyaApr 21, 2025 am 10:30 AM

How to Use Aliases in SQL? - Analytics VidhyaApr 21, 2025 am 10:30 AMSQL alias: A tool to improve the readability of SQL queries Do you think there is still room for improvement in the readability of your SQL queries? Then try the SQL alias! Alias This convenient tool allows you to give temporary nicknames to tables and columns, making your queries clearer and easier to process. This article discusses all use cases for aliases clauses, such as renaming columns and tables, and combining multiple columns or subqueries. Overview SQL alias provides temporary nicknames for tables and columns to enhance the readability and manageability of queries. SQL aliases created with AS keywords simplify complex queries by allowing more intuitive table and column references. Examples include renaming columns in the result set, simplifying table names in the join, and combining multiple columns into one

Code Execution with Google Gemini FlashApr 21, 2025 am 10:14 AM

Code Execution with Google Gemini FlashApr 21, 2025 am 10:14 AMGoogle's Gemini: Code Execution Capabilities of Large Language Models Large Language Models (LLMs), successors to Transformers, have revolutionized Natural Language Processing (NLP) and Natural Language Understanding (NLU). Initially replacing rule-

Tree of Thoughts Method in AI - Analytics VidhyaApr 21, 2025 am 10:11 AM

Tree of Thoughts Method in AI - Analytics VidhyaApr 21, 2025 am 10:11 AMUnlocking AI's Potential: A Deep Dive into the Tree of Thoughts Technique Imagine navigating a dense forest, each path promising a different outcome, your goal: discovering hidden treasure. This analogy perfectly captures the essence of the Tree of

How to Implement Normalization with SQL?Apr 21, 2025 am 10:05 AM

How to Implement Normalization with SQL?Apr 21, 2025 am 10:05 AMIntroduction Imagine transforming a cluttered garage into a well-organized, brightly lit space where everything is easily accessible and neatly arranged. In the world of databases, this process is called normalization. Just as a tidy garage improve

Delimiters in Prompt EngineeringApr 21, 2025 am 10:04 AM

Delimiters in Prompt EngineeringApr 21, 2025 am 10:04 AMPrompt Engineering: Mastering Delimiters for Superior AI Results Imagine crafting a gourmet meal: each ingredient measured precisely, each step timed perfectly. Prompt engineering for AI is similar; delimiters are your essential tools. Just as pre

6 Ways to Clean Up Your Database Using SQL REPLACE()Apr 21, 2025 am 09:57 AM

6 Ways to Clean Up Your Database Using SQL REPLACE()Apr 21, 2025 am 09:57 AMSQL REPLACE Functions: Efficient Data Cleaning and Text Operation Guide Have you ever needed to quickly fix large amounts of text in your database? SQL REPLACE functions can help a lot! It allows you to replace all instances of a specific substring with a new substring, making it easy to clean up data. Imagine that your data is scattered with typos—REPLACE can solve this problem immediately. Read on and I'll show you the syntax and some cool examples to get you started. Overview The SQL REPLACE function can efficiently clean up data by replacing specific substrings in text with other substrings. Use REPLACE(string, old

R-CNN vs R-CNN Fast vs R-CNN Faster vs YOLO - Analytics VidhyaApr 21, 2025 am 09:52 AM

R-CNN vs R-CNN Fast vs R-CNN Faster vs YOLO - Analytics VidhyaApr 21, 2025 am 09:52 AMObject Detection: From R-CNN to YOLO – A Journey Through Computer Vision Imagine a computer not just seeing, but understanding images. This is the essence of object detection, a pivotal area in computer vision revolutionizing machine-world interactio

What is KL Divergence that Revolutionized Machine Learning? - Analytics VidhyaApr 21, 2025 am 09:49 AM

What is KL Divergence that Revolutionized Machine Learning? - Analytics VidhyaApr 21, 2025 am 09:49 AMKullback-Leibler (KL) Divergence: A Deep Dive into Relative Entropy Few mathematical concepts have as profoundly impacted modern machine learning and artificial intelligence as Kullback-Leibler (KL) divergence. This powerful metric, also known as re

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

PhpStorm Mac version

The latest (2018.2.1) professional PHP integrated development tool

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft