What is KL Divergence that Revolutionized Machine Learning? - Analytics Vidhya

Kullback-Leibler (KL) Divergence: A Deep Dive into Relative Entropy

Few mathematical concepts have impacted modern machine learning and artificial intelligence as profoundly as Kullback-Leibler (KL) divergence. This powerful measure, also known as relative entropy or information gain, is now essential across numerous fields, from statistical inference to the cutting edge of deep learning. This article explores KL divergence, its origins, its applications, and its crucial role in the age of big data and AI.

Key Takeaways

- KL divergence quantifies the difference between two probability distributions.

- It has been a game-changer in machine learning and information theory, and computing it requires only two probability distributions defined over the same event space.

- It measures the extra information needed to encode data from one distribution using the code optimized for another.

- KL divergence is vital in training advanced generative models like diffusion models, optimizing noise distribution, and improving text-to-image generation.

- Its strong theoretical foundation, flexibility, scalability, and interpretability make it invaluable for complex models.

Table of Contents

- Introduction to KL Divergence

- KL Divergence: Essential Components and Transformative Influence

- Understanding KL Divergence: A Step-by-Step Guide

- KL Divergence in Diffusion Models: A Revolutionary Application

- Advantages of KL Divergence

- Real-World Applications of KL Divergence

- Frequently Asked Questions

Introduction to KL Divergence

KL divergence measures how much one probability distribution differs from a second, reference distribution. For example, if two models assign probabilities to the same set of outcomes, KL divergence quantifies how far one model's predictions are from the other's.

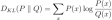

For discrete probability distributions P and Q defined over the same event space, the KL divergence from Q to P is:

$$D_{KL}(P \parallel Q) = \sum_{x} P(x) \log \frac{P(x)}{Q(x)}$$

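Although the formula may look complex at first, it has an intuitive reading: it is the average amount of extra information (in bits, when the logarithm is base 2) needed to encode data drawn from P using a code optimized for Q instead of one optimized for P.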
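To make the encoding interpretation concrete, here is a minimal sketch that assumes ideal code lengths of -log2 Q(x) bits per outcome (real prefix codes round these to whole bits). The distributions `p` and `q` are made-up values chosen for illustration.

```python
import math

# Illustrative distributions over the same three outcomes (assumed values).
p = [0.5, 0.25, 0.25]   # true distribution P that generates the data
q = [0.25, 0.25, 0.5]   # distribution Q that the code was optimized for

def expected_code_length(data_dist, code_dist):
    """Average bits per outcome when outcomes drawn from data_dist are encoded
    with ideal code lengths of -log2(code_dist[x]) bits."""
    return sum(px * -math.log2(qx) for px, qx in zip(data_dist, code_dist) if px > 0)

optimal_bits = expected_code_length(p, p)     # best possible: the entropy of P
mismatched_bits = expected_code_length(p, q)  # cost of using Q's code instead
extra_bits = mismatched_bits - optimal_bits   # the KL divergence D_KL(P || Q) in bits

print(f"optimal = {optimal_bits:.3f} bits, with Q's code = {mismatched_bits:.3f} bits")
print(f"extra = {extra_bits:.3f} bits")
```

With these values, encoding with Q's code costs 1.75 bits per outcome instead of the optimal 1.5, so the KL divergence is 0.25 bits.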

KL Divergence: Essential Components and Transformative Influence

Calculating KL divergence requires:

- Two probability distributions defined over the same event space.

- A logarithm: base 2 gives the result in bits, while the natural logarithm gives it in nats.
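A minimal Python sketch of these two requirements, assuming distributions are given as dictionaries mapping outcomes to probabilities (a representation chosen here for illustration; `kl_divergence` is a hypothetical helper, not a library function). The `base` parameter selects bits (2) or nats (e); the two results differ only by a constant factor of ln 2.

```python
import math

def kl_divergence(p, q, base=math.e):
    """D_KL(P || Q) for discrete distributions given as {outcome: probability}.

    Both distributions must be defined over the same event space.
    """
    if set(p) != set(q):
        raise ValueError("P and Q must be defined over the same event space")
    total = 0.0
    for x, px in p.items():
        if px == 0:
            continue  # 0 * log(0 / Q(x)) is taken to be 0 by convention
        if q[x] == 0:
            return math.inf  # P puts probability where Q puts none
        total += px * math.log(px / q[x], base)
    return total

# Illustrative weather forecasts (assumed values).
p = {"rain": 0.7, "sun": 0.3}
q = {"rain": 0.5, "sun": 0.5}

nats = kl_divergence(p, q)          # natural logarithm
bits = kl_divergence(p, q, base=2)  # base-2 logarithm
print(nats, bits, nats / math.log(2))  # last two agree up to floating-point rounding
```

Returning `math.inf` when Q assigns zero probability to an outcome that P considers possible reflects the fact that no finite code optimized for Q can encode that outcome.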

With these simple inputs, KL divergence has revolutionized various fields:

- Machine Learning: Crucial in variational inference and generative models (e.g., VAEs), measuring how well a model approximates the true data distribution.

- Information Theory: Provides a fundamental measure of information content and compression efficiency.

- Statistical Inference: Essential in hypothesis testing and model selection.

- Natural Language Processing: Used in topic modeling and language model evaluation.

- Reinforcement Learning: Aids in policy optimization and exploration strategies.
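As one concrete instance of the machine-learning use above, VAEs commonly include a KL term that pulls the encoder's diagonal Gaussian N(mu, sigma^2) toward a standard normal prior. For that pair the KL has a closed form, per latent dimension 0.5 * (sigma^2 + mu^2 - 1 - ln sigma^2). The sketch below assumes the encoder outputs a mean `mu` and a log-variance `logvar` for each latent dimension; the function name and input values are illustrative.

```python
import math

def vae_kl_term(mu, logvar):
    """KL( N(mu, diag(exp(logvar))) || N(0, I) ), summed over latent dimensions.

    Uses the closed form 0.5 * (sigma^2 + mu^2 - 1 - log sigma^2) per dimension.
    """
    return 0.5 * sum(math.exp(lv) + m * m - 1 - lv for m, lv in zip(mu, logvar))

print(vae_kl_term([0.0, 0.0], [0.0, 0.0]))    # 0.0: the posterior equals the prior
print(vae_kl_term([1.0, -0.5], [0.2, -0.3]))  # positive: the posterior deviates
```

Working with the log-variance rather than the variance is a common choice because it keeps sigma^2 positive without constraining the network's output.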

Understanding KL Divergence: A Step-by-Step Guide

Let's dissect KL divergence:

- Probability Comparison: We compare the probability of each event under distributions P and Q.

- Ratio Calculation: We compute the ratio P(x)/Q(x), showing how much more (or less) likely each event is under P compared to Q.

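- Logarithmic Transformation: We take the logarithm of this ratio. An individual log ratio is positive where P assigns more probability than Q and negative where it assigns less, so single terms can be negative. After weighting and summing, however, the total is always non-negative (Gibbs' inequality), and it is zero only when P and Q are identical.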

- Weighting: We weight the log ratio by P(x), emphasizing events more likely under P.

- Summation: Finally, we sum the weighted log ratios across all events.

The result is a single value representing the difference between P and Q. Note that KL divergence is asymmetric: in general, D_KL(P || Q) ≠ D_KL(Q || P). This asymmetry is a key feature, since the result depends on which distribution is treated as the reference.
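The five steps map directly onto a short loop. This is a minimal sketch in nats, assuming both distributions are equal-length lists over the same outcomes (`kl_step_by_step` and the example values are illustrative); it then evaluates both directions to show the asymmetry.

```python
import math

def kl_step_by_step(p, q):
    """D_KL(P || Q) in nats, following the five steps described above.

    p and q are equal-length sequences of probabilities over the same outcomes.
    """
    total = 0.0
    for px, qx in zip(p, q):         # 1. compare P(x) and Q(x) event by event
        if px == 0:
            continue                 # events impossible under P contribute 0
        if qx == 0:
            return math.inf          # P has probability where Q has none
        ratio = px / qx              # 2. ratio P(x) / Q(x)
        log_ratio = math.log(ratio)  # 3. log ratio (negative when Q(x) > P(x))
        total += px * log_ratio      # 4. weight by P(x), then 5. sum over events
    return total

# Illustrative distributions over two outcomes (assumed values).
p = [0.9, 0.1]
q = [0.5, 0.5]
forward = kl_step_by_step(p, q)  # D_KL(P || Q)
reverse = kl_step_by_step(q, p)  # D_KL(Q || P)
print(f"D_KL(P || Q) = {forward:.4f} nats")
print(f"D_KL(Q || P) = {reverse:.4f} nats")
```

The two directions come out to about 0.3681 and 0.5108 nats. In the forward direction the second outcome's log ratio, ln(0.1 / 0.5), is negative, yet the weighted sum is still positive.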

KL Divergence in Diffusion Models: A Revolutionary Application

Diffusion models, like DALL-E 2 and Stable Diffusion, are a prime example of KL divergence's power. They generate remarkably realistic images from text descriptions.

KL divergence's role in diffusion models includes:

- Training: Measures the difference between the true denoising distribution (the posterior of the forward noising process) and the model's estimate of it at each step, enabling the model to effectively reverse the diffusion process.

- Variational Lower Bound: Often used in the training objective, ensuring generated samples closely match the data distribution.

- Latent Space Regularization: Helps regularize the latent space, ensuring well-behaved representations.

- Model Comparison: Used to compare different diffusion model architectures.

- Conditional Generation: In text-to-image models, it measures how well generated images match text descriptions.
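In DDPM-style diffusion models, each per-step term of the variational lower bound is a KL divergence between two Gaussians: the forward-process posterior and the model's denoising distribution. Gaussians admit a closed-form KL, so no sampling is required. The sketch below is univariate (real models apply this per dimension with diagonal covariances), and the parameter values are hypothetical; a Monte Carlo estimate is included only to cross-check the formula.

```python
import math
import random

def gaussian_kl(mu1, sigma1, mu2, sigma2):
    """Closed-form D_KL( N(mu1, sigma1^2) || N(mu2, sigma2^2) ) in nats."""
    return (math.log(sigma2 / sigma1)
            + (sigma1 ** 2 + (mu1 - mu2) ** 2) / (2 * sigma2 ** 2)
            - 0.5)

def gaussian_log_pdf(x, mu, sigma):
    """Log density of N(mu, sigma^2) at x."""
    return -0.5 * math.log(2 * math.pi * sigma ** 2) - (x - mu) ** 2 / (2 * sigma ** 2)

def monte_carlo_kl(mu1, sigma1, mu2, sigma2, n=100_000, seed=0):
    """Estimate the same KL by averaging log p(x) - log q(x) over samples x ~ P."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        x = rng.gauss(mu1, sigma1)
        total += gaussian_log_pdf(x, mu1, sigma1) - gaussian_log_pdf(x, mu2, sigma2)
    return total / n

# Hypothetical "true posterior" vs. "model prediction" for one denoising step.
exact = gaussian_kl(0.30, 0.10, 0.25, 0.12)
approx = monte_carlo_kl(0.30, 0.10, 0.25, 0.12)
print(exact, approx)  # the sampled estimate should land close to the closed form
```

Because the closed form is exact and differentiable in the model's mean and variance, it gives a lower-variance training signal than the sampled estimate.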

Advantages of KL Divergence

KL divergence's strengths include:

- Strong Theoretical Basis: Grounded in information theory, offering interpretability in terms of information bits.

- Flexibility: Applicable to both discrete and continuous distributions.

- Scalability: Effective in high-dimensional spaces, suitable for complex machine learning models.

- Mathematical Properties: It is non-negative and jointly convex in the pair (P, Q), properties that are beneficial for optimization.

- Interpretability: Its asymmetry is intuitively understood in terms of encoding and compression.
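The non-negativity and convexity properties can be spot-checked numerically. This sketch draws random distribution pairs (an illustrative test, not a proof) and checks that D_KL is at least zero and that it is jointly convex: for lam in [0, 1], D_KL(lam*P1 + (1-lam)*P2 || lam*Q1 + (1-lam)*Q2) <= lam*D_KL(P1 || Q1) + (1-lam)*D_KL(P2 || Q2). The helper names and the seed are arbitrary choices.

```python
import math
import random

def kl(p, q):
    """D_KL(P || Q) in nats; assumes q[i] > 0 wherever p[i] > 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def random_distribution(rng, k):
    """A random strictly positive probability vector of length k."""
    weights = [rng.random() + 1e-9 for _ in range(k)]
    total = sum(weights)
    return [w / total for w in weights]

def mix(a, b, lam):
    """Pointwise mixture lam * a + (1 - lam) * b."""
    return [lam * ai + (1 - lam) * bi for ai, bi in zip(a, b)]

rng = random.Random(42)
violations = 0
for _ in range(10_000):
    p1, q1, p2, q2 = (random_distribution(rng, 4) for _ in range(4))
    lam = rng.random()
    if kl(p1, q1) < -1e-12:
        violations += 1  # non-negativity failed
    lhs = kl(mix(p1, p2, lam), mix(q1, q2, lam))
    rhs = lam * kl(p1, q1) + (1 - lam) * kl(p2, q2)
    if lhs > rhs + 1e-12:
        violations += 1  # joint convexity failed
print("violations:", violations)
```

The small tolerances absorb floating-point rounding; the check should report zero violations.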

Real-World Applications of KL Divergence

KL divergence's impact extends to various applications:

- Recommendation Systems: Used to measure how well models predict user preferences.

- Image Generation: Essential in training AI image generation models.

- Language Models: Plays a role in training chatbots and other language models.

- Climate Modeling: Used to compare and assess the reliability of climate models.

- Financial Risk Assessment: Utilized in risk models for market prediction.

Conclusion

KL divergence is a powerful tool that extends beyond pure mathematics, impacting machine learning, market predictions, and more. Its importance in our data-driven world is undeniable. As AI and data analysis advance, KL divergence's role will only become more significant.

Frequently Asked Questions

Q1. What does “KL” stand for? A: Kullback-Leibler, named after Solomon Kullback and Richard Leibler.

Q2. Is KL divergence a distance metric? A: No. It is asymmetric and does not satisfy the triangle inequality, so it is not a true distance metric.
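A quick counterexample shows the triangle inequality failing. The three two-outcome distributions below are arbitrary illustrative values; a true metric would require the direct divergence from P to Q to be no larger than the detour through R.

```python
import math

def kl(p, q):
    """D_KL(P || Q) in nats; assumes q[i] > 0 wherever p[i] > 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

p = [0.9, 0.1]
r = [0.5, 0.5]
q = [0.1, 0.9]

direct = kl(p, q)            # straight from P to Q
via_r = kl(p, r) + kl(r, q)  # detour through R
print(f"direct = {direct:.4f}, via R = {via_r:.4f}")
# A metric would need direct <= via_r; here direct is roughly twice as large.
```

Here the direct divergence is about 1.7578 nats, while the detour through R totals only about 0.8789 nats.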

Q3. Can KL divergence be negative? A: No, it is always non-negative, and it equals zero only when the two distributions are identical.

Q4. How is KL divergence used in machine learning? A: In model selection, variational inference, and evaluating generative models.

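Q5. What’s the difference between KL divergence and cross-entropy? A: Cross-entropy equals the entropy of the true distribution plus the KL divergence: H(P, Q) = H(P) + D_KL(P || Q). Because H(P) does not depend on the model Q, minimizing cross-entropy with respect to Q is equivalent to minimizing KL divergence.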
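The identity behind this answer takes one line of algebra, adding and subtracting the term sum_x P(x) log P(x):

```latex
\begin{aligned}
H(P, Q) &= -\sum_x P(x) \log Q(x) \\
        &= -\sum_x P(x) \log P(x) + \sum_x P(x) \log \frac{P(x)}{Q(x)} \\
        &= H(P) + D_{KL}(P \parallel Q)
\end{aligned}
```

Since H(P) is fixed by the data, only the KL term changes as the model Q is trained.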
