
NVIDIA develops AI 3D video chat solution for remote conference calls

- WBOY

- 2023-08-23 18:49:01

Video conferencing systems were first commercialized more than 50 years ago, allowing people to communicate audio-visually with colleagues, friends, or family members thousands of miles away. The ultimate goal of video conferencing is to enable immersive communication between remote participants, as if they were all in the same place.

Existing 3D video conferencing systems show potential for conveying eye contact and other non-verbal cues, but they require expensive 3D capture equipment.

In a project called "AI-Mediated 3D Video Conferencing", teams from NVIDIA, the University of California, San Diego, and the University of North Carolina at Chapel Hill used AI to develop a high-fidelity, low-cost 3D telepresence method. The method also provides new capabilities that approaches based on 3D scanning cannot offer.

In addition, the team's solution is compatible with a variety of existing 3D displays, including stereoscopic displays and light field displays.

Extended reading: Google uses AI to shrink the hardware in a new iteration of its light field calling project, Project Starline

It is worth noting that Google is also using artificial intelligence to improve its light field calling project, Project Starline. Simply put, Project Starline is a 3D video chat room that uses light field technology to make it feel as if the other person is sitting across from you. The tool combines Google's hardware and software advances to make remote conversations with friends, family, and colleagues more immersive.

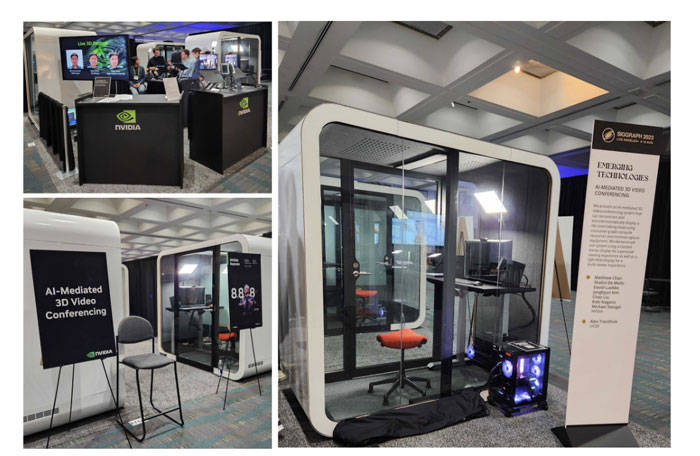

Returning to the "AI-Mediated 3D Video Conferencing" project: the NVIDIA team demonstrated the setup at SIGGRAPH and published a write-up about it.

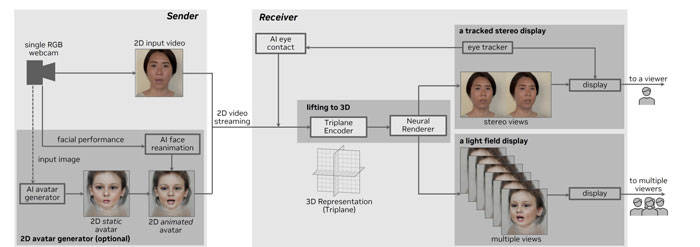

The illustrated system consists of a sender, which records and transmits 2D video from a single RGB webcam, and a receiver, which receives the 2D video, converts it to 3D, and presents novel 3D views.
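The sender/receiver split described above can be sketched roughly as follows. This is only an illustrative outline, not the team's implementation: the class names, the `lift_to_3d` placeholder, and the 640x480 frame size are all assumptions introduced here.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Frame2D:
    """One RGB webcam frame (pixel data omitted in this sketch)."""
    width: int
    height: int
    timestamp_ms: float


@dataclass
class View3D:
    """One novel view rendered for a specific viewer eye position."""
    eye_position: Vec3
    source_timestamp_ms: float


class Sender:
    """Captures and transmits plain 2D video; no 3D capture hardware."""

    def capture(self, timestamp_ms: float) -> Frame2D:
        # Hypothetical resolution; a real sender would read from the webcam.
        return Frame2D(width=640, height=480, timestamp_ms=timestamp_ms)


class Receiver:
    """Lifts each received 2D frame to 3D and renders it for local viewers."""

    def lift_to_3d(self, frame: Frame2D) -> Dict[str, Frame2D]:
        # Placeholder for the learned 2D-to-3D step; here it only wraps the frame.
        return {"source": frame}

    def render(self, representation: Dict[str, Frame2D],
               eye_positions: List[Vec3]) -> List[View3D]:
        # One rendered view per requested eye position.
        ts = representation["source"].timestamp_ms
        return [View3D(eye_position=e, source_timestamp_ms=ts) for e in eye_positions]
```

The point of the split is that all 3D work happens on the receiving side, so the sender needs nothing beyond an ordinary webcam and a 2D video stream.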

Using a one-shot approach, the researchers can infer and render a photorealistic 3D representation from a single unposed image in real time, and generate light field images on an NVIDIA RTX A5000 laptop. With instant AI super-resolution, participants can immediately see their own 3D image: the 2D webcam image is lifted into a head-tracked stereoscopic 3D view in real time.
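To make the idea of a head-tracked stereoscopic view concrete, the sketch below derives left and right eye positions from a tracked head position. It is a minimal geometric illustration, not the system's tracking code: the function name, the coordinate convention, and the default interpupillary distance of 0.063 m are assumptions.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def eye_positions(head: Vec3, right_dir: Vec3, ipd_m: float = 0.063) -> Tuple[Vec3, Vec3]:
    """Return (left_eye, right_eye) for a head center and a rightward direction.

    head      -- tracked head center, in metres (assumed display-centred frame)
    right_dir -- vector pointing to the viewer's right; normalized internally
    ipd_m     -- interpupillary distance in metres (assumed default)
    """
    norm = math.sqrt(sum(c * c for c in right_dir))
    if norm == 0.0:
        raise ValueError("right_dir must be non-zero")
    unit = tuple(c / norm for c in right_dir)
    half = ipd_m / 2.0
    # Offset the head center by half the IPD in each direction.
    left = tuple(h - half * u for h, u in zip(head, unit))
    right = tuple(h + half * u for h, u in zip(head, unit))
    return left, right
```

Each eye position would then serve as the virtual camera for one of the two rendered views, updated whenever the tracker reports a new head position.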

Instead of a webcam image, users can also use the 2D Avatar Generator module to generate and customize a user-driven 2D avatar.

The researchers made significant progress in 3D lifting by proposing a new Vision Transformer-based encoder that converts 2D input into an efficient triplane-based implicit 3D representation. Given a single RGB image of the user, the method automatically creates a frontalized 3D representation of the user and efficiently renders it from new viewpoints through volumetric rendering.
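The following sketch illustrates the two generic building blocks named above: looking up features in a triplane representation and compositing samples along a ray. It follows the commonly described triplane scheme (project the point onto three planes, sample bilinearly, sum the features) and standard emission-absorption compositing; it is not the project's code, and the function names, plane layout, and coordinate ranges are assumptions.

```python
import math
from typing import List, Sequence

Plane = List[List[List[float]]]  # indexed as [row][col][channel]


def bilinear(plane: Plane, u: float, v: float) -> List[float]:
    """Bilinearly sample a feature plane at normalized coordinates u, v in [0, 1]."""
    h, w = len(plane), len(plane[0])
    x = min(max(u, 0.0), 1.0) * (w - 1)
    y = min(max(v, 0.0), 1.0) * (h - 1)
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    out = []
    for c in range(len(plane[0][0])):
        top = plane[y0][x0][c] * (1 - fx) + plane[y0][x1][c] * fx
        bottom = plane[y1][x0][c] * (1 - fx) + plane[y1][x1][c] * fx
        out.append(top * (1 - fy) + bottom * fy)
    return out


def sample_triplane(planes: Sequence[Plane], point: Sequence[float]) -> List[float]:
    """Sum the features from the XY, XZ and YZ planes for a point in [0, 1]^3."""
    x, y, z = point
    f_xy = bilinear(planes[0], x, y)
    f_xz = bilinear(planes[1], x, z)
    f_yz = bilinear(planes[2], y, z)
    return [a + b + c for a, b, c in zip(f_xy, f_xz, f_yz)]


def composite(densities: Sequence[float], colors: Sequence[float], step: float) -> float:
    """Accumulate one color channel along a ray, front to back."""
    transmittance = 1.0  # fraction of light not yet absorbed
    pixel = 0.0
    for sigma, color in zip(densities, colors):
        alpha = 1.0 - math.exp(-sigma * step)  # opacity of this ray segment
        pixel += transmittance * alpha * color
        transmittance *= 1.0 - alpha
    return pixel
```

In a full renderer, a small decoder network would turn each summed feature vector into a density and a color before compositing; that step is omitted here.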

The triplane encoder is trained entirely on synthetic data generated by a pre-trained EG3D model. The 3D lifting module uses generative priors to ensure that the generated views are multi-view consistent and photorealistic, and it can be applied to anyone in a one-shot manner without person-specific training.

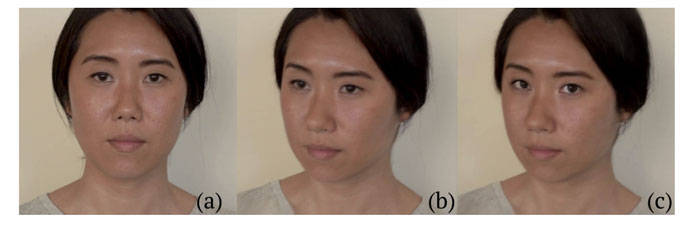

To achieve eye contact, the team used state-of-the-art neural methods to synthesize a redirected eye gaze for a given user image. The gaze-corrected 2D image is then lifted to 3D, as shown in the figure.

The system supports a variety of off-the-shelf 3D displays, including single-person stereoscopic displays and multi-person light field displays.

The figure shows a 32-inch 3D stereoscopic display from Dimenco, which uses eye tracking and lens technology to render stereoscopic images matched to the position of the user's eyes. Panel (a) provides an overview, while (b) and (c) demonstrate the system's ability to record stereoscopic images of participants at the correct perspective. Panels (d) and (e) illustrate that, given a single RGB image, the method can generate realistic telepresence effects.

In addition, the researchers used a 32-inch Looking Glass display to evaluate the AI system. The display can show a life-size talking head that multiple people can view clearly at the same time. This light field display gave general audiences, and those waiting in line for a demo, a clear demonstration of the technology.

Those waiting in line could then try the 3D displays at different booths and experience a multi-way, AI-mediated 3D video conference call.

Extended reading: AI-Mediated 3D Video Conferencing

Extended reading: Live 3D Portrait: Real-Time Radiance Fields for Single-Image Portrait View Synthesis

It is worth noting that the team optimized the encoder with NVIDIA TensorRT to enable real-time inference on an NVIDIA A6000 Ada Generation GPU. The entire system runs in less than 100 milliseconds end to end, including capture, streaming, and rendering.
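An end-to-end latency target like this is typically checked by timing each stage and comparing the total against the budget. The sketch below shows one way to do that; only the 100 ms total comes from the article, while the helper names and the idea of timing stages as separate callables are assumptions for illustration. No per-stage figures are implied.

```python
import time
from typing import Callable, Dict

BUDGET_MS = 100.0  # end-to-end figure quoted in the article


def time_stages(stages: Dict[str, Callable[[], None]]) -> Dict[str, float]:
    """Run each stage once, in order, and return its wall-clock time in ms."""
    timings = {}
    for name, fn in stages.items():
        start = time.perf_counter()
        fn()
        timings[name] = (time.perf_counter() - start) * 1000.0
    return timings


def within_budget(timings: Dict[str, float], budget_ms: float = BUDGET_MS) -> bool:
    """True if the summed stage times stay strictly under the budget."""
    return sum(timings.values()) < budget_ms
```

In practice, `stages` would map names such as capture, streaming, and rendering to the functions that perform one frame's worth of work, and the check would be repeated over many frames rather than a single run.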
