DragGAN gains 23k stars within three days of being open-sourced, and now comes DragDiffusion

- WBOY

- 2023-06-28 15:28:17

In the world of AIGC, we can now change and synthesize the image we want simply by dragging points on it. For example, we can make a lion turn its head and open its mouth:

This effect comes from the "Drag Your GAN" paper, whose first author is Chinese. The paper was released last month and has been accepted to SIGGRAPH 2023.

More than a month later, the research team has released the official code. Within just three days the repository passed 23k stars, which shows how popular the work is.


GitHub address: https://github.com/XingangPan/DragGAN

Coincidentally, a similar study, DragDiffusion, appeared today. DragGAN implemented point-based interactive image editing and achieved pixel-level editing precision. It has a shortcoming, however: because it is built on a generative adversarial network (GAN), its generality is limited by the capacity of the pre-trained GAN model.

In the new work, researchers from the National University of Singapore and ByteDance extend this editing framework to diffusion models and propose DragDiffusion. By leveraging large-scale pre-trained diffusion models, they greatly improve the applicability of point-based interactive editing in real-world scenarios.

Most current diffusion-based image editing methods operate on text embeddings. DragDiffusion instead optimizes the diffusion latent representation, which gives it precise spatial control.


- Paper address: https://arxiv.org/pdf/2306.14435.pdf

- Project address: https://yujun-shi.github.io/projects/dragdiffusion.html

The researchers note that although a diffusion model generates images iteratively, optimizing the diffusion latent at a single step is enough to produce coherent results, which lets DragDiffusion perform high-quality edits efficiently.

They ran extensive experiments on a range of challenging cases (such as multiple objects and different object categories) to verify the flexibility and generality of DragDiffusion. The code will also be released soon.

Let’s take a look at the effect of DragDiffusion.

First, suppose we want to raise the head of the kitten in the picture below. The user only needs to drag the red point to the blue point:

Next, to make the mountain a little taller, just drag the red key point:


If you want to turn the head of the sculpture, you can do it by dragging:


Let the flowers on the shore bloom wider:

Method introduction

DragDiffusion, the method proposed in the paper, optimizes a specific diffusion latent variable to achieve interactive, point-based image editing.

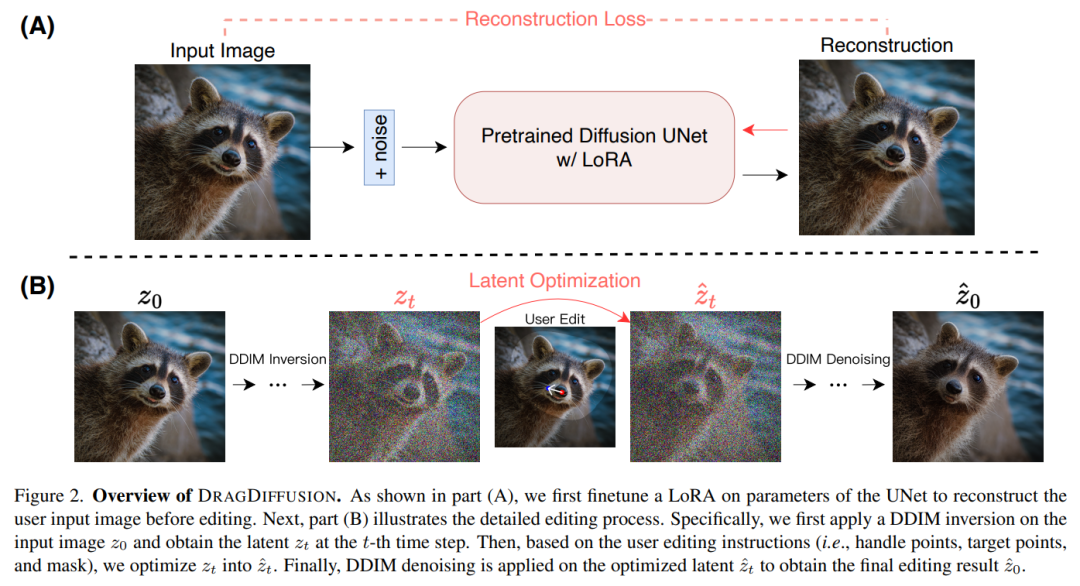

To achieve this, the method first fine-tunes a LoRA on the diffusion model so that it reconstructs the user's input image. This keeps the style of the input and output images consistent.
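For readers unfamiliar with LoRA, the idea can be sketched on a single linear layer: the pre-trained weight stays frozen and only a small low-rank correction is trained. The class below is a minimal NumPy illustration, not the paper's code; the name `LoRALinear`, the rank, and the `alpha` scaling are assumptions chosen for the example.

```python
import numpy as np

class LoRALinear:
    """Frozen weight W plus a trainable low-rank update (alpha / rank) * B @ A."""

    def __init__(self, weight, rank=4, alpha=4.0, seed=0):
        rng = np.random.default_rng(seed)
        out_dim, in_dim = weight.shape
        self.weight = weight                              # frozen pre-trained weight
        self.A = rng.normal(0.0, 0.01, (rank, in_dim))    # trainable down-projection
        self.B = np.zeros((out_dim, rank))                # trainable, zero init: no change at start
        self.scale = alpha / rank

    def effective_weight(self):
        # The full weight is never overwritten; the update is added on top.
        return self.weight + self.scale * (self.B @ self.A)

    def __call__(self, x):
        return x @ self.effective_weight().T

    def num_trainable(self):
        return self.A.size + self.B.size


# Hypothetical layer sizes, for illustration only.
rng = np.random.default_rng(1)
W = rng.normal(size=(64, 32))
layer = LoRALinear(W, rank=4)
x = rng.normal(size=(5, 32))
y = layer(x)  # identical to x @ W.T until B is trained away from zero
```

Because `B` starts at zero, the adapted layer initially behaves exactly like the pre-trained one, and fine-tuning only has to learn `A` and `B`, which together hold far fewer values than `W`.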

Next, the researchers apply DDIM inversion to the input image to obtain the diffusion latent variable at a specific step t. DDIM inversion runs the deterministic DDIM sampling process in reverse, mapping an image back to the noisier latent that would regenerate it.
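A minimal sketch of DDIM inversion follows, using the standard deterministic DDIM update (eta = 0). The noise schedule `abars` and the stand-in noise predictor `toy_eps_model` are assumptions for illustration; a real pipeline would call a trained U-Net and work on VAE latents. The sketch also includes the matching denoising pass so the round trip can be checked.

```python
import numpy as np

def ddim_step(x, eps, abar_from, abar_to):
    """Deterministic DDIM update (eta = 0) from one noise level to another."""
    # Estimate the clean latent implied by the current latent and predicted noise.
    x0_pred = (x - np.sqrt(1.0 - abar_from) * eps) / np.sqrt(abar_from)
    # Re-noise that estimate to the destination noise level with the same noise.
    return np.sqrt(abar_to) * x0_pred + np.sqrt(1.0 - abar_to) * eps

def ddim_invert(x0, eps_model, abars, t_stop):
    """Inversion: walk a clean latent from step 0 up to step t_stop."""
    x = x0
    for t in range(t_stop):
        x = ddim_step(x, eps_model(x, t), abars[t], abars[t + 1])
    return x

def ddim_denoise(x_t, eps_model, abars, t_start):
    """Sampling: walk a latent from step t_start back down to step 0."""
    x = x_t
    for t in range(t_start, 0, -1):
        x = ddim_step(x, eps_model(x, t), abars[t], abars[t - 1])
    return x

# Toy setup (hypothetical): 50 noise levels, step 0 is nearly clean.
abars = np.linspace(0.999, 0.2, 50)
fixed_eps = np.random.default_rng(0).normal(size=(4, 8, 8))
toy_eps_model = lambda x, t: fixed_eps   # stand-in for a trained noise predictor

latent0 = np.random.default_rng(1).normal(size=(4, 8, 8))
latent_t = ddim_invert(latent0, toy_eps_model, abars, t_stop=35)
restored = ddim_denoise(latent_t, toy_eps_model, abars, t_start=35)
```

With this constant toy predictor the round trip is exact up to floating-point error. With a real network the predicted noise changes from step to step, so inversion is only approximate.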

During editing, the method repeatedly alternates motion supervision and point tracking to optimize the step-t diffusion latent obtained above, thereby "dragging" the content at each handle point to its target location. A regularization term is also applied so that the regions outside the editing mask remain unchanged.

Finally, DDIM denoising is applied to the optimized step-t latent to produce the edited result. The paper's overview diagram summarizes the whole pipeline.
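The article names motion supervision, point tracking, and the mask regularizer without giving formulas. The sketch below follows the general formulation used in DragGAN-style editing, simplified to NumPy: it only evaluates the quantities, whereas a real implementation would take gradients with respect to the latent using an autograd framework. All function names, the square neighborhoods, and the radii are assumptions for illustration.

```python
import numpy as np

def bilinear_sample(feat, y, x):
    """Sample a (C, H, W) feature map at a fractional location (y, x)."""
    _, H, W = feat.shape
    y, x = np.clip(y, 0, H - 1), np.clip(x, 0, W - 1)
    y0, x0 = int(np.floor(y)), int(np.floor(x))
    y1, x1 = min(y0 + 1, H - 1), min(x0 + 1, W - 1)
    wy, wx = y - y0, x - x0
    top = (1 - wx) * feat[:, y0, x0] + wx * feat[:, y0, x1]
    bottom = (1 - wx) * feat[:, y1, x0] + wx * feat[:, y1, x1]
    return (1 - wy) * top + wy * bottom

def motion_supervision_loss(feat, handle, target, radius=1):
    """L1 gap between features one unit step toward the target and the
    features currently around the handle point."""
    h, g = np.asarray(handle, float), np.asarray(target, float)
    dist = np.linalg.norm(g - h)
    if dist < 1e-8:
        return 0.0                       # handle already sits on the target
    d = (g - h) / dist                   # unit direction from handle to target
    loss = 0.0
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            qy, qx = h[0] + dy, h[1] + dx
            shifted = bilinear_sample(feat, qy + d[0], qx + d[1])
            current = bilinear_sample(feat, qy, qx)  # held constant (stop-gradient) when optimizing
            loss += np.abs(shifted - current).sum()
    return float(loss)

def track_point(feat, ref_feature, handle, radius=2):
    """Return the pixel near `handle` whose feature is closest (L1) to the
    feature the handle had before editing started."""
    _, H, W = feat.shape
    hy, hx = handle
    best, best_dist = (hy, hx), np.inf
    for y in range(max(0, hy - radius), min(H, hy + radius + 1)):
        for x in range(max(0, hx - radius), min(W, hx + radius + 1)):
            dist = np.abs(feat[:, y, x] - ref_feature).sum()
            if dist < best_dist:
                best, best_dist = (y, x), dist
    return best

def mask_regularizer(latent, latent_init, mask):
    """Penalize changes outside the editable region (mask == 1 is editable)."""
    return float(np.abs((latent - latent_init) * (1.0 - mask)).sum())
```

In an editing loop these pieces would alternate: take a gradient step on the latent that lowers `motion_supervision_loss` plus a weighted `mask_regularizer`, recompute the feature map, then call `track_point` to update each handle position before the next step.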


Experimental results

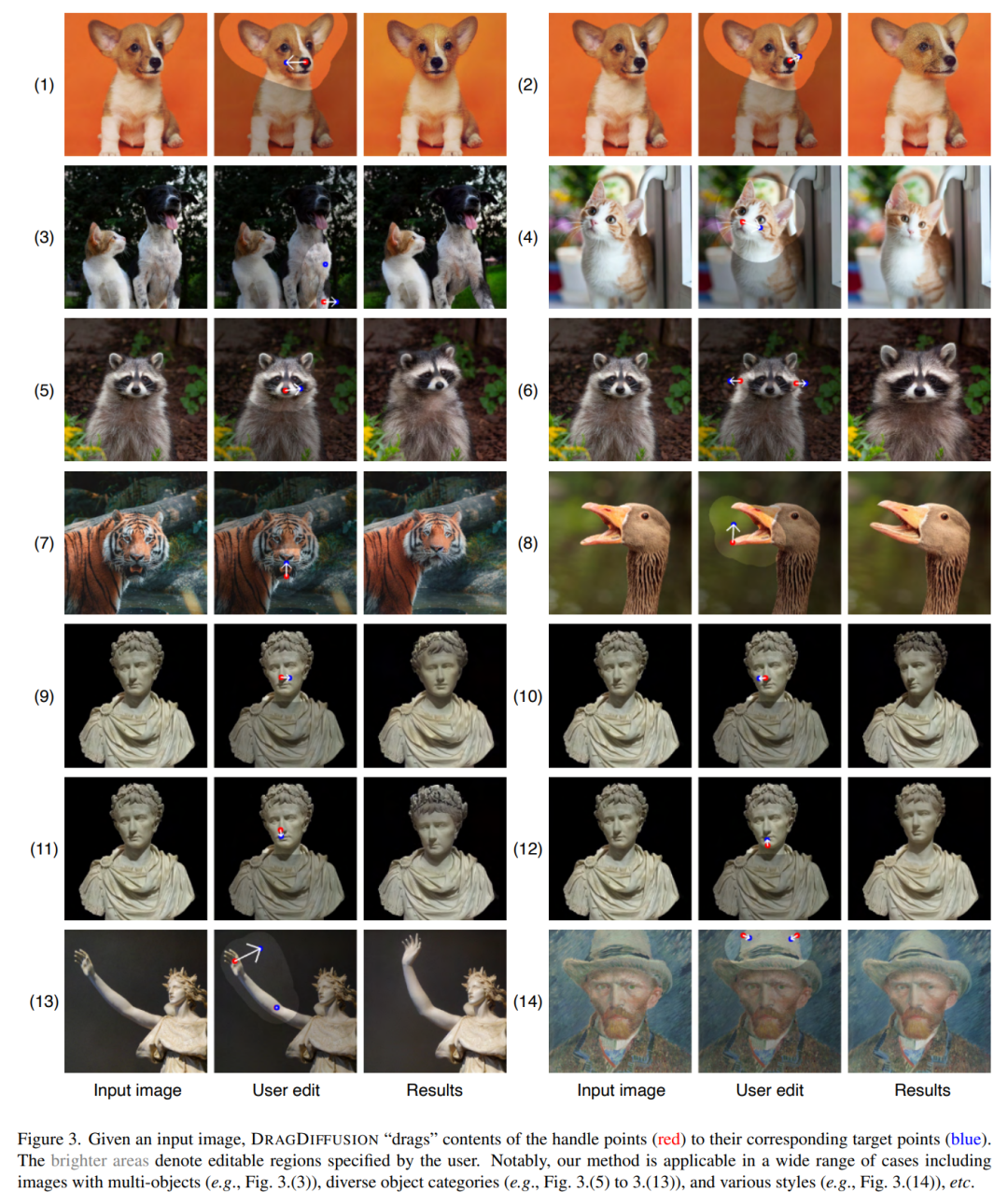

Given an input image, DragDiffusion "drags" the content at the key points (red) to the corresponding target points (blue). For example, in image (1) the puppy's head is turned, and in image (7) the tiger's mouth is closed.


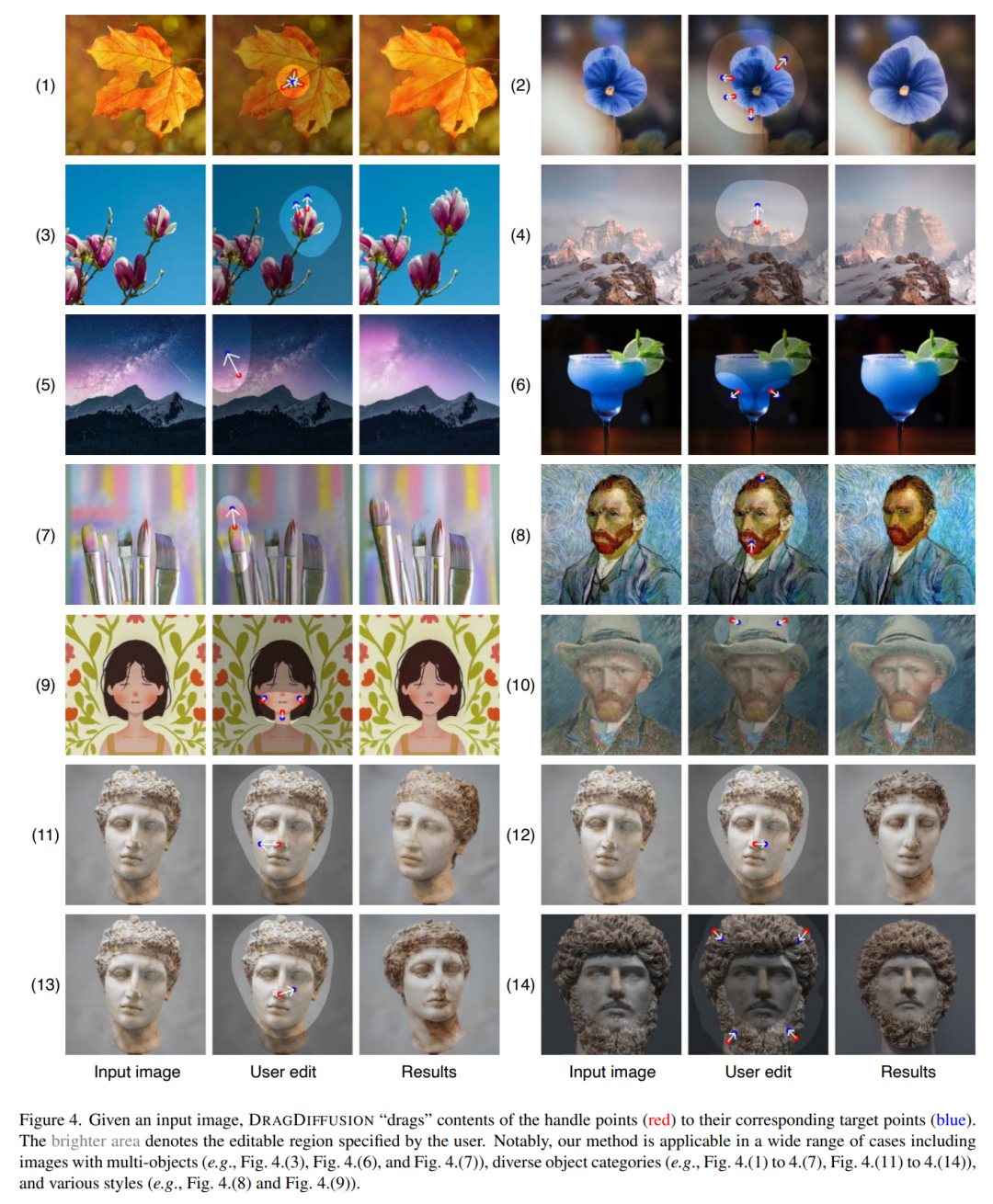

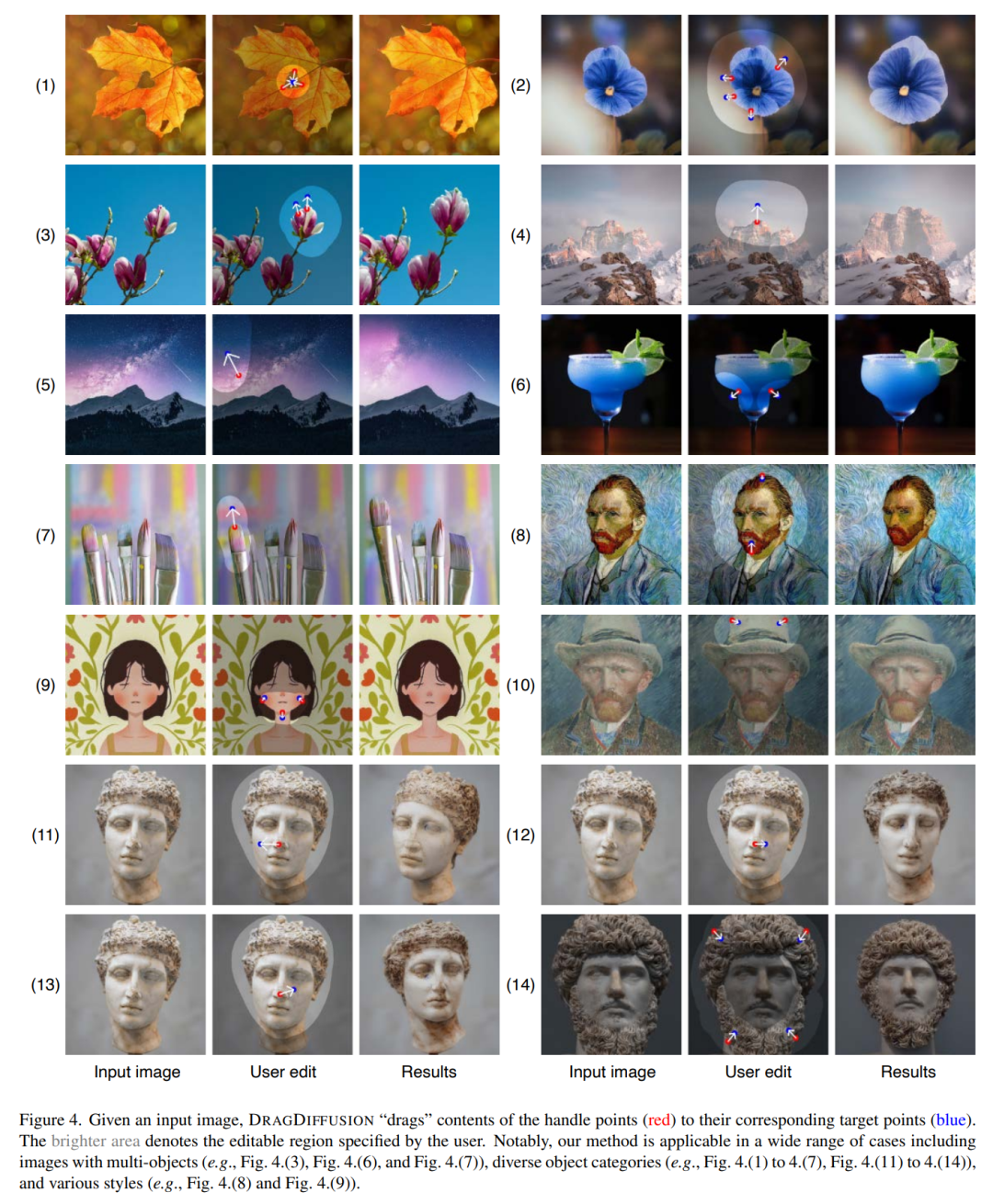

Here are more examples. In image (4) the mountain peak is made taller, in image (7) the pen tip is made larger, and so on.

