Home >Technology peripherals >AI >He wanted to avoid the exam 70 years ago, but he influenced the entire Internet

He wanted to avoid the exam 70 years ago, but he influenced the entire Internet

- WBOYWBOYWBOYWBOYWBOYWBOYWBOYWBOYWBOYWBOYWBOYWBOYWBforward

- 2023-06-27 12:51:32752browse

Who would have thought that a student's "willfulness" of not wanting to take an exam would later affect the entire Internet.

In an information theory class at MIT 70 years ago, a teacher posed a multiple-choice question in order to "reduce" students' stress.

Either take the final exam or write a paper to improve the existing algorithm. Choose yourself.

The teacher’s name was Robert Fanno. What he didn’t tell the students was that this “existing algorithm” was co-authored by him and Shannon, the founder of information theoryshannon-fanocoding. In order to improve the algorithm's shortcomings, he himself has invested a lot of time in research.

(Teacher’s inner OS: I didn’t expect it.)

Although it’s a bit damaging, this trick really works. This group of students didn't need to take the exam as soon as they heard "hand in a paper" and decided to write a paper after patting their heads, including David·Hafman.

You won’t know if you don’t choose, but you will be shocked if you choose. Huffman, who was just starting out, quickly realized the pitfall that the teacher had dug - this paper was too difficult to solve.

It took several months to write this, and despite struggling, Huffman still found nothing.

But fate is sometimes very strange. Just when Huffman finally gave up "skipping the exam" and was about to throw the paper notes into the trash can, he suddenly had an idea! The answer appears!

Huffman gave up research on existing codes and turned to new explorations, and finally discovered a method based on ordered frequency binary tree coding.

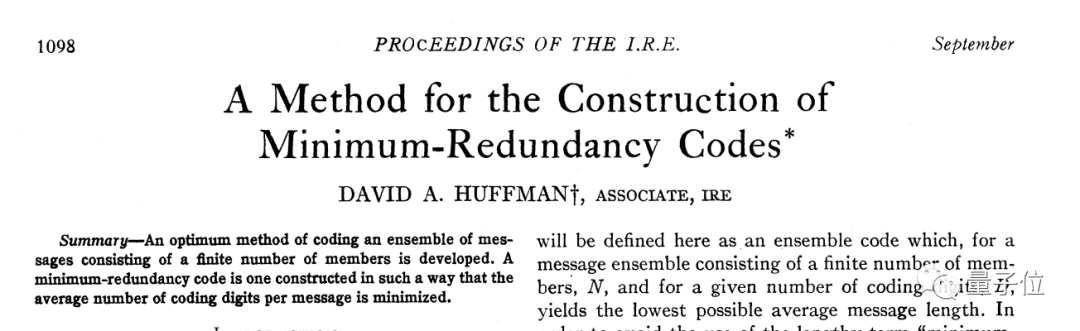

The efficiency of this idea he proposed successfully surpassed his teacher's methodology. Even in the subsequent development, the coding method named after him-Huffman coding directly changed the data compression paradigm.

As for the final report at that time, it has been quoted nearly 10,000 times.

Inefficient traditional coding methods

In 1951, Robert Fanno, who was teaching at MIT, was thinking about a difficult problem in information theory:

How to use binary codeefficiently represents numbers, letters or other symbols?

The most common and direct method at the time was to assign a unique binary number to each character.

For example, the letter A may be represented as 01000001,! Represented as 00100001, each eight-digit number corresponds to one character.

This makes the code easy to parse, but the efficiency is extremely low.

There is also an optimization method, similar to Morse code. The common letter E is represented by just a dot, but the uncommon Q requires a longer and more laborious "————··——".

This method will lead to different code lengths and the information is not easy to understand; moreover, gaps need to be added between characters during transmission, otherwise different character combinations cannot be distinguished.

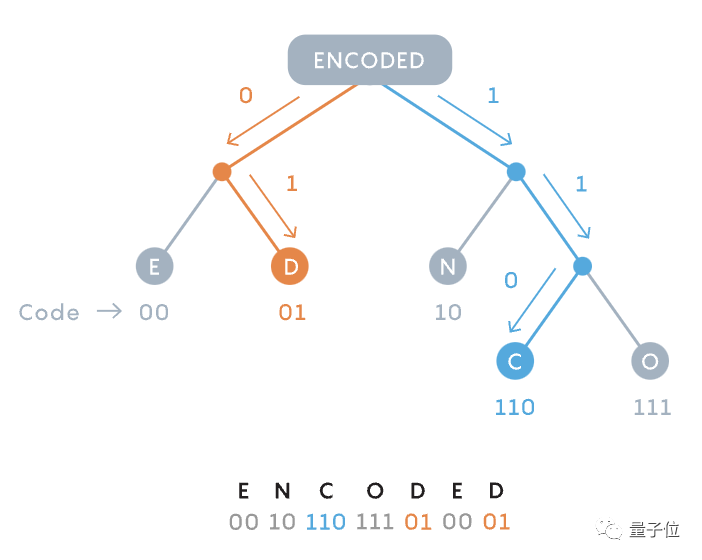

Fanno realized that perhaps the advantages of these two methods could be combined - representing characters in binary codes of different lengths. Furthermore, in order to avoid code "overlapping", he also constructed a binary tree.

Picture

Picture

He exhaustively tested the possibility of every permutation for maximum efficiency, and finally arrived at a valid situation:

Each message is divided into two branches according to frequency, and the frequency of use of letters on both sides should be basically the same as much as possible.

Picture

Picture

This way, commonly used characters will be on shorter, less dense branches.

In 1948, Shannon, the father of information theory, proposed this method in his article "Mathematical Theory of Communication" introducing information theory; soon after, Fanno also independently published it in the form of a technical report. Therefore, this method is called Shannon-Fano coding.

But this method does not always work. For example, when the probability of occurrence of letters is {0.35, 0.17, 0.17, 0.16, 0.15}, the ideal encoding cannot be given.

Fanno believes that there must be a better compression strategy. Ever since, such an important task was placed in the hands of his students.

A flash of inspiration, a paper of the century

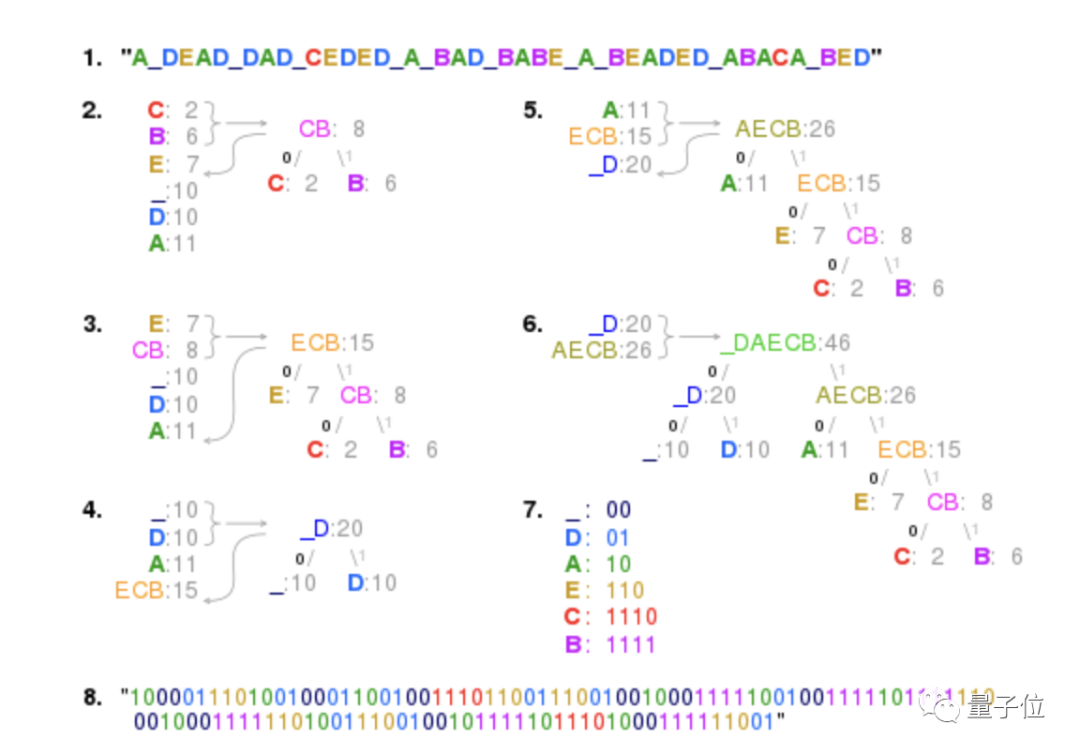

If Professor Fanno’s method is to build a character tree from top to bottom, and keep it as much as possible between pairs of branches symmetry.

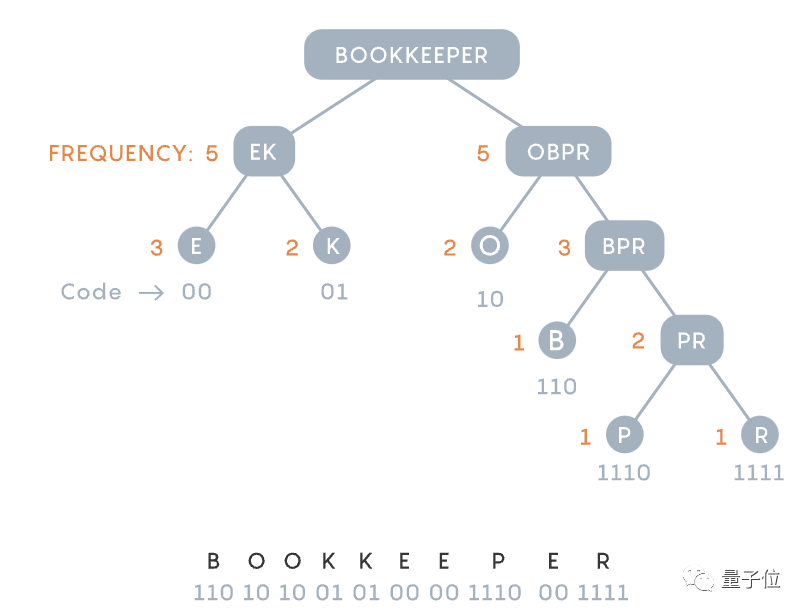

Then Huffman's method directly subverts this process-Build a binary tree from the bottom up.

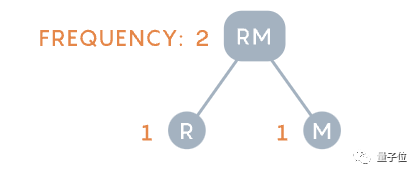

He believes that no matter what happens, in a valid code, the two least common characters should have the two longest codes .

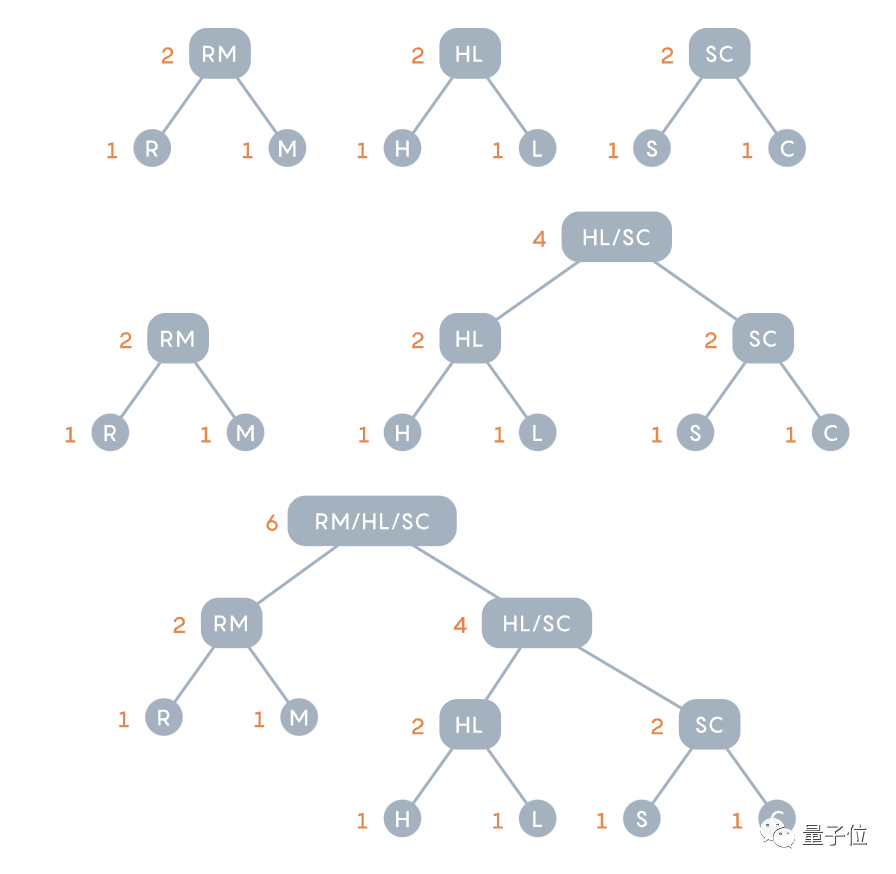

So first determine the two least common characters, combine them together as a branch pair, and then repeat the process to find the least common among the remaining characters and the character pair just constructed characters (pairs).

Picture

Picture

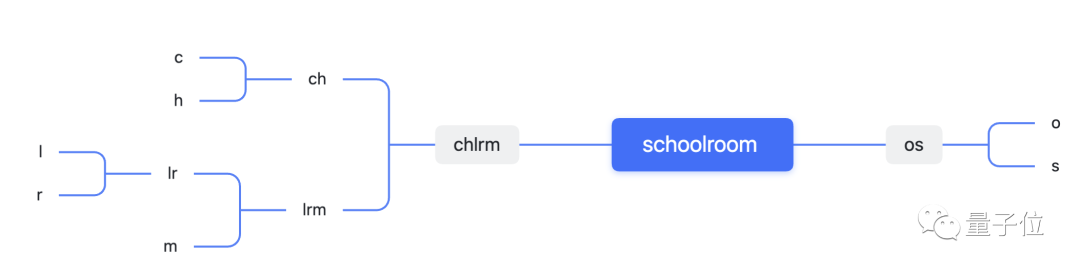

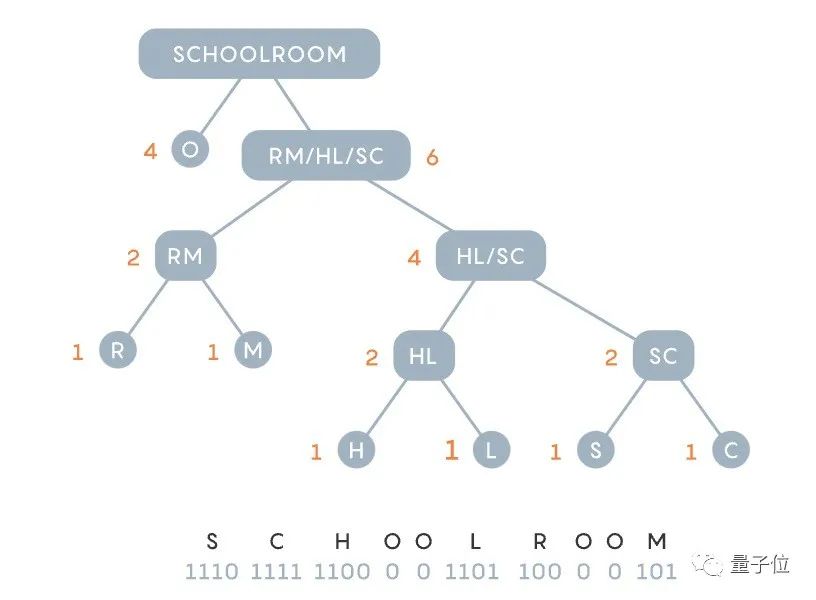

Take schoolroom as an example, where O appears four times, and S, C, H, L, R, and M each appear once.

Fano's method is to first assign O and another letter to the left branch, so that both sides have a total usage of 5 times, and the generated code totals 27 bits.

Picture

Picture

In contrast, Huffman’s method starts with uncommon r and m and combines them into one letter right.

Picture

Picture

After the combination, the existing characters (pairs) include: O (4 times), RM (2 times) and the single letter S, C, H and L.

Divide according to frequency of occurrence, repeat the previous operation - group the two uncommon options, and then update the number tree and frequency diagram.

In the end, "schoolroom" became 11101111110000110110000101, which is 1 less than Fano's top-down approach.

Picture

Picture

Although 1 bit is not much here, when expanded to billions of bytes, this That’s a big savings.

In fact, Huffman’s method has proven to be very powerful. According to Google Scholar statistics, the paper that year has been cited 9,570 times.

Picture

Picture

As for his teacher’s method, it has almost never been used again.

To this day, almost all lossless compression methods use Huffman's method in whole or in part, which can compress images, audio, tables, etc. It supports everything from the PNG image standard to the ubiquitous software PKZip.

Knuth, the pioneer of modern computer science and winner of the Turing Award, once described Huffman's achievements this way:

In the fields of computer science and data communications, Huffman coding is what people have been using the basic idea.

Later, Huffman recalled that "eureka" moment. At that time, he was about to throw the paper notes into the trash can, but suddenly his thoughts came together and the answer appeared in his mind:

That was the most bizarre moment in my life.

The realization suddenly dawned on me, like lightning.

and said that if he had known that his professor Fano had struggled with this problem, he might never have tried to solve it, let alone at the age of 25 Just be bold and try.

Achievement and sense of order, using mathematics to play art

Huffman coding changed the data compression paradigm and won many honors and medals for it.

For example, Huffman won the Golden Jubilee Award for Technical Innovation from the IEEE Information Theory Society in 1998, and the Richard Hamming Medal from the Institute of Electrical and Electronics Engineers (IEEE) in 1999. ).

But even so, in the course of his life, compared to the invention of the lossless compression method, what he was most proud of was this doctoral thesis.

Title: The Synthesis of Sequential Switching Circuits.

Picture

Picture

While Huffman was studying for his PhD at MIT, he published this important paper discussing sequential switching circuits. At that time, Huffman was almost the first scholar to explain how to design an asynchronous sequential switching circuit, and this theory later provided important logical support for the development of computers. The release of this paper not only helped him obtain the Louis E. Levy Medal from the Franklin Institute, but also naturally allowed him to obtain the qualification to stay at the school and teach courses on switching circuits.

Picture While in school, Huffman also proposed an innovative mathematical formula that can convert a # without losing any information. ##Convert a binary number sequence into another binary number sequence

While in school, Huffman also proposed an innovative mathematical formula that can convert a # without losing any information. ##Convert a binary number sequence into another binary number sequence

William O. Baker, then Vice President of Research at Bell Labs, recruited him to a review committee mainly responsible for reviewing future technology plans for the National Security Agency. Dr. Baker served as a scientific advisor to five presidents: Eisenhower, Kennedy, Johnson, Nixon, and Reagan.

In 1967, Hoffman, who was already a full professor, chose to leave MIT and join the University of California, Santa Cruz (UCSC). During this period, he led the creation of the Department of Computer Science and participated in the development of academic courses, laying the foundation for the subsequent development of the Department of Computer Science. Lay an important foundation.

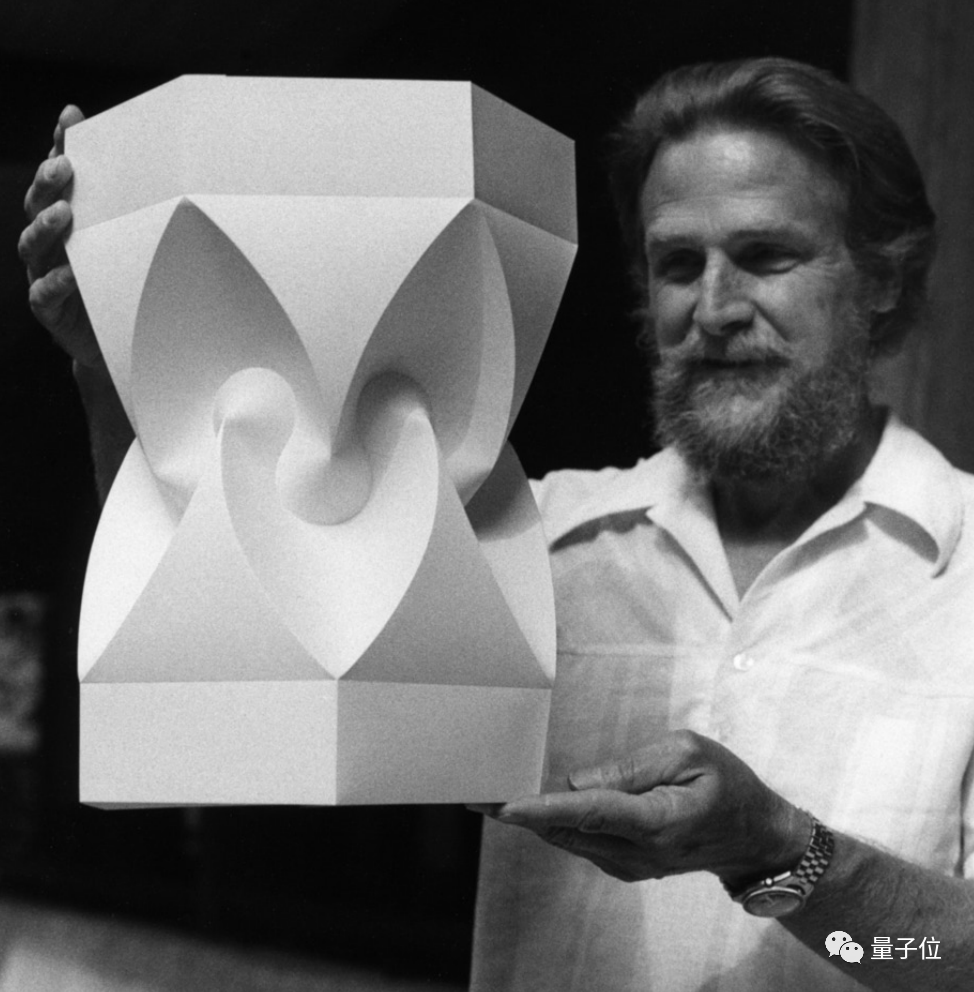

Mathematics can be said to be one of Huffman’s lifelong pursuits, so much so that when he later engaged in art, he could not do without mathematics.

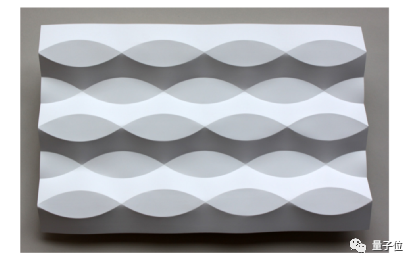

Picture

Picture

Beginning in the 1970s, Huffman became interested in origami. He studied mathematics and origami art at the same time, and made hundreds of curved origami pieces. Works, and published a paper specifically analyzing the mathematical properties of curved origami, becoming a pioneer in the field of origami mathematics.

Looking back, Huffman has won numerous honors and commendations throughout his life, but has never applied for a patent for any of his inventions.

Looking back, Huffman has won numerous honors and commendations throughout his life, but has never applied for a patent for any of his inventions.

Finally, let me borrow a passage from Huffman himself.

As a scientist and teacher, I am really persistent. If I feel that I haven't found the simplest solution to a problem, I become extremely dissatisfied, and this dissatisfaction will continue until I find the best solution. To me, this is the essence of being a scientist.

The above is the detailed content of He wanted to avoid the exam 70 years ago, but he influenced the entire Internet. For more information, please follow other related articles on the PHP Chinese website!

Related articles

See more- Technology trends to watch in 2023

- How Artificial Intelligence is Bringing New Everyday Work to Data Center Teams

- Can artificial intelligence or automation solve the problem of low energy efficiency in buildings?

- OpenAI co-founder interviewed by Huang Renxun: GPT-4's reasoning capabilities have not yet reached expectations

- Microsoft's Bing surpasses Google in search traffic thanks to OpenAI technology