Video generation with controllable time and space has become a reality, and Alibaba's new large-scale model VideoComposer has become popular

In AI image generation, Composer, proposed by Alibaba, and ControlNet, proposed by Stanford and built on Stable Diffusion, have led the theoretical development of controllable image generation. Controllable video generation, however, remains largely unexplored by the industry.

Controllable video generation is more complex than image generation: besides controlling the spatial content of the video, the model must also be controllable along the time dimension. To that end, research teams from Alibaba and Ant Group made an early attempt and proposed VideoComposer, which achieves video controllability in both the temporal and spatial dimensions through a compositional generation paradigm.

- Paper address: https://arxiv.org/abs/2306.02018

- Project homepage: https://videocomposer.github.io

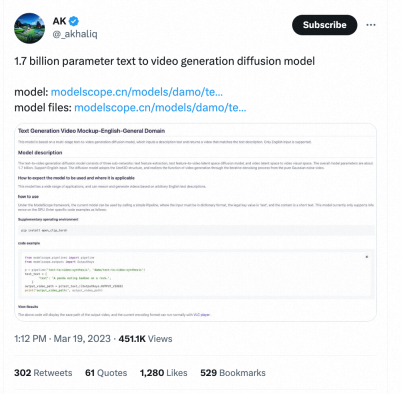

Some time ago, Alibaba quietly open-sourced its text-to-video model on the ModelScope community and Hugging Face. It unexpectedly drew wide attention from developers in China and abroad, and a video generated by the model even received a reply from Elon Musk himself. On ModelScope, the model received tens of thousands of international visits per day for several days in a row.

Text-to-Video on Twitter

As the research team's latest work, VideoComposer has once again attracted wide attention from the international community.

VideoComposer on Twitter

Controllability has in fact become a higher benchmark for visual content creation. Customized image generation has made significant progress, but video generation still faces three major challenges:

- Complex data structure: the generated video must satisfy both the diversity of dynamic changes along the time dimension and content consistency across the spatio-temporal dimensions;

- Complex guidance conditions: existing controllable video generation relies on complex conditions that cannot be constructed by hand. For example, Gen-1/2 proposed by Runway depends on depth sequences as conditions, which works well for transferring structure between videos but does not solve the controllability problem well;

- Lack of motion controllability: motion patterns are a complex and abstract attribute of video, and controlling them is a necessary condition for controllable video generation.

Composer, previously proposed by Alibaba, showed that compositionality greatly improves the controllability of image generation. VideoComposer builds on the same compositional generation paradigm, improving the flexibility of video generation while addressing the three challenges above. Specifically, a video is decomposed into three kinds of guiding conditions (textual conditions, spatial conditions, and video-specific temporal conditions), and a Video LDM (Video Latent Diffusion Model) is trained on them. In particular, it uses efficient motion vectors as an important explicit temporal condition for learning video motion patterns, and it introduces a simple and effective spatiotemporal condition encoder, the STC-encoder, to ensure the spatiotemporal continuity of condition-driven videos. At inference time, different conditions can be freely combined to control the video content.

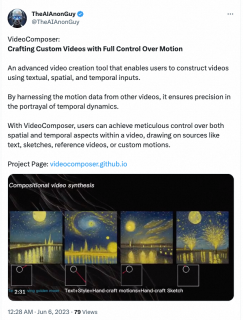

Experimental results show that VideoComposer can flexibly control the temporal and spatial patterns of a video, for example generating a specific video from a single image or a hand-drawn sketch, and can even control the target's motion with a simple hand-drawn direction. The study tested VideoComposer directly on 9 different classic tasks and obtained satisfactory results on all of them, demonstrating its versatility.

Figure: (a-c) VideoComposer can generate videos that satisfy textual, spatial, and temporal conditions, or a subset of them; (d) using only two strokes, VideoComposer can generate a video in the style of Van Gogh that follows the intended motion pattern (red stroke) and shape pattern (white stroke).

Method introduction

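Video LDM

Latent space. Video LDM first introduces a pre-trained encoder $\mathcal{E}$ to map the input video $x \in \mathbb{R}^{F \times H \times W \times 3}$ to a latent representation $z = \mathcal{E}(x)$, where $z \in \mathbb{R}^{F \times h \times w \times c}$. A pre-trained decoder $\mathcal{D}$ is then used to map the latent space back to pixel space, $\bar{x} = \mathcal{D}(z)$. In VideoComposer, the parameters are set to $H/h = W/w = 8$.

Diffusion model. To learn the actual video content distribution $P(x)$, the diffusion model learns to gradually denoise normally distributed noise and recover realistic visual content. This process simulates the reverse process of a Markov chain of length $T = 1000$. To run the reverse process in latent space, Video LDM injects noise into $z$ to obtain a noise-corrupted latent $z_t$. A denoising function $\epsilon_\theta(\cdot, \cdot, t)$ is then applied to $z_t$ and the selected conditions $c$, where $t \in \{1, \dots, T\}$. The optimization objective is

$$\mathcal{L}_{VLDM} = \mathbb{E}_{\mathcal{E}(x),\, \epsilon \sim \mathcal{N}(0, 1),\, c,\, t} \left[ \lVert \epsilon - \epsilon_\theta(z_t, c, t) \rVert_2^2 \right].$$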

To fully exploit the spatial locality inductive bias and the sequential temporal inductive bias during denoising, VideoComposer instantiates the denoising network as a 3D UNet augmented with temporal convolution and cross-attention.
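The noise-injection step and the noise-prediction objective described above can be illustrated with a small NumPy sketch. It is only a toy under stated assumptions: the linear beta schedule, the helper names (`linear_alpha_bar`, `add_noise`, `denoising_loss`), and the latent shape are illustrative choices that do not come from the article, and no real denoising network is involved.

```python
import numpy as np

T = 1000  # chain length stated in the text


def linear_alpha_bar(T, beta_start=1e-4, beta_end=2e-2):
    """Cumulative product of (1 - beta_t) for an ASSUMED linear beta schedule."""
    betas = np.linspace(beta_start, beta_end, T)
    return np.cumprod(1.0 - betas)


def add_noise(z, t, alpha_bar, rng):
    """Sample z_t from z: z_t = sqrt(abar_t) * z + sqrt(1 - abar_t) * eps."""
    eps = rng.standard_normal(z.shape)
    z_t = np.sqrt(alpha_bar[t]) * z + np.sqrt(1.0 - alpha_bar[t]) * eps
    return z_t, eps


def denoising_loss(eps, eps_pred):
    """Mean squared error between the true and the predicted noise."""
    return float(np.mean((eps - eps_pred) ** 2))


rng = np.random.default_rng(0)
alpha_bar = linear_alpha_bar(T)

# Hypothetical latent video: (frames, channels, height, width).
z = rng.standard_normal((16, 4, 32, 32))
z_t, eps = add_noise(z, t=500, alpha_bar=alpha_bar, rng=rng)

loss_perfect = denoising_loss(eps, eps)                # ideal denoiser: zero loss
loss_zero = denoising_loss(eps, np.zeros_like(eps))    # predicting "no noise" is penalized
```

In a real model the second argument of `denoising_loss` would be the network's prediction given the noisy latent, the conditions, and the step index; the two calls above only mark the best case and a trivial baseline.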

VideoComposer

Compositional conditions. VideoComposer decomposes a video into three types of conditions, namely textual conditions, spatial conditions, and the crucial temporal conditions, which together determine the spatial and temporal patterns of the video. Because VideoComposer is a general compositional video generation framework, further customized conditions can be incorporated depending on the downstream application; the framework is not limited to those listed below:

- Textual conditions: text descriptions give an intuitive indication of the video's coarse-grained visual content and motion. This is also the condition commonly used in text-to-video (T2V) generation;

- Spatial conditions:

  - Single image: the first frame of a given video is selected as a spatial condition for image-to-video generation, expressing the content and structure of the video;

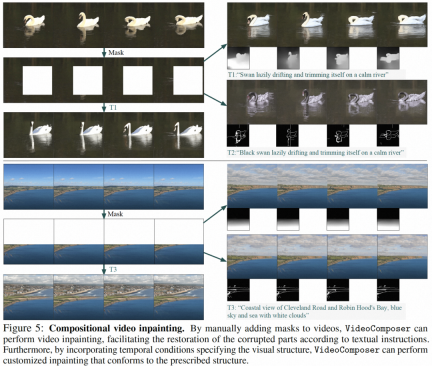

  - Single sketch: PiDiNet is used to extract a sketch of the first video frame as the second spatial condition;

  - Style: to further transfer the style of a single image to the synthesized video, an image embedding is used as style guidance;

- Temporal conditions:

  - Motion vector: as an element unique to video, a motion vector is represented as a two-dimensional vector with horizontal and vertical components. It explicitly encodes the pixel-wise movement between two adjacent frames. Because of this natural property, the condition is treated as a motion control signal for temporally smooth synthesis. The motion vectors are extracted from compressed videos in the standard MPEG-4 format;

  - Depth sequence: to introduce video-level depth information, a pre-trained MiDaS depth-estimation model is used to extract the depth maps of the video frames;

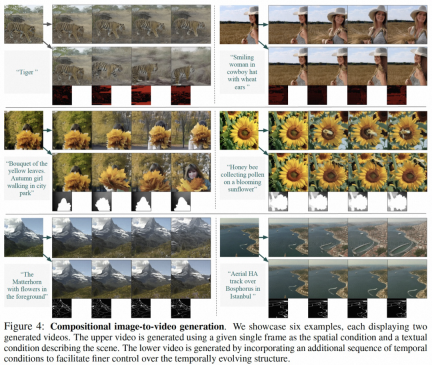

  - Mask sequence: tube masks are introduced to hide local spatiotemporal content, forcing the model to predict the masked region from the observable information;

  - Sketch sequence: compared with a single sketch, a sketch sequence provides more control over details, enabling precise, customized synthesis.
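To make the mask-sequence condition more concrete, here is a minimal sketch of a tube mask: the same spatial region is hidden in every frame, so the hidden area forms a "tube" through time. The rectangular region, the helper names (`tube_mask`, `apply_mask`), and the toy clip are assumptions made for illustration; the article does not specify how the masks are actually sampled.

```python
import numpy as np


def tube_mask(num_frames, height, width, box):
    """Return a (F, H, W) binary mask; 1 marks hidden pixels.

    The same rectangle is masked in every frame, so the masked
    region forms a tube along the time axis.
    """
    top, left, bottom, right = box
    frame_mask = np.zeros((height, width), dtype=np.float32)
    frame_mask[top:bottom, left:right] = 1.0
    return np.repeat(frame_mask[None], num_frames, axis=0)


def apply_mask(video, mask):
    """Zero out the masked pixels of a (F, H, W, 3) video."""
    return video * (1.0 - mask[..., None])


video = np.ones((8, 64, 64, 3), dtype=np.float32)  # hypothetical toy clip
mask = tube_mask(8, 64, 64, box=(16, 16, 48, 48))
masked_video = apply_mask(video, mask)
```

The model would then receive `masked_video` (and typically the mask itself) as a temporal condition and be asked to fill in the hidden tube.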

Spatiotemporal condition encoder. Sequence conditions contain rich and complex spatiotemporal dependencies, which makes them challenging to use as control signals. To strengthen the temporal awareness of the input conditions, the study designs a spatiotemporal condition encoder (STC-encoder) that incorporates spatiotemporal relations. Specifically, a lightweight spatial architecture consisting of two 2D convolutions and an average pooling layer first extracts local spatial information, and the resulting condition sequence is then fed into a temporal Transformer layer for temporal modeling. In this way, the STC-encoder facilitates the explicit embedding of temporal cues and provides a unified condition-embedding interface for diverse inputs, which improves inter-frame consistency. In addition, the spatial conditions of a single image and a single sketch are repeated along the temporal dimension so that they are consistent with the temporal conditions, which simplifies the condition embedding process.
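The data flow of such an encoder (a spatial stage followed by attention across frames) can be sketched in NumPy. This is a heavily simplified stand-in, not the authors' implementation: pointwise (1x1) projections replace the two 2D convolutions, a single-head self-attention with a residual connection replaces the temporal Transformer layer, and all weights, sizes, and function names are hypothetical.

```python
import numpy as np


def avg_pool2x2(x):
    """2x2 average pooling over the last two axes of a (F, C, H, W) array."""
    F, C, H, W = x.shape
    return x.reshape(F, C, H // 2, 2, W // 2, 2).mean(axis=(3, 5))


def softmax(a, axis=-1):
    a = a - a.max(axis=axis, keepdims=True)
    e = np.exp(a)
    return e / e.sum(axis=axis, keepdims=True)


def temporal_self_attention(x, wq, wk, wv):
    """Single-head attention across frames, applied independently per pixel."""
    F, C, H, W = x.shape
    tokens = x.transpose(2, 3, 0, 1).reshape(H * W, F, C)   # (pixels, frames, channels)
    q, k, v = tokens @ wq, tokens @ wk, tokens @ wv
    attn = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(C))   # (pixels, frames, frames)
    out = tokens + attn @ v                                  # residual connection
    return out.reshape(H, W, F, C).transpose(2, 3, 0, 1), attn


def stc_encoder_sketch(cond, params):
    """Spatial stage (two pointwise projections + pooling), then temporal attention."""
    h = np.einsum("fchw,cd->fdhw", cond, params["w1"])  # stand-in for the first 2D conv
    h = np.maximum(h, 0.0)                               # assumed nonlinearity
    h = np.einsum("fchw,cd->fdhw", h, params["w2"])      # stand-in for the second 2D conv
    h = avg_pool2x2(h)
    return temporal_self_attention(h, params["wq"], params["wk"], params["wv"])


rng = np.random.default_rng(0)
C_in, D = 2, 8  # e.g. a 2-channel motion-vector sequence; D is a hypothetical width
params = {
    "w1": rng.standard_normal((C_in, D)) * 0.1,
    "w2": rng.standard_normal((D, D)) * 0.1,
    "wq": rng.standard_normal((D, D)) * 0.1,
    "wk": rng.standard_normal((D, D)) * 0.1,
    "wv": rng.standard_normal((D, D)) * 0.1,
}
cond = rng.standard_normal((16, C_in, 32, 32))  # (frames, channels, height, width)
encoded, attn = stc_encoder_sketch(cond, params)
```

The point of the sketch is the ordering: each frame is first processed spatially on its own, and only afterwards does every spatial location exchange information across the 16 frames, which is where the temporal awareness comes from.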

After the conditions are processed by the STC-encoder, the resulting condition sequences all have the same spatial shape as the noisy latent and are fused by element-wise addition. Finally, the merged condition sequence is concatenated with the noisy latent along the channel dimension as the control signal. For the text and style conditions, a cross-attention mechanism is used to inject text and style guidance.
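The fusion step can be written down in a few lines. The sketch below assumes that the merged condition sequence is concatenated with the noisy latent, uses random placeholder arrays in place of real encoder outputs, and skips the encoder entirely; the shapes and helper names are illustrative only.

```python
import numpy as np


def repeat_over_time(single, num_frames):
    """Tile a (C, h, w) single-frame condition into a (F, C, h, w) sequence."""
    return np.repeat(single[None], num_frames, axis=0)


def build_control_input(z_t, encoded_conditions):
    """Sum the encoded conditions, then concatenate with the noisy latent on channels."""
    fused = np.sum(encoded_conditions, axis=0)     # element-wise addition
    return np.concatenate([z_t, fused], axis=1)    # axis 1 is the channel axis


F, C, h, w = 16, 4, 32, 32                         # hypothetical latent shape
rng = np.random.default_rng(0)
z_t = rng.standard_normal((F, C, h, w))            # noisy latent

# Placeholder for an already-encoded motion-vector sequence.
motion = rng.standard_normal((F, C, h, w))
# A single-frame condition repeated along time. In the described pipeline the
# repetition happens before the encoder, which is omitted in this sketch.
sketch = repeat_over_time(rng.standard_normal((C, h, w)), F)

unet_input = build_control_input(z_t, [motion, sketch])
```

Because the conditions are summed rather than stacked, any subset of them yields an input of the same shape, which is what allows conditions to be dropped or combined without changing the network.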

Training and inference

Two-stage training strategy. Although VideoComposer can be initialized from a pre-trained image LDM, which eases training to some extent, it is hard for the model to learn to perceive temporal dynamics and to generate from multiple conditions at the same time, which makes training compositional video generation more difficult. The study therefore adopts a two-stage optimization strategy. In the first stage, the model acquires initial temporal modeling ability through T2V training; in the second stage, VideoComposer is optimized through compositional training to achieve better performance.

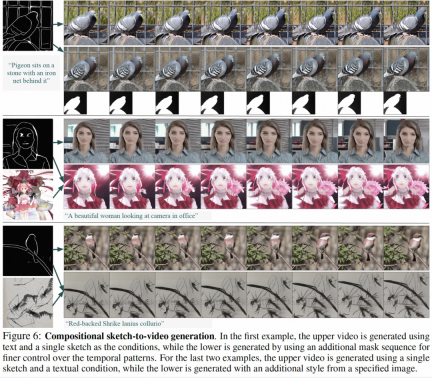

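Inference. During inference, DDIM is used to improve sampling efficiency, and classifier-free guidance is adopted to ensure that the generated results satisfy the specified conditions. The generation process can be formalized as:

$$\hat{\epsilon}_\theta(z_t, c, t) = \epsilon_\theta(z_t, c_1, t) + \omega \left( \epsilon_\theta(z_t, c_2, t) - \epsilon_\theta(z_t, c_1, t) \right)$$

where $\epsilon_\theta$ is the denoising network, $z_t$ is the noisy latent at step $t$, $\omega$ is the guidance scale, and $c_1$ and $c_2$ are two sets of conditions. The guidance is determined by the two condition sets, and adjusting its strength $\omega$ gives more flexible control over the model.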
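As a rough illustration of guidance with two condition sets, the sketch below assumes the usual classifier-free guidance form, in which the prediction under c1 is extrapolated toward the prediction under c2 by a factor ω. The `toy_denoiser` function is a hypothetical stand-in for the real network, and the numbers are chosen only to make the arithmetic easy to follow.

```python
import numpy as np


def guided_noise(eps_c1, eps_c2, omega):
    """Extrapolate from the c1 prediction toward the c2 prediction."""
    return eps_c1 + omega * (eps_c2 - eps_c1)


def toy_denoiser(z_t, cond_strength):
    """Hypothetical stand-in for the denoising network."""
    return z_t * cond_strength


z_t = np.ones((2, 4, 8, 8))
eps_c1 = toy_denoiser(z_t, 0.5)   # prediction under condition set c1
eps_c2 = toy_denoiser(z_t, 1.0)   # prediction under condition set c2

same_as_c1 = guided_noise(eps_c1, eps_c2, omega=0.0)  # guidance switched off
same_as_c2 = guided_noise(eps_c1, eps_c2, omega=1.0)  # plain conditional prediction
amplified = guided_noise(eps_c1, eps_c2, omega=9.0)   # 0.5 + 9 * 0.5 = 5.0 everywhere
```

With ω = 0 only c1 matters, with ω = 1 the result equals the c2 prediction, and larger values push the sample further in the direction that distinguishes c2 from c1.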

Experimental results

In the experiments, the study showed that VideoComposer works as a unified model with a general generation framework, and verified its capabilities on 9 classic tasks.

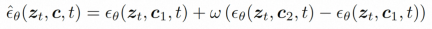

Some of the results: static image-to-video generation (Figure 4), video inpainting (Figure 5), static sketch-to-video generation (Figure 6), hand-drawn motion control (Figure 8), and motion transfer (Figure A12) all demonstrate the advantages of controllable video generation.

According to public information, Alibaba's research on visual foundation models focuses mainly on large visual representation models, large visual generative models, and their downstream applications. The team has published more than 60 CCF-A papers in related fields and won more than 10 international championships in industry competitions. The controllable image generation method Composer, the image-text pre-training methods RA-CLIP and RLEG, the self-supervised learning methods for untrimmed long videos HiCo/HiCo++, and the talking-face generation method LipFormer all come from this team.
