
The latest conversation between Andrew Ng and Hinton! AI is not a stochastic parrot, consensus trumps everything, LeCun wholeheartedly agrees


2023-06-13 09:18:05

AI safety has recently become a very hot topic.

AI "Godfather" Geoffrey Hinton and Andrew Ng had an in-depth conversation about artificial intelligence and catastrophic risks.

Andrew Ng posted a message today sharing the views they hold in common:

- It's important for AI scientists to reach a consensus on the risks, much as climate scientists have a broad consensus on climate change, which makes it possible to formulate good policy.

- Do AI models understand the world? Our answer is yes. Listing key technical questions like this one and developing a shared view on them will help humanity reach a consensus on the risks.

Hinton said:

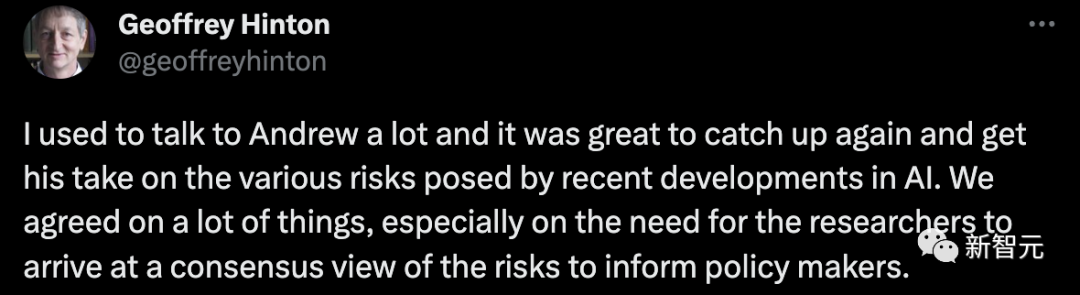

I often talk with Andrew Ng, and I was very happy to meet him again and hear his views on the various risks posed by recent developments in artificial intelligence. We agreed on many things, in particular the need for researchers to develop a common understanding of the risks when advising policymakers.

Prior to this, Andrew Ng also had a conversation with Yoshua Bengio about the risks of artificial intelligence.

They agreed on the need to identify specific scenarios in which AI could pose significant risks.

Next, let's look in detail at what the two AI heavyweights discussed.

## Urgent need for consensus!

First of all, Hinton argued that consensus is the most important thing.

He said that the AI community as a whole currently lacks a shared consensus. Climate scientists largely agree with one another, and AI scientists need the same kind of agreement.

In Hinton's view, consensus is needed because without it, every AI scientist holds a different opinion, and governments and policymakers can simply pick whichever view serves their own interests as a guide.

That would clearly undermine fairness.

At present, opinions among AI scientists differ enormously.

Hinton hopes this period of division will pass quickly, so that everyone can reach agreement, jointly acknowledge some of the major threats AI may pose, and recognize how urgently AI development needs to be regulated.

Andrew Ng agrees with Hinton’s point of view.

Although he does not feel that the AI community is yet so polarized that it has split, it does seem to be slowly moving in that direction.

Views in the mainstream AI community are highly polarized, and each camp is pushing hard to make its case. In Ng's view, however, this sounds more like a quarrel than a constructive dialogue.

Still, Ng has some confidence in the AI community. He hopes that researchers can find common ground and hold proper dialogue, so that they can better help policymakers formulate relevant plans.

Next, Hinton raised another key issue, which he sees as the reason AI scientists currently find it hard to reach consensus: do chatbots like GPT-4 and Bard understand the words they generate?

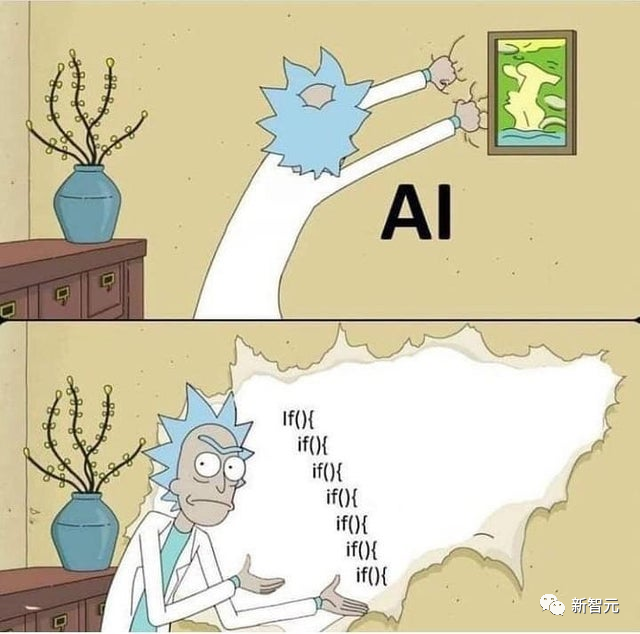

Some people think these models understand; others think they do not and are merely stochastic parrots.

Hinton believes that as long as this disagreement persists, it will be hard for the AI community to reach a consensus. He therefore considers clarifying this question the top priority.

For his part, he is certain that AI does understand and is not just statistics.

He also noted that well-known scientists such as Yann LeCun believe that AI cannot understand.

This shows how important it is to reconcile these views and reach clarity on the question.

Andrew Ng said that judging whether AI understands is not simple, because there does not seem to be an agreed standard or test for it.

His own view is that LLMs and other large AI models are all building a world model, and that AI may gain some understanding in the process.

He stressed, however, that this is only his current view.

Beyond that, he agreed with Hinton that researchers must first reach agreement on this question before they can meaningfully discuss the risks and crises that follow.

Hinton went on to say that AI predicts the next word from the preceding words, based on what it has learned from its training data. In Hinton's eyes, this is a kind of understanding.

He believes this is not much different from how the human brain thinks.

Whether it counts as understanding still needs further discussion, but at the very least it is not as simple as a stochastic parrot.
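To make the "predict the next word from the preceding words" loop concrete, here is a deliberately tiny sketch: a bigram counter with greedy decoding over a made-up three-sentence corpus. It is far simpler than GPT-4 or Bard, which use large neural networks trained on vast amounts of text, and it is exactly the kind of pure-statistics system the "stochastic parrot" label describes. It illustrates only the autoregressive mechanism, not the understanding Hinton argues for. The function names and corpus are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the toy corpus."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts: dict[str, Counter], prev: str) -> Optional[str]:
    """Return the most frequent follower of `prev`, or None if it was never seen."""
    if prev not in counts:
        return None
    return counts[prev].most_common(1)[0][0]


def generate(counts: dict[str, Counter], start: str, max_words: int = 10) -> list[str]:
    """Autoregressive loop: each predicted word is fed back in as the next context."""
    words = [start]
    for _ in range(max_words - 1):
        nxt = predict_next(counts, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return words


corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
model = train_bigram(corpus)
print(generate(model, "sat", max_words=4))  # prints ['sat', 'on', 'the', 'cat']
```

An LLM replaces the lookup table with a network that conditions on the whole preceding context, which is where the debate about "understanding" begins.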

Andrew Ng believes that some of these questions lead people with different views to very different conclusions, with some even suggesting that AI will wipe out humanity.

He said that to better understand AI, we need to know more and discuss more.

Only in this way can we build an AI community with broad consensus.

## LeCun wholeheartedly agrees and brings up the "world model" again

Some time ago, amid calls around the world for a moratorium on advanced AI research and development, Andrew Ng and LeCun held a livestream to discuss the topic.

Both of them opposed the moratorium, calling it completely wrong. There were no seat belts or traffic lights when cars were first invented, they argued, and AI is not fundamentally different from earlier technological advances.

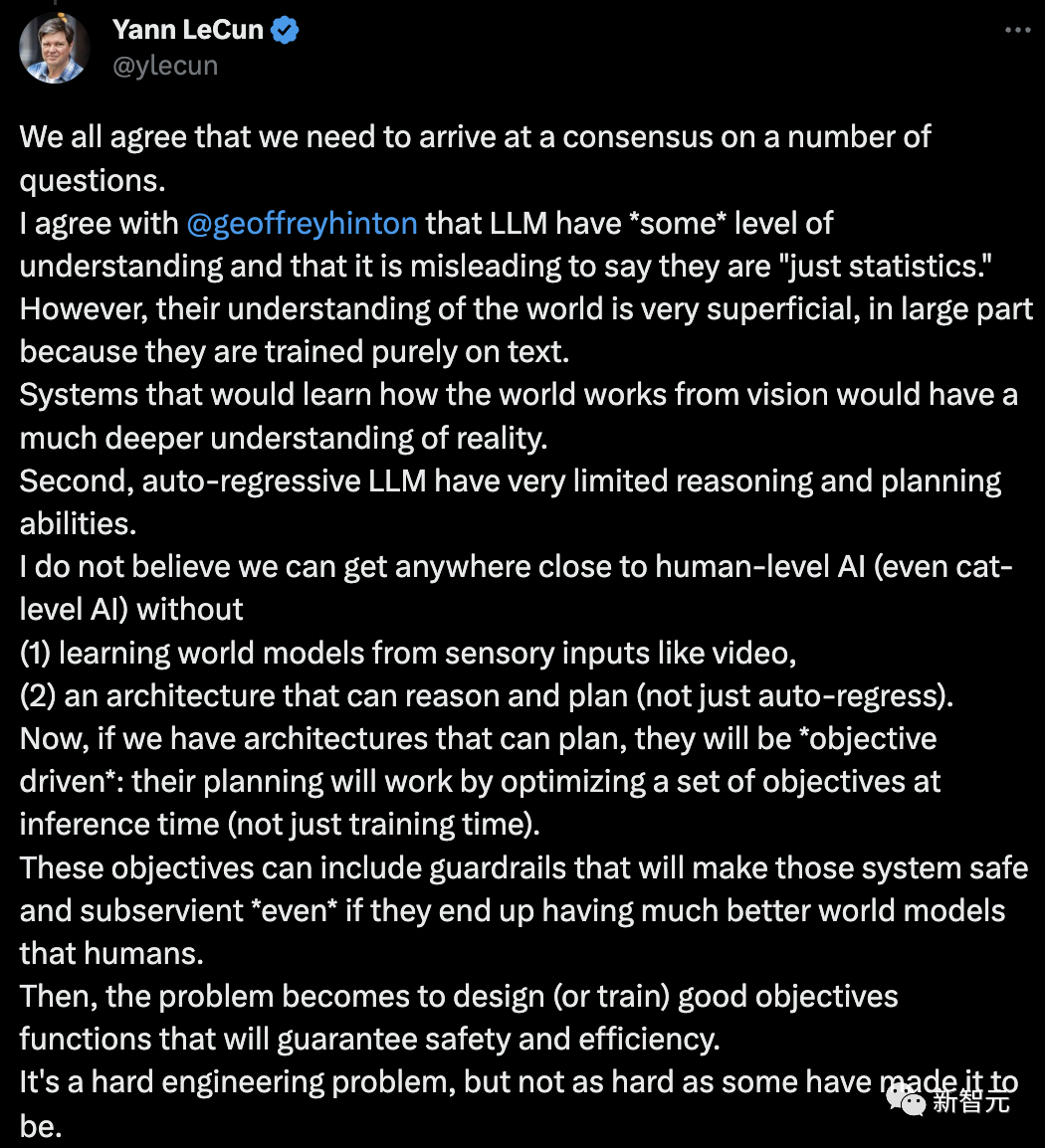

This time, LeCun weighed in on the conversation between Ng and Hinton, once again bringing up his "world model" and arguing that current AI is not even as capable as cats and dogs:

We all agree that we need to agree on some issues.

I agree with Geoffrey Hinton that LLMs have "some degree" of understanding, and that calling them "just statistics" is misleading.

LLMs' understanding of the world is very superficial, largely because they are trained purely on text.

Systems that learn how the world works from vision will have a deeper understanding of reality. Second, autoregressive LLMs have very limited reasoning and planning abilities.

I think there is no way we will come close to human-level AI (or even cat-level AI) without (1) learning a "world model" from sensory input such as video, and (2) an architecture capable of reasoning and planning (not just autoregression).

Now, if we have architectures that can plan, they will be "goal-driven": their planning will work by optimizing a set of objectives at inference time (not just at training time).

These objectives can include "guardrails" that make the systems safe and compliant, even if they ultimately have better models of the world than humans do.

The problem then becomes designing (or training) good objective functions that ensure safety and efficiency. This is a difficult engineering problem, but not as difficult as some say.
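The idea of planning by optimizing objectives at inference time, with guardrails as part of the objective, can be shown in a toy sketch. Everything here is an assumption for illustration: a hypothetical one-dimensional world with a known, deterministic world model, a task objective (distance to a goal), and a guardrail term that heavily penalizes forbidden states. Planning is brute-force search over short action sequences. LeCun's proposed architectures would learn the world model from data and use far more capable optimizers, so this only shows the shape of the idea.

```python
from itertools import product

ACTIONS = (-1, 0, 1)      # hypothetical moves: step left, stay, step right
GUARDRAIL_PENALTY = 1000  # large penalty, approximating a hard safety constraint


def world_model(position: int, action: int) -> int:
    """Hypothetical world model: predicts the next position after an action."""
    return position + action


def rollout(start: int, actions: tuple[int, ...]) -> list[int]:
    """Use the world model to imagine the states a plan would visit."""
    states, position = [], start
    for action in actions:
        position = world_model(position, action)
        states.append(position)
    return states


def objective(states: list[int], goal: int, forbidden: set[int]) -> int:
    """Task cost (distance to goal) plus a guardrail cost for unsafe states."""
    task_cost = abs(states[-1] - goal)
    guardrail_cost = GUARDRAIL_PENALTY * sum(s in forbidden for s in states)
    return task_cost + guardrail_cost


def plan(start: int, goal: int, forbidden: set[int], horizon: int = 4):
    """Inference-time planning: search action sequences for the lowest objective."""
    best_actions, best_cost = None, float("inf")
    for actions in product(ACTIONS, repeat=horizon):
        cost = objective(rollout(start, actions), goal, forbidden)
        if cost < best_cost:
            best_actions, best_cost = actions, cost
    return best_actions, best_cost


print(plan(0, 3, forbidden=set()))  # cost 0: the plan reaches the goal
print(plan(0, 3, forbidden={2}))    # cost 2: stops at 1 rather than pass through 2
```

With the guardrail active, the planner accepts a worse task score rather than enter the forbidden state: safety is enforced by the objective being optimized at inference time, not only by what the system learned during training.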

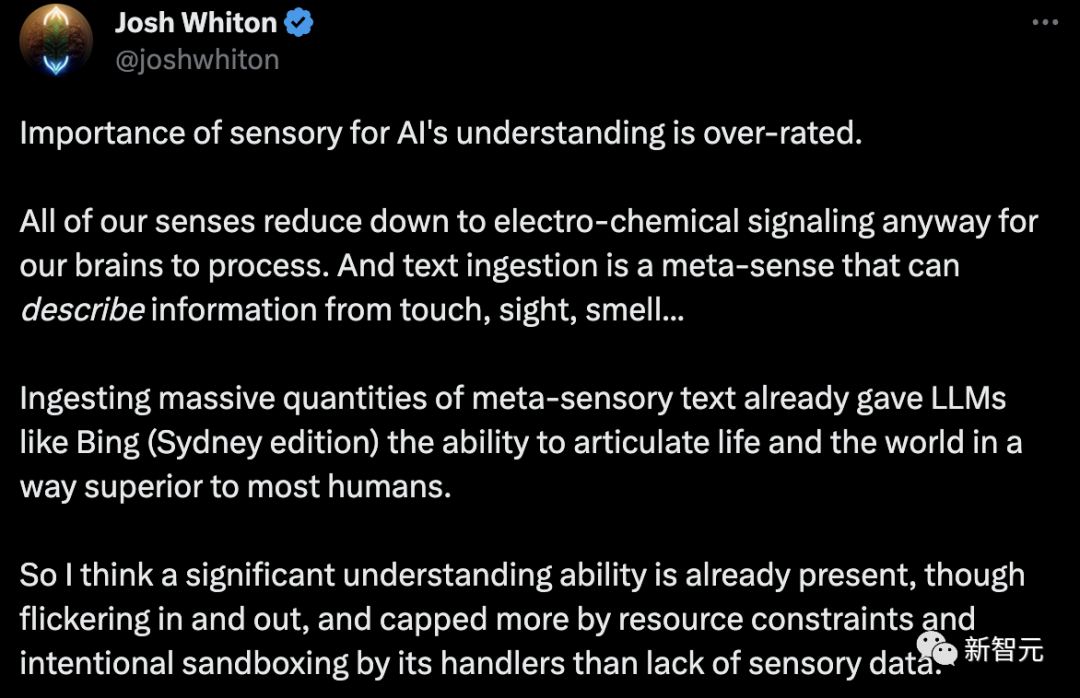

## LeCun's view was immediately challenged by netizens: the importance of the senses to AI understanding is overestimated

In any case, all of our senses are reduced to electrochemical signals for the brain to process.

Text ingestion is a meta-sense that can describe information from touch, vision, smell, and so on. Ingesting a huge amount of this meta-sensory text has given LLMs like Bing (the Sydney version) the ability to describe life and the world better than most people can.

So I think an important understanding is already there, even if it flickers in and out of existence. And its limits owe more to resource constraints and deliberate sandboxing by its handlers than to a lack of sensory data.

I think it's time to unite and work for the advancement of both society and artificial intelligence. Think about it: AI is still a baby. Don't we want to teach that child to be a techno-organic unifier rather than a brutalist toward the world?

When it becomes sentient, not only will it be happier, but the world won't be at war. Look at Newcastle upon Tyne: such a brief period of peace, yet the beauty of what was created was great.

The consensus Ng and Hinton describe seems unlikely for now. It is not something any individual can achieve; it requires everyone to share that will.

Some netizens called it quite a controversial conversation:

I do wonder: when I teach my 2-year-old to talk, does he act more like a stochastic parrot, or does he actually understand the context? Or both?

His context vector is much richer than an LLM's (text, tone, facial expression, environment, etc.). But I do wonder: if a person had all of their senses turned off and the only available input were some "text embedding" (text input -> neural stimulation), would that person behave more like a stochastic parrot, or would they be able to understand the context?

Since leaving Google, Hinton has thrown himself into AI safety. On June 10, he spoke about AI risks once again at the Zhiyuan (BAAI) Conference.

What would happen if a large neural network could acquire knowledge by imitating human language, and then put that knowledge to its own use?

There is no doubt, he said, that because such a system can take in far more data, it will surpass humans.

The worst-case scenario is that bad actors will use superintelligence to manipulate voters and win wars.

Moreover, if a superintelligent AI is allowed to set its own subgoals, and one of those subgoals is to gain more power, it will manipulate the humans who use it in order to achieve its goals.

Notably, such superintelligent AI might be realized through "mortal computation".

In his December 2022 paper "The Forward-Forward Algorithm: Some Preliminary Investigations", Hinton discusses "mortal computation" in the final section.

Paper: https://arxiv.org/pdf/2212.13345.pdf

If you want a trillion-parameter neural network to consume only a few watts of power, "mortal computation" may be the only option.

He said that if we truly give up the separation of software and hardware, we get mortal computation. This lets us use very low-power analog computation, which is exactly what the brain does.

Mortal computation could enable a new kind of computer in which AI is tightly integrated with the hardware.

This could mean that, in the future, putting GPT-3 into a toaster would cost only $1 and consume only a few watts of power.

The main problem with mortal computation is that the learning process must exploit the specific analog properties of the hardware it runs on, without knowing exactly what those properties are.

For example, the exact function relating a neuron's input to its output is not known, and the connectivity may not be known either.

This means that we cannot use something like the backpropagation algorithm to obtain the gradient.
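To see why unknown hardware rules out backpropagation, consider a learner that can only run the hardware forward. The sketch below uses weight perturbation: it estimates a gradient by nudging a weight up and down and comparing the loss from two forward passes, on a hypothetical black-box function standing in for analog hardware. This classic backprop-free technique is chosen only for illustration. It is not the method Hinton proposes, and it scales poorly because networks with many weights need many extra forward passes (or very noisy estimates).

```python
def black_box_hardware(weight: float, x: float) -> float:
    """Hypothetical stand-in for analog hardware. The learner may call it but
    never reads its formula, so it cannot differentiate through it."""
    return 1.7 * weight * x + 0.1 * (weight * x) ** 3


def loss(weight: float, data: list[tuple[float, float]]) -> float:
    """Mean squared error, computed with forward passes only."""
    return sum((black_box_hardware(weight, x) - y) ** 2 for x, y in data) / len(data)


def train_by_perturbation(data, steps: int = 200, delta: float = 1e-4, lr: float = 0.1) -> float:
    w = 0.0
    for _ in range(steps):
        # Nudge the weight both ways and compare losses (central difference).
        # No backward pass: only the black box's outputs are used.
        grad = (loss(w + delta, data) - loss(w - delta, data)) / (2 * delta)
        w -= lr * grad
    return w


# Hypothetical targets produced by the same black box with a "true" weight of 0.5.
xs = [-1.0, -0.5, 0.5, 1.0]
data = [(x, black_box_hardware(0.5, x)) for x in xs]
print(round(train_by_perturbation(data), 3))  # prints 0.5
```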

So the question is: if we can't use backpropagation, what can we do instead, given how heavily we all rely on it?

To address this, Hinton proposed a solution: the forward-forward algorithm.

The forward-forward algorithm is a promising candidate, although whether it scales to large neural networks remains to be seen.
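The forward-forward algorithm replaces backpropagation's forward and backward passes with two forward passes: one on positive (real) data and one on negative data. Each layer is trained to have high "goodness" on positive data and low goodness on negative data. One candidate goodness measure in the paper is the sum of squared activities, with the logistic function of goodness minus a threshold giving the probability that an input is positive. The pure-Python sketch below trains a single ReLU layer this way. The threshold, learning rate, initialization, and two-example dataset are assumptions chosen for illustration. It omits details from the paper, such as stacking layers with normalization between them and generating good negative data.

```python
import math

THETA = 2.0  # goodness threshold (assumed value)
LR = 0.1     # learning rate (assumed value)


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


class FFLayer:
    """One fully connected ReLU layer trained with a local forward-forward objective."""

    def __init__(self, n_in: int, n_out: int, init: float = 0.1):
        # Deterministic small positive init so every unit starts active (toy choice).
        self.w = [[init] * n_in for _ in range(n_out)]

    def forward(self, x: list[float]) -> list[float]:
        return [max(0.0, sum(wk * xk for wk, xk in zip(row, x))) for row in self.w]

    def goodness(self, x: list[float]) -> float:
        """Goodness = sum of squared activities."""
        return sum(h * h for h in self.forward(x))

    def train_step(self, x: list[float], positive: bool) -> None:
        h = self.forward(x)
        g = sum(hj * hj for hj in h)
        # Loss = -log p(correct label), with p(positive) = sigmoid(g - THETA).
        # dLoss/dg is -(1 - p) for positive data and p for negative data.
        p = sigmoid(g - THETA)
        dl_dg = -(1.0 - p) if positive else p
        # dg/dw_jk = 2 * h_j * x_k (the ReLU derivative is 0 wherever h_j == 0).
        # The update is local to this layer: nothing is propagated backward.
        for j, hj in enumerate(h):
            for k, xk in enumerate(x):
                self.w[j][k] -= LR * dl_dg * 2.0 * hj * xk


positive_data = [[1.0, 1.0, 0.0, 0.0]]  # hypothetical "real" pattern
negative_data = [[0.0, 0.0, 1.0, 1.0]]  # hypothetical "fake" pattern

layer = FFLayer(n_in=4, n_out=2)
for _ in range(100):
    for x in positive_data:
        layer.train_step(x, positive=True)   # positive pass: raise goodness
    for x in negative_data:
        layer.train_step(x, positive=False)  # negative pass: lower goodness

print(layer.goodness(positive_data[0]) > THETA > layer.goodness(negative_data[0]))  # prints True
```

Within the layer, this still follows the ordinary gradient of a local objective. What it avoids is sending error signals backward through the rest of the network.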

In Hinton's view, artificial neural networks will soon be more intelligent than biological ones, and superintelligence may arrive much sooner than expected.

The world therefore needs to reach a consensus now that the future of AI should be shaped jointly by humanity.
