AI fakes pictures of Pentagon explosion, causing stock market fluctuations, Twitter starts crowdsourcing fact-checking

- 王林 (reposted)

- 2023-06-03 22:51:02

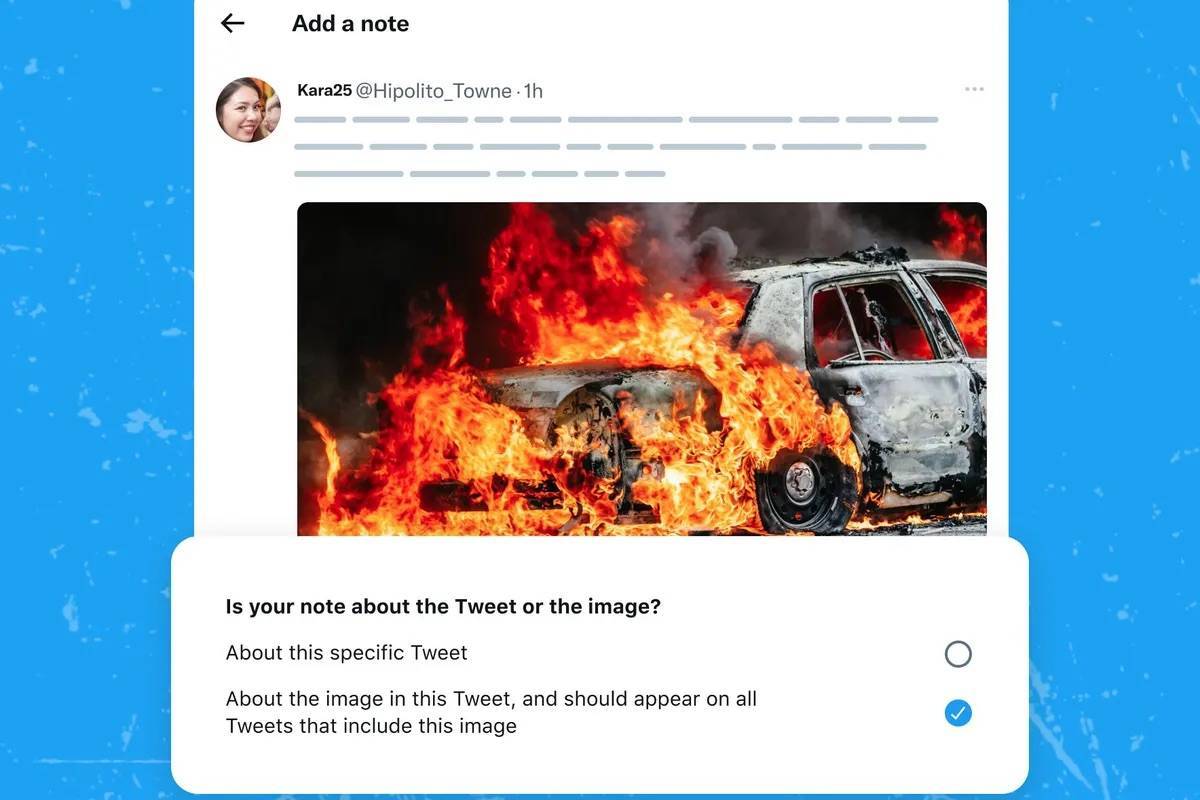

·Users can add notes next to photos and videos on Twitter, supplying context for content that may be misleading.

·Community notes will follow the image. Even if another user tweets a flagged image, the note will appear next to it.

On May 30, local time, Twitter announced that it will expand its Community Notes system to identify doctored and fake images. The new feature, which relies on unpaid, crowdsourced fact-checking to curb misinformation and hoaxes, launches a week after fake images of a Pentagon explosion went viral on the platform.

The feature allows users to add notes next to photos and videos uploaded to Twitter, providing context and additional explanation where the content is misleading.

The Community Notes feature will allow users to add notes alongside photos and videos on Twitter.

The tagging feature is currently in beta and only works for tweets containing a single image. Twitter plans to expand it in the near future to handle other forms of media, such as GIFs, videos, and multi-image tweets.

A minimum score is required to use it

The announcement comes a week after an AI-generated image of an explosion at the Pentagon was widely shared on Twitter. The image was first posted by an account that carried the blue verification checkmark (the "Blue V") and claimed to be affiliated with Bloomberg. The blue checkmark used to be proof of an account's authenticity, but since Tesla CEO Elon Musk acquired Twitter, anyone who subscribes to the paid Twitter Blue service can get it.

Twitter said in the announcement that fake AI-generated images have spread virally on the platform, and that some of them may cause confusion or fear. In some cases the images were harmless, but in the Pentagon case the fake images had a real impact on the stock market, causing a $50 billion change in market value.

AI-generated images show explosions near the Pentagon, triggering turmoil in the stock market.

Previously, Community Notes’ checks on misleading and false information were limited to text. Contributors can now add Notes on Media to annotate images or videos to provide relevant information.

The purpose of Community Notes is to create a better-informed world by empowering Twitter users to work together and add context to tweets that people might otherwise misinterpret. According to Twitter's announcement, people are 15% to 35% less likely to like or retweet a tweet when it carries a community note.

To become a Community Notes contributor, users must first demonstrate that they can do the job. Twitter assigns each potential contributor a writing impact score. Scores start at 0; a user must reach 5 points to become a contributor and 10 points to add a note to an image. Users can raise their writing impact score by rating existing notes, among other methods. Once a user becomes a contributor, they can start adding notes.

"The quality of their work may cause them to lose their contributor status." In other words, if someone enters the system by following the rules and then uses their contributor status to amplify or spread misinformation, they will be removed from the pool of contributors and must prove themselves again before they can rejoin.
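The score thresholds described above can be sketched in a few lines of Python. This is only an illustration of the rule as the article reports it (start at 0, 5 points to contribute, 10 points to annotate images). The class and constant names are hypothetical and do not come from Twitter.

```python
from dataclasses import dataclass

# Thresholds as reported in the article; the constant names are hypothetical.
CONTRIBUTOR_MIN_SCORE = 5
MEDIA_NOTE_MIN_SCORE = 10


@dataclass
class Contributor:
    handle: str
    writing_impact: int = 0  # scores start at 0

    def can_write_notes(self) -> bool:
        """True once the user has reached the contributor threshold."""
        return self.writing_impact >= CONTRIBUTOR_MIN_SCORE

    def can_write_media_notes(self) -> bool:
        """True once the user may also add notes to images."""
        return self.writing_impact >= MEDIA_NOTE_MIN_SCORE


newcomer = Contributor("new_user")
regular = Contributor("regular_user", writing_impact=5)
veteran = Contributor("veteran_user", writing_impact=10)
```

Under these assumptions, `regular` can write text notes but not image notes, while `veteran` can do both.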

Previously, a note stayed attached to a tweet as it was retweeted and quoted, but if the image in that tweet appeared in other posts, the note did not carry over. Community notes will now follow the image, Twitter said. Even if other users share an image that has been flagged as suspicious, the note will appear next to it.

Even if a flagged suspicious picture is posted elsewhere by other users, there will still be notes next to it.

"Many times, the valuable context these notes provide can apply to any tweet containing the same media, not just one specific tweet," Twitter wrote in its official announcement.

Doubts raised

However, Twitter itself is not fully confident that it can match flagged images with other similar images on the platform. The Twitter Community Notes team warned that the new feature may produce false positives or false negatives when matching images. "We will work hard to adjust this feature so that it covers more images while avoiding false matches."
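Twitter has not said how it matches images, so the following is only a generic sketch of why such matching can err in both directions. It assumes a simple average-hash comparison, a common perceptual hashing technique and not Twitter's method, applied to tiny grayscale grids. All names are hypothetical.

```python
def average_hash(pixels):
    """Return one bit per pixel: 1 if the pixel is brighter than the image mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    return [1 if p > mean else 0 for p in flat]


def hamming(a, b):
    """Count the positions at which two equal-length bit lists differ."""
    return sum(x != y for x, y in zip(a, b))


def is_match(img_a, img_b, max_distance):
    """Treat two images as the same media if their hashes differ in few bits."""
    return hamming(average_hash(img_a), average_hash(img_b)) <= max_distance


# Toy 2x2 grayscale "images" (illustrative values only).
flagged = [[10, 200], [220, 30]]
recompressed = [[14, 190], [210, 40]]  # same picture, slightly altered
edited = [[10, 200], [220, 150]]       # same picture, one region brightened
unrelated = [[200, 10], [30, 220]]     # a different picture
```

With `max_distance=0` the recompressed copy still matches, but the edited copy is missed (a false negative). Raising the threshold to 4 catches the edited copy but also matches the unrelated picture (a false positive). That is the trade-off the team describes.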

At the end of 2022, shortly after acquiring Twitter, Musk launched the Community Notes feature. It is based on the company's earlier "Birdwatch" program, which aimed to verify tweets through unpaid crowdsourcing in order to control the spread of misinformation and hoaxes.

Since Musk acquired Twitter, content moderation on the platform has been a key issue. He eliminated most of the content moderation positions in a massive layoff, even though content moderation is key to keeping social media sites running and to maintaining a platform's appeal and profitability.

Even with a full content moderation team, Twitter had difficulty solving the problem of misinformation. Trust and safety professionals say that identifying AI-generated images and videos is the area where their expertise is strongest. By expanding its notes feature, Twitter wants to bring its user base into content moderation instead, but those users are not known for their discipline or attention to detail.

Arjun Narayan, director of trust and safety at SmartNews Japan, also expressed concern about this approach in an interview. “This approach of weakening the professional team may cause trouble for the company.”
