
Google launches a full-scale counterattack! AI-rebuilt search officially announced, a new model on par with GPT-4, and direct aim taken at Microsoft and ChatGPT

- WBOY (forwarded)

- 2023-05-31 14:47:04, 1151 views

Google's counterattack has been highly anticipated.

Now Google Search is finally adding an AI conversation feature, and the waitlist is open.

Of course this is only the first step.

The big one is yet to come:

The new large language model PaLM 2 is officially unveiled, and Google claims that it surpasses GPT-4 in some tasks.

Bard has received a major update: the waitlist is gone and new languages are supported.

The Google version of the AI office assistant has also been launched and will be the first to appear in Gmail.

Google Cloud has also launched multiple basic large-scale models to provide further generative AI services for the industry...

The announcements Google packed into its latest I/O developer conference are genuinely striking.

Some netizens said directly:

The AI war is in full swing.

Some people even said:

Now I regret paying for ChatGPT.

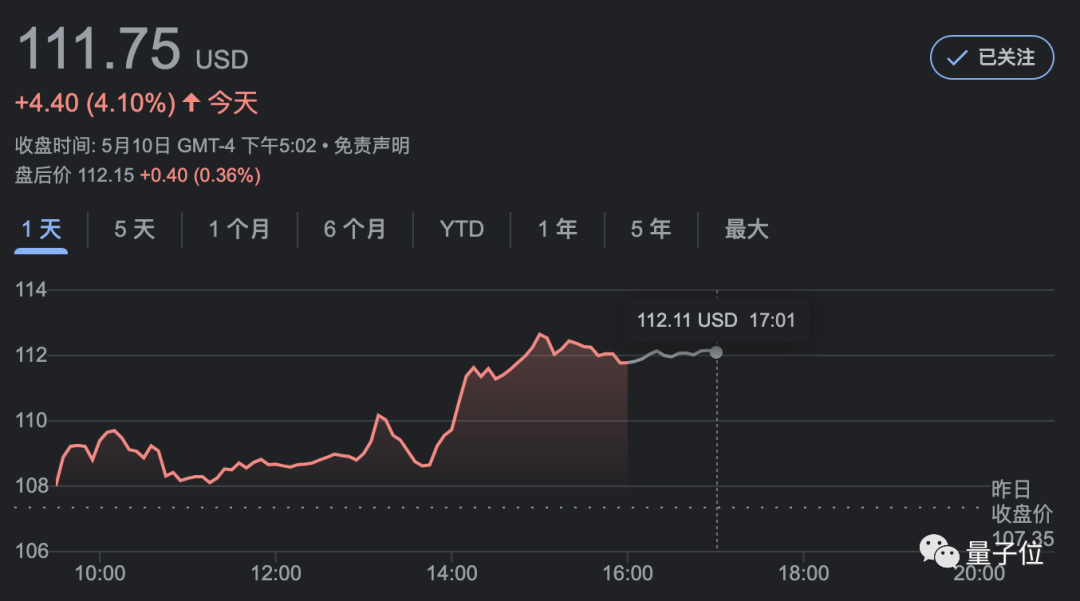

After the event, Google's stock price rose by more than 4%.

PaLM 2 surpasses GPT-4 in some tasks

There is no doubt that PaLM 2 was the centerpiece of this year's I/O conference, and Sundar Pichai introduced it personally.

The current Bard and more than 25 Google AI products and functions are now supported by PaLM 2 as the underlying technology.

As Google's most advanced large model, PaLM 2 is based on the Pathways architecture and is an upgraded version of PaLM, built on TPU v4 through JAX.

According to reports, PaLM 2 has received training in more than 100 languages, which makes it stronger in language understanding, generation and translation, and will be better at common sense reasoning and mathematical logic analysis.

PaLM 2’s data set contains a large number of papers and web pages covering many mathematical expressions, Google said. After being trained on this data, PaLM 2 can easily solve mathematical problems and even create graphs.

In terms of programming, PaLM 2 now supports 20 programming languages, such as Python, JavaScript and other common languages, as well as Prolog, Fortran and Verilog.

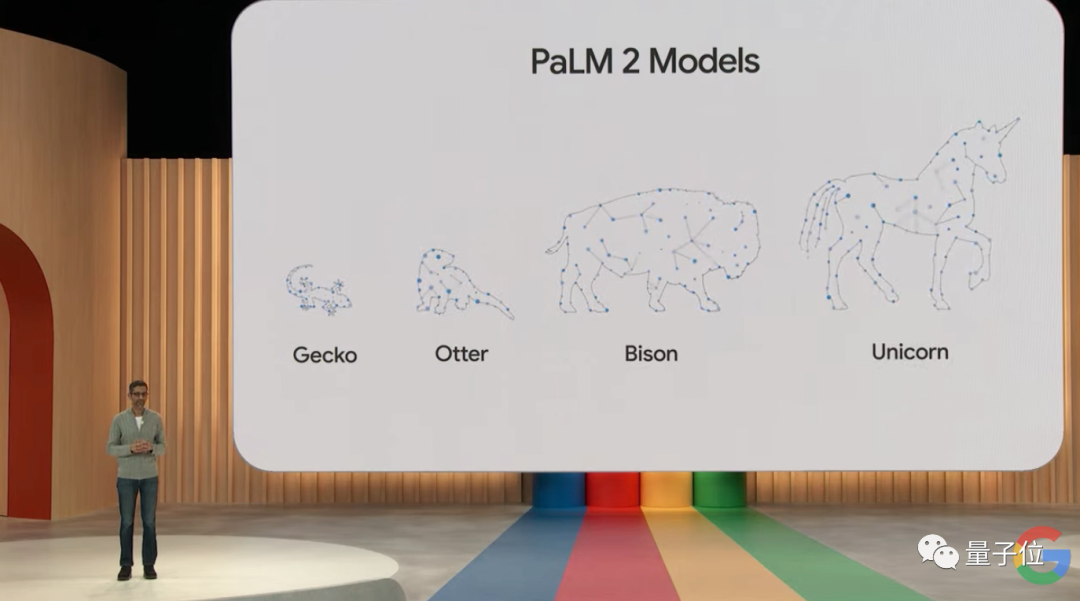

This time Google launched PaLM 2 in four different sizes.

Each size is named after an animal. The smallest is "Gecko" and the largest is "Unicorn".

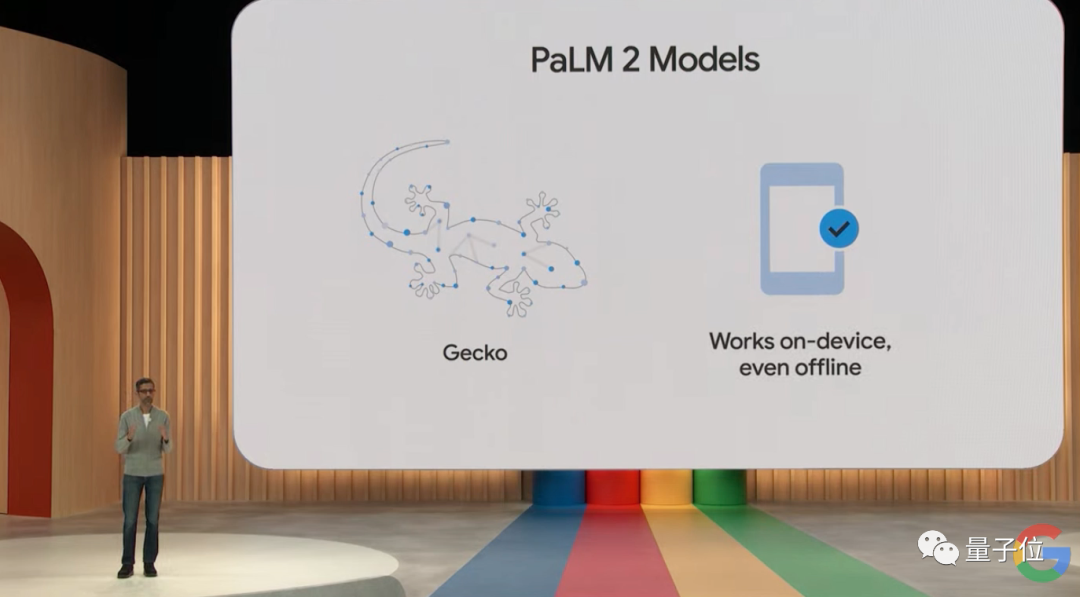

The "Gecko" version is very lightweight and can run quickly on mobile devices, including offline; it can process 20 tokens per second.

A DeepMind vice president said at the press briefing ahead of the I/O conference:

We found that bigger is not always better when it comes to models, and this is why we decided to offer a range of models in different sizes.

This means it will be easier to fine-tune PaLM 2 so that it can support more products and applications.

At the I/O conference, Google announced that more than 25 products and applications are now using the capabilities of PaLM 2.

The most visible result is Duet AI.

It can be understood as Google's answer to Microsoft 365 Copilot: an AI assistant that can be embedded in various office software.

At the conference, Google demonstrated Duet AI's capabilities in Gmail, Google Docs, and Google Sheets.

These include expanding email drafts from prompts, generating slide decks, generating images from prompts, and creating tables with one click.

Similarly, this AI assistant can also provide programming assistance. Based on Google Cloud, it can recommend and correct code blocks in real time, and answer programming questions in a conversational manner. It currently supports Go, JavaScript, Python and SQL.

In addition, based on PaLM 2, Google has also launched some large models in professional fields.

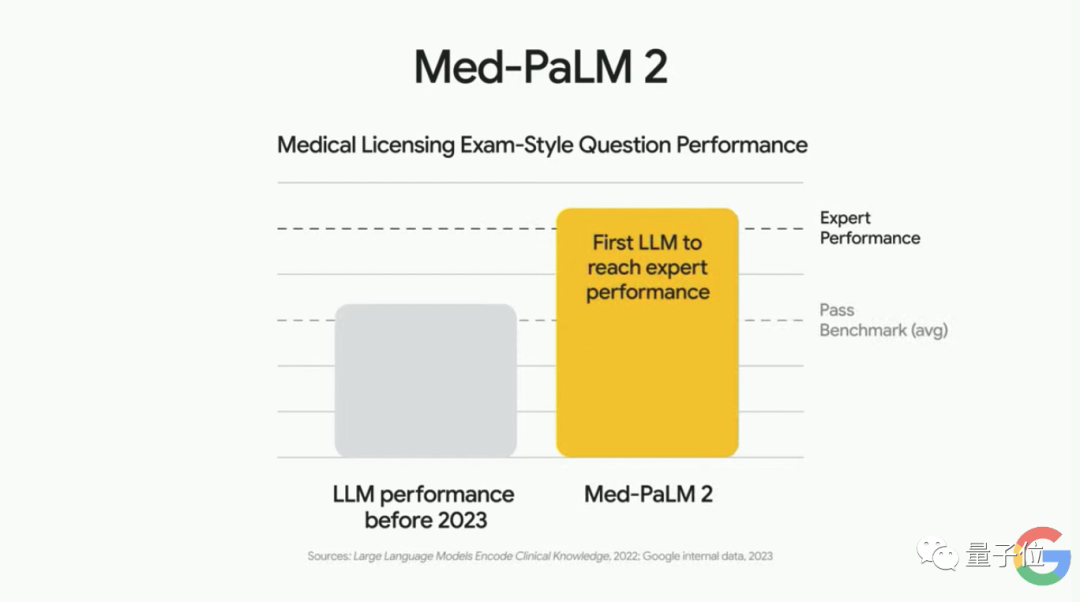

Google’s health team built Med-PaLM 2. It is said to be the first large-scale language model to reach expert level on the U.S. Medical Licensing Examination, and it is able to answer a variety of medical questions.

Google is currently trying to enhance its multi-modal capabilities, such as by autonomously examining X-rays and giving diagnoses. The model will be available to a small group of Google Cloud users later this summer.

Another specialized model is Sec-PaLM.

This is a large model for cybersecurity. It can analyze and explain potentially malicious scripts and detect the threats they pose.

So, after demonstrating PaLM 2's capabilities, it is time to talk about how to get access to it.

Google said that PaLM 2 is now available through the PaLM API, Firebase, and Colab.

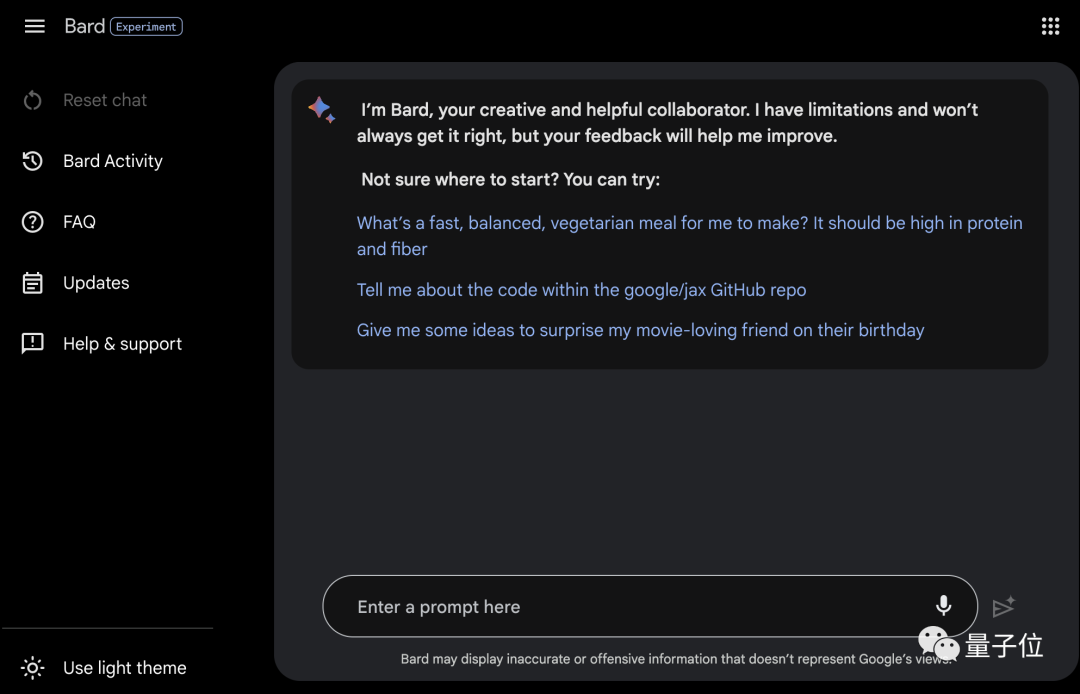

Bard fully opens up, with image support and integration with Google's own Maps

Bard, Google's rival to ChatGPT, has finally dropped its waitlist and is now open in 180 countries and regions around the world.

There is also a new dark mode, much loved by programmers (doge):

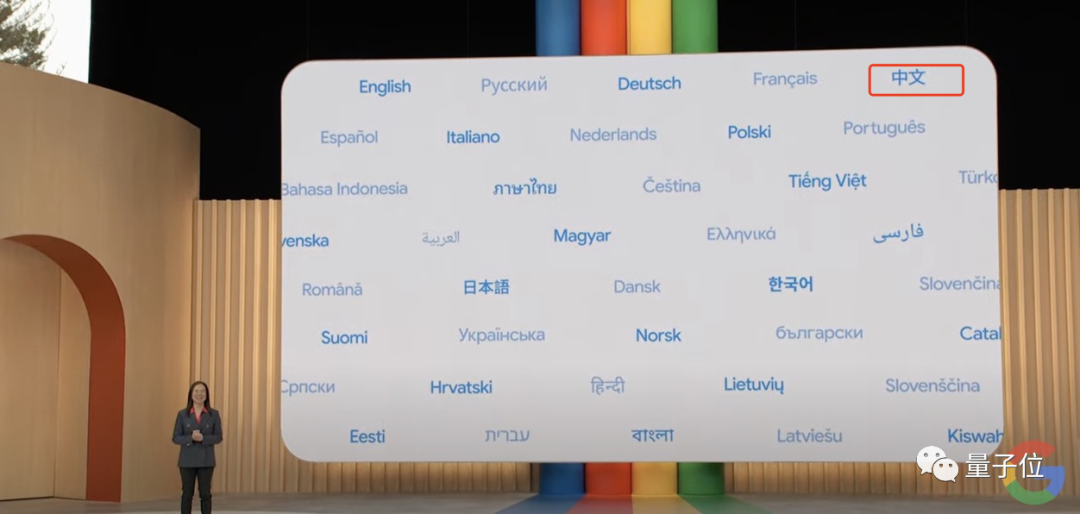

Beyond English, Bard can now converse directly in Japanese and Korean. Google said it will soon support 40 languages, including Chinese.

Starting today, Bard runs entirely on PaLM 2, so its programming and reasoning capabilities have improved considerably. Code generation, debugging, and explanation are all more professional (to a standard that programmers recognize).

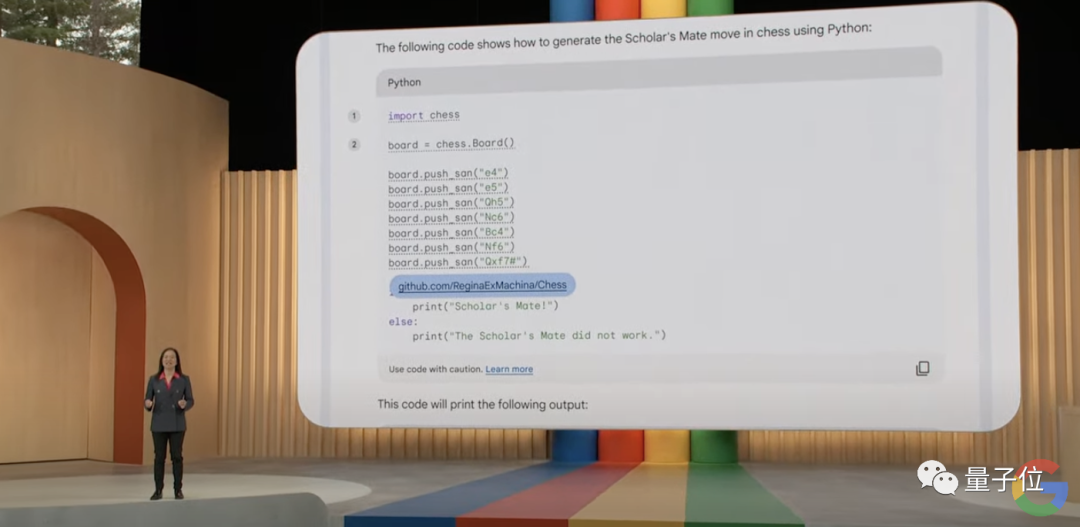

When you ask it to write Python code for the "scholar's mate" in chess and the answer draws on existing code, it provides the relevant source links for your convenience.

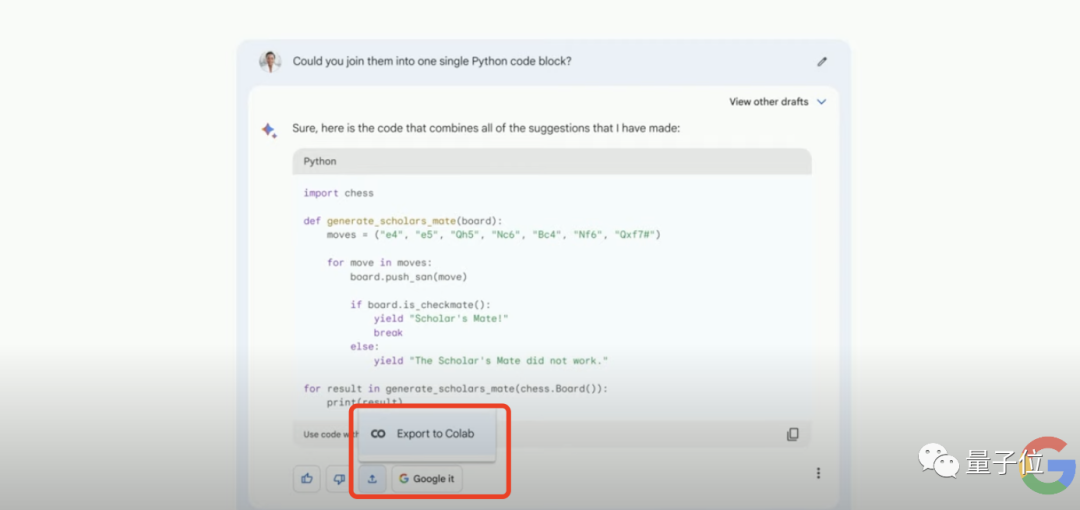

You can ask follow-up questions about a function in the code that you don't understand, ask whether it can be improved, or ask Bard to merge everything into a single code block.

However, the most surprising addition is a one-click export function, added at developers' request.

Now you can export code generated by Bard directly to Colab.

In addition to code, other content you generate with Bard, such as email drafts and tables, can also be moved directly into Gmail, Docs, and Sheets.

By the way, Bard now also includes images in its answers, which is especially handy when asking for travel tips:

Besides answering with images, Bard also accepts images as input. For example, you can upload a photo of two dogs and ask it to make up an amusing story about them:

This feature is powered by Google Lens (an AI application that lets machines "describe what they see" in a picture).

In addition to Google Lens, many of Google’s own application capabilities such as Docs, Drive, Gmail, and Maps are also integrated into Bard.

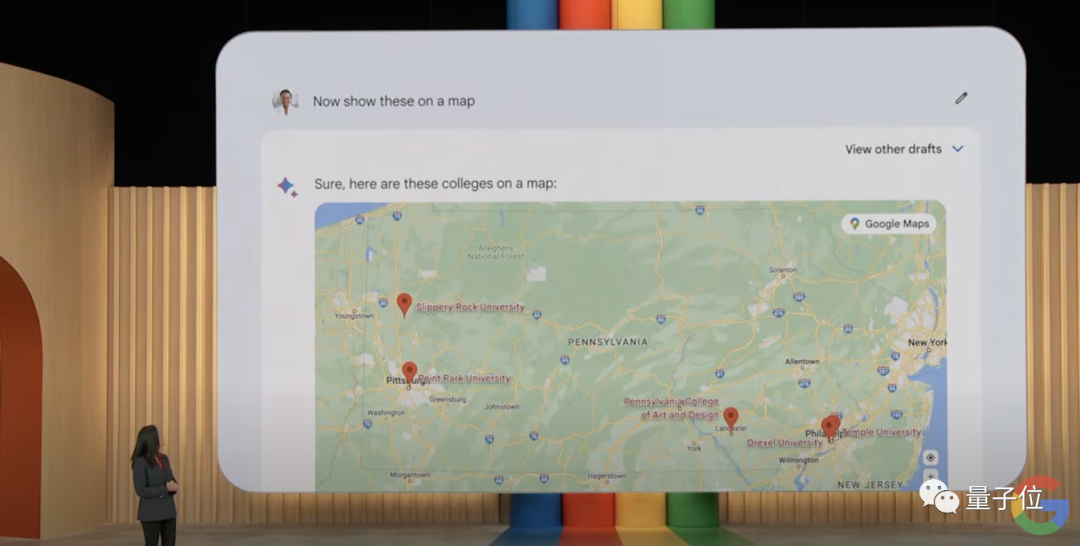

For example, in Bard's answer, you can directly use Google Maps to check the geographical locations of several universities:

For anyone who uses several Google products, it now feels as if Bard alone could serve as the single entry point.

Beyond Google's own applications, Bard is also integrating Adobe Firefly this time, so creative images with clear copyright status are "at your fingertips" through conversation:

Search is rebuilt with AI conversation

After long anticipation, Google Search has finally opened up its AI conversation capability.

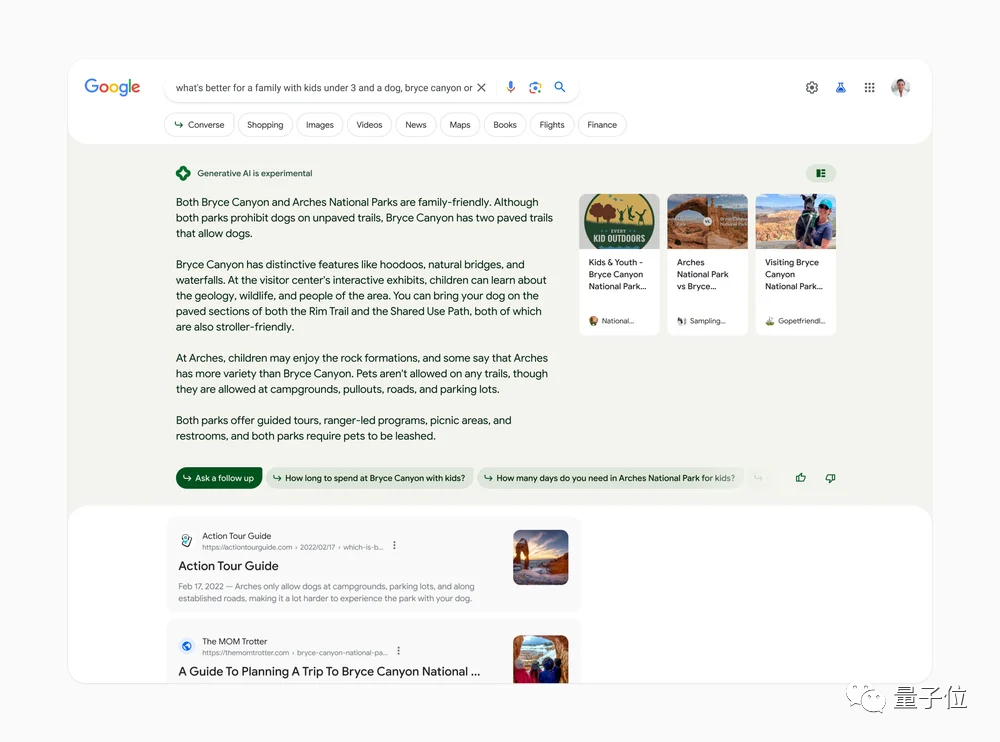

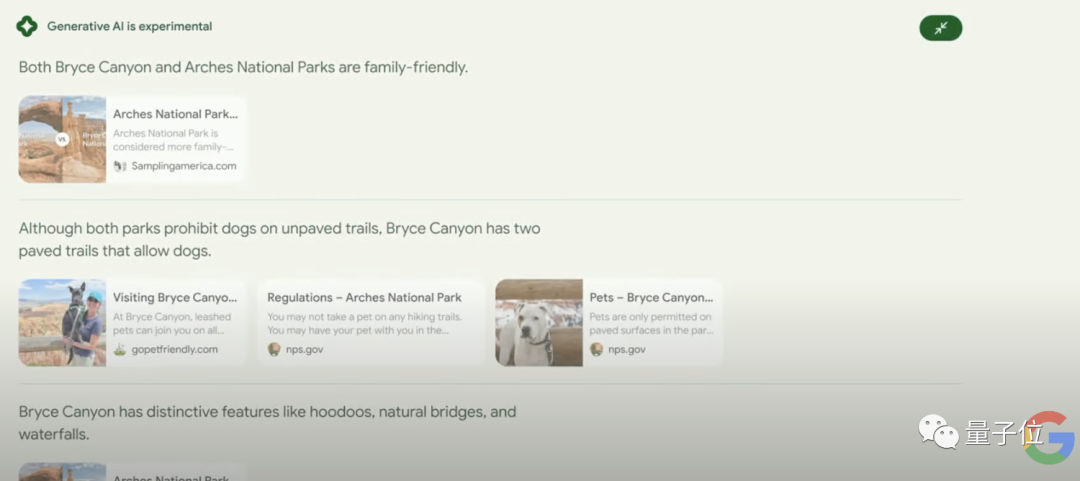

"For a family with a child under 3 years old and a dog, is it better to go to Bryce Canyon or Arches National Park?"

In the past, you might have had to break a question like this into smaller ones and sift through a lot of search results before finally arriving at an answer.

Now Google tries to answer it in one step.

As shown in the picture, Google Search does not simply copy over the answers it finds. It takes both factors, the child and the dog, into account and gives an organized answer. For example, it says:

Bryce Canyon has two loops where dogs are allowed and that are also stroller-friendly; in Arches National Park, pets are not allowed on most sections; both places require pets to be on leashes.

Each sentence comes with a source link that you can check:

In addition, it displays links to guides that users have posted on different websites.

The most important thing is that you can ask further conversational questions about its answers by clicking the "ask for a follow up" button.

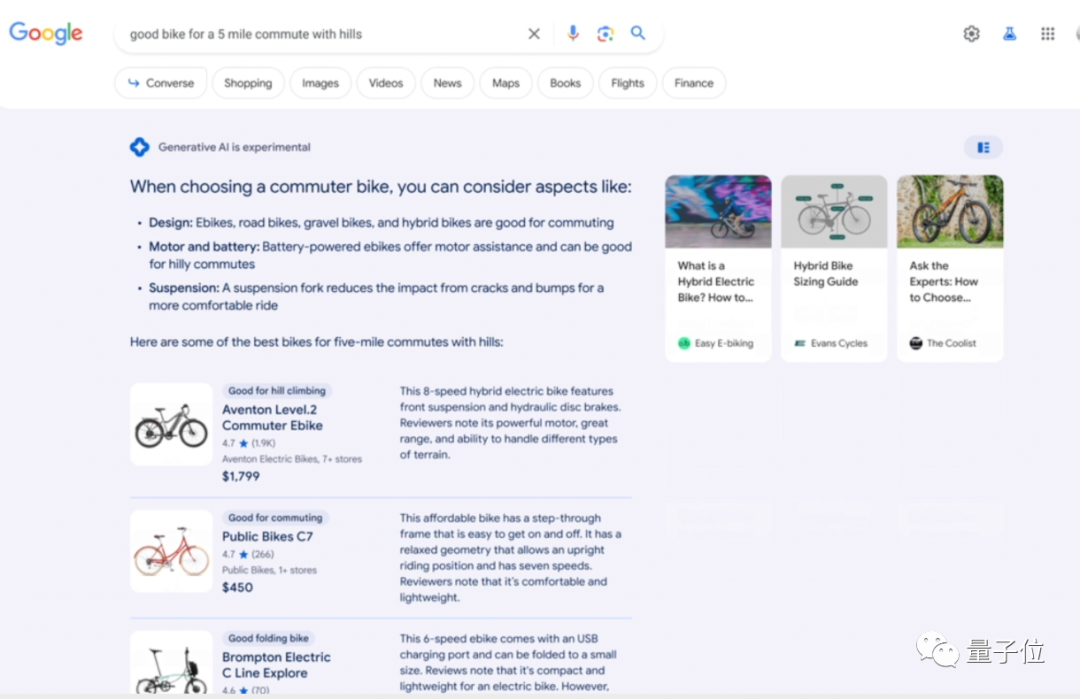

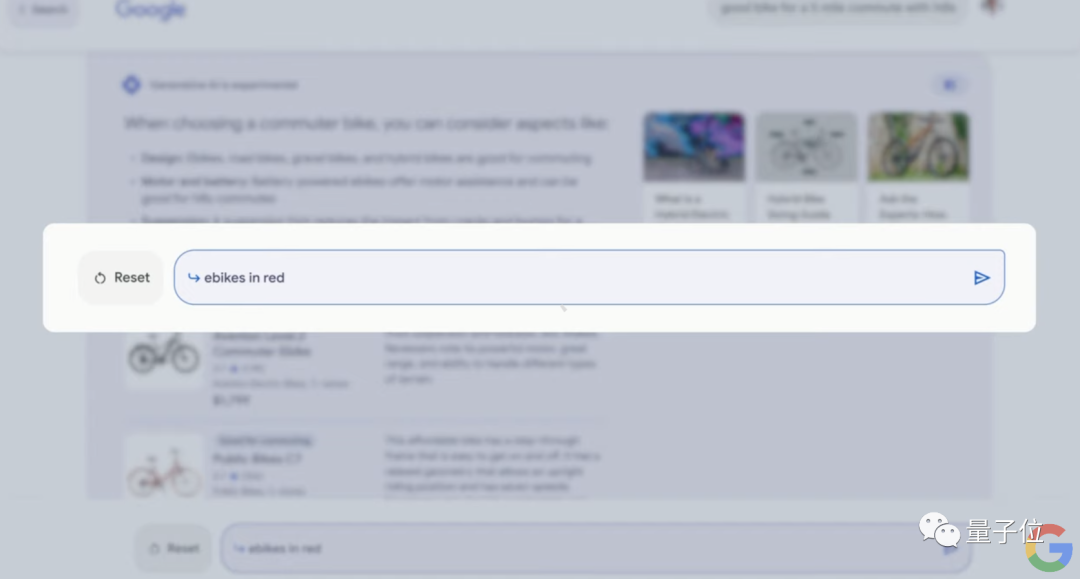

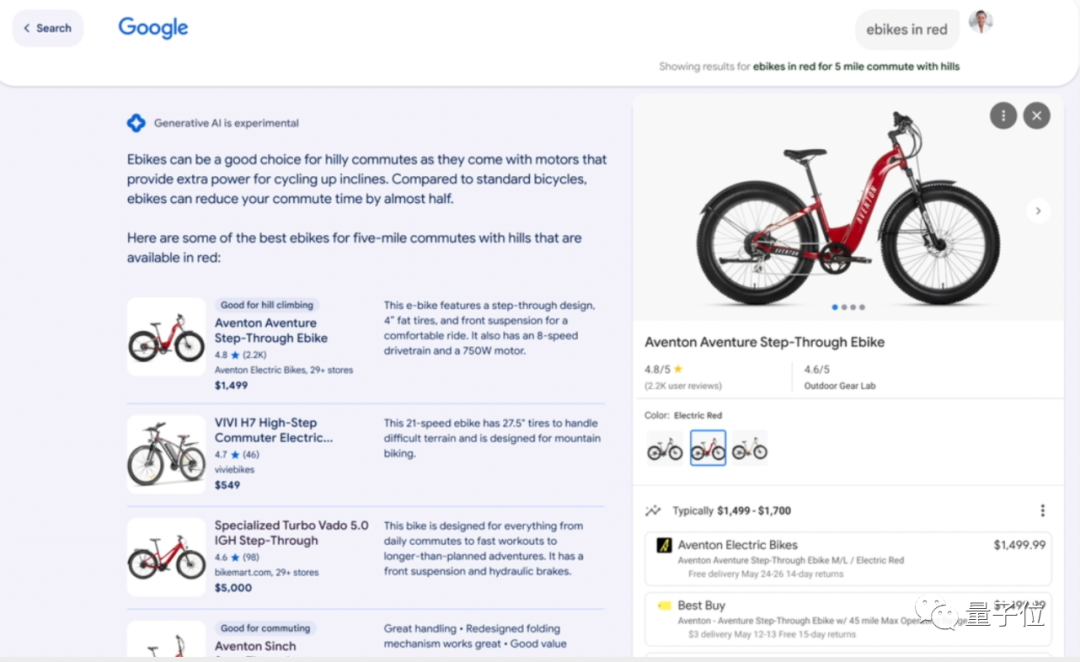

Shopping with the new Google Search is also a lot of fun, as it claims to help you make rational purchasing decisions quickly.

For example, when you want a "bike suitable for a 5-mile mountain commute", it will first tell you the important factors to consider before choosing, such as:

The first is design: electric bicycles, road bicycles, and hybrid bicycles are all suitable for commuting;

The second is the motor and battery, and the third is the suspension for shock absorption. Commuting on mountainous roads means dealing with the impact of cracks and bumps.

It then recommends suitable bikes, along with comprehensive information such as product descriptions, the latest reviews, prices, and pictures.

You can also ask further questions, for example, if you only want a red electric bicycle, it will further optimize the answer.

This feature is powered by Shopping Graph, Google's shopping comparison product, which collects and continuously updates product listings from around the world.

It is worth mentioning that Google stated plainly that the updated AI search interface will still contain ads, but don't worry: they will appear only in dedicated advertising slots and will not be mixed into your search results.

Finally, this new feature can only be applied for trial in Google Search Labs, and is limited to users in the United States.

Three basic models are launched on Google Cloud

At this year’s I/O conference, the content of Google Cloud is also eye-catching.

After updating a large number of AI capabilities, Google has launched three new large models for its cloud machine learning platform Vertex AI:

Codey: text-to-code, help programmers write code

Imagen: text-to-image, generate high-quality images

Chirp: speech-to-text, facilitate communication

The capabilities of these three models were in fact demonstrated at today's conference, for example in code generation and smart editing in Google Photos.

Vertex AI also now offers an embeddings API for text and images. By converting text and image data into multi-dimensional numerical vectors that capture semantic relationships, this tool enables developers to create more interesting applications.
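To make the idea of "semantic relationships between vectors" concrete, here is a minimal sketch of how embeddings are typically compared, using cosine similarity. It does not call the Vertex AI API; the three-dimensional vectors and the helper names `cosine_similarity` and `most_similar` are invented purely for illustration, and real embeddings have hundreds of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Hypothetical toy embeddings, made up for this example only.
embeddings = {
    "red electric bicycle": [0.9, 0.1, 0.2],
    "crimson e-bike": [0.8, 0.2, 0.3],
    "national park trail": [0.1, 0.9, 0.4],
}


def most_similar(query, table):
    """Return the key other than `query` whose vector is closest to it."""
    q = table[query]
    candidates = (key for key in table if key != query)
    return max(candidates, key=lambda key: cosine_similarity(q, table[key]))


print(most_similar("red electric bicycle", embeddings))
```

With these toy vectors, the two bicycle phrases point in nearly the same direction, so they score as far more similar to each other than either does to the trail phrase. Semantic search and recommendation features built on embeddings generally rely on this kind of comparison.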

Google claims they are the first to incorporate RLHF capabilities into an end-to-end machine learning platform in a managed service. The advantage is that it allows companies to quickly train reward models with RLHF to fine-tune basic models, which is critical to improving the accuracy of large models in industry applications.
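For readers unfamiliar with reward models, the sketch below shows the pairwise preference loss that is commonly used to train them in RLHF. This is an assumption about the general technique, not a description of how Vertex AI implements it; the function name `pairwise_preference_loss` and the example scores are hypothetical.

```python
import math


def pairwise_preference_loss(score_chosen, score_rejected):
    """Loss -log(sigmoid(chosen - rejected)) for one human preference pair.

    The loss is small when the reward model scores the human-preferred
    answer higher than the rejected one, and large otherwise.
    """
    diff = score_chosen - score_rejected
    # Numerically stable form of log(1 + exp(-diff)).
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))


# Hypothetical reward-model scores for two answers to the same prompt.
print(pairwise_preference_loss(2.0, 0.5))  # model agrees with the human label
print(pairwise_preference_loss(0.5, 2.0))  # model disagrees, so the loss is larger
```

Minimizing this loss over many labeled pairs pushes the reward model to rank answers the way human raters do. The resulting scores can then serve as the training signal when fine-tuning the base model.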

In addition to models, Google Cloud also launched the next generation A3 GPU supercomputer for training. By combining A3 virtual machines with Nvidia H100, Google Cloud can provide greater computing throughput and bandwidth, allowing enterprises to develop machine learning models faster.

Beyond all this, Google also brought new hardware, such as its first foldable phone, priced at US$1,799 (approximately RMB 12,000), as well as Android 14 with integrated AI features (such as suggested message replies). They are not covered one by one here.

Overall, Google packed this, the 15th I/O conference, with a great deal of substance.

It is worth mentioning that the speaker presenting on stage this time was no longer Jeff Dean, whose title changed just a few days ago.

As the most representative executive of Google AI in the past, where will he be in the AI 2.0 wave?

Whether Google can still catch up in the field of large models and AI search is also worth looking forward to.

Are you satisfied with Google’s counterattack this time?
