OpenAI CEO attends U.S. Senate hearing to discuss how to better regulate AI

- 王林

- 2023-05-28 17:52:55

Google is going all in on generative artificial intelligence. At its annual I/O conference, the company announced plans to embed AI tools into nearly all of its products, from Google Docs to coding and online search.

Google’s announcement is significant. Billions of people will now have access to powerful, cutting-edge artificial intelligence models that help them complete tasks ranging from generating text to answering questions to writing and debugging code. As MIT Technology Review editor-in-chief Mat Honan wrote in his analysis of the I/O conference, it's clear that artificial intelligence is now a core product of Google.

(Source: STEPHANIE ARNET/MITTR)

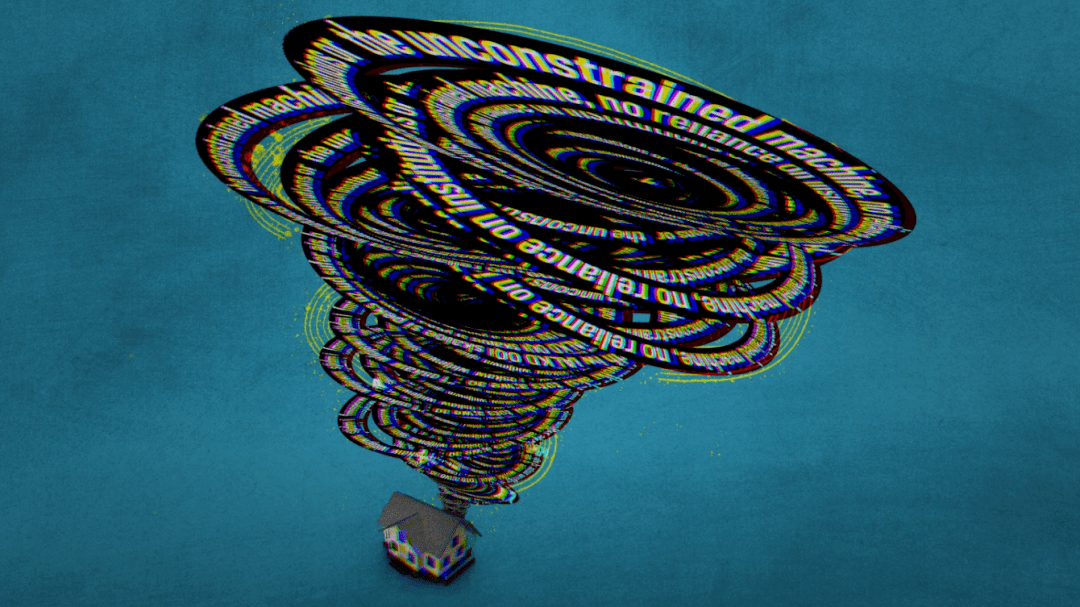

Google is rolling these new features into its products gradually, but sooner or later problems will surface. The company hasn't solved any of the common problems with these AI models: they still like to make things up; they are still easily manipulated into breaking the rules humans set for them; they are still vulnerable to attacks; and almost nothing stops them from being used as tools for generating disinformation, scams and spam.

These types of AI tools are relatively new, so they remain largely unregulated. But that is unsustainable. As the excitement around ChatGPT cools, calls for regulation keep growing, and regulators are beginning to confront the challenges the technology poses.

U.S. regulators are trying to find a way to govern these powerful artificial intelligence tools. This week, OpenAI CEO Sam Altman testified before the U.S. Senate (after an “educational” dinner with politicians the night before). The hearing comes a week after U.S. Vice President Kamala Harris met with the CEOs of Alphabet, Microsoft, OpenAI and Anthropic.

Picture | OpenAI founder Sam Altman (Source: file photo)

In a statement, Harris said these companies have an ethical and legal responsibility to ensure the safety of their products. Senate Majority Leader Chuck Schumer, D-N.Y., has proposed legislation to regulate artificial intelligence, which could include creating a new agency to enforce the rules.

Jennifer King, a privacy and data policy researcher at Stanford University’s Institute for Human-Centered Artificial Intelligence, said: “Everyone wants to be seen doing something. People are feeling very anxious about where all this development is going.”

King said it would be difficult to get bipartisan support for a new AI bill: “It will depend on the extent to which (generative AI) is seen as a real societal-level threat.” However, she pointed out that Federal Trade Commission Chair Lina Khan has taken positive action. Earlier this month, Khan wrote an op-ed calling for artificial intelligence to be regulated now, to avoid repeating the mistakes that came from being too lax with the technology industry in the past. She signaled that U.S. regulators are more likely to use tools already in their legal toolkit, such as antitrust and commercial practices laws.

Meanwhile, in Europe, lawmakers are moving closer to a final agreement on the Artificial Intelligence Act (AI Act). Members of the European Parliament last week signed off on a draft regulation calling for a ban on the use of facial recognition technology in public places. It also bans predictive policing, emotion recognition, and the indiscriminate scraping of biometric data online.

Parliament also hopes to introduce more rules for generative artificial intelligence, and the European Union will strengthen supervision and require more transparency from companies developing large AI models. Potential measures include labeling AI-generated content, publishing summaries of the copyrighted data used in model training, and establishing safeguards to prevent models from generating illegal content.

But here’s the thing: the EU is still a long way from implementing these rules. Many of the proposed elements of the Artificial Intelligence Act will not make it into the final version. Difficult negotiations remain between Parliament, the European Commission and EU member states. It will be several years before we see the Artificial Intelligence Act come into effect.

Meanwhile, some well-known technology figures are pushing in the opposite direction. Microsoft chief economist Michael Schwarz said at a recent event that we should wait until we see "meaningful harm" from artificial intelligence before regulating it. He likened it to driver’s licenses, which were introduced only after many people had been killed in accidents. “Only with a certain level of harm can we truly understand the problem,” he said.

This statement is outrageous. The harms caused by artificial intelligence have been well documented for years: bias and discrimination, AI-generated fake news, and scams. Other AI systems have led to innocent people being arrested, and thousands of people have been pushed into poverty by false accusations of fraud. As Google touts its next steps and generative AI becomes more and more integrated into our society, these harms could grow exponentially.

The question we should ask ourselves is: How much harm are we willing to see? And my answer is: We’ve seen enough.

Support: Ren

Original text:

https://www.technologyreview.com/2023/05/16/1073167/how-do-you-solve-a-problem-like-out-of-control-ai/
