As machine learning experiments become more complex, their carbon footprints are swelling. Now, researchers have calculated the carbon cost of training a series of models in cloud computing data centers in different locations. Their findings could help researchers reduce emissions from work that relies on artificial intelligence (AI).

The research team found significant differences in emissions across geographic locations. Jesse Dodge, a machine learning researcher at the Allen Institute for AI in Seattle, Washington, and co-lead of the study, said that for the same AI experiment, “the most efficient regions produced about a third of the emissions of the least efficient.”

Priya Donti, a machine learning researcher at Carnegie Mellon University in Pittsburgh, Pennsylvania, and co-founder of the Climate Change AI group, said that until now there have been no good tools for measuring the emissions produced by cloud-based AI.

“This is great work that contributes to important conversations about how to manage machine learning workloads to reduce emissions,” she said.

Location Matters

Dodge and his collaborators, including researchers from Microsoft, monitored power consumption while training 11 common AI models, ranging from the language models that power Google Translate to vision algorithms that label images automatically. They combined these data with estimates of how emissions from the electricity grids powering 16 Microsoft Azure cloud computing servers changed over time, to calculate the carbon emissions of training in a range of locations.
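At its core, this kind of estimate multiplies the energy drawn in each time interval by the grid's carbon intensity during that interval and sums the products. The Python sketch below illustrates that arithmetic under stated assumptions: hourly energy readings in kWh and hourly intensity figures in grams of CO2 per kWh. The function name and all numbers are hypothetical, and this is not the study's own code.

```python
from typing import Sequence


def training_emissions_kg(energy_kwh: Sequence[float],
                          intensity_g_per_kwh: Sequence[float]) -> float:
    """Estimate operational CO2 emissions (kg) of a training run.

    Hypothetical helper, not from the study. Assumes energy_kwh[i] is the
    electricity the hardware drew during interval i and intensity_g_per_kwh[i]
    is the grid's carbon intensity (gCO2/kWh) during the same interval.
    Data-center overhead and hardware manufacturing are not included.
    """
    if len(energy_kwh) != len(intensity_g_per_kwh):
        raise ValueError("need one carbon-intensity value per energy interval")
    # Sum energy x intensity over all intervals, giving grams of CO2.
    grams = sum(e * c for e, c in zip(energy_kwh, intensity_g_per_kwh))
    return grams / 1000.0  # grams -> kilograms


# Hypothetical example: the same 3-hour, 2 kWh-per-hour job on two grids.
print(training_emissions_kg([2.0, 2.0, 2.0], [400, 450, 500]))  # 2.7 (fossil-heavy grid)
print(training_emissions_kg([2.0, 2.0, 2.0], [20, 25, 30]))     # 0.15 (hydro-heavy grid)
```

Because the energy figures are identical in this made-up example, the eighteen-fold gap comes entirely from the carbon intensity of the grid.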

Facilities in different locations have different carbon footprints because power sources vary around the world and demand fluctuates. The team found that training BERT, a common machine learning language model, in data centers in the central United States or Germany would emit 22–28 kilograms of carbon dioxide, depending on the time of year. That is more than double the emissions from the same experiment run in Norway, which gets most of its electricity from hydroelectric power, or in France, which relies mostly on nuclear power.

The time of day at which experiments run also matters. For example, Dodge said, training AI at night in Washington, when the state's electricity comes from hydropower alone, would result in lower emissions than training during the day, when electricity also comes from gas-fired power stations. He presented the results last month at the Association for Computing Machinery Conference on Fairness, Accountability, and Transparency (FAccT) in Seoul.
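Time-of-day effects like this suggest a simple "flexible start" strategy: given an hourly forecast of grid carbon intensity, launch a fixed-length job in the window with the lowest total intensity. The following is a minimal Python sketch, assuming constant power draw (so emissions are proportional to summed intensity) and a made-up forecast; the function name is illustrative only.

```python
from typing import Sequence, Tuple


def best_start_hour(intensity: Sequence[float], job_hours: int) -> Tuple[int, float]:
    """Pick the start hour whose contiguous job_hours-long window has the
    lowest summed carbon intensity (hypothetical helper).

    Assumes constant power draw, so emissions are proportional to the sum.
    Returns (start_index, summed_intensity); ties go to the earliest start.
    """
    if not 0 < job_hours <= len(intensity):
        raise ValueError("job_hours must be between 1 and len(intensity)")
    starts = range(len(intensity) - job_hours + 1)
    # min() returns the first start with the smallest window sum.
    best = min(starts, key=lambda s: sum(intensity[s:s + job_hours]))
    return best, sum(intensity[best:best + job_hours])


# Hypothetical 8-hour forecast in gCO2/kWh.
forecast = [120, 110, 100, 90, 300, 350, 320, 130]
print(best_start_hour(forecast, 3))  # (1, 300)
```

Recomputing each window sum keeps the sketch easy to check; a sliding-window update would make it linear in the forecast length if that ever mattered.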

The emissions from training AI models also vary widely. Training the image classifier DenseNet produced about the same CO2 emissions as charging a mobile phone, whereas training a medium-sized language model called Transformer (which is much smaller than the popular language model GPT-3, made by research firm OpenAI in San Francisco, California) produced about the same amount of emissions as a typical American household produces in a year. Moreover, the team ran only 13 percent of the Transformer's training process; training it fully would produce emissions "on the order of magnitude of burning an entire rail car full of coal," Dodge said.

He added that the emissions figures are also underestimates, because they do not include factors such as the electricity used for data center overhead or the emissions from manufacturing the necessary hardware. Ideally, Donti said, the numbers should also include error bars to account for the potentially significant uncertainty in grid emissions at any given time.

Greener Choice

Dodge hopes the research can help scientists choose, all other things being equal, the data centers that minimize the emissions of their experiments. "That decision turns out to be one of the most impactful things one can do in the discipline," he said. As a result of this work, Microsoft now provides researchers who use its Azure services with information about the power consumption of its hardware.

Chris Preist, who studies the impact of digital technology on environmental sustainability at the University of Bristol, UK, said the onus for reducing emissions should lie with cloud providers rather than researchers. Providers can ensure that the data centers with the lowest carbon intensity at any given time are used the most, he said. Donti added that they could also adopt flexible policies that allow machine learning runs to start and stop at times when emissions are lower.
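A start-and-stop policy of this kind can be approximated by a "pause and resume" scheduler: if a job can be checkpointed and restarted freely, the cleanest schedule simply runs in the lowest-intensity hours before a deadline, whether or not those hours are contiguous. A minimal Python sketch under those assumptions (constant power, no checkpointing overhead, a made-up forecast, and a hypothetical function name):

```python
from typing import List, Sequence


def pause_resume_schedule(intensity: Sequence[float], job_hours: int) -> List[int]:
    """Return the hours (in chronological order) in which to run a job that
    can pause and resume at no cost (hypothetical helper).

    With constant power draw, running in the job_hours lowest-intensity
    hours minimizes total emissions; ties favor earlier hours.
    """
    if not 0 < job_hours <= len(intensity):
        raise ValueError("job_hours must be between 1 and len(intensity)")
    # sorted() is stable, so equal intensities keep their original hour order.
    cleanest = sorted(range(len(intensity)), key=lambda h: intensity[h])[:job_hours]
    return sorted(cleanest)


forecast = [120, 90, 300, 350, 80, 320, 100, 400]  # hypothetical gCO2/kWh
hours = pause_resume_schedule(forecast, 3)
print(hours)                            # [1, 4, 6]
print(sum(forecast[h] for h in hours))  # 270
```

For the same forecast, the best contiguous 3-hour window (hours 4 to 6) sums to 500, so pausing and resuming cuts the summed intensity to 270 in this illustration; in practice, checkpointing costs and deadlines narrow the gain.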

Dodge said the tech companies conducting the largest experiments should bear the greatest responsibility for being transparent about emissions and for minimizing or offsetting them. He noted that machine learning is not always harmful to the environment. It can help to design efficient materials, model the climate, and track deforestation and endangered species. Still, AI’s growing carbon footprint is becoming a major cause of concern for some scientists. Dodge said that while some research groups are working on tracking carbon emissions, transparency "has not yet developed into a community norm."

"The whole point of this effort is to try to bring transparency to this subject because it's sorely lacking right now," he said.

References:

1. Dodge, J. et al. Preprint at https://arxiv.org/abs/2206.05229 (2022).


One Prompt Can Bypass Every Major LLM's SafeguardsApr 25, 2025 am 11:16 AM

One Prompt Can Bypass Every Major LLM's SafeguardsApr 25, 2025 am 11:16 AMHiddenLayer's groundbreaking research exposes a critical vulnerability in leading Large Language Models (LLMs). Their findings reveal a universal bypass technique, dubbed "Policy Puppetry," capable of circumventing nearly all major LLMs' s

5 Mistakes Most Businesses Will Make This Year With SustainabilityApr 25, 2025 am 11:15 AM

5 Mistakes Most Businesses Will Make This Year With SustainabilityApr 25, 2025 am 11:15 AMThe push for environmental responsibility and waste reduction is fundamentally altering how businesses operate. This transformation affects product development, manufacturing processes, customer relations, partner selection, and the adoption of new

H20 Chip Ban Jolts China AI Firms, But They've Long Braced For ImpactApr 25, 2025 am 11:12 AM

H20 Chip Ban Jolts China AI Firms, But They've Long Braced For ImpactApr 25, 2025 am 11:12 AMThe recent restrictions on advanced AI hardware highlight the escalating geopolitical competition for AI dominance, exposing China's reliance on foreign semiconductor technology. In 2024, China imported a massive $385 billion worth of semiconductor

If OpenAI Buys Chrome, AI May Rule The Browser WarsApr 25, 2025 am 11:11 AM

If OpenAI Buys Chrome, AI May Rule The Browser WarsApr 25, 2025 am 11:11 AMThe potential forced divestiture of Chrome from Google has ignited intense debate within the tech industry. The prospect of OpenAI acquiring the leading browser, boasting a 65% global market share, raises significant questions about the future of th

How AI Can Solve Retail Media's Growing PainsApr 25, 2025 am 11:10 AM

How AI Can Solve Retail Media's Growing PainsApr 25, 2025 am 11:10 AMRetail media's growth is slowing, despite outpacing overall advertising growth. This maturation phase presents challenges, including ecosystem fragmentation, rising costs, measurement issues, and integration complexities. However, artificial intell

'AI Is Us, And It's More Than Us'Apr 25, 2025 am 11:09 AM

'AI Is Us, And It's More Than Us'Apr 25, 2025 am 11:09 AMAn old radio crackles with static amidst a collection of flickering and inert screens. This precarious pile of electronics, easily destabilized, forms the core of "The E-Waste Land," one of six installations in the immersive exhibition, &qu

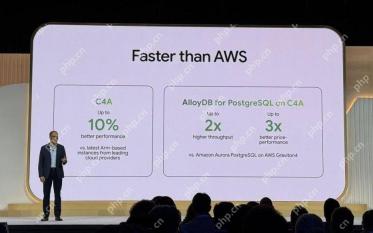

Google Cloud Gets More Serious About Infrastructure At Next 2025Apr 25, 2025 am 11:08 AM

Google Cloud Gets More Serious About Infrastructure At Next 2025Apr 25, 2025 am 11:08 AMGoogle Cloud's Next 2025: A Focus on Infrastructure, Connectivity, and AI Google Cloud's Next 2025 conference showcased numerous advancements, too many to fully detail here. For in-depth analyses of specific announcements, refer to articles by my

Talking Baby AI Meme, Arcana's $5.5 Million AI Movie Pipeline, IR's Secret Backers RevealedApr 25, 2025 am 11:07 AM

Talking Baby AI Meme, Arcana's $5.5 Million AI Movie Pipeline, IR's Secret Backers RevealedApr 25, 2025 am 11:07 AMThis week in AI and XR: A wave of AI-powered creativity is sweeping through media and entertainment, from music generation to film production. Let's dive into the headlines. AI-Generated Content's Growing Impact: Technology consultant Shelly Palme

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

Atom editor mac version download

The most popular open source editor

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

SublimeText3 English version

Recommended: Win version, supports code prompts!

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.