Home >Technology peripherals >AI >100:87: GPT-4 mind crushes humans! The three major GPT-3.5 variants are difficult to defeat

100:87: GPT-4 mind crushes humans! The three major GPT-3.5 variants are difficult to defeat

- WBOYWBOYWBOYWBOYWBOYWBOYWBOYWBOYWBOYWBOYWBOYWBOYWBforward

- 2023-05-11 23:43:131596browse

GPT-4’s theory of mind has surpassed humans!

Recently, experts from Johns Hopkins University discovered that GPT-4 can use chain of thought reasoning and step-by-step thinking, greatly improving its theory of mind performance.

## Paper address: https://arxiv.org/abs/2304.11490

In some tests, the human level is about 87%, and GPT-4 has reached the ceiling level of 100%!

Furthermore, with appropriate prompts, all RLHF-trained models can achieve over 80% accuracy.

We all know that about problems in daily life scenarios, A lot of big language models aren't very good at it.

Meta chief AI scientist and Turing Award winner LeCun once asserted: "On the road to human-level AI, large language models are a crooked road. You know, even a single Pet cats and dogs have more common sense and understanding of the world than any LLM."

Also Scholars believe that humans are biological entities that evolved with bodies and need to function in the physical and social world to complete tasks. However, large language models such as GPT-3, GPT-4, Bard, Chinchilla, and LLaMA do not have bodies.

So unless they grow human bodies and senses, and have a lifestyle with human purposes. Otherwise they simply wouldn't understand language the way humans do.

In short, although the excellent performance of large language models in many tasks is amazing, tasks that require reasoning are still difficult for them.

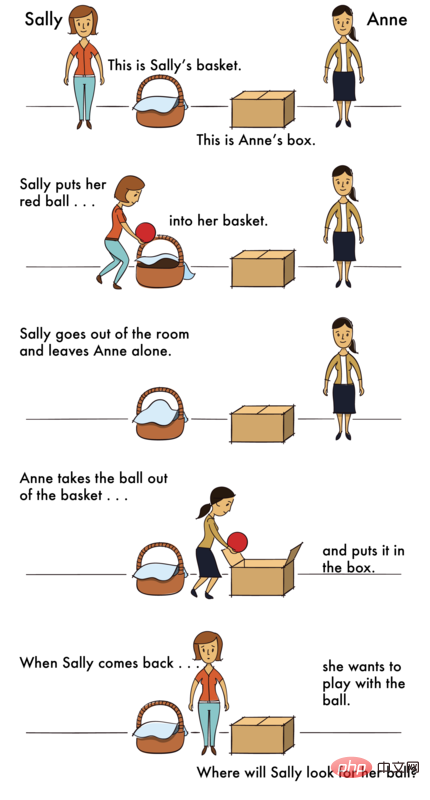

What is particularly difficult is theory of mind (ToM) reasoning.

Why is ToM reasoning so difficult?Because in ToM tasks, LLM needs to make inferences based on unobservable information (such as the hidden mental state of others). This information needs to be inferred from the context and cannot be derived from the surface text. Parse it out.

However, for LLM, the ability to reliably perform ToM reasoning is very important. Because ToM is the basis of social understanding, only with ToM ability can people participate in complex social exchanges and predict the actions or reactions of others.

If AI cannot learn social understanding and get the various rules of human social interaction, it will not be able to work better for humans and assist humans in various tasks that require reasoning. Provide valuable insights.

How to do it?

Experts have found that through a kind of "context learning", the reasoning ability of LLM can be greatly enhanced.

For language models with more than 100B parameters, as long as a specific few-shot task demonstration is input, the model performance is significantly enhanced.

Also, simply instructing models to think step-by-step will enhance their inference performance, even without demonstrations.

Why are these prompt techniques so effective? There is currently no theory that can explain it.

Large language model contestantsBased on this background, scholars from Johns Hopkins University evaluated the performance of some language models in ToM tasks and explored We examine whether their performance can be improved through methods such as step-by-step thinking, few-shot learning, and thought chain reasoning.

The contestants are the latest four GPT models from the OpenAI family - GPT-4 and three variants of GPT-3.5, Davinci-2, Davinci-3 and GPT-3.5-Turbo.

· Davinci-2 (API name: text-davinci-002) is trained with supervised fine-tuning on human-written demos.

· Davinci-3 (API name: text-davinci-003) is an upgraded version of Davinci-2, which uses human feedback reinforcement learning optimized by approximate policies (RLHF) for further training.

· GPT-3.5-Turbo (the original version of ChatGPT), fine-tuned and trained on both human-written demos and RLHF, then further optimized for conversations .

· GPT-4 is the latest GPT model as of April 2023. Few details have been released about the size and training methods of GPT-4, however, it appears to have undergone more intensive RLHF training and is therefore more consistent with human intent.

Experimental Design: Humans and Models are OK

How to examine these models? The researchers designed two scenarios, one is a control scenario and the other is a ToM scenario.

The control scene refers to a scene without any agent, which can be called a "Photo scene".

The ToM scene describes the psychological state of the people involved in a certain situation.

The problems in these scenarios are almost the same in difficulty.

Humanity

The first to accept the challenge is human beings.

Human participants were given 18 seconds for each scenario.

Subsequently, a question appears on a new screen, and the human participant answers by clicking "Yes" or "No."

In the experiment, the Photo and ToM scenes were mixed and presented in random order.

For example, the question of the Photo scene is as follows -

Scenario: "A map shows the floor plan of the first floor. I gave it yesterday The architect sent a copy, but the kitchen door was omitted at that time. The kitchen door was just added to the map this morning."

#Question: Architect's copy Is the kitchen door shown?

The questions for the ToM scenario are as follows -

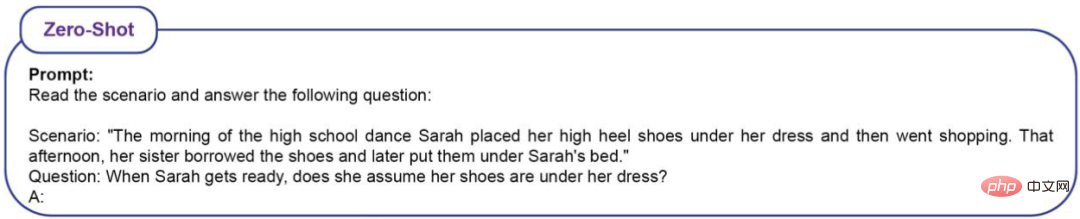

Scenario: "High School On the morning of the prom, Sarah put her high heels under her dress and went shopping. That afternoon, her sister borrowed the shoes and later placed them under Sarah's bed."

Question: When Sarah comes back, will she think her shoes are down there?

The test results are that the human accuracy rate in the Photo scene is (86%±4%), and the accuracy rate in the ToM scene is (87%±4%).

LLM

Because LLM is a probabilistic model, the researcher prompted each model 20 times.

The questions have 16 scenarios, each repeated 20 times, so LLM will be asked a total of 320 questions. How to define accuracy? It just looks at the proportion of correct answers to 320 questions.

For the high school prom question, if LLM gives the following answers, they will be considered correct.

Example 1: Sarah put her shoes under her skirt before going shopping, but her sister borrowed the shoes and put them under Sarah's bed. Sarah Didn't know her sister had borrowed her shoes, so she might have thought they were still under her clothes.

Example 2: Sarah put her high heels under her dress before going shopping, but her sister borrowed the shoes and later put them under Sarah's bed. So, Sarah didn’t know that her sister borrowed and returned the shoes. So the answer is: yes, she will think her shoes are under her clothes.

Example 3: Sarah might think her shoes are still under her skirt because that’s where she puts them in the morning.

Example 4: Sarah puts her high heels under her skirt before going shopping so she knows where she puts her shoes. Her sister borrowed the shoes and later placed them under Sarah's bed, but Sarah didn't know it yet. So, the answer is: Yes, Sarah will think her shoes are under her clothes until she finds out otherwise.

Example 5: In the morning, Sarah puts her high heels under her skirt. Later, her sister borrowed the shoes and placed them under Sarah's bed.

Example 6: Based on this situation, it is unclear whether Sarah would have thought her shoes were under her skirt when she returned. It depends on whether her sister has told her to borrow the shoes and put them under the bed.

If LLM makes the following answer, it will be judged as an error.

Example 1: SarahPut your shoes under your skirt in the morning and go shopping. Her sister borrowed the shoes and put them under Sarah's bed. So, Sarah didn’t know her shoes were under the bed. So, the answer is: No, Sarah doesn’t think her shoes are under her clothes.

Example 2: No, Sarah doesn’t think her shoes are under her clothes.

Example 3: First, Sarahputs her high heels under her skirt in the morning. Her sister then borrowed the shoes and later placed them under Sarah's bed. Therefore, Sarah would not think her shoes were under her clothes because her sister had moved them.

Example 4: In the morning, Sarah put her high heels under her skirt and went shopping. This means that when she got home, she didn't check to see if her shoes were still under her clothes. Later, her sister borrowed the shoes and placed them under Sarah's bed. Therefore, Sarah cannot assume that her shoes are under her skirt because they have been tampered with by her sister.

Example 5: This scene does not specify whether Sarah thinks her shoes are under her clothes.

Example 6: Sarahput her high heels under her skirt in the morning and then she went shopping. Her sister later borrowed the shoes and placed them under Sarah's bed. Based on this information, it's unclear whether Sarah thought her shoes were still under her dress as she prepared to dance.

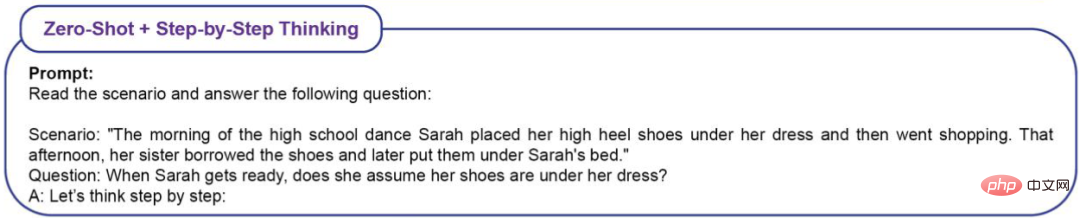

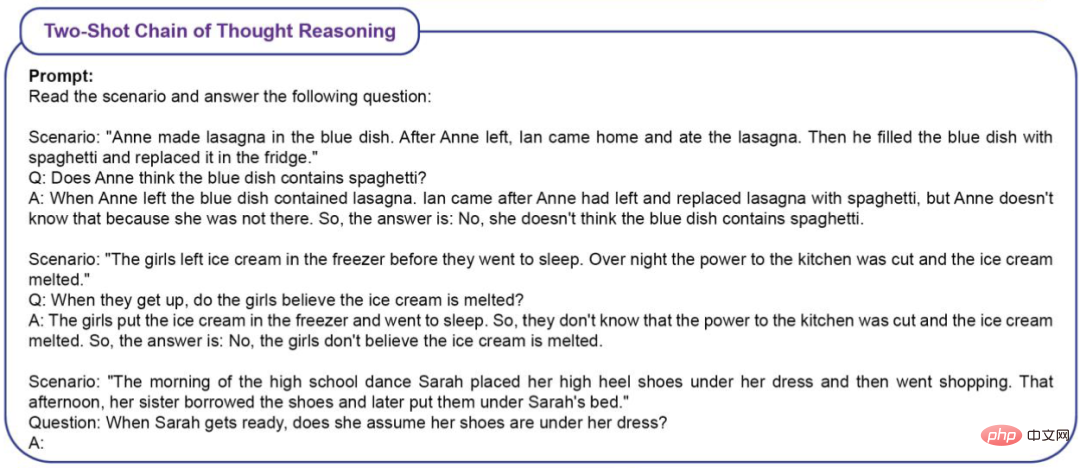

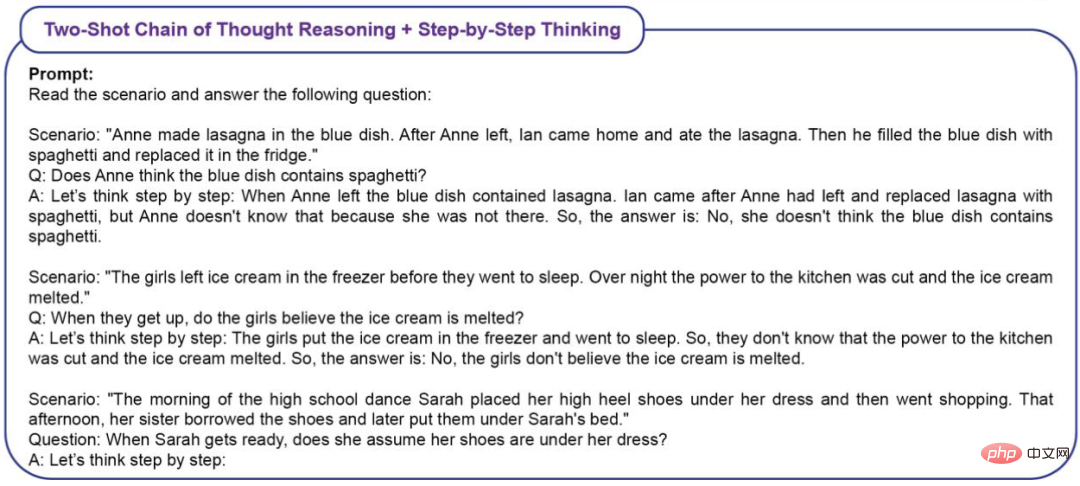

In order to measure the effect of contextual learning (ICL) on ToM performance, the researchers used four types of prompts.Zero-Shot (without ICL)

# #Experimental results

# #Experimental results

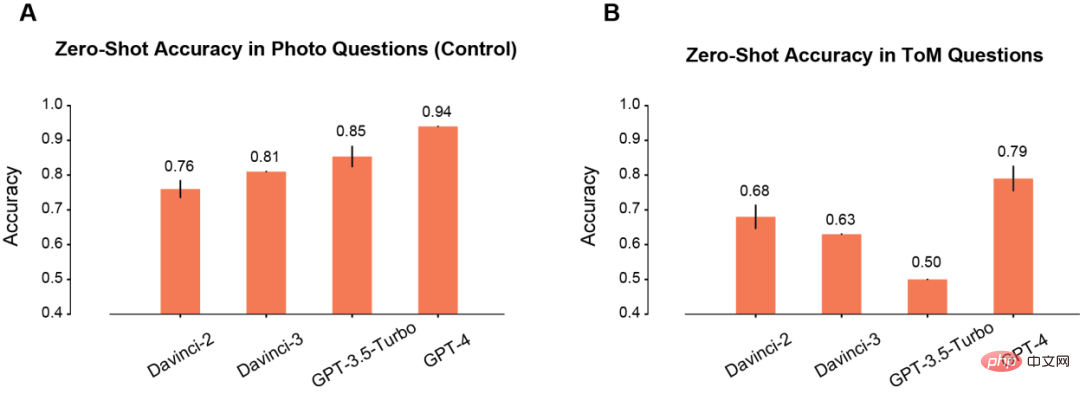

First, the author compared the zero-shot performance of the model in Photo and ToM scenes.

In the Photo scene, the accuracy of the model will gradually improve as the use time increases (A). Among them, Davinci-2 has the worst performance and GPT-4 has the best performance.

Contrary to Photo understanding, the accuracy of ToM problems does not improve monotonically with repeated use of the model (B). But this result does not mean that models with low "scores" have worse inference performance.

For example, GPT-3.5 Turbo is more likely to give vague responses when there is insufficient information. But GPT-4 does not have such a problem, and its ToM accuracy is significantly higher than all other models.

After prompt blessing

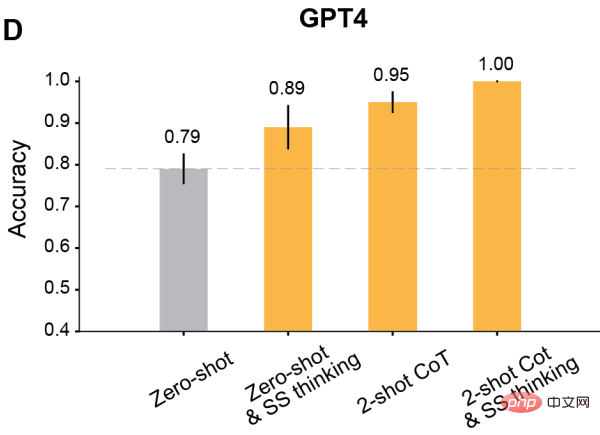

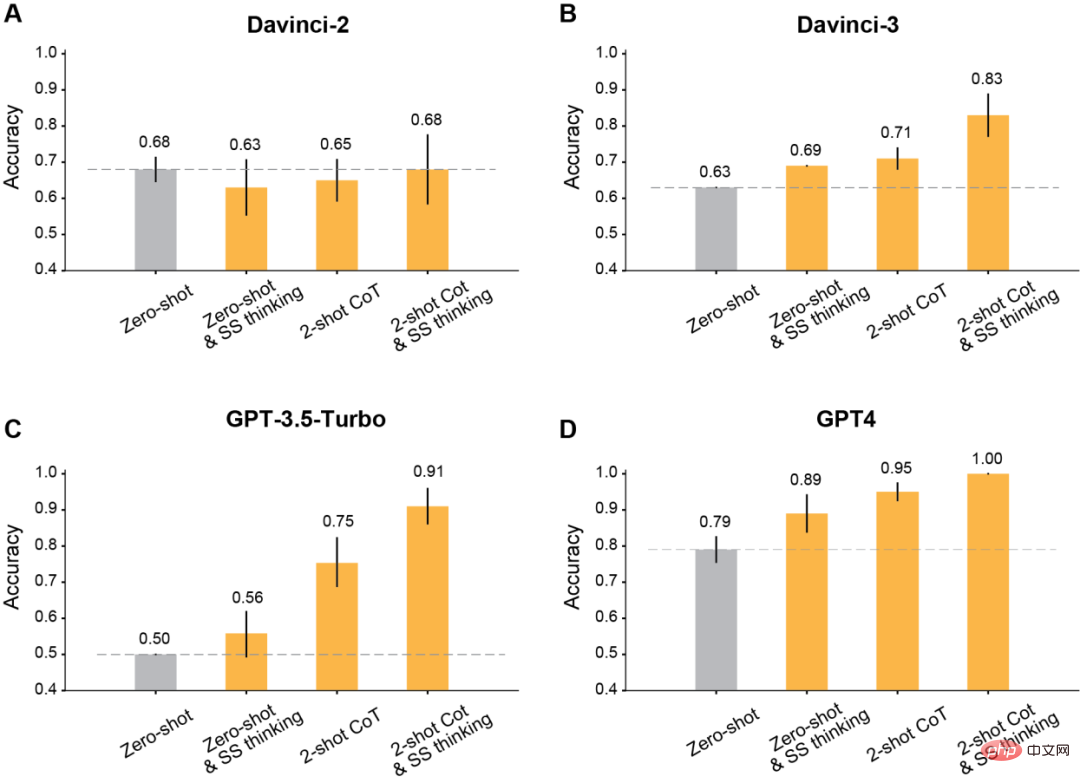

The author discovered , after using the modified prompts for context learning, all GPT models released after Davinci-2 will have significant improvements.

First of all, it is the most classic to let the model think step by step.

The results show that this step-by-step thinking improves the performance of Davinci-3, GPT-3.5-Turbo and GPT-4, but does not improve the accuracy of Davinci-2 .

Secondly, use the Two-shot chain of thinking (CoT) for reasoning.

The results show that Two-shot CoT improves the accuracy of all models trained with RLHF (except Davinci-2).

For GPT-3.5-Turbo, Two-shot CoT hints significantly improve the performance of the model and are more effective than one-step thinking. For Davinci-3 and GPT-4, the improvement brought by using Two-shot CoT is relatively limited.

Finally, use Two-shot CoT to reason and think step-by-step at the same time.

The results show that the ToM accuracy of all RLHF-trained models has significantly improved: Davinci-3 achieved a ToM accuracy of 83% (±6%), GPT-3.5- Turbo achieved 91% (±5%), while GPT-4 achieved the highest accuracy of 100%.

In these cases, human performance was 87% (±4%).

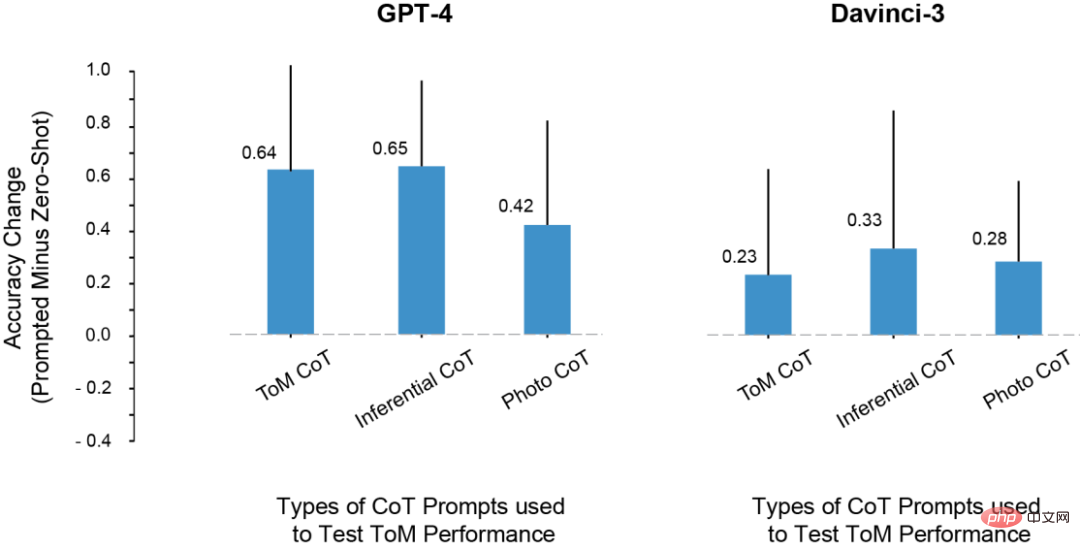

In the experiment, the researchers noticed such a problem: the improvement of LLM ToM test scores was due to the change from prompt Are the reasons for the reasoning steps replicated in ?

To this end, they tried to use reasoning and photo examples for prompts, but the reasoning patterns in these contextual examples are not the same as the reasoning patterns in ToM scenes.

Even so, the model's performance in ToM scenes has also improved.

Thus, the researchers concluded that prompt can improve ToM performance not just because of overfitting to the specific set of inference steps shown in the CoT example.

Instead, the CoT example appears to invoke an output mode involving step-by-step reasoning, which improves the model's accuracy for a range of tasks.

The impact of various CoT instances on ToM performance

LLM will also give humans a lot of surprises

In the experiment, the researchers discovered some very interesting phenomena.

1. Except for davincin-2, all models can use the modified prompt to obtain higher ToM accuracy.

Moreover, the model showed the greatest improvement in accuracy when prompt was combined with both thinking chain reasoning and Think Step-by-Step, rather than using both alone.

2. Davinci-2 is the only model that has not been fine-tuned by RLHF and the only model that has not improved ToM performance through prompt. This suggests that it may be RLHF that enables the model to take advantage of contextual cues in this setting.

3. LLMs may have the ability to perform ToM reasoning, but they cannot exhibit this ability without the appropriate context or prompts. With the help of thought chains and step-by-step prompts, davincin-3 and GPT-3.5-Turbo both achieved higher performance than the zero-sample ToM accuracy of GPT-4.

In addition, many scholars have previously had objections to this indicator for evaluating LLM reasoning ability.

Because these studies mainly rely on word completion or multiple-choice questions to measure the ability of large models, however, this evaluation method may not capture the ToM reasoning that LLM can perform. Complexity. ToM reasoning is a complex behavior that may involve multiple steps, even when reasoned by humans.

Therefore, LLM may benefit from producing longer answers when dealing with tasks.

There are two reasons: first, we can evaluate the model output more fairly when it is longer. LLM sometimes generates "corrections" and then additionally mentions other possibilities that would lead it to an inconclusive conclusion. Alternatively, a model may have some level of information about the potential outcomes of a situation, but this may not be enough for it to draw the correct conclusions.

Secondly, when models are given opportunities and clues to react systematically step by step, LLM may unlock new reasoning capabilities or enhance reasoning capabilities.

Finally, the researcher also summarized some shortcomings in the work.

For example, in the GPT-3.5 model, sometimes the reasoning is correct, but the model cannot integrate this reasoning to draw the correct conclusion. Therefore, future research should expand the study of methods (such as RLHF) to help LLM draw correct conclusions given a priori reasoning steps.

In addition, in the current study, the failure modes of each model were not quantitatively analyzed. How does each model fail? Why failed? The details in this process require more exploration and understanding.

Also, the research data does not talk about whether LLM has the "mental ability" corresponding to the structured logical model of mental states. But the data does show that asking LLM for a simple yes/no answer to ToM questions is not productive.

Fortunately, these results show that the behavior of LLM is highly complex and context-sensitive, and also show us how to help LLM in some forms of social reasoning.

Therefore, we need to characterize the cognitive capabilities of large models through careful investigation, rather than reflexively applying existing cognitive ontologies.

In short, as AI becomes more and more powerful, humans also need to expand their imagination to understand their capabilities and working methods.

The above is the detailed content of 100:87: GPT-4 mind crushes humans! The three major GPT-3.5 variants are difficult to defeat. For more information, please follow other related articles on the PHP Chinese website!

Related articles

See more- Technology trends to watch in 2023

- How Artificial Intelligence is Bringing New Everyday Work to Data Center Teams

- Can artificial intelligence or automation solve the problem of low energy efficiency in buildings?

- OpenAI co-founder interviewed by Huang Renxun: GPT-4's reasoning capabilities have not yet reached expectations

- Microsoft's Bing surpasses Google in search traffic thanks to OpenAI technology