
MLC LLM: An open-source AI chatbot that runs offline on iPhones and on computers with integrated graphics

- 王林 (reposted)

- 2023-05-06 15:46:17

May 2 news: Most AI chatbots currently rely on the cloud for processing, and even those that can run locally have extremely high hardware requirements. So is there a lightweight chatbot that works without an internet connection?

A new open-source project called MLC LLM has been launched on GitHub. It runs entirely locally, with no internet connection required, and can even run on older computers and Apple iPhones.

The MLC LLM project introduction states: "MLC LLM is a universal solution that allows any language model to be deployed locally on a diverse set of hardware backends and native applications, along with an efficient framework for everyone to further optimize model performance for their own use cases. Everything runs locally without server support and is accelerated by local GPUs on phones and laptops. Our mission is to enable everyone to develop, optimize and deploy AI models locally on their own devices."

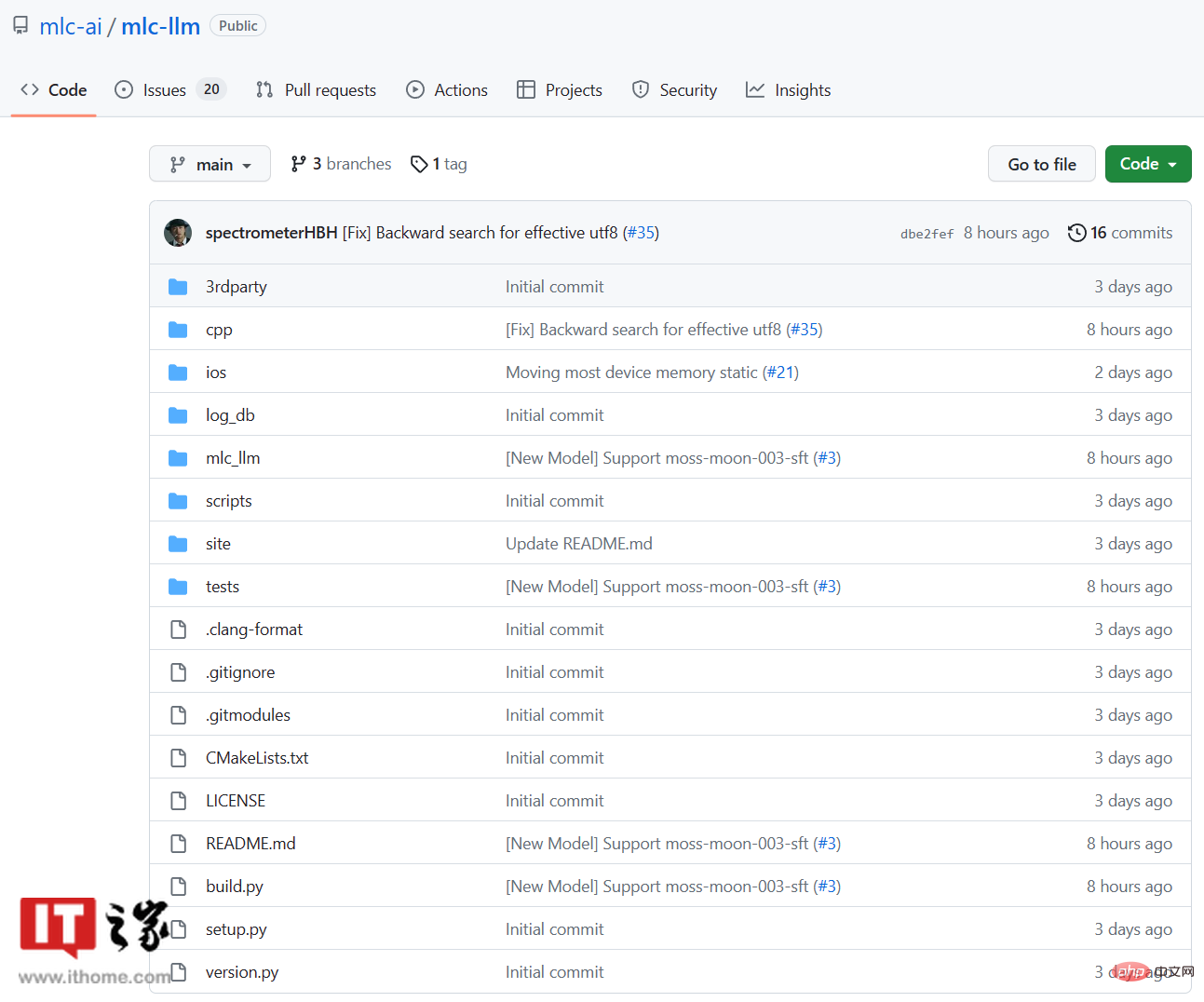

▲ MLC LLM project official demo

IT Home checked the GitHub page and found that the project's developers come from Carnegie Mellon University's Catalyst program, the SAMPL machine learning research group at the University of Washington, Shanghai Jiao Tong University, OctoML, and other organizations. The same team also has a related project called Web LLM.

▲ MLC stands for Machine Learning Compilation

MLC LLM uses Vicuna-7B-V1.1, a lightweight LLM based on Meta's LLaMA. Although its output quality does not match GPT-3.5 or GPT-4, it has a clear advantage in size.

Currently, MLC LLM is available for Windows, Linux, macOS and iOS platforms. There is no version available for Android yet.

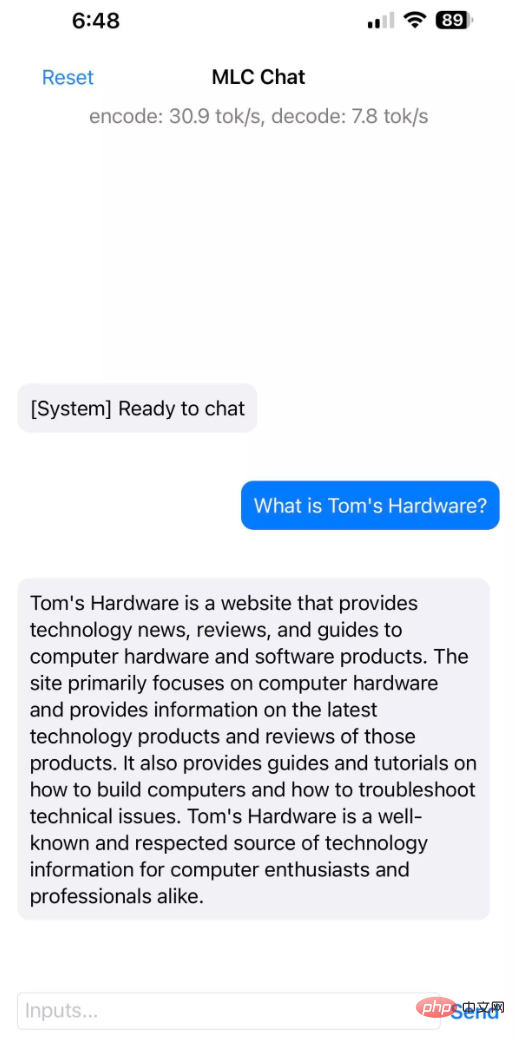

According to testing by Tom's Hardware, the Apple iPhone 14 Pro Max and iPhone 12 Pro Max, both with 6GB of memory, successfully ran MLC LLM, with an installation size of 3GB. The Apple iPhone 11 Pro Max, with 4GB of memory, could not run MLC LLM.

▲ Image source: Tom's Hardware
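As a rough sanity check on the memory figures above, the storage needed for a model's weights can be estimated from its parameter count and the number of bits used per parameter. The sketch below is only a back-of-the-envelope calculation: the 7-billion parameter count is inferred from the "7B" in the model name, and the bit widths (16, 4 and 3) are illustrative assumptions, since the article does not say how MLC LLM compresses the model. The function name `weight_size_gb` is hypothetical.

```python
def weight_size_gb(num_params: float, bits_per_param: float) -> float:
    """Estimate weight storage in gigabytes (1 GB = 10**9 bytes).

    Counts only the weights; runtime memory for activations and
    caches is ignored, so real usage would be higher.
    """
    total_bytes = num_params * bits_per_param / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    params = 7e9  # assumed from the "7B" in Vicuna-7B
    for bits in (16, 4, 3):  # illustrative bit widths, not confirmed by the article
        print(f"{bits:>2}-bit weights: ~{weight_size_gb(params, bits):.1f} GB")
```

Under these assumptions, only a heavily compressed model lands near the reported 3GB installation size, which would also be consistent with a 4GB phone lacking enough headroom once the operating system and runtime memory are counted.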

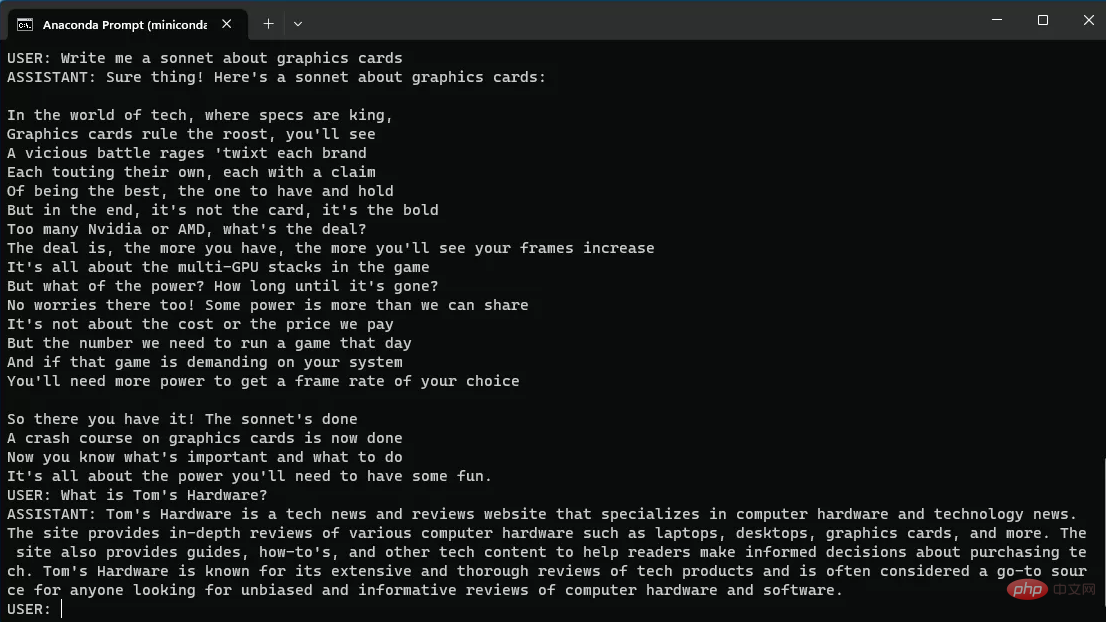

In addition, a ThinkPad X1 Carbon (6th generation) was tested and successfully ran MLC LLM. This notebook has an i7-8550U processor and an Intel UHD 620 integrated GPU, with no discrete graphics card. On the PC platform, MLC LLM has to be run from the command line. Performance in the Tom's Hardware test was mediocre: responses took nearly 30 seconds, and the chatbot had almost no ability to hold a continuous conversation. Hopefully this will improve in later versions.

▲ Image source: Tom's Hardware

