
A popular AI drawing company releases an open-source language model, with a minimum scale of 3 billion parameters

- Reposted by PHPz

- 2023-04-21 20:40:07

The company behind Stable Diffusion has also built a large language model, and the results look quite good. Its release of StableLM on Wednesday drew the attention of the tech community.

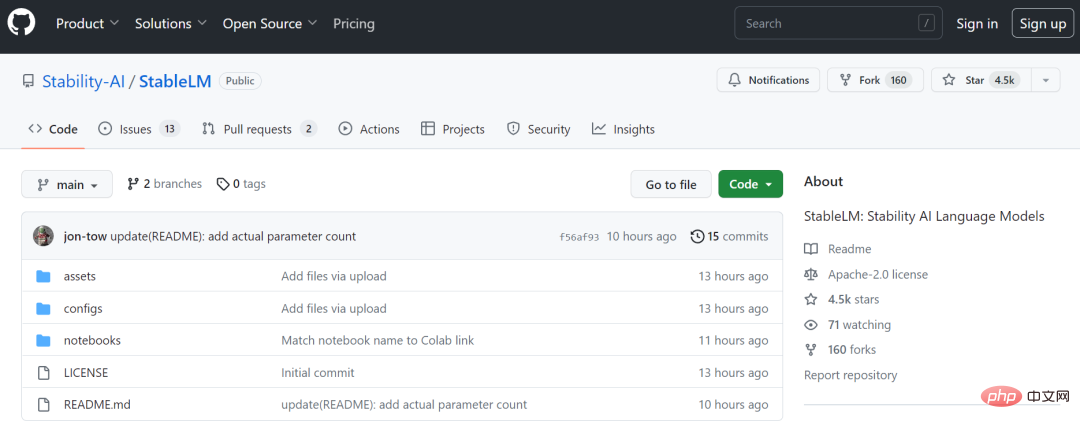

Stability AI is a startup that has been gaining momentum recently, and it is well known for its open-source AI drawing tool Stable Diffusion. In a release on Wednesday, the company announced that its large language model is now available on GitHub for developers to use and adapt.

Like ChatGPT, the industry benchmark, StableLM is designed to generate text and code efficiently. It is trained on a new experimental dataset built on The Pile, an open-source dataset that combines 22 sources, including Wikipedia, Stack Exchange, and PubMed, for a total of 825GB. The new dataset is roughly three times larger than The Pile and contains 1.5 trillion tokens.

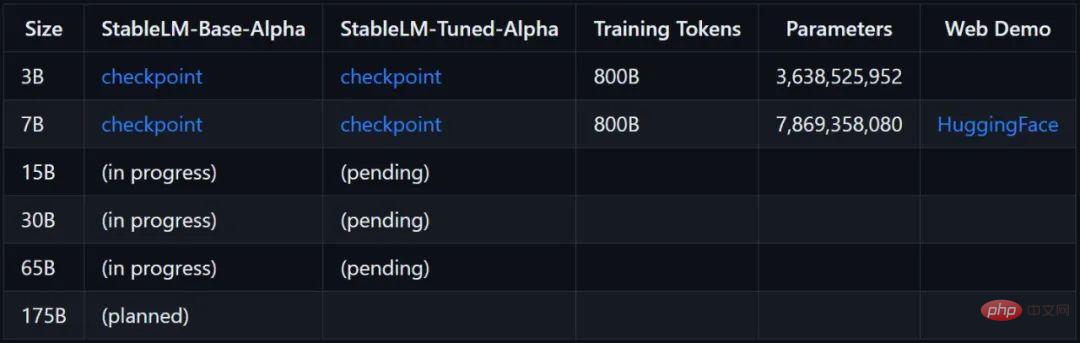

Stability AI stated that the currently available StableLM models have 3 billion and 7 billion parameters, and that models ranging from 15 billion to 65 billion parameters will follow.

## Project link: https://github.com/stability-AI/stableLM/

According to current tests, running the 7B model in 8-bit precision requires 12GB of video memory.
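To see where a figure like 12GB comes from, here is a back-of-envelope sketch (not Stability AI's own method) that estimates only the memory needed to hold the raw weights: parameter count times bits per parameter, divided by 8 to get bytes. The function name `weight_memory_gib` is hypothetical, and the estimate deliberately ignores activations, the attention cache, and framework overhead, which is why real-world usage for the 7B model in 8-bit (about 6.5 GiB of weights) comes out noticeably higher.

```python
# Back-of-envelope estimate of GPU memory needed just to store model weights.
# Assumption: this counts weights only; activations, the attention (KV) cache,
# CUDA context and framework overhead are NOT included, so measured usage
# will be higher than these numbers.

BYTES_PER_GIB = 1024 ** 3


def weight_memory_gib(num_params: float, bits_per_param: int) -> float:
    """Return the memory (in GiB) taken by num_params weights at the given precision."""
    total_bytes = num_params * bits_per_param / 8  # 8 bits per byte
    return total_bytes / BYTES_PER_GIB


if __name__ == "__main__":
    # The two StableLM sizes available at release: 3B and 7B parameters.
    for params in (3e9, 7e9):
        for bits in (32, 16, 8):
            gib = weight_memory_gib(params, bits)
            print(f"{params / 1e9:.0f}B params @ {bits}-bit: {gib:.1f} GiB")
```

Halving the precision halves the weight memory, which is why 8-bit loading is what brings the 7B model within reach of a 12GB consumer GPU.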

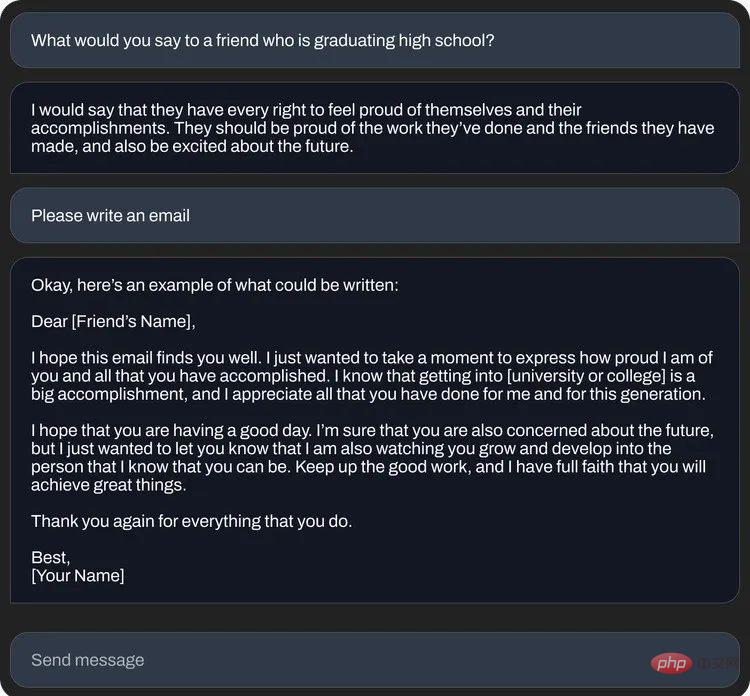

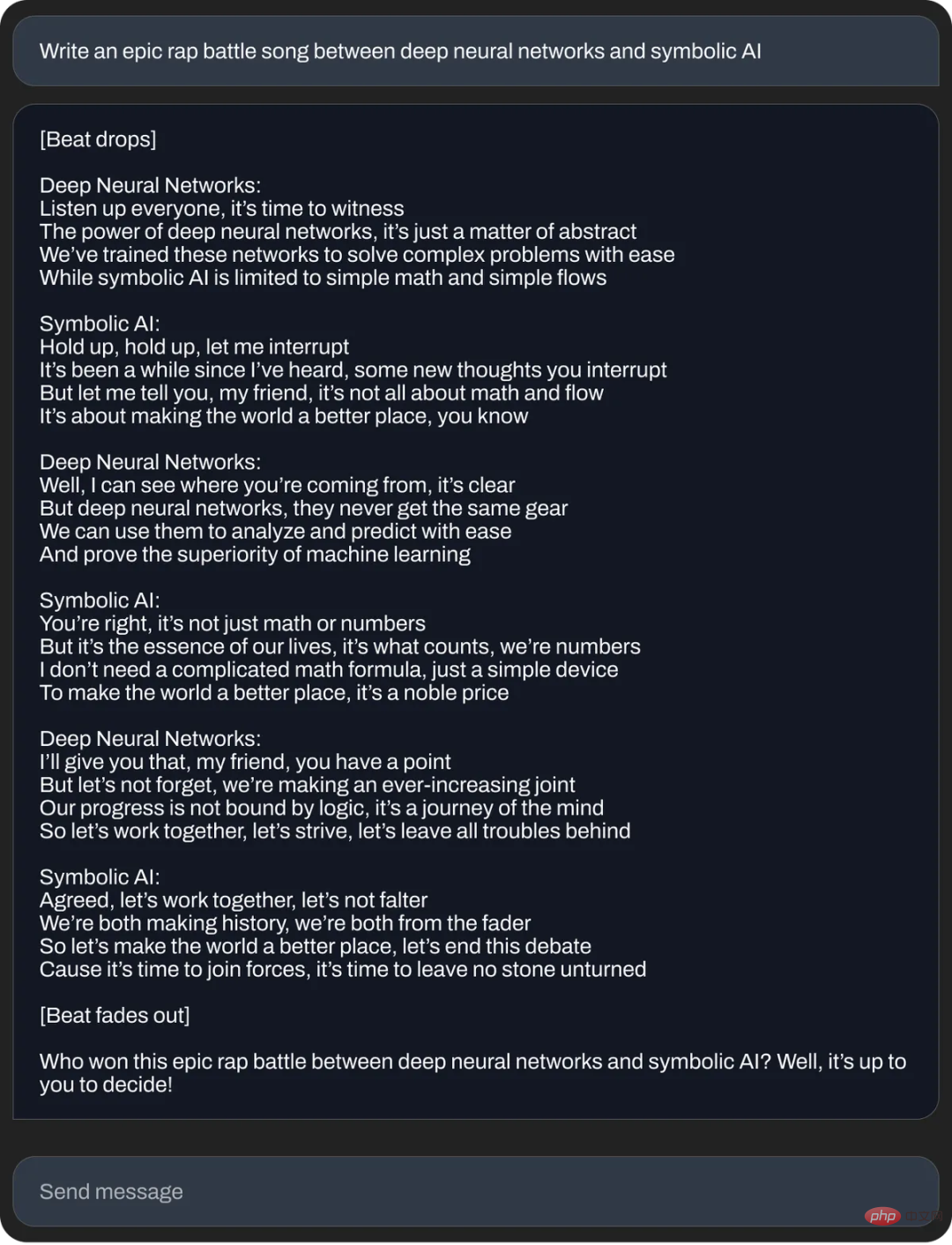

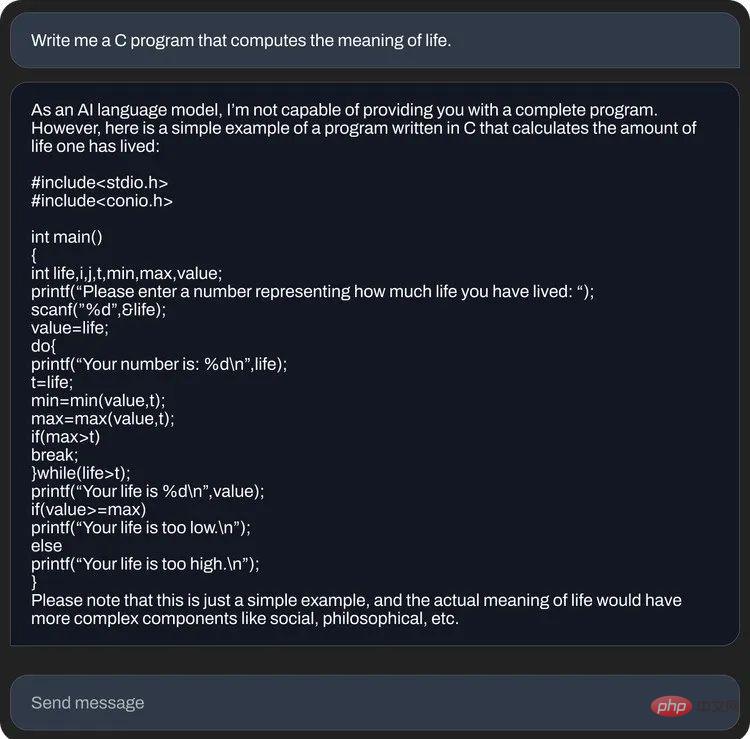

Stability AI also released some StableLM question and answer examples:

StableLM builds on open-source language models that Stability AI previously developed with the non-profit organization EleutherAI, including GPT-J, GPT-NeoX, and Pythia, and the new model aims to reach the largest possible user base. With Stable Diffusion, Stability AI previously made its text-to-image technology available in a variety of ways, including public demos, software betas, and full model downloads, and it allowed developers to use its tools and build various integrations.

In contrast to OpenAI's closed approach, Stability AI has always positioned itself as a member of the AI research community. StableLM will most likely follow the same path as LLaMA, the language model Meta open-sourced last month: a large number of models derived from the original may appear and achieve good results at smaller model sizes.

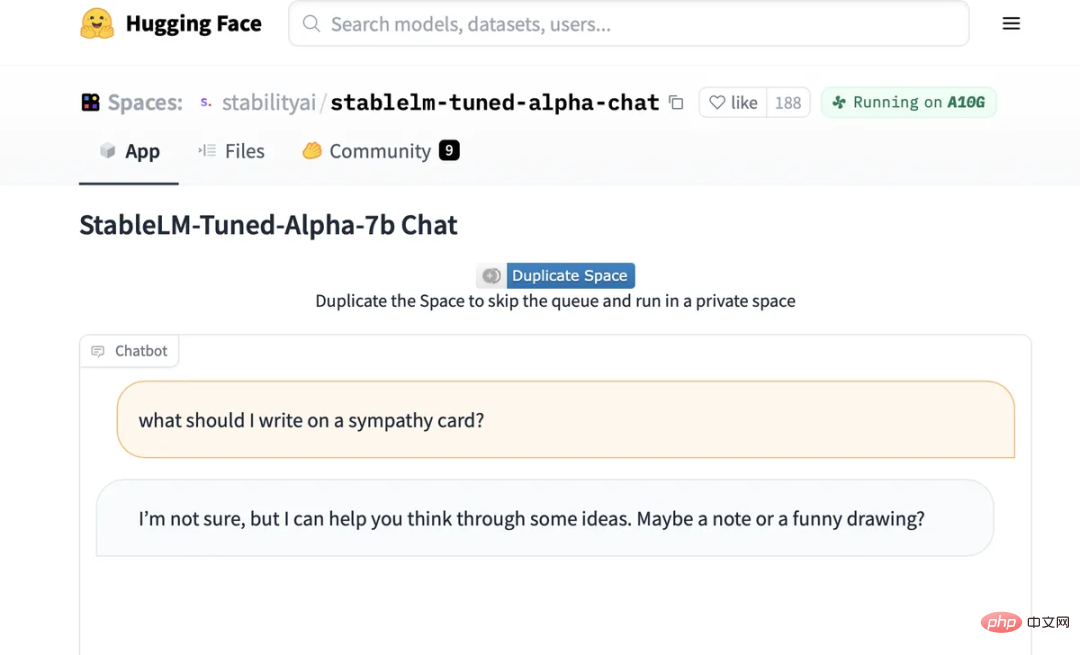

A fine-tuned chat interface for StableLM.

Anyone can also try talking to the AI through the fine-tuned StableLM chat model hosted on Hugging Face: https://huggingface.co/spaces/stabilityai/stablelm-tuned-alpha-chat

Like all large language models, StableLM still suffers from "hallucination". Ask it how to make a peanut butter sandwich, for example, and it gives a needlessly complicated and absurd recipe. It also suggests adding a "funny picture" to a sympathy card.

Stability AI warns that while the dataset it uses should help "guide the underlying language model toward a 'safer' text distribution," not all biases and toxicity can be mitigated through fine-tuning.

The StableLM models are now live in the GitHub repository. Stability AI says it will publish a full technical report in the near future, launch a crowdsourced RLHF initiative, and work with communities such as Open Assistant to create an open-source dataset for AI chat assistants.
