
From Transformer to Diffusion Model: Learn About Reinforcement Learning Methods Based on Sequence Modeling in One Article

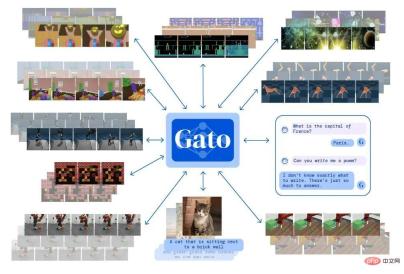

Over the past two years, large-scale generative models have brought major breakthroughs to natural language processing and even computer vision. Recently this trend has reached reinforcement learning as well, especially offline reinforcement learning (offline RL), with models such as Decision Transformer (DT) [1], Trajectory Transformer (TT) [2], Gato [3] and Diffuser [4]. These methods treat reinforcement learning data (states, actions, rewards and returns-to-go) as a flat sequence of unstructured data and make modeling that sequence the core learning task. Such models can be trained with supervised or self-supervised learning, which avoids the unstable gradient signals of traditional reinforcement learning. Even without elaborate policy improvement and value estimation, they perform very well in offline reinforcement learning.

This article briefly surveys these reinforcement learning methods based on sequence modeling. In the next article, I will introduce our newly proposed Trajectory Autoencoding Planner (TAP), a method that uses a Vector Quantised Variational AutoEncoder (VQ-VAE) for sequence modeling and efficient planning in a latent action space.

Transformer and Reinforcement Learning

The Transformer architecture [5], proposed in 2017, gradually set off a revolution in natural language processing. Subsequent models such as BERT and GPT-3 pushed the combination of self-supervised learning and Transformers to ever greater heights. As properties such as few-shot learning kept emerging in natural language processing, the trend also began to spread to fields such as computer vision [6][7].

In reinforcement learning, however, this shift was not particularly visible before 2021. Multi-head attention was introduced into reinforcement learning in 2018 [8], but this line of work was mostly applied to sub-symbolic domains in an attempt to address generalization in RL, and such attempts remained lukewarm afterwards. In my personal experience, Transformers have not shown a stable, overwhelming advantage in reinforcement learning, and they are also hard to train. In our 2020 work using Relational GCNs for reinforcement learning [9], we actually tried Transformers behind the scenes, but they were generally much worse than the traditional architecture (similar to a CNN), and it was hard to train them stably into a usable policy. Why Transformers fit poorly with traditional online reinforcement learning (online RL) is still an open question; Melo [10], for example, attributes it to the standard Transformer parameter initialization being ill-suited to reinforcement learning. I will not discuss this further here.

In mid-2021, the release of Decision Transformer (DT) and Trajectory Transformer (TT) set off a new wave of Transformer applications in RL. The idea behind both works is quite straightforward: if Transformers and online reinforcement learning algorithms do not fit together well, why not simply treat reinforcement learning as a self-supervised learning task? Riding the popularity of offline reinforcement learning, both works made modeling offline datasets their main target task, and then used the resulting sequence model for control and decision-making.

In reinforcement learning, the "sequence" is the trajectory made up of states $s$, actions $a$, rewards $r$ and values $v$: $\tau = (s_1, a_1, r_1, v_1, s_2, a_2, r_2, v_2, \dots)$. The value is nowadays usually replaced by the return-to-go, which can be regarded as a Monte Carlo estimate of it. An offline dataset consists of such trajectories, whose generation depends on both the environment dynamics and the behavior policy. Sequence modeling, then, means modeling the probability distribution that generates these sequences or, strictly speaking, some of its conditional distributions.
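To make the sequence view concrete, here is a minimal sketch, assuming a single episode stored as plain Python lists, of how the return-to-go can be computed as a Monte Carlo sum of future rewards and how a trajectory can be flattened into one interleaved $(s_t, a_t, r_t, v_t)$ sequence with the value slot filled by the return-to-go. The names `returns_to_go` and `flatten_trajectory` are hypothetical, and the discount `gamma` defaults to 1 (undiscounted), which is an assumption rather than something the methods above prescribe.

```python
from typing import List, Sequence, Tuple


def returns_to_go(rewards: Sequence[float], gamma: float = 1.0) -> List[float]:
    """Monte Carlo return-to-go: R_t = sum over t' >= t of gamma**(t' - t) * r_t'."""
    rtg = [0.0] * len(rewards)
    running = 0.0
    # Walk backwards so each step reuses the sum of all later rewards.
    for t in range(len(rewards) - 1, -1, -1):
        running = rewards[t] + gamma * running
        rtg[t] = running
    return rtg


def flatten_trajectory(
    states: Sequence[object], actions: Sequence[object], rewards: Sequence[float]
) -> List[Tuple[str, object]]:
    """Interleave (s_t, a_t, r_t, R_t) into one flat, tagged sequence."""
    seq: List[Tuple[str, object]] = []
    for s, a, r, R in zip(states, actions, rewards, returns_to_go(rewards)):
        seq.extend([("s", s), ("a", a), ("r", r), ("R", R)])
    return seq


if __name__ == "__main__":
    print(returns_to_go([1.0, 0.0, 2.0]))  # [3.0, 2.0, 2.0]
    print(flatten_trajectory(["s1", "s2"], ["a1", "a2"], [1.0, 1.0])[:4])
```

A sequence model is then trained on many such flattened sequences, exactly like a language model is trained on token streams.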

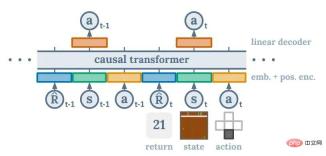

Decision Transformer

DT models a mapping from the past trajectory and the value to the action (a return-conditioned policy); that is, it models the expectation of the action under the conditional distribution, $\mathbb{E}[a_t \mid s_{\le t}, a_{<t}, v_{\le t}]$. The idea is very similar to Upside-Down RL [11], but its more direct inspiration was most likely the way GPT-2/3 complete downstream tasks from prompts. One problem with this approach is that there is no systematic way to decide the best target value. However, the DT authors found that even when the target value is set to the highest return in the entire dataset, DT's final performance can be very good.
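The return-conditioned idea can be sketched as a rollout loop, assuming a hypothetical trained model `policy` that reads the interleaved history $(R_1, s_1, a_1, \dots, R_t, s_t)$ and a hypothetical environment function `env_step`. Following the procedure described in the DT paper, the target return is decremented by each reward actually received; the toy policy and environment in the usage block are stand-ins chosen only so the loop runs, not DT's architecture.

```python
from typing import Callable, List, Tuple

History = List[Tuple[str, float]]


def dt_rollout(
    policy: Callable[[History], float],
    env_step: Callable[[float, float], Tuple[float, float, bool]],
    s0: float,
    target_return: float,
    horizon: int,
) -> Tuple[History, float]:
    """Return-conditioned rollout sketch.

    `policy` (a trained sequence model) and `env_step(state, action) ->
    (next_state, reward, done)` are hypothetical stand-ins.
    """
    history: History = []
    s, R = s0, target_return
    total = 0.0
    for _ in range(horizon):
        # Prompt with the desired return-to-go, then the current state.
        history.extend([("R", R), ("s", s)])
        a = policy(history)
        history.append(("a", a))
        s, r, done = env_step(s, a)
        total += r
        R -= r  # decrement the target by the reward actually obtained
        if done:
            break
    return history, total


def toy_policy(history: History) -> float:
    # Act (a = 1) while the remaining return-to-go, history[-2], is positive.
    return 1.0 if history[-2][1] > 0 else 0.0


def toy_env(s: float, a: float) -> Tuple[float, float, bool]:
    # Reward equals the action; the episode never ends early.
    return s + a, a, False


if __name__ == "__main__":
    hist, total = dt_rollout(toy_policy, toy_env, s0=0.0, target_return=3.0, horizon=5)
    print(total)  # 3.0
```

The whole "decision" is a forward pass conditioned on the prompt; nothing in the loop performs policy improvement, which is why the choice of target value matters so much.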

For anyone with a background in reinforcement learning, it is very counter-intuitive that a method like DT performs so well. Methods such as DQN and policy gradient treat the neural network only as a function approximator that generalizes by interpolation, while policy improvement and value estimation remain at the core of how the policy is built. DT, by contrast, rests entirely on the neural network: how it connects a potentially unrealistically high target value to an appropriate action is a complete black box. From a reinforcement learning perspective, DT's success seems somewhat unreasonable, but I think this is precisely the charm of this kind of empirical research. I believe the generalization ability of neural networks, or of Transformers in particular, may exceed what the RL community as a whole previously expected.

DT is also the simplest of all the sequence modeling methods: almost all of the core problems of reinforcement learning are solved inside the Transformer. This simplicity is one reason it is currently the most popular. However, its black-box nature also means we lose much of our grip at the algorithm-design level, and it is difficult to incorporate achievements from traditional reinforcement learning into it, even though their effectiveness has been repeatedly confirmed in very large-scale experiments (such as AlphaGo, AlphaStar and VPT).

Trajectory Transformer

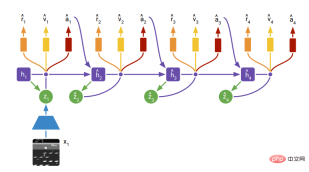

TT's approach is closer to the planning methods of traditional model-based reinforcement learning (model-based RL). For modeling, it discretizes every element of the sequence and then uses a discrete autoregressive model, like GPT-2, to model the entire offline dataset. This allows it to model the continuation of any given sequence (excluding the return-to-go). Because it models the distribution of future sequences, TT effectively becomes a sequence generation model: by searching the generated sequences for one with a better value estimate, TT can output an "optimal plan". To find that sequence, TT uses a method common in natural language processing, a variant of beam search. Essentially, at each step it keeps only the best part of the expanded sequences, and then expands from those to find the next set of best sequences.
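A minimal beam-search planner in this spirit might look as follows. It assumes a hypothetical `expand` function standing in for sampling next tokens from the sequence model, each paired with a predicted reward, and it ranks partial plans by cumulative predicted reward instead of likelihood; that ranking choice is my assumption about the general shape of TT's variant, not its exact implementation.

```python
import heapq
from typing import Callable, List, Sequence, Tuple

Plan = Tuple[int, ...]
Beam = Tuple[float, Plan]  # (cumulative predicted reward, token sequence)


def beam_plan(
    expand: Callable[[Plan], Sequence[Tuple[int, float]]],
    prefix: Plan,
    horizon: int,
    beam_width: int,
) -> Beam:
    """Keep the `beam_width` best partial plans at every step.

    `expand(plan)` is a hypothetical stand-in for querying a sequence model:
    it returns candidate next tokens, each with a predicted reward.
    """
    beams: List[Beam] = [(0.0, prefix)]
    for _ in range(horizon):
        candidates: List[Beam] = [
            (score + reward, plan + (token,))
            for score, plan in beams
            for token, reward in expand(plan)
        ]
        # Rank by predicted return rather than by likelihood, then prune.
        beams = heapq.nlargest(beam_width, candidates, key=lambda b: b[0])
    return max(beams, key=lambda b: b[0])


if __name__ == "__main__":
    # Toy model where the greedy first move (token 1) is a trap.
    table = {(): [(0, 0.0), (1, 1.0)], (0,): [(0, 10.0)], (1,): [(0, 0.0)]}
    print(beam_plan(lambda p: table[p], (), horizon=2, beam_width=1))  # (1.0, (1, 0))
    print(beam_plan(lambda p: table[p], (), horizon=2, beam_width=2))  # (10.0, (0, 0))
```

With a width of 1 this degenerates into greedy search and falls into the trap; keeping a second candidate alive is enough to recover the better plan.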

From a reinforcement learning perspective, TT is less unorthodox than DT. What is interesting is that, like DT, it completely abandons the causal graph structure of the Markov Decision Process. Previous model-based methods, such as PETS, World Models and DreamerV2, all follow the Markov (or hidden Markov) definitions of the policy, transition function and reward function: the state distribution is conditioned on the previous state and action, while the action, reward and value are all determined by the current state. The RL community generally believes this structure improves sample efficiency, but it may in fact be a constraint. The shift from RNNs to Transformers in natural language processing, and from CNNs to Transformers in computer vision, suggests that as data grows, letting the network learn the graph structure by itself tends to yield better-performing models.
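The contrast can be written as two factorizations of a trajectory $\tau$. The first is one standard way of writing the MDP structure that classic model-based methods build in; the second is the generic autoregressive factorization a GPT-style model uses over the flattened elements $x_1, \dots, x_N$ of the trajectory, where no conditional independence is imposed and any structure must be learned from data. This is a schematic sketch, not a formula taken from either paper.

```latex
% Markov structure built into classic model-based RL
p(\tau) = p(s_1) \prod_{t=1}^{T} \pi(a_t \mid s_t)\, p(r_t \mid s_t, a_t)\, p(s_{t+1} \mid s_t, a_t)

% Autoregressive factorization over the flattened trajectory x_1, \dots, x_N
p(\tau) = \prod_{i=1}^{N} p(x_i \mid x_1, \dots, x_{i-1})
```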

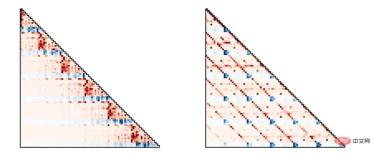

DreamerV2, Figure 3

Since TT hands essentially all sequence prediction over to the Transformer, the Transformer can learn better graph structures from the data more flexibly. As shown in the figure below, the behavior policies modeled by TT exhibit different graph structures depending on the task and dataset: the left side corresponds to a traditional Markov policy, and the right side to an action moving-average policy.

Trajectory Transformer, Figure 4

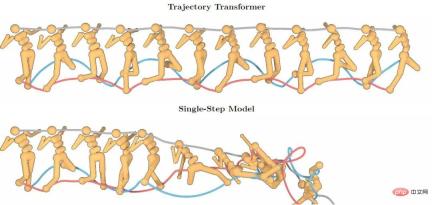

The Transformer's powerful sequence modeling ability also yields higher accuracy over long sequences. The figure below shows that TT's predictions remain highly accurate over 100 steps, while single-step prediction models that follow the Markov property quickly collapse as prediction errors compound.
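Why single-step models drift can be illustrated with a toy calculation, assuming a scalar linear system $x_{t+1} = a\,x_t$ and a one-step model whose coefficient is off by 2%. When the model is rolled out on its own predictions, the error compounds geometrically: here it grows from 0.02 after one step to roughly 6 after 100 steps. This is an illustrative sketch of error compounding only, not a reproduction of TT's experiment.

```python
from typing import List


def rollout_error(a_true: float, a_model: float, x0: float, steps: int) -> List[float]:
    """Absolute error of a one-step model rolled out on its own predictions.

    Toy system: x_{t+1} = a_true * x_t. The learned model uses a_model instead,
    and each prediction is fed back in as the next input.
    """
    x_true, x_pred = x0, x0
    errors: List[float] = []
    for _ in range(steps):
        x_true = a_true * x_true
        x_pred = a_model * x_pred  # conditioned on its own previous output
        errors.append(abs(x_pred - x_true))
    return errors


if __name__ == "__main__":
    errs = rollout_error(a_true=1.0, a_model=1.02, x0=1.0, steps=100)
    for k in (1, 10, 100):
        print(f"after {k:3d} steps: error = {errs[k - 1]:.3f}")
```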

Trajectory Transformer, Figure 2

Although TT differs from traditional methods in its specific modeling and prediction, the predictive ability it provides is still a good starting point for integrating other reinforcement learning techniques in the future. However, TT has a significant problem with prediction speed. Because it models the distribution of the entire sequence, it discretizes every element of the sequence dimension by dimension, so a 100-dimensional state occupies 100 positions in the sequence, and the modeled sequence easily becomes very long. Since the Transformer's computational complexity in the sequence length $N$ is $O(N^2)$, sampling a future prediction from TT is very expensive. Even on tasks with fewer than 100 dimensions, TT needs several seconds or even tens of seconds to make a one-step decision, which makes it hard to use for real-time robot control or online learning.
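The sequence-length problem can be quantified with a short sketch. It assumes uniform per-dimension binning (a simplification; the bin scheme here is hypothetical) and, following the $(s, a, r, v)$ trajectory layout, one token for each state dimension, each action dimension, the reward and the return-to-go. Full self-attention compares every token with every other, so the work grows with the square of the token count.

```python
from typing import List, Sequence


def discretize(x: Sequence[float], low: float, high: float, n_bins: int) -> List[int]:
    """Map each dimension independently to one of n_bins uniform bins (one token each)."""
    width = (high - low) / n_bins
    # Clip so values at or beyond the bounds still land in a valid bin.
    return [min(max(int((v - low) / width), 0), n_bins - 1) for v in x]


def tokens_per_step(state_dim: int, action_dim: int) -> int:
    """One token per state and action dimension, plus reward and return-to-go."""
    return state_dim + action_dim + 2


def attention_pairs(horizon: int, state_dim: int, action_dim: int) -> int:
    """Query-key pairs in one full self-attention layer: N * N for N tokens."""
    n = horizon * tokens_per_step(state_dim, action_dim)
    return n * n


if __name__ == "__main__":
    print(discretize([-1.0, 0.05, 1.0], low=-1.0, high=1.0, n_bins=10))  # [0, 5, 9]
    print(tokens_per_step(100, 10))  # 112
    print(attention_pairs(10, 100, 10))  # 1254400
```

For a hypothetical task with a 100-dimensional state and a 10-dimensional action, a 10-step window already holds 1,120 tokens and about 1.25 million query-key pairs per attention layer, and generating a plan requires one forward pass per token.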

Gato

Gato Blog

Other Sequence Generation Models: Diffusion Model

The diffusion model has recently become very popular in image generation; both DALL-E 2 and Stable Diffusion are based on it. Diffuser applies this approach to offline reinforcement learning. The idea is similar to TT: it first models the conditional distribution of the sequence, and then samples possible future sequences conditioned on the current state.

Diffuser is more flexible than TT: it can fix the start and end points and let the model fill in the path between them, enabling goal-driven (rather than reward-maximizing) control. It can also combine multiple goals and priors on how to achieve them to help the model complete the task.

Diffuser, Figure 1

Compared with traditional reinforcement learning models, Diffuser is also quite radical: the generated plan does not unfold step by step along the timeline, but is gradually refined from vague to precise across the whole sequence at once. Research on diffusion models themselves is also a hot topic in computer vision, and a breakthrough in the model itself is likely in the next few years. However, diffusion models currently have a particular weakness compared with other generative models: they generate more slowly. Many experts in the field believe this may be alleviated in the coming years, but for now, generation times of several seconds are hard to accept in reinforcement learning scenarios that require real-time control. Diffuser proposes one way to speed up generation: regenerate the next step's plan starting from the previous step's plan with a small amount of noise added, although this reduces the model's performance to some extent.
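The two ideas above, conditioning by fixing the start and end points and warm-starting from the previous plan, can be sketched with a toy 1-D planner. Everything here is an assumption for illustration: `toy_denoise` is a hypothetical smoothing function standing in for Diffuser's learned denoising network, and the noise schedule is arbitrary. What the sketch does mirror is the structure: every iteration refines the whole sequence at once, and the known endpoints are overwritten at each step (inpainting-style conditioning).

```python
import random
from typing import List, Optional


def toy_denoise(plan: List[float], noise_scale: float, rng: random.Random) -> List[float]:
    """Hypothetical stand-in for a learned denoiser: pull each point toward its
    neighbours and add a little noise. Diffuser itself uses a trained network."""
    n = len(plan)
    return [
        (plan[max(i - 1, 0)] + plan[i] + plan[min(i + 1, n - 1)]) / 3
        + rng.gauss(0.0, noise_scale)
        for i in range(n)
    ]


def plan_with_inpainting(
    start: float,
    goal: float,
    horizon: int,
    n_steps: int,
    rng: random.Random,
    init: Optional[List[float]] = None,
) -> List[float]:
    """Refine a whole plan at once while clamping its start and goal.

    When `init` is given (warm start), its length is used and `horizon` is ignored.
    """
    if init is None:
        plan = [rng.gauss(0.0, 1.0) for _ in range(horizon)]  # start from pure noise
    else:
        plan = [x + rng.gauss(0.0, 0.1) for x in init]  # previous plan + small noise
    for k in range(n_steps, 0, -1):
        plan[0], plan[-1] = start, goal  # inpainting-style conditioning
        plan = toy_denoise(plan, noise_scale=0.1 * k / n_steps, rng=rng)
    plan[0], plan[-1] = start, goal
    return plan


if __name__ == "__main__":
    rng = random.Random(0)
    first = plan_with_inpainting(0.0, 5.0, horizon=20, n_steps=50, rng=rng)
    # Next decision: reuse the previous plan with only a few refinement steps.
    second = plan_with_inpainting(0.0, 5.0, horizon=20, n_steps=5, rng=rng, init=first)
    print([round(x, 2) for x in second])
```

The warm start runs 5 refinement steps instead of 50, which is where the speed-up comes from; the price is that the new plan inherits whatever errors the previous one had.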

The above is the detailed content of From Transformer to Diffusion Model, learn about reinforcement learning methods based on sequence modeling in one article. For more information, please follow other related articles on the PHP Chinese website!

One Prompt Can Bypass Every Major LLM's SafeguardsApr 25, 2025 am 11:16 AM

One Prompt Can Bypass Every Major LLM's SafeguardsApr 25, 2025 am 11:16 AMHiddenLayer's groundbreaking research exposes a critical vulnerability in leading Large Language Models (LLMs). Their findings reveal a universal bypass technique, dubbed "Policy Puppetry," capable of circumventing nearly all major LLMs' s

5 Mistakes Most Businesses Will Make This Year With SustainabilityApr 25, 2025 am 11:15 AM

5 Mistakes Most Businesses Will Make This Year With SustainabilityApr 25, 2025 am 11:15 AMThe push for environmental responsibility and waste reduction is fundamentally altering how businesses operate. This transformation affects product development, manufacturing processes, customer relations, partner selection, and the adoption of new

H20 Chip Ban Jolts China AI Firms, But They've Long Braced For ImpactApr 25, 2025 am 11:12 AM

H20 Chip Ban Jolts China AI Firms, But They've Long Braced For ImpactApr 25, 2025 am 11:12 AMThe recent restrictions on advanced AI hardware highlight the escalating geopolitical competition for AI dominance, exposing China's reliance on foreign semiconductor technology. In 2024, China imported a massive $385 billion worth of semiconductor

If OpenAI Buys Chrome, AI May Rule The Browser WarsApr 25, 2025 am 11:11 AM

If OpenAI Buys Chrome, AI May Rule The Browser WarsApr 25, 2025 am 11:11 AMThe potential forced divestiture of Chrome from Google has ignited intense debate within the tech industry. The prospect of OpenAI acquiring the leading browser, boasting a 65% global market share, raises significant questions about the future of th

How AI Can Solve Retail Media's Growing PainsApr 25, 2025 am 11:10 AM

How AI Can Solve Retail Media's Growing PainsApr 25, 2025 am 11:10 AMRetail media's growth is slowing, despite outpacing overall advertising growth. This maturation phase presents challenges, including ecosystem fragmentation, rising costs, measurement issues, and integration complexities. However, artificial intell

'AI Is Us, And It's More Than Us'Apr 25, 2025 am 11:09 AM

'AI Is Us, And It's More Than Us'Apr 25, 2025 am 11:09 AMAn old radio crackles with static amidst a collection of flickering and inert screens. This precarious pile of electronics, easily destabilized, forms the core of "The E-Waste Land," one of six installations in the immersive exhibition, &qu

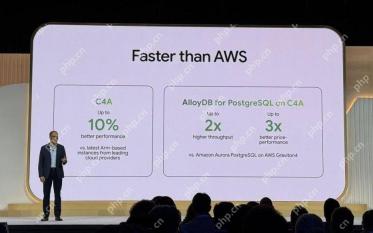

Google Cloud Gets More Serious About Infrastructure At Next 2025Apr 25, 2025 am 11:08 AM

Google Cloud Gets More Serious About Infrastructure At Next 2025Apr 25, 2025 am 11:08 AMGoogle Cloud's Next 2025: A Focus on Infrastructure, Connectivity, and AI Google Cloud's Next 2025 conference showcased numerous advancements, too many to fully detail here. For in-depth analyses of specific announcements, refer to articles by my

Talking Baby AI Meme, Arcana's $5.5 Million AI Movie Pipeline, IR's Secret Backers RevealedApr 25, 2025 am 11:07 AM

Talking Baby AI Meme, Arcana's $5.5 Million AI Movie Pipeline, IR's Secret Backers RevealedApr 25, 2025 am 11:07 AMThis week in AI and XR: A wave of AI-powered creativity is sweeping through media and entertainment, from music generation to film production. Let's dive into the headlines. AI-Generated Content's Growing Impact: Technology consultant Shelly Palme

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

Atom editor mac version download

The most popular open source editor

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

SublimeText3 English version

Recommended: Win version, supports code prompts!

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.