Natural language is integrated into NeRF, and LERF, which generates 3D images with just a few words, is here.

NeRF (Neural Radiance Fields) has quickly become one of the most popular research areas since it was proposed, with striking results. However, NeRF directly outputs only a colored density field, which gives researchers little semantic information. This lack of context is a problem that must be addressed, because it directly hinders building interactive interfaces for 3D scenes.

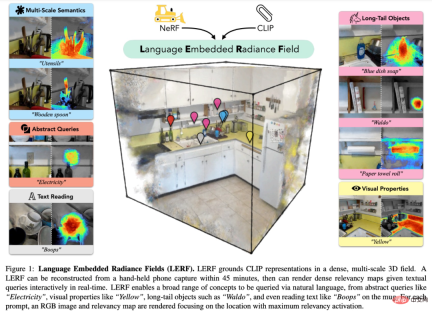

Natural language is different: it offers a very intuitive way to interact with 3D scenes. Take the kitchen scene in Figure 1 as an example. Objects can be found by asking where the cutlery is, or where the utensils used for stirring are. Completing this task, however, requires not only query capability from the model but also the ability to incorporate semantics at multiple scales.

In this paper, researchers from UC Berkeley propose a novel method named LERF (Language Embedded Radiance Fields), which embeds language embeddings from CLIP (Contrastive Language-Image Pre-training) into NeRF, making this kind of open-ended 3D language query possible. LERF uses CLIP directly, without fine-tuning on datasets such as COCO and without relying on mask region proposals. LERF preserves the integrity of CLIP embeddings at multiple scales and can handle a variety of language queries, including visual attributes (e.g., yellow), abstract concepts (e.g., electricity), text, and more, as shown in Figure 1.
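To make the idea of querying embeddings "at multiple scales" more concrete, here is a minimal sketch in plain Python. It assumes each 3D point stores one embedding per scale and that a text query is compared against them. Plain cosine similarity is used as a stand-in and is not claimed to be the scoring function from the paper. The function names, the scale values, and the 3-dimensional vectors are all hypothetical; real CLIP embeddings are much higher-dimensional.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def best_scale_score(query_embedding, embeddings_by_scale):
    """Return (scale, score) for the scale whose embedding best matches the query."""
    scored = {s: cosine(query_embedding, e) for s, e in embeddings_by_scale.items()}
    best = max(scored, key=scored.get)
    return best, scored[best]

# Hypothetical stand-ins for the embeddings stored at one 3D point,
# keyed by an assumed physical scale (not values from the paper).
point_embeddings = {
    0.1: [1.0, 0.0, 0.0],  # small scale
    0.5: [0.6, 0.8, 0.0],  # medium scale
    1.0: [0.0, 1.0, 0.0],  # large scale
}

# Hypothetical stand-in for the embedding of a text prompt.
query = [0.0, 1.0, 0.0]

scale, score = best_scale_score(query, point_embeddings)
print(f"best scale: {scale}, similarity: {score:.2f}")
```

Taking the maximum over scales is only one plausible way to combine them. It illustrates why keeping embeddings at several scales matters: the same point can match a query well at one scale and poorly at another.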

Paper address: https://arxiv.org/pdf/2303.09553v1.pdf

Project homepage: https://www.lerf.io/

LERF can extract 3D relevancy maps for language prompts interactively and in real time. For example, for a table holding a lamb and a water cup, entering the prompt "lamb" or "water cup" makes LERF return the corresponding 3D relevancy map:

Method


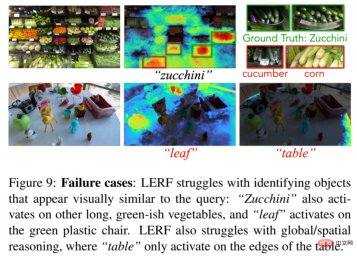

LERF on its own produces coherent results, but the resulting relevancy maps can sometimes be incomplete and contain outliers, as shown in Figure 5. To regularize the optimized language field, the study introduces self-supervised DINO features through a shared bottleneck.

In terms of architecture, optimizing language embeddings in 3D should not affect the density distribution of the underlying scene representation. The study captures this inductive bias by training two independent networks: one for the feature vectors (DINO, CLIP) and one for the standard NeRF outputs (color, density).

Experiment

To demonstrate LERF's ability to process real-world data, the study collected 13 scenes, including grocery stores, kitchens, bookstores, and figurines. Figure 3 shows 5 representative scenes that demonstrate LERF's ability to handle natural language.

Figure 7 is a 3D visual comparison of LERF and LSeg. When localizing the eggs in the bowl, LSeg is inferior to LERF.

Figure 8 shows that LSeg, trained on a limited segmentation dataset, lacks the ability to represent natural language effectively. Instead, it performs well only on common objects within the training set distribution, as shown in Figure 7.

However, LERF is not yet perfect. In one failure case, a query for zucchini also highlights other vegetables.
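The two-network design described in the Method section can be illustrated with a toy sketch. It assumes nothing about the real implementation: each "network" is a single hypothetical linear layer, the feature network outputs stand-in CLIP and DINO values from one shared set of weights (a loose analogue of the shared bottleneck), and `nudge` is a placeholder for a gradient step. The point is only that updating the feature network cannot change the density produced by the separate NeRF network.

```python
import random

class TinyLinear:
    """A single linear layer, standing in for a full network (hypothetical)."""

    def __init__(self, n_in, n_out, seed):
        rng = random.Random(seed)
        self.w = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]

    def __call__(self, x):
        return [sum(wi * xi for wi, xi in zip(row, x)) for row in self.w]

    def nudge(self, step):
        """Placeholder for a gradient update: shift every weight by `step`."""
        self.w = [[wi + step for wi in row] for row in self.w]

# Network 1: standard NeRF outputs (r, g, b, density).
nerf_net = TinyLinear(n_in=3, n_out=4, seed=0)
# Network 2: feature outputs; the first two values play the role of CLIP
# features and the last two the role of DINO features.
feature_net = TinyLinear(n_in=3, n_out=4, seed=1)

point = [0.2, -0.1, 0.7]  # an arbitrary 3D sample position

density_before = nerf_net(point)[3]
features_before = feature_net(point)

# "Train" only the feature network, as if optimizing the language field.
feature_net.nudge(0.05)

density_after = nerf_net(point)[3]
features_after = feature_net(point)

print("density unchanged:", density_before == density_after)
print("features changed:", features_before != features_after)
```

Because the two networks share no weights, the scene geometry is isolated from the language objective by construction, which matches the inductive bias the text describes.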
