Why military AI startups have become so popular in recent years

Two weeks into the Russia-Ukraine conflict, Alexander Karp, CEO of the data analytics company Palantir, made a proposal to European leaders. In an open letter, he said Europeans should modernize their weapons with help from Silicon Valley.

For Europe to “remain strong enough to defeat the threat of foreign occupation,” Karp wrote, countries need to embrace the relationship between technology and the state, and between disruptive companies seeking to break the grip of entrenched contractors and the government agencies that control funding.

Militaries have begun to answer this call. NATO announced on June 30 that it was creating a $1 billion innovation fund that will invest in early-stage startups and venture capital funds to develop "priority" technologies such as artificial intelligence, big data processing and automation.

Since the start of the Russia-Ukraine conflict, the UK has launched a new artificial intelligence strategy specifically for defense, and Germany has allocated nearly $5 billion to military research and artificial intelligence, after already injecting $100 billion into its armed forces.

"War is a catalyst for change," says Kenneth Payne, author of "I, Warbot: The Dawn of Artificially Intelligent Conflict" and head of defense studies research at King's College London.

The Russia-Ukraine conflict has increased the urgency to bring more artificial intelligence tools to the battlefield. Those who stand to benefit the most are startups like Palantir, which hope to cash in as militaries race to update their arsenals with the latest technology. But as the technology becomes more advanced, long-standing ethical questions about using artificial intelligence in warfare become more pressing, and the prospect of limiting and regulating its use looks as remote as ever.

The relationship between technology and the military has not always been friendly. In 2018, after employee protests and outrage, Google withdrew from the Pentagon's Project Maven, which sought to build an image recognition system to improve drone strikes. The incident sparked a heated debate about human rights and the ethics of developing artificial intelligence for autonomous weapons.

The project also led many prominent AI researchers to pledge not to work on lethal AI. They include Turing Award winner Yoshua Bengio and Demis Hassabis, Shane Legg and Mustafa Suleyman, the founders of the industry-leading AI lab DeepMind.

Four years later, Silicon Valley is closer to the military than ever. Yll Bajraktari, formerly executive director of the National Security Commission on Artificial Intelligence (NSCAI) and now at the Special Competitive Studies Project, a group that lobbies for greater adoption of AI in the United States, said that it is not only large companies getting involved: many startups are entering the field as well.

Why choose AI

Companies selling military AI make expansive claims about what their technology can do. They say AI can help with everything from the "mundane" to the "lethal": screening targets, processing data from satellites, identifying patterns in data, helping soldiers make faster decisions on the battlefield, and more. Image recognition software can help identify targets. Autonomous drones could be used for surveillance or attacks on land, in the air or at sea, or to help soldiers deliver supplies more safely than is possible by land.

Payne said the use of AI on the battlefield is still in its infancy, and militaries are going through a period of experimentation in which some technologies do not pan out. AI companies have often made big promises about technologies that turned out not to work as advertised, and war zones are among the most challenging places to deploy AI because there is little relevant training data.

Arthur Holland Michel, an expert on drones and other surveillance technologies, argued in a paper for the United Nations Institute for Disarmament Research that this could cause autonomous systems to fail in "complex and unpredictable ways."

Despite this, many militaries are still actively promoting the implementation of AI. In a vaguely worded press release in 2021, the British Army proudly announced that it was using AI for the first time in military operations to provide information about the surrounding environment and terrain. The United States is working with startups to develop self-driving military vehicles. In the future, hundreds or even thousands of autonomous drones being developed by the U.S. and British militaries could prove to be powerful and deadly weapons.

Many experts are concerned about this. Meredith Whittaker, senior adviser for artificial intelligence at the Federal Trade Commission and faculty director of the AI Now Institute, said the push is really more about enriching tech companies than improving military operations.

In a joint article for Prospect magazine with Lancaster University sociology professor Lucy Suchman, she argued that AI boosters are fanning the flames of Cold War rhetoric and trying to create a narrative that positions big tech companies as “critical national infrastructure,” and therefore too important to break up or regulate. They warn that the military's adoption of artificial intelligence is being presented as an inevitability rather than what it truly is: an active choice involving moral complexities and trade-offs.

AI war chests

As the controversy surrounding Maven has receded, calls for more AI in defense have grown louder over the past few years. One of the loudest voices has been former Google CEO Eric Schmidt, who chairs the NSCAI and has called for the United States to take a more aggressive approach to military AI adoption.

In a report last year, the NSCAI outlined steps the U.S. should take to get up to speed on artificial intelligence by 2025, calling on the U.S. military to invest $8 billion annually in these technologies or risk falling behind other countries.

The U.S. Department of Defense requested $874 million for artificial intelligence for 2022, although that figure does not reflect the department’s total AI investments, it said in a March 2022 report.

It’s not just the U.S. military that is convinced of this need. Heiko Borchert, co-director of the Defense Artificial Intelligence Observatory at Helmut Schmidt University in Hamburg, Germany, said that European countries, which tend to be more cautious about adopting new technologies, are also investing more in artificial intelligence.

France and the UK have identified artificial intelligence as a key defense technology, and the European Commission, the EU’s executive arm, has set aside $1 billion to develop new defense technologies.

The above is the detailed content of Why military AI startups have become so popular in recent years. For more information, please follow other related articles on the PHP Chinese website!

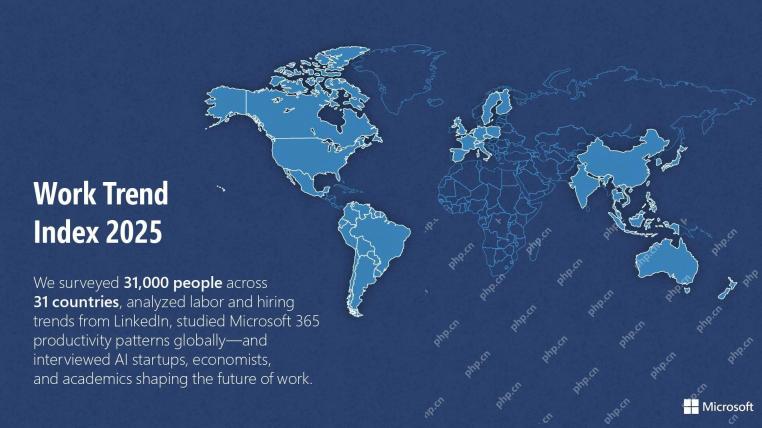

Microsoft Work Trend Index 2025 Shows Workplace Capacity StrainApr 24, 2025 am 11:19 AM

Microsoft Work Trend Index 2025 Shows Workplace Capacity StrainApr 24, 2025 am 11:19 AMThe burgeoning capacity crisis in the workplace, exacerbated by the rapid integration of AI, demands a strategic shift beyond incremental adjustments. This is underscored by the WTI's findings: 68% of employees struggle with workload, leading to bur

Can AI Understand? The Chinese Room Argument Says No, But Is It Right?Apr 24, 2025 am 11:18 AM

Can AI Understand? The Chinese Room Argument Says No, But Is It Right?Apr 24, 2025 am 11:18 AMJohn Searle's Chinese Room Argument: A Challenge to AI Understanding Searle's thought experiment directly questions whether artificial intelligence can genuinely comprehend language or possess true consciousness. Imagine a person, ignorant of Chines

China's 'Smart' AI Assistants Echo Microsoft Recall's Privacy FlawsApr 24, 2025 am 11:17 AM

China's 'Smart' AI Assistants Echo Microsoft Recall's Privacy FlawsApr 24, 2025 am 11:17 AMChina's tech giants are charting a different course in AI development compared to their Western counterparts. Instead of focusing solely on technical benchmarks and API integrations, they're prioritizing "screen-aware" AI assistants – AI t

Docker Brings Familiar Container Workflow To AI Models And MCP ToolsApr 24, 2025 am 11:16 AM

Docker Brings Familiar Container Workflow To AI Models And MCP ToolsApr 24, 2025 am 11:16 AMMCP: Empower AI systems to access external tools Model Context Protocol (MCP) enables AI applications to interact with external tools and data sources through standardized interfaces. Developed by Anthropic and supported by major AI providers, MCP allows language models and agents to discover available tools and call them with appropriate parameters. However, there are some challenges in implementing MCP servers, including environmental conflicts, security vulnerabilities, and inconsistent cross-platform behavior. Forbes article "Anthropic's model context protocol is a big step in the development of AI agents" Author: Janakiram MSVDocker solves these problems through containerization. Doc built on Docker Hub infrastructure

Using 6 AI Street-Smart Strategies To Build A Billion-Dollar StartupApr 24, 2025 am 11:15 AM

Using 6 AI Street-Smart Strategies To Build A Billion-Dollar StartupApr 24, 2025 am 11:15 AMSix strategies employed by visionary entrepreneurs who leveraged cutting-edge technology and shrewd business acumen to create highly profitable, scalable companies while maintaining control. This guide is for aspiring entrepreneurs aiming to build a

Google Photos Update Unlocks Stunning Ultra HDR For All Your PicturesApr 24, 2025 am 11:14 AM

Google Photos Update Unlocks Stunning Ultra HDR For All Your PicturesApr 24, 2025 am 11:14 AMGoogle Photos' New Ultra HDR Tool: A Game Changer for Image Enhancement Google Photos has introduced a powerful Ultra HDR conversion tool, transforming standard photos into vibrant, high-dynamic-range images. This enhancement benefits photographers a

Descope Builds Authentication Framework For AI Agent IntegrationApr 24, 2025 am 11:13 AM

Descope Builds Authentication Framework For AI Agent IntegrationApr 24, 2025 am 11:13 AMTechnical Architecture Solves Emerging Authentication Challenges The Agentic Identity Hub tackles a problem many organizations only discover after beginning AI agent implementation that traditional authentication methods aren’t designed for machine-

Google Cloud Next 2025 And The Connected Future Of Modern WorkApr 24, 2025 am 11:12 AM

Google Cloud Next 2025 And The Connected Future Of Modern WorkApr 24, 2025 am 11:12 AM(Note: Google is an advisory client of my firm, Moor Insights & Strategy.) AI: From Experiment to Enterprise Foundation Google Cloud Next 2025 showcased AI's evolution from experimental feature to a core component of enterprise technology, stream

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Dreamweaver Mac version

Visual web development tools

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.