
Modular MoE will become the basic model for visual multi-task learning

- WBOY

- 2023-04-13 12:40:03

Multi-task learning (MTL) is challenging because the gradients of different tasks may conflict. To exploit the correlation between tasks, the authors introduce Mod-Squad, a modular model composed of multiple experts. The model flexibly optimizes the matching between tasks and experts, selecting a subset of experts for each task: each expert serves only some of the tasks, and each task uses only some of the experts, which makes the most of the positive connections between tasks. Mod-Squad integrates Mixture of Experts (MoE) layers into a Vision Transformer and introduces a new loss function that encourages sparse but strong dependencies between experts and tasks. Furthermore, for each task, the model can keep only a small portion of the expert network and still match the performance of the original large model. The model achieved the best results on the large Taskonomy dataset of 13 vision tasks and on the PASCAL-Context dataset.

Paper address: https://arxiv.org/abs/2212.08066

Project address: https://vis-www.cs.umass.edu/mod-squad/

GitHub address: https://github.com/UMass-Foundation-Model/Mod-Squad

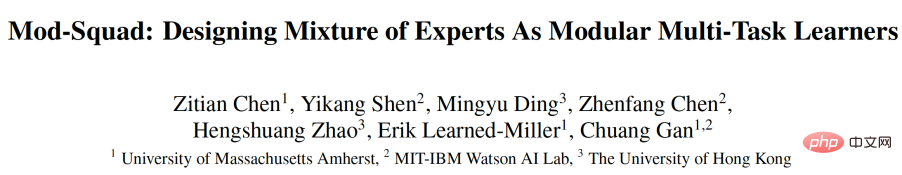

The purpose of multi-task learning (MTL) is to model the relationships between tasks and to build a unified model for multiple tasks. As shown in Figure 1, the main motivation of Mod-Squad is to let each expert be updated by only some of the tasks rather than all of them, and to let each task update only some of the experts. This allows the full capacity of the model to be used while avoiding interference between tasks.

Figure 1. Mod-Squad: experts and tasks choose each other. MoE ViT: all experts are used by all tasks.

The following is a brief introduction to the article.

Model structure

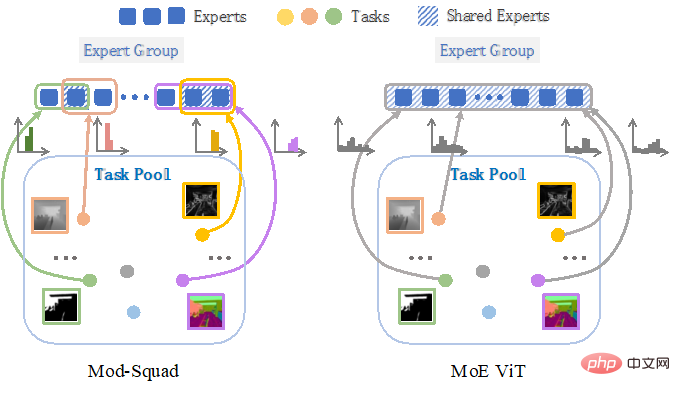

Figure 2. Mod-Squad: inserting Mixture-of-Experts (MoE) layers into the Vision Transformer.

As shown in Figure 2, Mod-Squad introduces Mixture-of-Experts (MoE) layers into the Vision Transformer (ViT). MoE is a machine learning model in which multiple experts form a mixture model. Each expert is an independent model, and each contributes differently to different inputs. The contributions of the experts are then weighted and combined to produce the final output. The advantage of this approach is that it can dynamically select the best experts based on the content of the input image while keeping the amount of computation under control.
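The weighted combination of experts described above can be illustrated with a minimal NumPy sketch. It is not the Mod-Squad implementation: the function name `moe_forward`, the linear experts, the top-k routing rule, and all shapes are assumptions chosen for illustration only.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def moe_forward(x, gate_w, expert_ws, top_k=2):
    """Hypothetical MoE layer: route each token to its top_k experts.

    x:         (n_tokens, d) input tokens
    gate_w:    (d, n_experts) weights of the gating (weight) network
    expert_ws: (n_experts, d, d) one linear map per expert (an assumption;
               real experts are usually small MLPs)
    """
    logits = x @ gate_w                                   # (n_tokens, n_experts)
    top_idx = np.argsort(-logits, axis=1)[:, :top_k]      # best experts per token
    top_logits = np.take_along_axis(logits, top_idx, axis=1)
    weights = softmax(top_logits, axis=1)                 # renormalize over the chosen experts
    out = np.zeros_like(x)
    for t in range(x.shape[0]):
        for slot in range(top_k):
            e = top_idx[t, slot]
            # Only the selected experts are evaluated, which bounds the computation.
            out[t] += weights[t, slot] * (x[t] @ expert_ws[e])
    return out, top_idx, weights

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))            # 4 tokens, feature size 8
gate_w = rng.normal(size=(8, 6))       # 6 experts
expert_ws = rng.normal(size=(6, 8, 8))
out, top_idx, weights = moe_forward(x, gate_w, expert_ws, top_k=2)
```

Because each token only runs `top_k` of the experts, the cost per token stays fixed even as the total number of experts grows.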

In previous MoE models, after convergence, different experts are used for different images, but for any given task the model tends to use all of the experts. Mod-Squad also lets the model use different experts for different images, but after convergence it reaches a state where each task uses only a subset of the experts. The following explains how this is achieved.

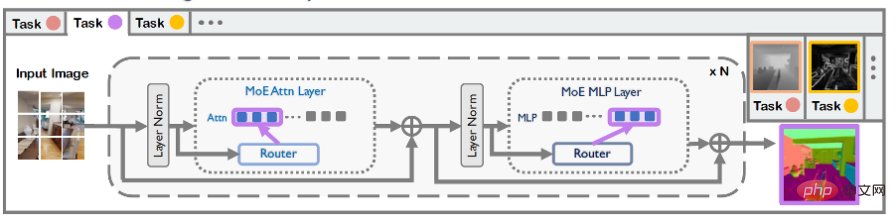

Maximize mutual information between experts and tasks

The paper proposes a joint probability model of tasks and experts to optimize the assignment between experts E and tasks T. This probability model is used to calculate the mutual information between experts and tasks, which serves as an additional loss term for optimizing the weight network in the MoE. The formula for mutual information is as follows. The probabilities of E and T can be obtained from the weight network in the MoE; for details, please refer to the paper.

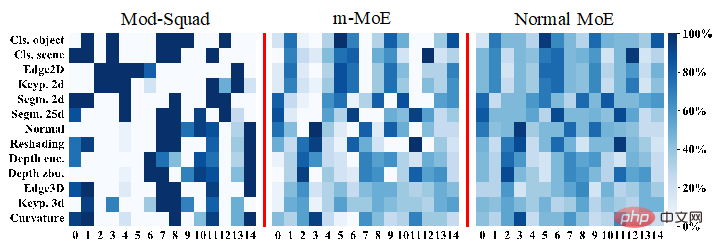
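For readers who cannot view the image, the standard definition of mutual information between tasks and experts can be written as below. The index ranges (M tasks, K experts) are notation assumed here, and the paper's exact form may differ:

```latex
I(T; E) = \sum_{i=1}^{M} \sum_{j=1}^{K} P(T_i, E_j) \, \log \frac{P(T_i, E_j)}{P(T_i)\, P(E_j)}
```

The quantity is zero when tasks and experts are independent, that is, when every task uses every expert in the same proportions, and it grows as each task concentrates on its own subset of experts.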

After maximizing the mutual information between tasks and experts, the model develops sparse and very strong dependencies between experts and tasks, as shown in Figure 3. The leftmost plot shows how frequently each task uses each expert in Mod-Squad. As can be seen, Mod-Squad has sparser but sharper usage frequencies between tasks and experts.

Figure 3. Frequency plot comparison of tasks using different experts. The horizontal axis represents different experts, the vertical axis represents different tasks, and darker colors represent higher frequency of use. The frequency plot of Mod-Squad is sparser and sharper.
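To make the link between mutual information and sparsity concrete, here is a small sketch that computes mutual information from a task-by-expert joint probability table. The function name `mutual_information` and the two toy tables are assumptions for illustration; they are not data from the paper.

```python
import numpy as np

def mutual_information(joint):
    """Mutual information (in nats) of a (n_tasks, n_experts) joint table."""
    joint = np.asarray(joint, dtype=float)
    joint = joint / joint.sum()                 # normalize to a probability table
    p_t = joint.sum(axis=1, keepdims=True)      # marginal over tasks, shape (n_tasks, 1)
    p_e = joint.sum(axis=0, keepdims=True)      # marginal over experts, shape (1, n_experts)
    mask = joint > 0                            # skip zero cells (0 * log 0 := 0)
    ratio = joint[mask] / (p_t @ p_e)[mask]
    return float(np.sum(joint[mask] * np.log(ratio)))

# Toy case 1: every task uses every expert equally (dense, MoE-ViT-like usage).
dense = np.full((3, 3), 1.0 / 9.0)
# Toy case 2: each task uses exactly one expert (sparse, sharp usage).
sparse = np.eye(3) / 3.0

mi_dense = mutual_information(dense)
mi_sparse = mutual_information(sparse)
```

In this toy setting the dense table gives a mutual information of zero, while the sparse table reaches the maximum possible value for three tasks, log 3. A loss term that adds the negative of this quantity therefore pushes the usage pattern toward the sparse, sharp case.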

The advantages of sparse and very strong dependencies between tasks and experts are:

1. Similar tasks tend to use the same experts;

2. Experts tend to be used by a group of positively related tasks;

3. The capacity of the model is fully used, but each task uses only part of the capacity, and the capacity can be adjusted according to the task;

4. A small single-task model can be extracted from the large multi-task model for a specific task, with the same performance as the larger model. This feature can be used to extract small single-task models from very large multi-task models.
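The extraction in point 4 can be sketched as a simple filter on expert usage frequency. The thresholding rule, the function name `experts_to_keep`, the threshold value, and the usage table are all assumptions for illustration; the paper should be consulted for the actual pruning procedure.

```python
import numpy as np

def experts_to_keep(usage, task, threshold=0.05):
    """Return indices of experts a task uses at least `threshold` of the time.

    usage: (n_tasks, n_experts) table of how often each task routes to each expert.
    """
    freq = usage[task] / usage[task].sum()      # per-task usage frequency
    return np.flatnonzero(freq >= threshold)

# Hypothetical usage table: 3 tasks, 4 experts, sparse and sharp as in Figure 3.
usage = np.array([
    [0.48, 0.50, 0.01, 0.01],
    [0.02, 0.49, 0.49, 0.00],
    [0.00, 0.01, 0.02, 0.97],
])

# Experts retained in the single-task model extracted for each task.
kept = {t: experts_to_keep(usage, t).tolist() for t in range(usage.shape[0])}
```

With sharp usage frequencies, the rarely used experts carry almost no weight in the output for that task, which is why dropping them can leave performance essentially unchanged.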

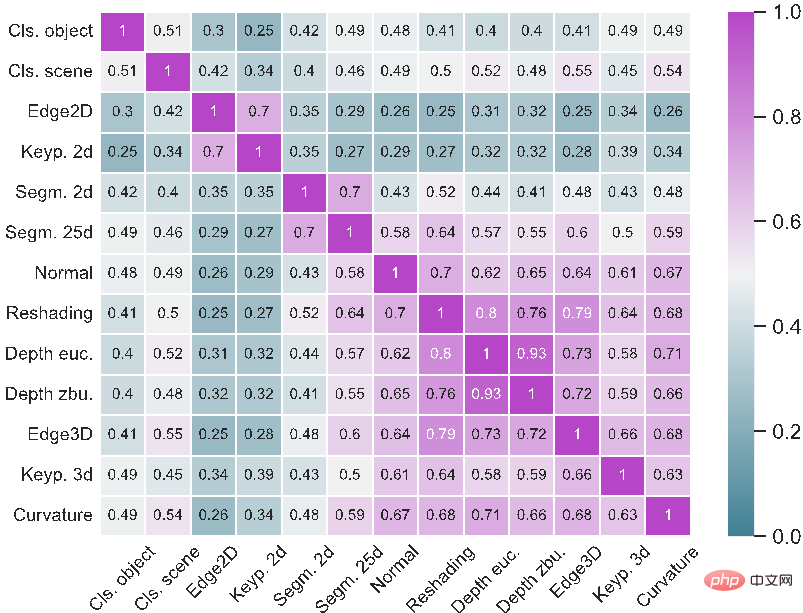

Based on how frequently tasks share experts, the model can also calculate the similarity between tasks, as shown in the figure below. It can be seen that 3D-oriented tasks tend to use the same experts and are therefore more similar.
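One way to turn shared expert usage into a task similarity score is sketched below. Cosine similarity between usage-frequency vectors is an assumption made here for illustration and may not be the measure used in the paper; the function name and the task rows are hypothetical.

```python
import numpy as np

def task_similarity(usage):
    """Cosine similarity between the expert-usage profiles of all task pairs.

    usage: (n_tasks, n_experts) table of expert usage per task.
    """
    p = usage / usage.sum(axis=1, keepdims=True)           # per-task frequencies
    unit = p / np.linalg.norm(p, axis=1, keepdims=True)    # unit-length profiles
    return unit @ unit.T                                   # (n_tasks, n_tasks)

# Hypothetical rows: two 3D-style tasks sharing experts, one unrelated task.
usage = np.array([
    [0.5, 0.5, 0.0, 0.0],   # e.g. a depth-like task
    [0.4, 0.6, 0.0, 0.0],   # e.g. a surface-normal-like task
    [0.0, 0.0, 0.5, 0.5],   # e.g. a classification-like task
])
sim = task_similarity(usage)
```

Tasks that route to the same experts get a similarity close to 1, while tasks with disjoint experts get 0.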

Experimental part

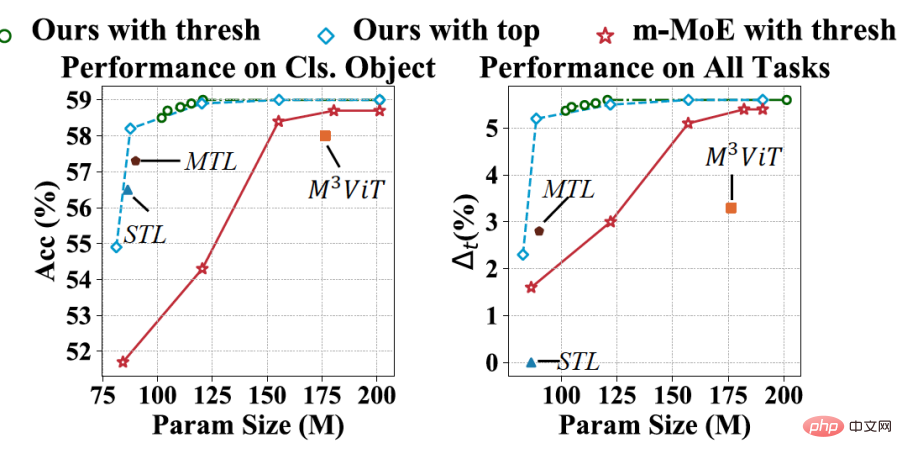

Mod-Squad can be pruned for a single task without losing accuracy. In the figure below, the vertical axis is performance and the horizontal axis is the number of parameters.

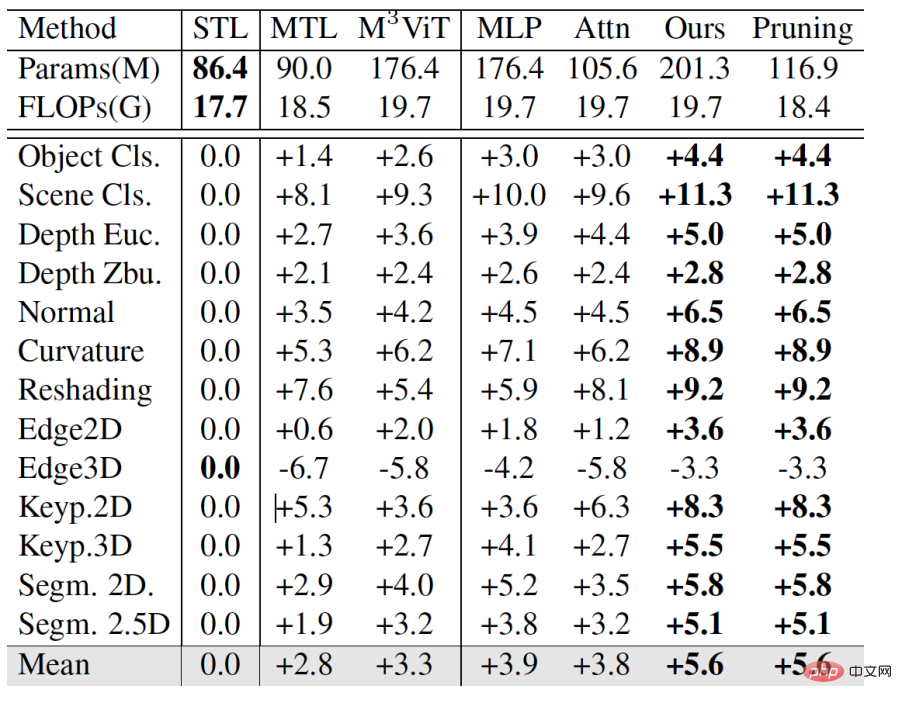

There is also a large improvement on the large Taskonomy dataset. Mod-Squad is 2.8 points higher on average than pure MTL, and it maintains the same performance after pruning.

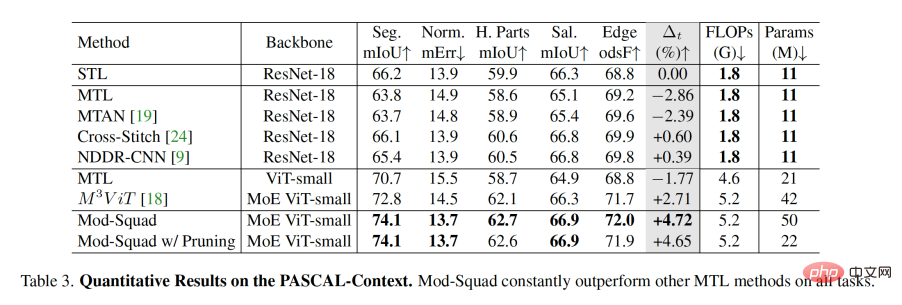

On PASCAL-Context, Mod-Squad is on average nearly two points higher than other MoE methods.

Please refer to the original text for specific details.
