
The single-view NeRF algorithm S^3-NeRF uses multi-illumination information to restore scene geometry and material information.

- WBOY (reposted)

- 2023-04-13 10:58:02

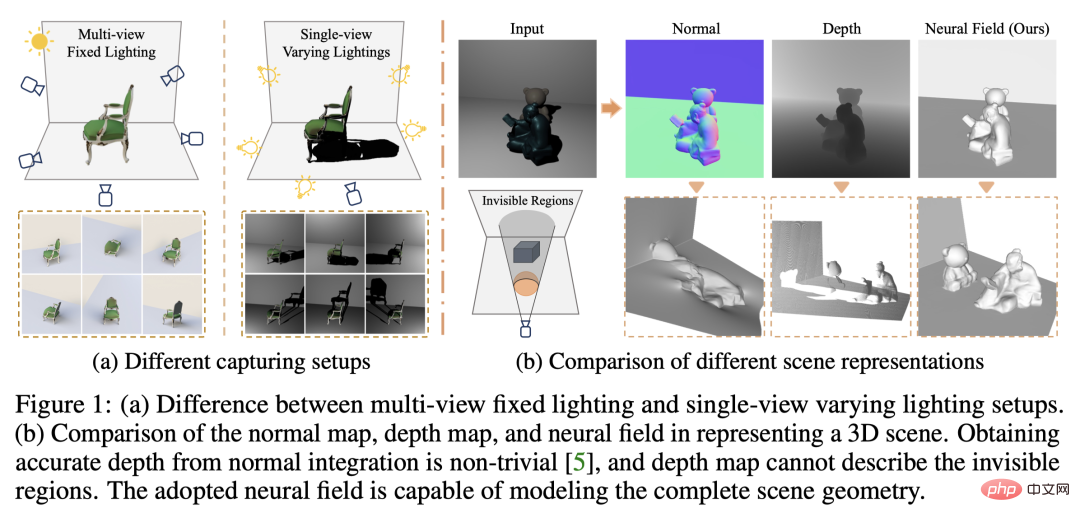

Current 3D reconstruction work typically relies on multi-view stereo (MVS), which captures the target scene from multiple viewpoints under constant natural lighting. However, these methods usually assume Lambertian surfaces and have difficulty recovering high-frequency details.

Another approach to scene reconstruction uses images captured from a fixed viewpoint under different point lights. Photometric stereo methods, for example, adopt this setup and use the shading information to reconstruct the surface details of non-Lambertian objects. However, existing single-view methods usually represent the visible surface with normal maps or depth maps, so they cannot describe the back side of objects or occluded areas, and can only reconstruct 2.5D scene geometry. In addition, normal maps cannot handle depth discontinuities.

In a recent study, researchers from the University of Hong Kong, the Chinese University of Hong Kong (Shenzhen), Nanyang Technological University, and MIT-IBM Watson AI Lab proposed a method that uses single-view, multi-light images to reconstruct complete 3D scenes.

- Paper link: https://arxiv.org/abs/2210.08936

- Paper homepage: https://ywq.github.io/s3nerf/

- Code link: https://github.com/ywq/s3nerf

Unlike existing single-view approaches based on normal maps or depth maps, S3-NeRF is built on a neural scene representation and uses the shading and shadow information in the scene to reconstruct the entire 3D scene, including both visible and invisible areas. Neural scene representation methods use multilayer perceptrons (MLPs) to model continuous 3D space, mapping 3D points to scene attributes such as density and color. Although neural scene representations have made significant progress in multi-view reconstruction and novel view synthesis, they have been less explored for single-view scene modeling. Unlike existing neural scene representation methods that rely on multi-view photo-consistency, S3-NeRF optimizes the neural field mainly through the shading and shadow information available from a single view.

We found that simply feeding the light source position into NeRF as an extra input cannot reconstruct the geometry and appearance of the scene. To make better use of the captured photometric stereo images, we explicitly model the surface geometry and BRDF with a reflectance field, use physically based rendering to compute the color of each 3D point in the scene, and obtain the color of the 2D pixel corresponding to each ray through volume rendering. At the same time, we model the visibility of the scene in a differentiable way, computing the visibility of a point by tracing the ray between the 3D point and the light source. However, evaluating the visibility of every sample point on a ray is computationally expensive, so we optimize shadow modeling by computing visibility only at the surface points obtained by ray tracing.

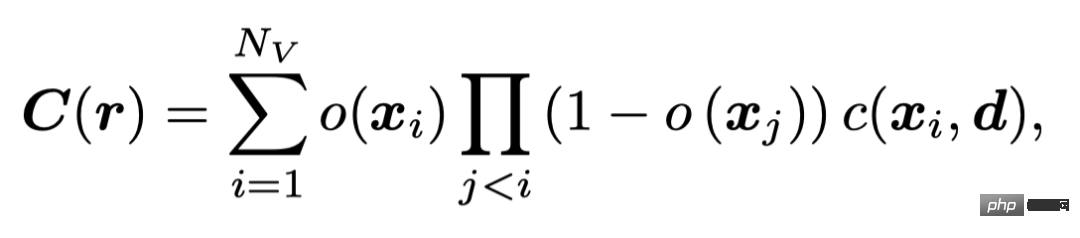

We use an occupancy field similar to UNISURF to represent scene geometry. UNISURF uses an MLP to map the coordinates of a 3D point and the view direction to the occupancy value and color of that point, and obtains the pixel color through volume rendering:

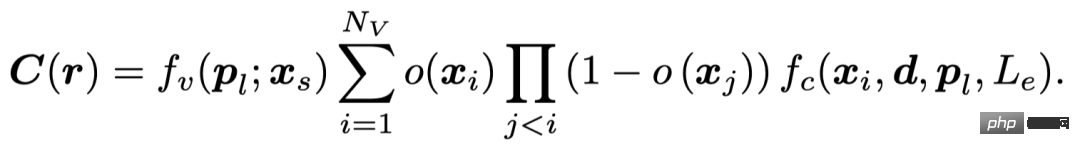

Here N_V is the number of sampling points on each ray.
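The compositing step described above can be sketched in a few lines of pure Python. The weighting used here (the occupancy of a sample times the product of one minus the occupancies of all samples in front of it) follows the UNISURF formulation; the function name and the list-based inputs are illustrative assumptions, not taken from the S3-NeRF code.

```python
def render_pixel(occupancies, colors):
    """Composite per-sample colors along one ray using occupancy weights.

    occupancies: floats in [0, 1], ordered from near to far (N_V samples).
    colors: one (r, g, b) tuple per sample.
    """
    pixel = [0.0, 0.0, 0.0]
    unblocked = 1.0  # product of (1 - occupancy) over the samples in front
    for occupancy, color in zip(occupancies, colors):
        weight = occupancy * unblocked
        for channel in range(3):
            pixel[channel] += weight * color[channel]
        unblocked *= 1.0 - occupancy
    return tuple(pixel)


# A ray that passes through empty space and then hits an opaque green
# sample: the blue sample behind it receives zero weight.
print(render_pixel([0.0, 1.0, 1.0],
                   [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]))
```

Because the weights depend only on occupancy, a fully occupied sample hides everything behind it, which is what lets an occupancy field behave like a surface.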

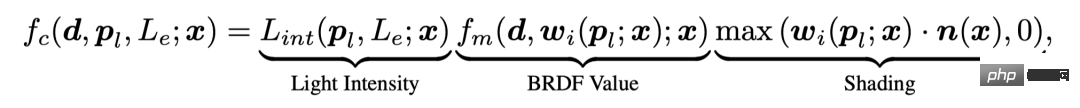

To make effective use of the shading information in photometric stereo images, S3-NeRF explicitly models the BRDF of the scene and uses physically based rendering to compute the color of each 3D point. We also model the light visibility of 3D points in the scene to exploit the rich shadow cues in the images, and obtain the final pixel value through the following equation.

Physically Based Rendering Model

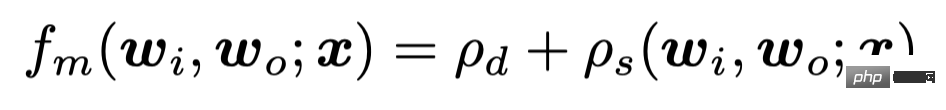

Our approach handles non-Lambertian surfaces and spatially varying BRDFs, and models the value of a point x observed from view direction d under a near-field point light source (p_l, L_e).

Here we account for the attenuation of the point light source: the intensity of the light incident on the point is computed from the distance between the light source and the point. We use a BRDF model that considers both diffuse and specular reflection,

and represent the specular reflectance as a weighted combination of spherical Gaussian bases.

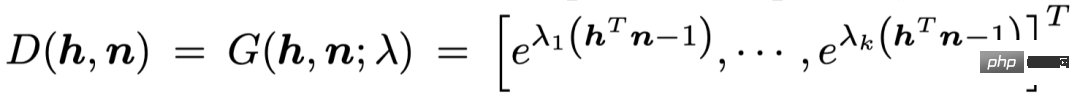

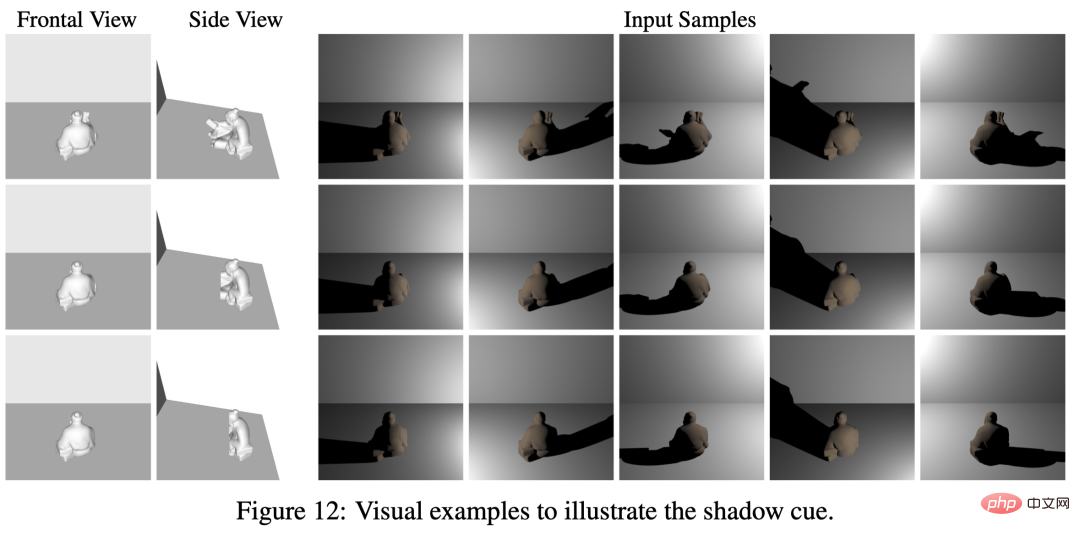
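To make the shading model above concrete, here is a rough sketch of how a single point could be shaded under a near-field point light. Several details are assumptions rather than the paper's exact formulas: the inverse-square falloff, the half-vector parameterization of the spherical Gaussian lobes, and every name in the code (`shade_point`, `sg_weights`, `sg_sharpness`) are hypothetical.

```python
import math


def _dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def _normalize(v):
    length = math.sqrt(_dot(v, v))
    # Leave zero-length vectors untouched to avoid dividing by zero.
    return tuple(c / length for c in v) if length > 0.0 else tuple(v)


def shade_point(x, normal, view_dir, light_pos, light_intensity,
                albedo, sg_weights, sg_sharpness):
    """Color of point x seen along view_dir under a point light (p_l, L_e)."""
    to_light = tuple(p - q for p, q in zip(light_pos, x))
    dist_sq = _dot(to_light, to_light)
    w_i = _normalize(to_light)                        # direction to the light
    w_o = _normalize(tuple(-c for c in view_dir))     # direction to the camera
    n = _normalize(normal)

    # Assumed inverse-square attenuation of the point light.
    incident = light_intensity / dist_sq

    # Specular term: weighted sum of spherical Gaussian lobes around the
    # normal, evaluated at the half vector (an assumed parameterization).
    h = _normalize(tuple(a + b for a, b in zip(w_i, w_o)))
    specular = sum(w * math.exp(-lam * (1.0 - _dot(h, n)))
                   for w, lam in zip(sg_weights, sg_sharpness))

    cos_term = max(_dot(w_i, n), 0.0)  # no light arrives from below the surface
    return tuple(incident * (a + specular) * cos_term for a in albedo)


# Light directly above the point, camera looking straight down.
print(shade_point((0, 0, 0), (0, 0, 1), (0, 0, -1), (0, 0, 2), 4.0,
                  (0.5, 0.5, 0.5), [0.2], [10.0]))
```

In this sketch, moving the light twice as far away cuts the shaded value to a quarter. That distance dependence is the kind of near-field cue that a distant-light model would not provide.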

Shadow modeling

Shadows are one of the crucial cues in scene geometry reconstruction. The three objects in the picture have the same shape and appearance when viewed from the front, but different shapes on the back. Under different lighting, the shadows they cast have different shapes, which reveals geometric information about the areas that are invisible from the front view. Through the shadows cast onto the background, the light imposes constraints on the back contour of the object.

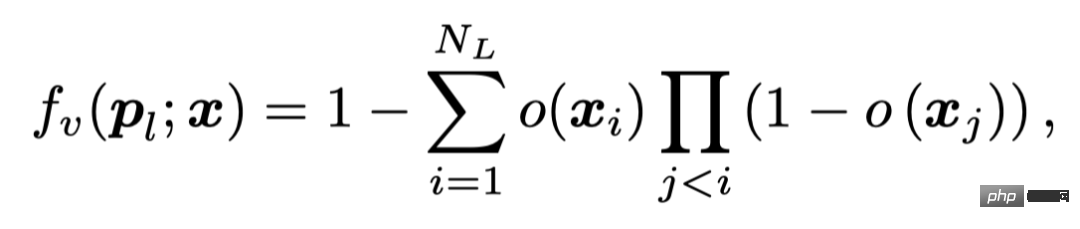

We compute the light visibility of a point from the occupancy values sampled between the 3D point and the light source,

where N_L is the number of points sampled on the line segment between the point and the light source.
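A small sketch of this visibility computation follows, assuming that visibility is the product of (1 - occupancy) over the N_L samples on the segment. That product form, the uniform sample spacing, and the `occupancy_fn` callback are illustrative assumptions, not the paper's exact equation or code.

```python
def light_visibility(occupancy_fn, x, light_pos, num_samples=64):
    """Fraction of light from light_pos that reaches x (1 = lit, 0 = shadowed).

    occupancy_fn: maps a 3D point (tuple) to an occupancy in [0, 1].
    num_samples: N_L, the number of samples on the point-to-light segment.
    """
    visibility = 1.0
    for i in range(1, num_samples + 1):
        t = i / (num_samples + 1)  # strictly between the point and the light
        sample = tuple(a + t * (b - a) for a, b in zip(x, light_pos))
        visibility *= 1.0 - occupancy_fn(sample)
    return visibility


def sphere_occupancy(p):
    """Toy scene: a solid sphere of radius 0.25 centered at (0, 0, 1)."""
    dist_sq = p[0] ** 2 + p[1] ** 2 + (p[2] - 1.0) ** 2
    return 1.0 if dist_sq < 0.25 ** 2 else 0.0


# The sphere sits between the origin and a light on the z axis, so the
# origin is in shadow; a light on the x axis is unobstructed.
print(light_visibility(sphere_occupancy, (0, 0, 0), (0, 0, 2)))
print(light_visibility(sphere_occupancy, (0, 0, 0), (2, 0, 0)))
```

Because the product is built from occupancy values, gradients of a shadow error can flow back into the geometry along the whole point-to-light segment, including parts of the object the camera never sees.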

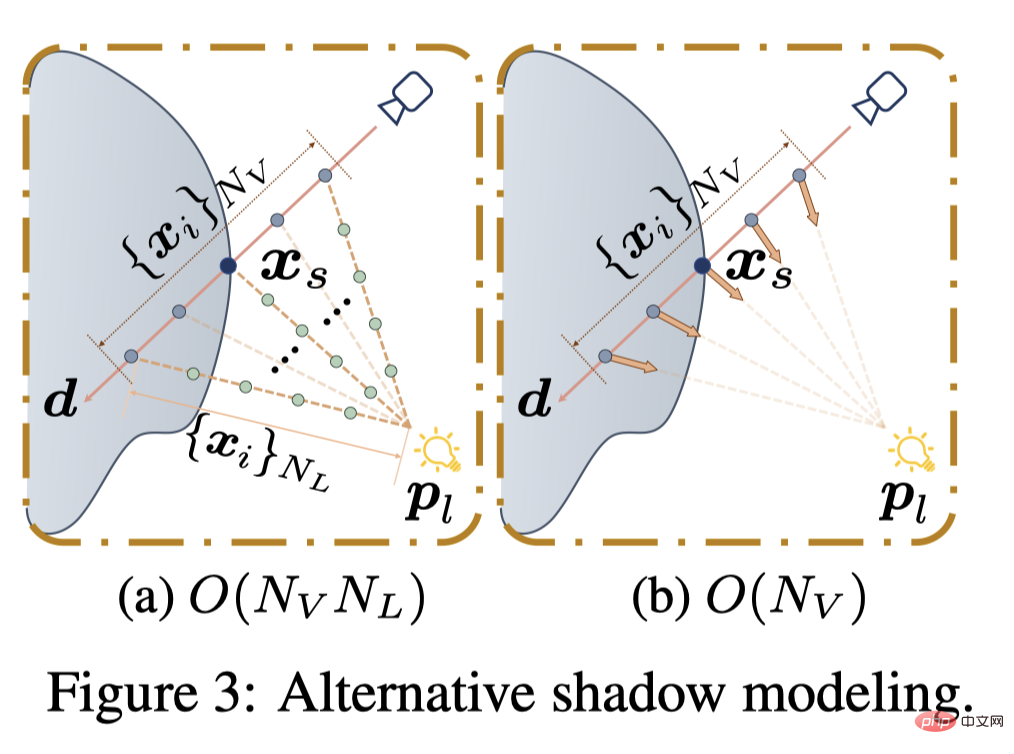

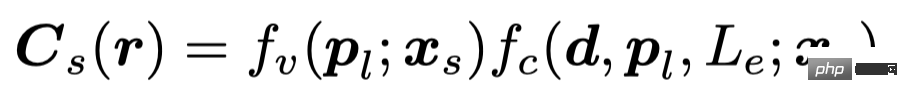

Computing the visibility of all N_V points sampled along each pixel's ray is expensive (O(N_V N_L)), so some existing methods either use an MLP to directly regress the visibility of a point (O(N_V)) or pre-extract surface points once the scene geometry is available (O(N_L)). S3-NeRF instead computes the light visibility of a pixel online at the surface point located by root-finding, and uses it when computing the pixel value.
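The surface-locating step can be illustrated with a simple one-dimensional root finder along a ray. This is only a sketch under stated assumptions: the surface is taken to be the 0.5 level set of the occupancy, the refinement uses bisection for simplicity, and the function and parameter names are hypothetical.

```python
import math


def find_surface_depth(occupancy_along_ray, near, far,
                       num_steps=64, num_refine=20, level=0.5):
    """Return the depth of the first free-to-occupied crossing, or None.

    occupancy_along_ray: maps a depth t along the ray to an occupancy value.
    """
    step = (far - near) / num_steps
    t_prev = near
    o_prev = occupancy_along_ray(t_prev)
    for i in range(1, num_steps + 1):
        t_cur = near + i * step
        o_cur = occupancy_along_ray(t_cur)
        if o_prev < level <= o_cur:
            # The crossing lies in [t_prev, t_cur]; refine it by bisection.
            lo, hi = t_prev, t_cur
            for _ in range(num_refine):
                mid = 0.5 * (lo + hi)
                if occupancy_along_ray(mid) < level:
                    lo = mid
                else:
                    hi = mid
            return 0.5 * (lo + hi)
        t_prev, o_prev = t_cur, o_cur
    return None  # the ray never enters occupied space


def soft_wall(t):
    """Toy occupancy profile: a smooth wall whose 0.5 level is at depth 1.3."""
    return 1.0 / (1.0 + math.exp(-20.0 * (t - 1.3)))


print(find_surface_depth(soft_wall, near=0.0, far=4.0))
```

Once the surface depth is known, light visibility only has to be evaluated at that single point, so the shadow cost per pixel is the root-finding queries plus N_L occupancy samples rather than N_V times N_L.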

Scene Optimization

Our method does not require shadow supervision; it relies on the image reconstruction loss for optimization. Because a single view provides no additional constraints from other viewpoints, adopting a sampling strategy like UNISURF's, which gradually narrows the sampling range, causes the model to degrade once the sampling interval shrinks. We therefore adopt a joint volume rendering and surface rendering strategy, using root-finding to locate surface points, rendering their color, and computing an L1 loss.

Experimental results

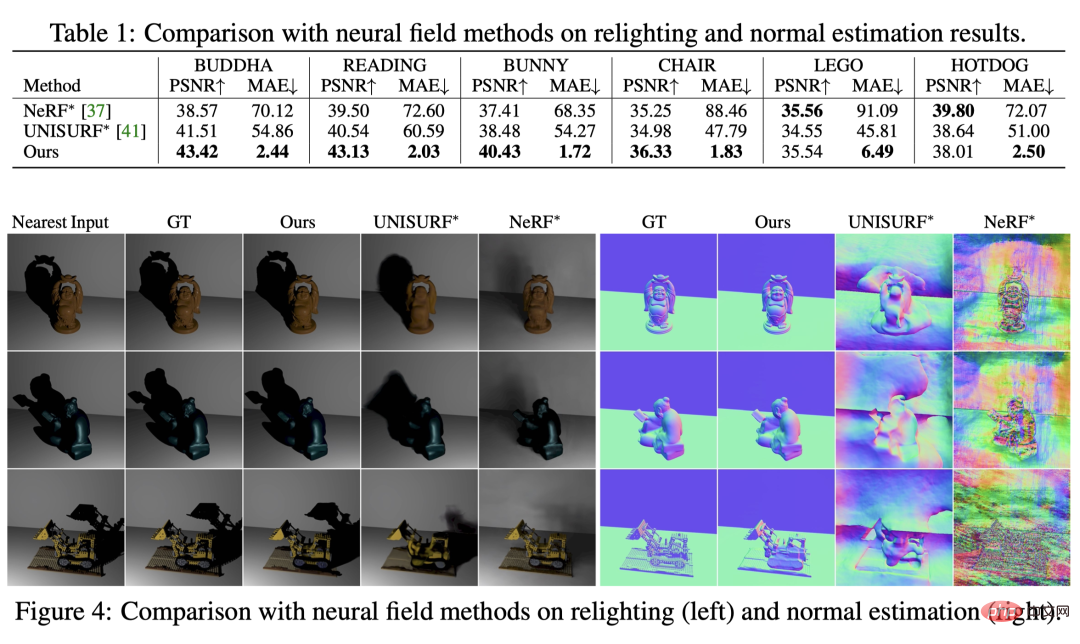

Comparison with neural radiance field methods

We first compare with two baseline methods based on neural radiance fields (because the tasks differ, we feed light source information into their color MLPs). They are unable to reconstruct the scene geometry or accurately generate shadows under new lighting.

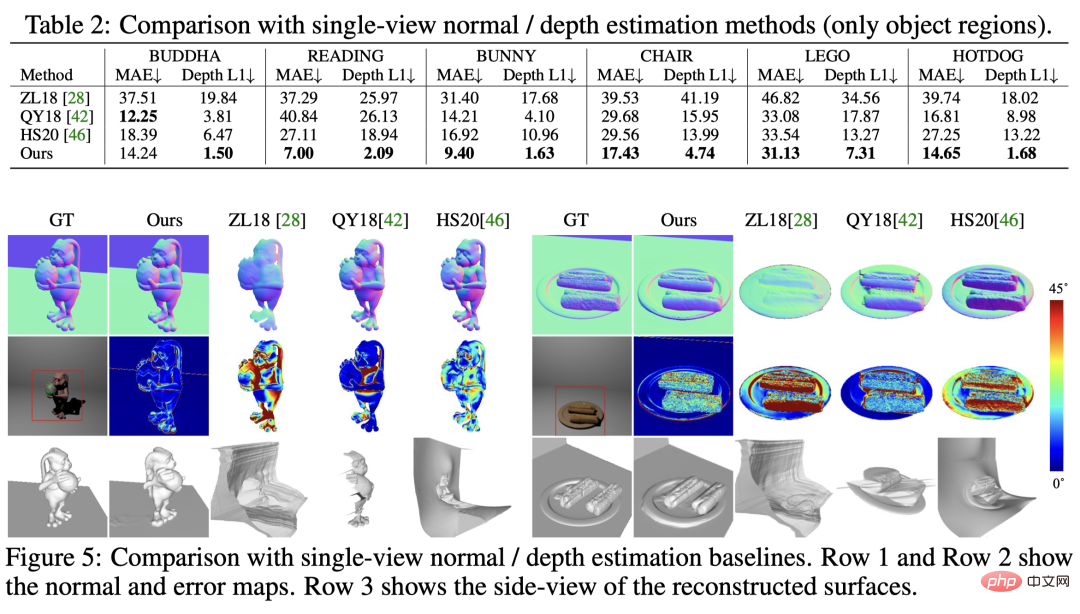

Comparison with single-view shape estimation methods

Compared with existing single-view normal/depth estimation methods, our method achieves the best results in both normal estimation and depth estimation, and can simultaneously reconstruct the visible and invisible areas of the scene.

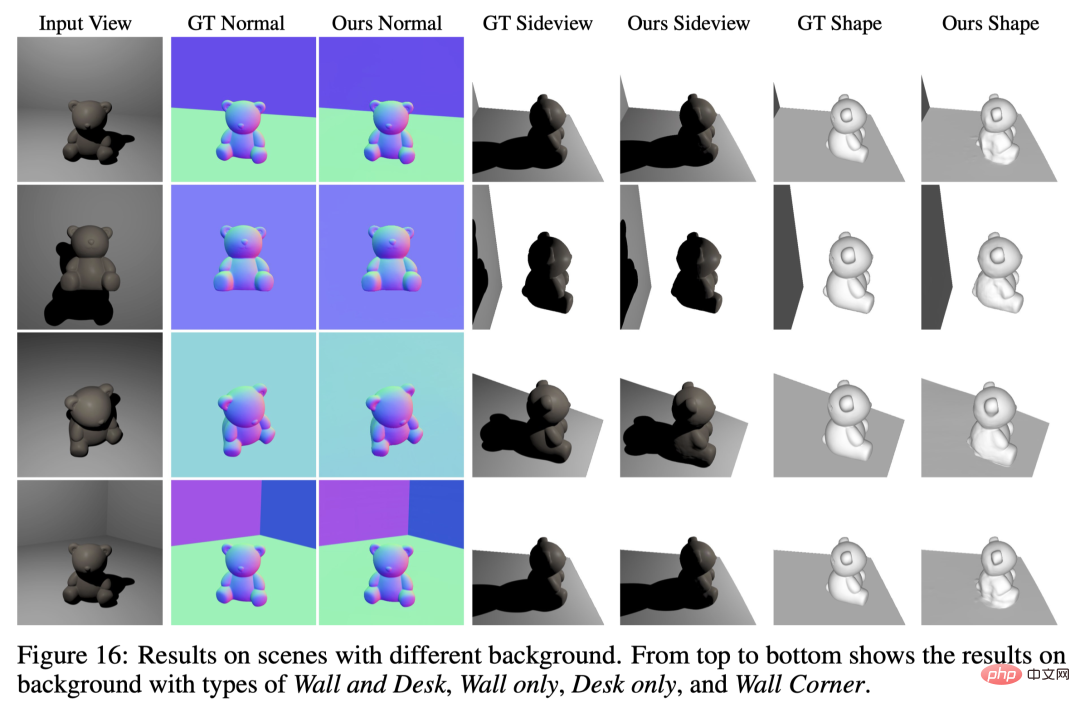

Scene reconstruction for different backgrounds

Our method is applicable to various scenes with different background conditions.

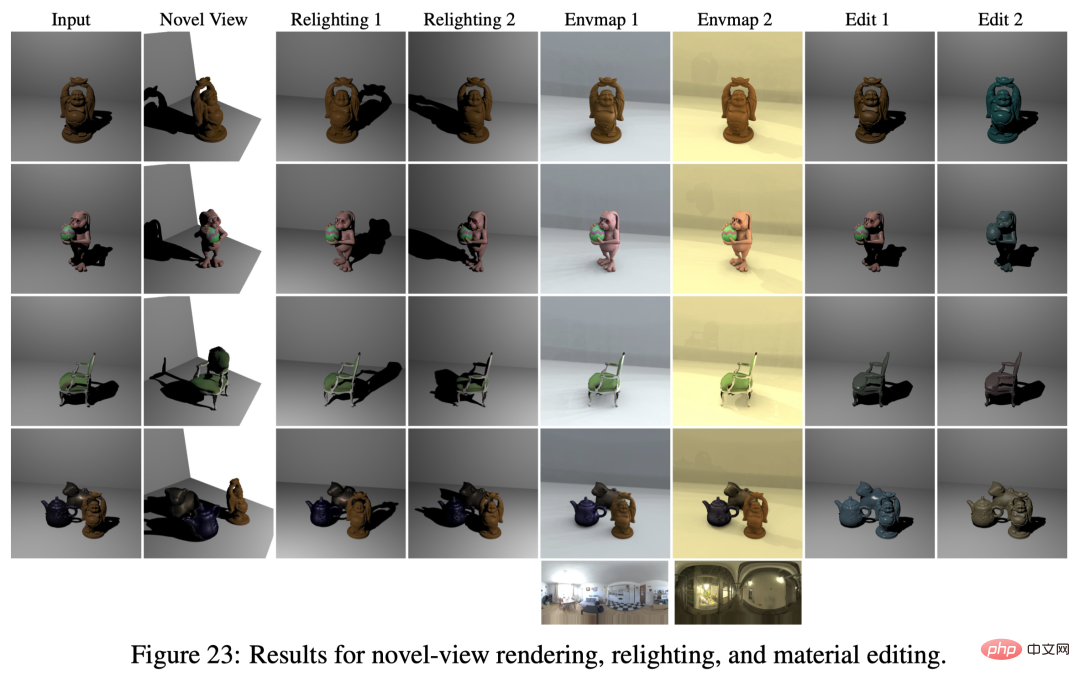

New view rendering, changing lighting and material editing

With scene modeling based on a neural reflectance field, we successfully decouple the geometry, material, and lighting of the scene, so the method can be applied to novel view rendering, relighting, and material editing.

Reconstruction of real shooting scenes

We captured three real scenes to explore the method's practicality. We fixed the camera position, used a mobile phone's flashlight as the point light source (with ambient lighting turned off), and moved the handheld flashlight randomly to capture images under different light positions. This setup does not require light source calibration: we apply SDPS-Net to get a rough estimate of the light direction, and initialize the light position from rough estimates of the camera-object and light-object relative distances. The light positions are then jointly optimized with the scene's geometry and BRDF during training. Even with this more casual capture setting (without light source calibration), our method still reconstructs the 3D scene geometry well.

Summary

- S3-NeRF optimizes a neural reflectance field using single-view images captured under multiple point lights to reconstruct 3D scene geometry and material information.

- By exploiting shading and shadow cues, S3-NeRF can effectively recover the geometry of both visible and invisible areas in the scene, reconstructing complete scene geometry and BRDF from a single viewpoint.

- Various experiments show that our method can reconstruct scenes with a variety of complex geometries and materials, and can cope with backgrounds of different geometries and materials as well as different numbers and distributions of lights.
