The 3D reconstruction of Jimmy Lin's face can be achieved with two A100s and a 2D CNN!

- 王林 (reposted)

- 2023-04-13 08:19:06

Three-dimensional (3D) reconstruction has long been a key research area in computer graphics and computer vision.

Simply put, 3D reconstruction recovers the 3D structure of a scene from 2D images.

It is said that after Jimmy Lin was in a car accident, his facial reconstruction plan used three-dimensional reconstruction.

Different technical routes for three-dimensional reconstruction are expected to be integrated

In fact, 3D reconstruction technology is already widely used in games, movies, surveying, positioning, navigation, autonomous driving, VR/AR, industrial manufacturing and consumer goods.

With advances in GPUs and distributed computing, and with depth cameras such as Microsoft's Kinect, Asus' XTion and Intel's RealSense gradually maturing on the hardware side, the cost of 3D reconstruction has been trending downward.

Operationally speaking, the 3D reconstruction process can be roughly divided into five steps.

The first step is to obtain the image.

Since 3D reconstruction is the inverse of the camera's imaging process, the first requirement is to capture 2D images of the 3D object with a camera.

This step cannot be ignored, because lighting conditions, camera geometric characteristics, etc. have a great impact on subsequent image processing.

The second step is camera calibration.

This step relates the images captured by the camera to the objects in space, so that those objects can later be recovered.

It is usually assumed that there is a linear relationship between the image captured by the camera and the object in the three-dimensional space. The process of solving the parameters of the linear relationship is called camera calibration.
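The linear relationship described above is commonly written as the pinhole camera model, in which a 3D point X maps to a pixel x through x ~ K [R | t] X in homogeneous coordinates. The snippet below is a minimal sketch of that projection. The intrinsic values, the camera pose and the helper name `project_points` are illustrative assumptions, not values from the article; calibration is the process of estimating K, R and t from real images.

```python
import numpy as np

def project_points(K, R, t, points_3d):
    """Project Nx3 world points to Nx2 pixel coordinates with a pinhole model."""
    P = K @ np.hstack([R, t.reshape(3, 1)])                        # 3x4 projection matrix
    homog = np.hstack([points_3d, np.ones((len(points_3d), 1))])   # Nx4 homogeneous points
    proj = homog @ P.T                                             # Nx3 homogeneous pixels
    return proj[:, :2] / proj[:, 2:3]                              # perspective divide

# Hypothetical intrinsics: focal length 500 px, principal point (320, 240).
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)      # camera axes aligned with the world axes
t = np.zeros(3)    # camera placed at the world origin

pixels = project_points(K, R, t, np.array([[0.0, 0.0, 2.0],
                                           [0.2, -0.1, 2.0]]))
print(pixels)
```

A point on the optical axis lands on the principal point, and moving the point sideways shifts its pixel by the focal length times the offset divided by the depth.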

The third step is feature extraction.

Features mainly include feature points, feature lines and regions.

In most cases, feature points are used as matching primitives. The form in which feature points are extracted is closely related to the matching strategy used.

Therefore, when extracting feature points, you need to first determine which matching method to use.

The fourth step is stereo matching.

Stereo matching refers to establishing a correspondence between an image pair based on the extracted features, that is, pairing up one to one the imaging points of the same physical point in space as it appears in two different images.
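One simple way to obtain such one-to-one correspondences from feature points is mutual nearest-neighbour matching of their descriptors. The sketch below assumes each feature is already described by a small vector; the function name `mutual_nearest_matches` and the toy descriptors are made up for illustration, and real systems typically add ratio tests or geometric verification on top.

```python
import numpy as np

def mutual_nearest_matches(desc_a, desc_b):
    """Return (i, j) pairs where desc_a[i] and desc_b[j] are each other's nearest neighbour."""
    # Pairwise squared Euclidean distances, shape (len(desc_a), len(desc_b)).
    diff = desc_a[:, None, :] - desc_b[None, :, :]
    dist = np.sum(diff ** 2, axis=2)
    nn_ab = dist.argmin(axis=1)   # best candidate in B for every descriptor in A
    nn_ba = dist.argmin(axis=0)   # best candidate in A for every descriptor in B
    # Keep a pair only if the choice agrees in both directions (one-to-one).
    return [(i, int(j)) for i, j in enumerate(nn_ab) if nn_ba[j] == i]

# Toy descriptors: B is a shuffled, slightly perturbed copy of A.
desc_a = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
desc_b = np.array([[0.1, 0.9], [0.9, 1.1], [1.0, 0.1]])

matches = mutual_nearest_matches(desc_a, desc_b)
print(matches)
```

The mutual check is what enforces the one-to-one property: a feature in one image can never be paired with two features in the other.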

The fifth step is three-dimensional reconstruction.

With relatively accurate matching results, combined with the internal and external parameters of the camera calibration, the three-dimensional scene information can be restored.
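As an illustration of how a matched point pair and the calibrated camera parameters combine, the sketch below uses linear (DLT) triangulation, one standard technique for recovering a 3D point from two views. The camera matrices, the 10 cm baseline and the helper names `triangulate` and `project` are assumptions chosen for the example.

```python
import numpy as np

def triangulate(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one 3D point from two 3x4 projection matrices."""
    # Each view contributes two linear constraints on the homogeneous point X.
    A = np.stack([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]                 # right singular vector of the smallest singular value
    return X[:3] / X[3]        # back to inhomogeneous coordinates

def project(P, X):
    """Project a 3D point with a 3x4 projection matrix."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

# Hypothetical calibrated pair: identical intrinsics, second camera 10 cm to the right.
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-0.1], [0.0], [0.0]])])

X_true = np.array([0.2, -0.1, 2.0])
X_est = triangulate(P1, P2, project(P1, X_true), project(P2, X_true))
print(X_est)
```

With noise-free matches the point is recovered up to numerical precision; with real, noisy matches the SVD gives a least-squares estimate, which is why matching accuracy matters so much for the final result.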

These five steps are interlocking. Only when each link is done with high precision and small errors can a relatively accurate stereoscopic vision system be designed.

In terms of algorithms, 3D reconstruction can be roughly divided into two categories. One is the 3D reconstruction algorithm based on traditional multi-view geometry.

The other is a three-dimensional reconstruction algorithm based on deep learning.

Currently, because CNNs have huge advantages in image feature matching, more and more researchers are turning their attention to 3D reconstruction based on deep learning.

However, this method is mostly a supervised learning method and is highly dependent on the data set.

The collection and labeling of data sets have always been a source of problems for supervised learning. Therefore, three-dimensional reconstruction based on deep learning is mostly studied in the direction of reconstruction of smaller objects.

In addition, the three-dimensional reconstruction based on deep learning has high fidelity and has better performance in terms of accuracy.

But training the model takes a lot of time, and the 3D convolutional layers used for 3D reconstruction are very expensive.

Therefore, some researchers began to re-examine the traditional three-dimensional reconstruction method.

Although the traditional three-dimensional reconstruction method has shortcomings in performance, the technology is relatively mature.

Some integration of the two approaches may therefore produce better results.

3D reconstruction without 3D convolutional layers

Researchers from institutions including the University of London, the University of Oxford, Google and Niantic (an AR-focused unicorn spun out of Google) have explored a 3D reconstruction method that does not require 3D convolution.

They propose a simple state-of-the-art multi-view depth estimator.

This multi-view depth estimator has two breakthroughs.

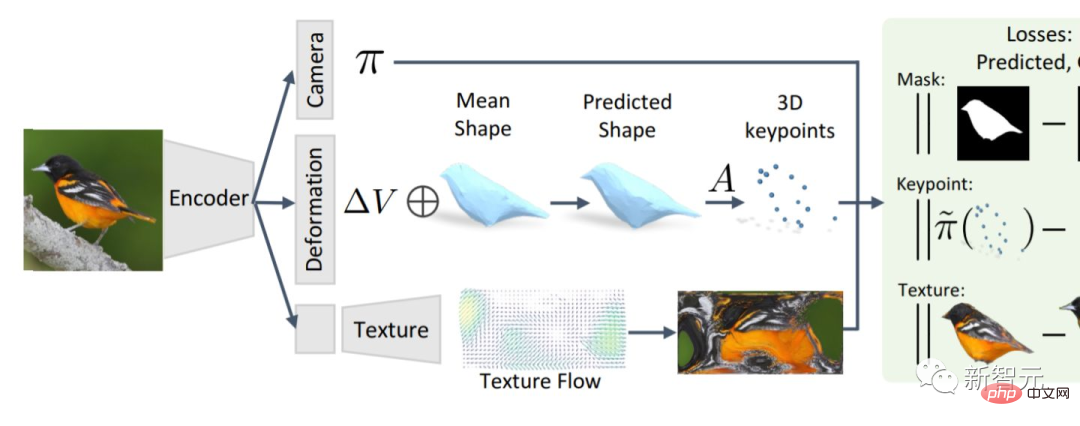

The first is a carefully designed 2D CNN that makes use of strong image priors, together with a plane-sweep feature volume and geometric losses;

The second is the ability to integrate keyframes and geometric metadata into cost volumes, enabling informed depth plane scoring.

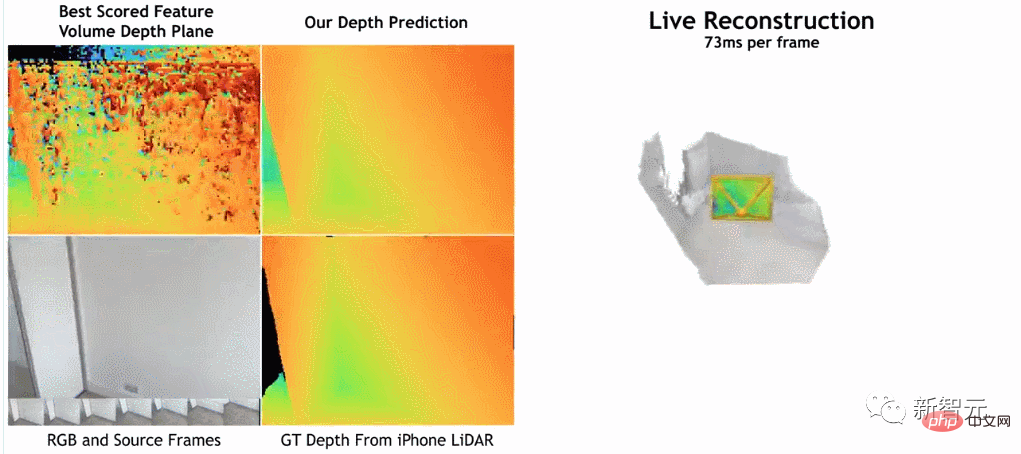

According to the researchers, their method has a clear lead over current state-of-the-art methods in depth estimation, and is close to or better than them for 3D reconstruction on ScanNet and 7-Scenes, while still allowing online, real-time, low-memory reconstruction.

Moreover, the reconstruction speed is very fast, only taking about 73ms per frame.

The researchers believe this makes accurate reconstruction possible through fast depth fusion.

According to the researchers, their method uses an image encoder to extract matching features from a reference image and the source images, feeds those features into a cost volume, and then processes the output of the cost volume with a 2D convolutional encoder/decoder network.
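To make the cost-volume idea concrete, here is a heavily simplified plane-sweep sketch in NumPy. It is not the authors' implementation. Random unit-norm vectors stand in for the learned features, warping uses nearest-neighbour sampling, the matching score is a plain dot product with no keyframe or geometric metadata, and a per-pixel argmax stands in for the 2D encoder/decoder. The function name `plane_sweep_cost_volume` and all camera values are assumptions.

```python
import numpy as np

def plane_sweep_cost_volume(feat_ref, feat_src, K, R, t, depths):
    """Build a (D, H, W) matching-score volume by sweeping fronto-parallel depth planes.

    feat_ref, feat_src: (C, H, W) feature maps. R, t map reference-camera
    coordinates into the source camera. depths: candidate depth values.
    """
    C, H, W = feat_ref.shape
    v, u = np.mgrid[0:H, 0:W]
    pix = np.stack([u.ravel(), v.ravel(), np.ones(H * W)])   # 3 x HW homogeneous pixels
    rays = np.linalg.inv(K) @ pix                            # back-projected viewing rays
    ref_flat = feat_ref.reshape(C, -1)
    volume = np.zeros((len(depths), H, W))
    for i, d in enumerate(depths):
        pts_src = R @ (rays * d) + t.reshape(3, 1)           # plane points in the source frame
        proj = K @ pts_src
        us = np.rint(proj[0] / proj[2]).astype(int)          # nearest-neighbour sampling
        vs = np.rint(proj[1] / proj[2]).astype(int)
        valid = (proj[2] > 0) & (us >= 0) & (us < W) & (vs >= 0) & (vs < H)
        warped = np.zeros((C, H * W))
        warped[:, valid] = feat_src[:, vs[valid], us[valid]]
        volume[i] = np.sum(ref_flat * warped, axis=0).reshape(H, W)  # dot-product score
    return volume

# Synthetic scene: a textured wall at depth 2.0 seen by two cameras 0.5 units apart.
rng = np.random.default_rng(0)
C, H, W = 8, 6, 16
feat_ref = rng.normal(size=(C, H, W))
feat_ref /= np.linalg.norm(feat_ref, axis=0, keepdims=True)  # unit-norm stand-in features
K = np.array([[8.0, 0.0, 8.0], [0.0, 8.0, 3.0], [0.0, 0.0, 1.0]])
R, t = np.eye(3), np.array([-0.5, 0.0, 0.0])
# At depth 2.0 the disparity is f * 0.5 / 2.0 = 2 px, so the source view is a 2 px shift.
feat_src = np.roll(feat_ref, -2, axis=2)

depths = np.array([1.0, 2.0, 4.0])
volume = plane_sweep_cost_volume(feat_ref, feat_src, K, R, t, depths)
depth_map = depths[volume.argmax(axis=0)]                    # stand-in for the 2D network
print(depth_map[0])
```

In this toy setup every pixel that is visible in both views picks the correct plane. In the method described above, a learned 2D network takes the place of the argmax and turns the scores into a depth map.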

The method was implemented in PyTorch and uses ResNet18 for matching feature extraction. Training ran on two 40GB A100 GPUs and was completed in 36 hours.

In addition, although the model does not use 3D convolutional layers, it outperforms the baseline models on depth prediction metrics.

This shows that a carefully designed and trained 2D network is sufficient for high-quality depth estimation.

Interested readers can read the original text of the paper:

https://nianticlabs.github.io/simplerecon/resources/SimpleRecon.pdf

Be warned, though, that the paper assumes a specialist background, and some of its details are easy to miss.

We might as well take a look at what overseas readers noticed in the paper.

A netizen named "stickshiftplease" said, "Although the inference time on the A100 is about 70 milliseconds, this can be shortened through various techniques, and the memory requirement does not have to be 40GB; the smallest model runs in 2.6GB of memory."

Another netizen named "IrreverentHippie" pointed out, "Please note that this research is still based on LiDAR depth sensor sampling. That is why this method achieves such good quality and accuracy."

Another netizen named "nickthorpie" made a longer comment. He said, "The advantages and disadvantages of ToF cameras are well documented. ToF solves various problems that plague raw image processing. Its two major issues are scalability and detail. ToF always has difficulty identifying small details such as table edges or thin poles, and these are crucial for autonomous or semi-autonomous applications.

In addition, since ToF is an active sensor, when multiple sensors are used together, such as at a crowded intersection or in a self-built warehouse, the picture quality will quickly degrade.

Obviously, the more data you collect on a scene, the more accurate the description you can create. Many researchers prefer to study raw image data because it is more flexible."
