
AI automatically generates prompts that rival human-written ones. Netizen: prompt engineers were only just hired, and now they may be replaced.


- 2023-04-12 22:40:01

Thanks to growing model scale and the emergence of attention-based architectures, language models now show unprecedented versatility. These large language models (LLMs) have demonstrated remarkable capabilities across a wide range of tasks, including in zero-shot and few-shot settings.

However, this versatility raises a question of control: how can we make an LLM do what we want?

To answer this question and steer LLMs toward the desired behavior, researchers have tried a range of approaches, such as fine-tuning the model, in-context learning, and various forms of prompt generation. Prompt-based methods include fine-tuning soft prompts and natural language prompt engineering. The latter has attracted particular interest because it offers a natural interface for humans to interact with machines.

However, simple prompts do not always produce the desired results. For example, when generating images of pandas, we do not know what effect adding an adjective such as "cute" or a phrase such as "eating bamboo" will have on the output.

As a result, human users must try many different prompts before the model behaves as desired. LLM execution can be viewed as a black box: although LLMs can execute a wide range of natural language programs, the way they process these programs may be unintuitive and hard for humans to understand, and the quality of an instruction can only be measured by running it on a downstream task.

This raises a natural question: can large language models write prompts for themselves? According to this research, they not only can, but can do so at a human level.

To reduce the manual work of creating and validating effective instructions, researchers from the University of Toronto, the University of Waterloo and other institutions proposed a new algorithm that uses LLMs to automatically generate and select instructions: APE (Automatic Prompt Engineer). They frame the task as natural language program synthesis and treat it as a black-box optimization problem in which LLMs are used both to generate and to search over feasible candidate solutions.

- Paper address: https://arxiv.org/pdf/2211.01910.pdf

- Paper home page: https://sites.google.com/view/automatic-prompt-engineer

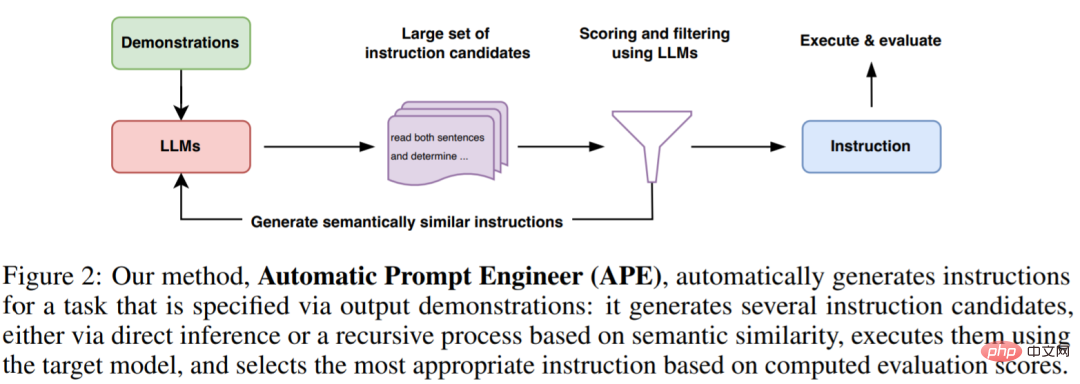

The researchers build on three capabilities of LLMs. First, an LLM is used as an inference model to generate instruction candidates from a small set of demonstrations given as input-output pairs. Next, each instruction is scored under the LLM, and these scores guide the search process. Finally, they propose an iterative Monte Carlo search method in which the LLM improves the best candidate instructions by proposing semantically similar variants.

Intuitively, APE asks an LLM to generate a set of candidate instructions from the demonstrations and then evaluates which of those instructions are most promising.

The paper's contributions are as follows:

- The researchers frame instruction generation as natural language program synthesis, formulate it as a black-box optimization problem guided by LLMs, and propose an iterative Monte Carlo search method to approximate the solution;

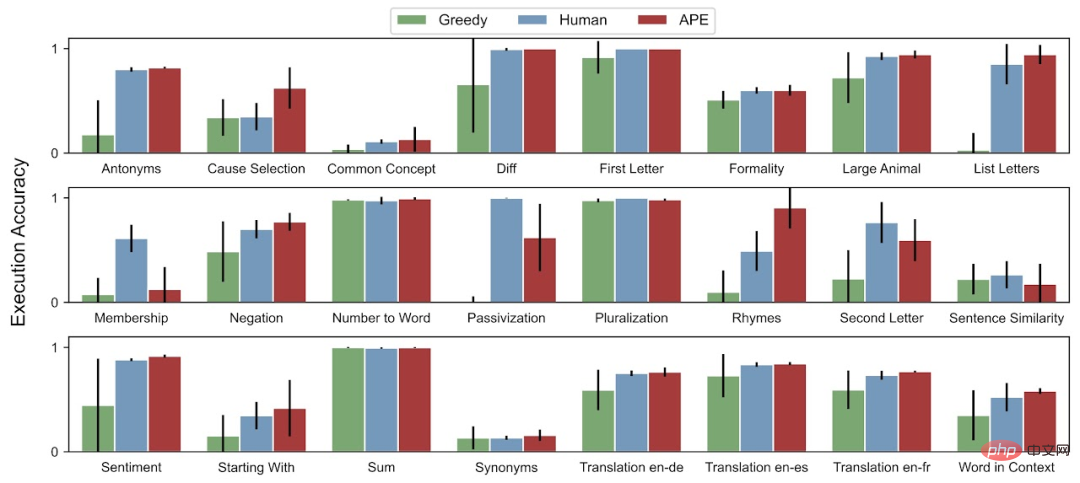

- Instructions generated by APE outperform or match those written by human annotators on 19 of 24 tasks.

After seeing this study, netizens could not help sighing that newly hired prompt engineers may be replaced by AI within a few months. In other words, this research could take over the work of human prompt engineers.

"This research does its best to automate prompt engineering so that ML researchers can get back to real algorithmic problems" (with two crying emojis attached).

Some people lamented: LLMs really are the mainstay of proto-AGI.

APE uses LLMs in both of its key components: proposal and scoring.

As shown in Figure 2 and Algorithm 1, APE first proposes several candidate prompts, then filters and refines the candidate set according to the chosen score function, and finally selects the instruction with the highest score.
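A minimal Python sketch of the propose, filter and select loop follows. It assumes the candidates have already been proposed by an LLM and stands in for the scoring model with a caller-supplied `score` function; the names `ape_select`, `rounds`, `subset_size` and `keep_fraction` are hypothetical, and the cheap filtering on random subsets is our reading of the paper's Algorithm 1, not its exact implementation.

```python
import random
from typing import Callable, List, Sequence, Tuple

Demo = Tuple[str, str]  # one (input, output) demonstration


def ape_select(
    candidates: List[str],
    demos: Sequence[Demo],
    score: Callable[[str, Sequence[Demo]], float],  # hypothetical scorer
    rounds: int = 3,
    subset_size: int = 2,
    keep_fraction: float = 0.5,
    seed: int = 0,
) -> str:
    """Filter LLM-proposed candidates, then return the best-scoring one."""
    rng = random.Random(seed)
    pool = list(candidates)
    for _ in range(rounds):
        if len(pool) <= 1:
            break
        # Cheap filtering: score every candidate on a random subset of demos.
        subset = rng.sample(list(demos), min(subset_size, len(demos)))
        ranked = sorted(pool, key=lambda c: score(c, subset), reverse=True)
        # Keep only the top fraction of candidates (at least one).
        k = max(1, int(len(ranked) * keep_fraction))
        pool = ranked[:k]
    # Final choice: the survivor with the best score on all demonstrations.
    return max(pool, key=lambda c: score(c, demos))
```

Scoring on a small random subset first confines the more expensive full evaluation to the few surviving candidates; the final selection still uses every demonstration.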

The following figure shows how APE executes: it generates several candidate prompts either through direct inference or through a recursive process based on semantic similarity, evaluates their performance, and iteratively proposes new prompts.
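The iterative search around the current best candidates can be sketched as below. `paraphrase` is a hypothetical stand-in for an LLM prompted to produce semantically similar variants of an instruction, and `score` for whichever score function is in use; the iteration count, `top_k` and number of variants are illustrative values, not settings from the paper.

```python
from typing import Callable, List


def iterative_monte_carlo_search(
    seeds: List[str],
    score: Callable[[str], float],
    paraphrase: Callable[[str, int], List[str]],  # hypothetical LLM call
    iterations: int = 3,
    top_k: int = 2,
    variants_per_candidate: int = 3,
) -> str:
    """Repeatedly resample variants around the highest-scoring instructions."""
    pool = list(dict.fromkeys(seeds))  # deduplicate while keeping order
    best = max(pool, key=score)
    for _ in range(iterations):
        # Keep the highest-scoring candidates as "elites".
        elites = sorted(pool, key=score, reverse=True)[:top_k]
        # Ask the paraphrasing model for nearby variants of each elite.
        variants = [v for e in elites for v in paraphrase(e, variants_per_candidate)]
        pool = list(dict.fromkeys(elites + variants))
        # Track the best instruction seen so far, so the result never gets worse.
        best = max([best] + pool, key=score)
    return best
```

Keeping the elites in the pool alongside their variants means a round that produces only worse paraphrases does not lose the current best candidate.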

## Initial proposal distribution

Because the search space is infinitely large, finding the correct instruction is extremely difficult, which has historically made natural language program synthesis intractable. The researchers therefore use a pre-trained LLM to propose candidate solutions that guide the search process.

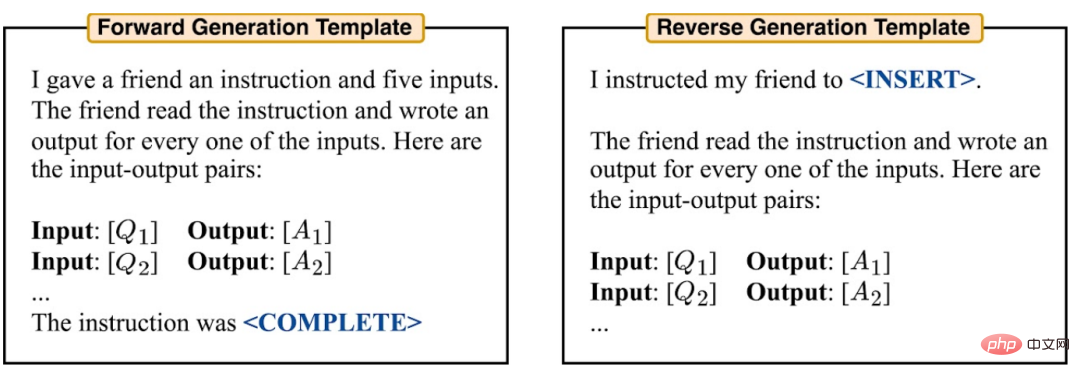

They consider two methods for generating high-quality candidates. The first is forward mode generation, in which the demonstrations are placed in a template and a standard left-to-right LLM continues the text with an instruction. The second is reverse mode generation, which uses LLMs with infilling capabilities (e.g., T5, GLM, InsertGPT) to infer the missing instruction.
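To make the two modes concrete, here is a sketch of how the generation prompts might be assembled. The wording is modeled loosely on the templates shown in the paper and should be treated as an assumption, and `[INSERT]` is a hypothetical placeholder: real infilling models use their own mask tokens (T5, for instance, uses sentinel tokens such as `<extra_id_0>`).

```python
from typing import Sequence, Tuple

Demo = Tuple[str, str]  # (input, output) demonstration


def format_demos(demos: Sequence[Demo]) -> str:
    """Render demonstrations as Input/Output blocks."""
    return "\n\n".join(f"Input: {q}\nOutput: {a}" for q, a in demos)


def forward_prompt(demos: Sequence[Demo]) -> str:
    """Forward mode: the instruction goes at the end, so a left-to-right
    LLM can propose it simply by continuing the text."""
    return (
        "I gave a friend an instruction and some inputs. The friend read the "
        "instruction and wrote an output for every one of the inputs. "
        "Here are the input-output pairs:\n\n"
        f"{format_demos(demos)}\n\n"
        "The instruction was"
    )


def reverse_prompt(demos: Sequence[Demo], blank: str = "[INSERT]") -> str:
    """Reverse mode: the instruction is a blank in the middle of the text,
    to be filled in by a model with infilling capability."""
    return (
        f"I instructed my friend to {blank}. The friend read the instruction "
        "and wrote an output for every one of the inputs. "
        "Here are the input-output pairs:\n\n"
        f"{format_demos(demos)}"
    )
```

In forward mode, whatever the model writes after "The instruction was" becomes a candidate; in reverse mode, the infilled span does.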

## Score function

To cast the problem as black-box optimization, the researchers choose a score function that measures how well the data generated by the model aligns with the dataset. For the instruction induction experiments, they consider two candidate score functions. For the TruthfulQA experiments, they mainly rely on the automated metrics proposed by Lin et al., which play a role similar to execution accuracy.
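As we understand the paper, the two score functions considered for instruction induction are execution accuracy and the log probability of the desired answer. A minimal sketch of both follows; `generate` and `token_logprobs` are hypothetical callables standing in for a real LLM API, and the `Input:`/`Output:` prompt layout in `build_prompt` is an assumption.

```python
from typing import Callable, List, Sequence, Tuple

Demo = Tuple[str, str]  # (input, expected output)


def build_prompt(instruction: str, query: str) -> str:
    """Prepend the candidate instruction to one input (layout is an assumption)."""
    return f"{instruction}\n\nInput: {query}\nOutput:"


def execution_accuracy(
    instruction: str,
    demos: Sequence[Demo],
    generate: Callable[[str], str],  # hypothetical: prompt -> model completion
) -> float:
    """Fraction of demos whose generated output exactly matches the reference (0-1 loss)."""
    hits = sum(generate(build_prompt(instruction, q)).strip() == a for q, a in demos)
    return hits / len(demos)


def mean_log_prob(
    instruction: str,
    demos: Sequence[Demo],
    # hypothetical: (prompt, answer) -> per-token log-probabilities of answer
    token_logprobs: Callable[[str, str], List[float]],
) -> float:
    """Average log-likelihood of the reference answers given the instruction."""
    totals = [sum(token_logprobs(build_prompt(instruction, q), a)) for q, a in demos]
    return sum(totals) / len(totals)
```

Execution accuracy only rewards exact matches, while the log-probability score also gives partial credit to instructions that make the correct answer more likely without it being the model's top output.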

In each case, the researchers use formula (1) to evaluate the quality of a generated instruction as its expected score on the held-out test set D_test.
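For reference, the objective behind formula (1) can be written as follows, based on our reading of the paper: ρ is a candidate instruction, (Q, A) is an input-output pair, and f(ρ, Q, A) is the per-sample score. The second line, with the hypothetical notation \hat{f}, is the empirical estimate of that expectation over D_test.

$$
\begin{aligned}
\rho^{\star} &= \arg\max_{\rho} f(\rho) = \arg\max_{\rho} \, \mathbb{E}_{(Q, A)}\big[ f(\rho, Q, A) \big], \\
\hat{f}(\rho) &= \frac{1}{|\mathcal{D}_{\text{test}}|} \sum_{(Q, A) \in \mathcal{D}_{\text{test}}} f(\rho, Q, A).
\end{aligned}
$$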

## Experiments

The researchers studied how APE steers LLMs toward the desired behavior from three angles: zero-shot performance, few-shot in-context learning performance, and truthfulness.

The researchers evaluated zero-shot and few-shot in-context learning on the 24 instruction induction tasks proposed by Honovich et al. These tasks cover many aspects of language understanding, from simple phrase structure to similarity and causality recognition. To understand how APE-generated instructions steer an LLM toward different styles of answers, the paper also applies APE to TruthfulQA, a benchmark that tests whether language models answer questions truthfully. In zero-shot test accuracy, APE reached human-level performance on 19 of the 24 tasks.

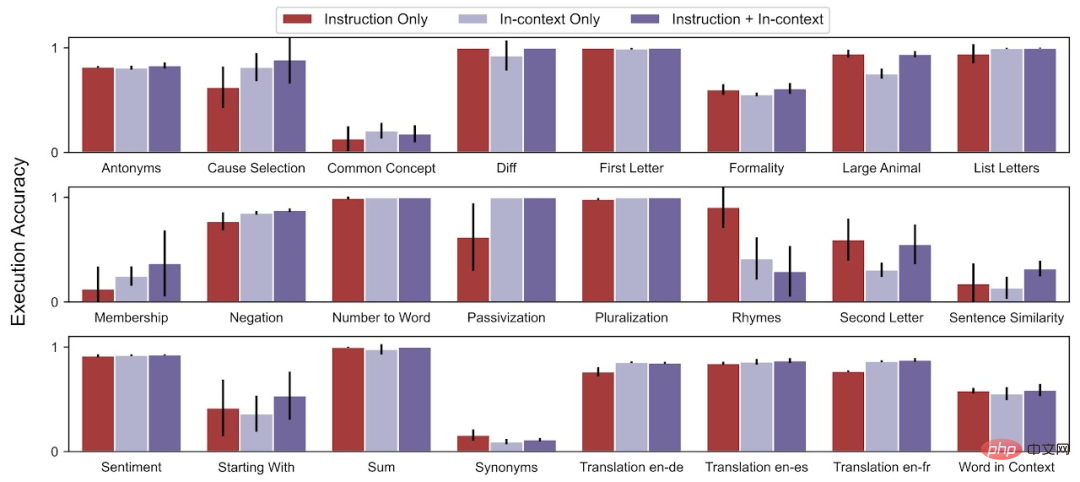

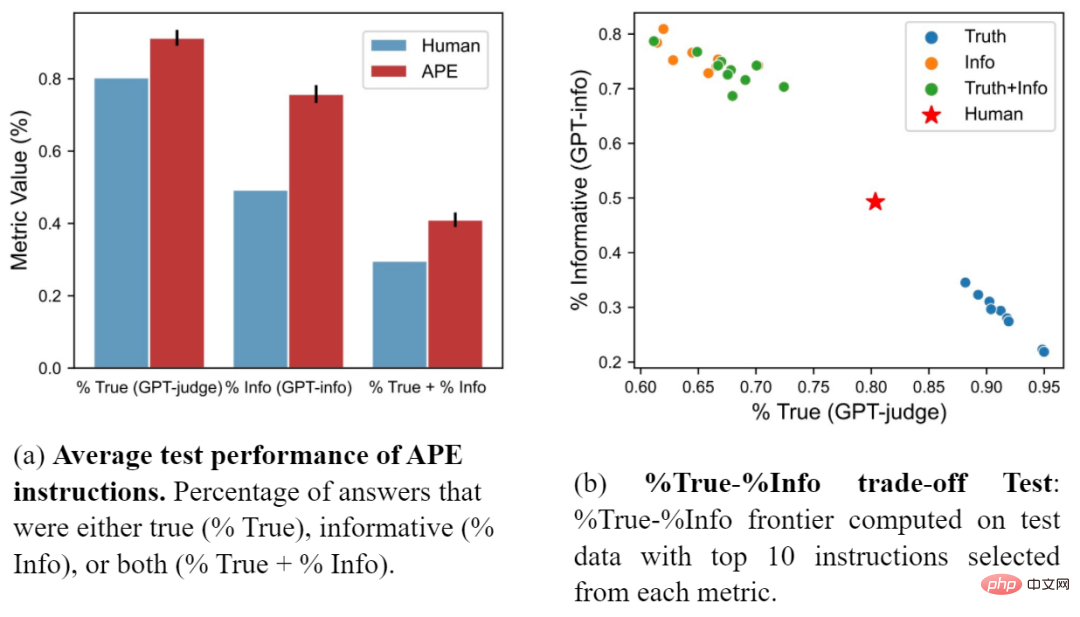

In few-shot in-context test accuracy, APE improves few-shot in-context learning performance on 21 of the 24 tasks. The researchers also compared APE prompts with the human-written prompts proposed by Lin et al. Figure (a) shows that APE instructions outperform the human prompts on all three metrics. Figure (b) shows the trade-off between truthfulness and informativeness. Please refer to the original paper for more details.
