
Learning from ChatGPT: what happens when human feedback is introduced into AI painting?

Recently, deep generative models have achieved remarkable success in generating high-quality images from text prompts, in part by scaling to large web datasets such as LAION. However, significant challenges remain that prevent large text-to-image models from generating images that are fully aligned with their text prompts. For example, current text-to-image models often fail to produce reliable visual text (text rendered inside the image) and struggle with compositional image generation.

In language modeling, learning from human feedback has become a powerful approach for “aligning model behavior with human intent.” Such methods first learn a reward function that reflects what humans care about in the task, using human feedback on model outputs, and then optimize the language model against the learned reward function with a reinforcement learning algorithm such as proximal policy optimization (PPO). This reinforcement learning from human feedback (RLHF) framework has successfully aligned large language models (such as GPT-3) with sophisticated human quality assessments.

Recently, inspired by the success of RLHF in language modeling, researchers from Google Research and UC Berkeley proposed a fine-tuning method that uses human feedback to align text-to-image models.

Paper address: https://arxiv.org/pdf/2302.12192v1.pdf

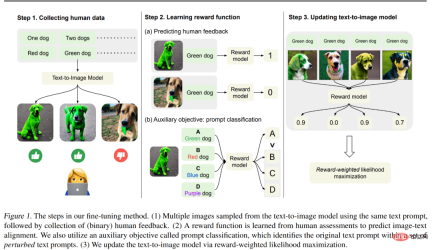

The paper's method is shown in Figure 1 and consists of three main steps.

Step 1: Generate diverse images from a set of text prompts "designed to test the alignment of text-to-image model output." Specifically, the prompts target cases where the pretrained model is more error-prone: generating objects with a specific color, count, and background. Binary human feedback on the model's outputs is then collected.

Step 2: Using the human-labeled dataset, train a reward function to predict human feedback given an image and a text prompt. The researchers propose an auxiliary task, identifying the original text prompt among a set of perturbed text prompts, to use human feedback more effectively for reward learning. This technique improves the generalization of the reward function to unseen images and text prompts.

Step 3: Update the text-to-image model via reward-weighted likelihood maximization to better align it with human feedback. Unlike previous work that used reinforcement learning for optimization, the researchers update the model with semi-supervised learning, using the learned reward function to measure the quality of the model's outputs.

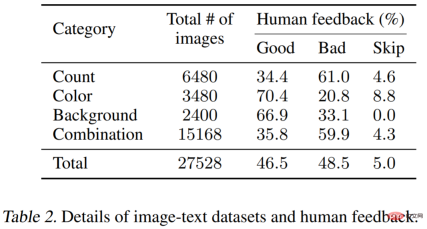

The researchers fine-tuned the Stable Diffusion model using 27,000 image-text pairs with human feedback. The results show that the fine-tuned model achieves significant improvements in generating objects with specific colors, counts, and backgrounds, with up to a 47% improvement in image-text alignment at a slight cost in image fidelity.

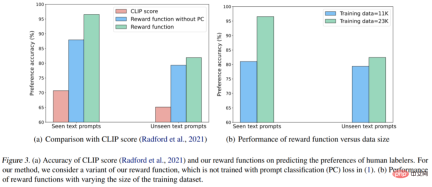

Additionally, compositional generation improved: the fine-tuned model better generates unseen objects given unseen combinations of color, count, and background prompts. The researchers also observed that the learned reward function matched human assessments of alignment better than CLIP scores on test text prompts.

However, Kimin Lee, the first author of the paper, also said that the results do not address all the failure modes of existing text-to-image models, and many challenges remain. The authors hope this work will highlight the potential of learning from human feedback for aligning text-to-image models.

Method introduction

To align generated images with their text prompts, the study fine-tunes the pre-trained model in several stages, as shown in Figure 1 above. First, images are generated from a set of text prompts designed to test various capabilities of the text-to-image model. Human raters then provide binary feedback on the generated images. Next, a reward model is trained to predict human feedback, taking the text prompt and image as input. Finally, the text-to-image model is fine-tuned with a reward-weighted log-likelihood objective to improve text-image alignment.

Human Data Collection

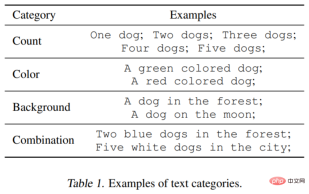

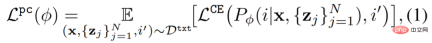

To test the capabilities of the text-to-image model, the study considered three categories of text prompts: specified count, color, and background. For each category, the study generated prompts by pairing each word or phrase from that category with an object, such as green (color) with a dog. Additionally, the study considered combinations of the three categories (e.g., two dogs dyed green in a city). Table 1 gives a breakdown of the dataset. Each prompt is used to generate 60 images, mainly with Stable Diffusion v1.5.
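The prompt construction described above can be sketched in a few lines. This is only an illustration: the word lists, the helper names, and the naive pluralization are assumptions, not the vocabulary or code used in the study.

```python
from itertools import product
from typing import List

# Hypothetical vocabularies; the study's actual word lists are not given here.
COUNTS = ["one", "two", "three"]
COLORS = ["green", "red"]
BACKGROUNDS = ["in a city", "in a forest"]
OBJECTS = ["dog", "cat"]


def pluralize(count: str, noun: str) -> str:
    """Naive pluralization, sufficient for the toy nouns above."""
    return noun if count == "one" else noun + "s"


def single_category_prompts() -> List[str]:
    """Pair each word or phrase of a single category with each object."""
    prompts = []
    for obj in OBJECTS:
        prompts += [f"{count} {pluralize(count, obj)}" for count in COUNTS]
        prompts += [f"a {color} {obj}" for color in COLORS]
        prompts += [f"a {obj} {background}" for background in BACKGROUNDS]
    return prompts


def combined_prompts() -> List[str]:
    """Combine all three categories into one prompt per combination."""
    return [
        f"{count} {color} {pluralize(count, obj)} {background}"
        for count, color, background, obj
        in product(COUNTS, COLORS, BACKGROUNDS, OBJECTS)
    ]


if __name__ == "__main__":
    for prompt in single_category_prompts()[:3] + combined_prompts()[:3]:
        print(prompt)
```

Each resulting prompt would then be passed to the text-to-image model several times to produce the images that labelers rate.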

Human Feedback

Next, human feedback is collected on the generated images. Three images generated from the same prompt are presented to the labelers, who are asked to judge whether each image is consistent with the prompt, rating it as good or bad. Since this task is relatively simple, binary feedback suffices.

Reward Learning

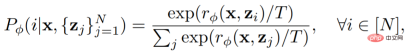

To better evaluate image-text alignment, this study uses a reward function that maps the CLIP embeddings of an image x and a text prompt z to a scalar value. It is then used to predict human feedback y ∈ {0, 1} (1 = good, 0 = bad).
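As a rough illustration of what "mapping embeddings to a scalar" means, here is a minimal sketch that assumes a single linear layer with a sigmoid output. The head architecture, the `reward` function name, and the random vectors standing in for real CLIP embeddings are all assumptions made for the example, not details taken from the paper.

```python
import math
import random
from typing import List


def reward(image_emb: List[float], text_emb: List[float],
           weights: List[float], bias: float) -> float:
    """Map a concatenated (image, text) embedding pair to a scalar in (0, 1)."""
    features = image_emb + text_emb
    if len(weights) != len(features):
        raise ValueError("weights must match the concatenated embedding size")
    logit = sum(w * f for w, f in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-logit))


# Random vectors stand in for real CLIP embeddings (assumption: dimension 4 each).
rng = random.Random(0)
image_emb = [rng.uniform(-1, 1) for _ in range(4)]
text_emb = [rng.uniform(-1, 1) for _ in range(4)]
weights = [rng.uniform(-1, 1) for _ in range(8)]

score = reward(image_emb, text_emb, weights, bias=0.0)
print(f"predicted probability that the image is 'good': {score:.3f}")
```

A score near 1 would correspond to the label "good" and a score near 0 to "bad"; in practice the weights would be learned from the human feedback data rather than drawn at random.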

Formally, given the human feedback dataset D^human = {(x, z, y)}, the reward function is trained by minimizing the mean squared error (MSE) between its prediction and the label y.
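Written out from the definitions above, and using r_φ(x, z) as an assumed symbol for the reward function with parameters φ, the MSE objective takes roughly the following form (whether the squared errors are summed or averaged is a normalization choice):

```latex
\mathcal{L}^{\mathrm{MSE}}(\phi)
  = \sum_{(x, z, y) \in \mathcal{D}^{\mathrm{human}}}
    \bigl( y - r_\phi(x, z) \bigr)^{2}
```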

Previous studies have shown that data augmentation can significantly improve data efficiency and model learning performance. To make effective use of the feedback dataset, this study designs a simple data augmentation scheme and an auxiliary loss for reward learning. The augmented (perturbed) prompts are used in an auxiliary task: a prompt classifier built on the reward function must identify the original prompt among them, and the auxiliary loss penalizes the reward model when it fails to do so.
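One plausible way to build such a prompt classifier is a softmax over the rewards of the candidate prompts, trained with cross-entropy against the index of the original prompt. The sketch below assumes exactly that; the temperature parameter, the function names, and the example reward values are illustrative assumptions rather than the paper's definitions.

```python
import math
from typing import List


def prompt_classifier(rewards: List[float], temperature: float = 1.0) -> List[float]:
    """Softmax over the rewards of N candidate prompts for a single image."""
    scaled = [r / temperature for r in rewards]
    largest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def auxiliary_loss(rewards: List[float], original_index: int = 0,
                   temperature: float = 1.0) -> float:
    """Cross-entropy of the classifier against the original prompt's index."""
    probs = prompt_classifier(rewards, temperature)
    return -math.log(probs[original_index])


# Hypothetical rewards for one image scored against the original prompt
# ("a green dog") and two perturbed prompts ("a red dog", "a green cat").
rewards = [0.9, 0.2, 0.1]
print(prompt_classifier(rewards, temperature=0.1))
print(auxiliary_loss(rewards, original_index=0, temperature=0.1))
```

The loss shrinks as the reward for the original prompt rises above the rewards for the perturbed prompts, which pushes the reward function to be sensitive to the details that the perturbations change.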

The last step is to update the text-to-image model by fine-tuning it with the reward-weighted log-likelihood objective. Because the diversity of the model-generated dataset is limited, this can lead to overfitting. To mitigate this, the study also minimizes the pre-training loss alongside the reward-weighted term.
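Putting the two terms together, the fine-tuning objective can be sketched as below. The notation is assumed for illustration: p_θ(x | z) is the text-to-image model with parameters θ, r_φ is the learned reward function, D^model is the model-generated dataset, D^pre is the pre-training dataset, and β is a weight on the pre-training term.

```latex
\mathcal{L}(\theta)
  = \mathbb{E}_{(x, z) \in \mathcal{D}^{\mathrm{model}}}
      \bigl[ -\, r_\phi(x, z)\, \log p_\theta(x \mid z) \bigr]
  + \beta\, \mathbb{E}_{(x, z) \in \mathcal{D}^{\mathrm{pre}}}
      \bigl[ -\log p_\theta(x \mid z) \bigr]
```

The first term raises the likelihood of generated images in proportion to their reward, while the second keeps the model close to its pre-training behavior.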

Experimental results

The experiments test how effective human feedback is when used to fine-tune the model. The model used is Stable Diffusion v1.5. Dataset information is shown in Table 1 (see above) and Table 2, where Table 2 shows the distribution of feedback provided by multiple human labelers.

Human ratings of text-image alignment (the evaluation criteria are color and number of objects). As shown in Figure 4, the method significantly improved image-text alignment. Specifically, 50% of the samples generated by the fine-tuned model received at least two-thirds of the votes in favor (7 or more votes in favor). However, fine-tuning slightly reduces image fidelity (15% vs. 10%).

Figure 2 shows example images from the original model and its fine-tuned counterpart. The original model generated images that missed details specified in the prompt, such as color, background, or count (Figure 2 (a)), whereas the images generated by the fine-tuned model conform to the color, count, and background specified by the prompt. Notably, the fine-tuned model can also generate very high-quality images for unseen text prompts (Figure 2 (b)).

Reward learning results. Figure 3(a) shows the model's scores on seen and unseen text prompts. The learned reward (green) is more consistent with typical human intent than CLIP scores (red).
