
Building a Chinese ChatGPT: which domestic academic forces can seize the opportunity?

- WBOY (reposted)

- 2023-04-12 20:28:06

OpenAI has become the company everyone in the global artificial intelligence field is chasing.

After Google unveiled Bard, China's tech community began to boil over. Natural language processing research teams, long left out in the cold, have suddenly become everyone's favorite, and a race for capital and talent has begun.

"Build a Chinese version of ChatGPT" was spread in Wang Huiwen's hero post. It spread to ten and hundreds of people, becoming the common goal of Chinese AI practitioners.

However heated the discussion about ChatGPT has been in recent days, we must face a harsh reality: in this race to catch OpenAI, few teams will ultimately reach the summit.

On the one hand, the cost is high: the money needed to "refine" (train) a large model is merely the entry ticket to building a ChatGPT. On the other hand, few people have the skills to train large models. Large models are the technical cornerstone on which OpenAI built ChatGPT, so finding the right people is critical.

Large models are bound to require cooperation among industry, academia, and research institutes. To win, a ChatGPT contender needs not only top scientists but also operators who understand the political and business environment and have experience navigating it. The team must also include star entrepreneurs who carry weight in the capital markets and are willing to join.

From the perspective of academic research, this article takes stock of the major domestic contenders in this wave of ChatGPT competition.

1 Universities: Tsinghua University leads the pack

Among all universities, Tsinghua University is definitely at the forefront.

Tsinghua University is an important center of natural language processing (NLP) research in China. It has a long history of NLP research and a large research team led by prominent scholars such as Tang Jie, Sun Maosong, Liu Zhiyuan, and Huang Minlie, whose work on large language models has stood out in recent years. Moreover, many of the people leading large-model efforts at major companies are Tsinghua alumni, such as He Xiaodong, Vice President of JD.com, and Tian Qi, Chief Scientist for artificial intelligence at Huawei Cloud.

Tsinghua has three main groups positioned to catch this ChatGPT wave. The first is the Knowledge Engineering Laboratory (KEG), led by Li Juanzi and her former student Tang Jie. The second is the Natural Language Processing and Social Humanities Computing Laboratory (THUNLP), whose academic leader is Sun Maosong and whose team leader, Liu Zhiyuan, is his former student. The third is the Interactive Artificial Intelligence Research Group (CoAI), co-led by Zhu Xiaoyan and her student Huang Minlie.

Tsinghua Tang Jie

In the previous large-model boom, Professor Tang Jie of Tsinghua's Department of Computer Science was the most prominent academic figure. In 2020, he brought together researchers from universities in Beijing and led the development of the "Wudao" ("Enlightenment") 1.0 and 2.0 models at the Zhiyuan Research Institute (BAAI).


Tang Jie also places great importance on integrating industry, academia, and research and on building a large-model ecosystem. In 2019, building on the flagship product AMiner and the technical results of the Knowledge Engineering Laboratory, Tang Jie and Li Juanzi led the founding of Zhipu AI. The company's current team includes many of Tang Jie's students, all of whom were key contributors to Wudao 2.0.

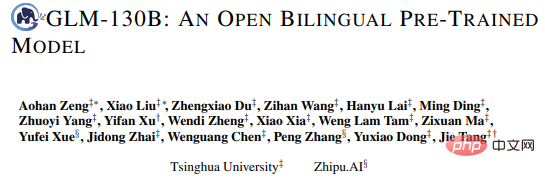

Tang Jie comes from data mining, and Li Juanzi is a well-known scholar in knowledge graphs, so Zhipu AI's large models are characterized by combining data with knowledge. Last year, Zhipu AI released GLM-130B, a bilingual (Chinese and English) model that is open source and free to download for research institutions and individuals.

Tang Jie's team has also built ties with many large companies and helped them develop large models, such as Alibaba's M6. His student Yang Zhilin is a co-founder of the NLP startup Circular Intelligence and also took part in developing Huawei's "Pangu" large model. According to insiders, Zhipu AI is currently working on ChatGPT-like products and will launch them within the next two months.

Tsinghua Sun Maosong, Liu Zhiyuan

In terms of NLP lineage, the most orthodox team in the Tsinghua camp is that of Sun Maosong and Liu Zhiyuan. The Tsinghua University Natural Language Processing and Social Humanities Computing Laboratory (THUNLP) is the earliest and most influential research unit in China to conduct NLP research. When the laboratory was founded in the late 1970s, it was led by Huang Changning, the pioneer of NLP in China. ACL Fellow Sun Maosong was his student, and Liu Zhiyuan was Sun Maosong's student.


THUNLP has deep expertise in NLP. In 2015, it released "Nine Songs", a Chinese poetry generation system trained on a large corpus of human-written poems, which attracted wide attention in academia and industry.

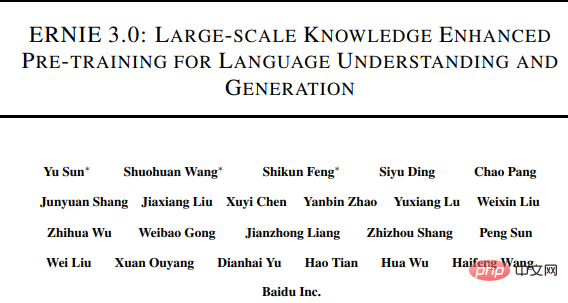

In large language models, the teams of Sun Maosong and Liu Zhiyuan adopted the pre-training paradigm in 2018 and released a language model called ERNIE in early 2019 (which happened to share its name with Baidu's ERNIE, released around the same time). They later developed the CPM model, the predecessor of the Zhiyuan Research Institute's "Wudao Wenyuan".

Sun Maosong, Liu Zhiyuan, and their graduate students have incubated several companies in NLP and large models. These include Power Law Intelligence, founded by Tu Cunchao in 2017, which focuses on applying NLP to the legal field, and Shenyan Technology, founded by Qi Fanchao in 2022, which aims to build an industrial-grade Chinese information processing engine on its own Chinese large models.

There is also Wall-Facing Intelligence, founded last year by Zeng Guoyang, which focuses on accelerating and applying large models. Its team members were the backbone of "Wudao Wenyuan". Together with THUNLP and the Zhiyuan Research Institute's Language Large Model Technology Innovation Center, they launched the OpenBMB open-source community, started CPM-Live, a live-training project for a Chinese large model with tens of billions of parameters, and released tools that accelerate the entire large-model workflow.

In this ChatGPT wave, THUNLP's strengths are its extensive NLP research record and its experience developing large models; its challenges are engineering and commercialization.

Lingxin Intelligence Huang Minlie

As a chatbot, ChatGPT is built on dialogue system technology. In this area, research by Tsinghua's Interactive Artificial Intelligence (CoAI) group is outstanding.

CoAI is led by Zhu Xiaoyan and her student Huang Minlie. Huang Minlie is an expert in conversational AI and the author of the book "Modern Natural Language Generation". He also previously took part in developing the Zhiyuan Research Institute's "Wudao" large model.


Huang Minlie is also one of the Tsinghua scholars who have started their own businesses. In 2021 he founded Lingxin Intelligence. Building on research in large models and dialogue systems, he chose psychological-counseling chatbots as the company's track. In 2022, his team launched "AI Utopia", an interactive bot that lets users create custom AI characters and hold in-depth conversations with them.

His team is one of the few in China capable of training large models. After recently closing a Pre-A financing round, Huang Minlie said that rather than building a ChatGPT, they hope to position Lingxin Intelligence as a "Chinese Character.AI", offering users emotional companionship based on text generation. Reportedly, the team has accumulated a large amount of high-quality training data in the mental health domain, and its model has more than 3 billion parameters.

2 Major Tech Companies

Among major domestic Internet companies, the first tier in large models includes Baidu, Alibaba, JD.com, and Huawei. Other Internet companies that have announced plans to develop ChatGPT-like products in this wave include Tencent, ByteDance, Kuaishou, 360, iFlytek, and NetEase.

In an arms race like ChatGPT R&D, the deep pockets of the big companies undoubtedly give them a decisive advantage. Still, some industry insiders comment that the ChatGPT-like technology of some major companies, including 360, currently performs only slightly better than GPT-2, a generation behind today's ChatGPT, and that some companies (such as Kuaishou, iFlytek, and NetEase) are starting from scratch on large language models. The release timing and real-world performance of their ChatGPT-like beta versions are therefore highly uncertain.

Baidu Wenxin Large Model

In natural language processing, Baidu has the longest history of technical accumulation among the major companies. R&D on Baidu's Wenxin large models is led by CTO Wang Haifeng, who is also commander-in-chief of the "Wen Xin Yi Yan" project. Its core members include Wu Tian (Vice President of Baidu Group and head of R&D for the PaddlePaddle platform) and Wu Hua (Chair of Baidu's Technical Committee and one of the founders of Baidu's translation technology team).


Baidu was one of the first teams in China to work in depth on pre-trained models. In 2019 it released ERNIE 1.0 and 2.0, which outperformed BERT on Chinese tasks; ERNIE 3.0, released in July 2021, surpassed GPT-3 on SuperGLUE; and "Pengcheng-Baidu·Wenxin" (ERNIE 3.0 Titan), released the same year, was the first knowledge-enhanced model with hundreds of billions of parameters.

"Knowledge enhancement" is the technical route taken by the Wenxin series, that is, the introduction of language knowledge and world knowledge, etc. Fusion learning from large-scale knowledge graphs and massive data to improve the learning efficiency and interpretability of large models.

In 2021, Baidu also launched a chatbot called PLATO, built on PLATO-XL, a ten-billion-parameter-scale dialogue generation model.

Baidu's advantages lie in its rich search corpus and user data, its many years of deep investment in AI research, and the support of its PaddlePaddle deep learning platform and in-house chips.

After Microsoft and Google went to war, Baidu was among the first in China to announce plans for a ChatGPT-like product ("Wen Xin Yi Yan"). Insiders revealed that it may be a standalone portal, or it may follow Microsoft Bing's lead and be integrated into Baidu's search portal.

Alibaba Tongyi Large Model

Alibaba DAMO Academy entered the large-model field in 2021, taking part in developing the Zhiyuan Research Institute's "Wudao·Wenhui" large model. It later launched PLUG, a text-only pre-trained language model with 27 billion parameters that fully follows the GPT-3 architecture, integrates language understanding and generation, and performs close to GPT-3.

The M6 multimodal large-model series, developed by Yang Hongxia, formerly of DAMO Academy's Intelligent Computing Laboratory (she left last year), together with Tang Jie's team at Tsinghua University, ranges from ten billion to ten trillion parameters.

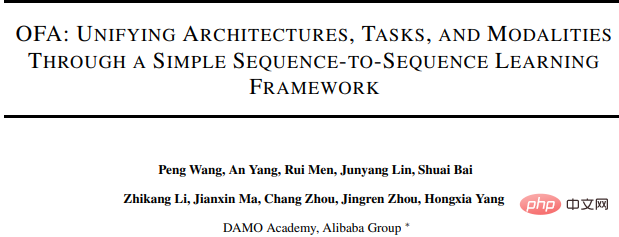

Led by Zhou Jingren, the current vice president of DAMO Academy, the academy launched the "Tongyi" large model in September last year.


The "Tongyi" large model unified the model for the first time state, architecture and tasks, and the technical support behind it is the unified learning paradigm OFA.

Last year, to foster an open-source ecosystem for Chinese large models, DAMO Academy also launched ModelScope, an open-source AI model community that made a big splash in the industry.

DAMO Academy recently confirmed that it is developing an Alibaba version of ChatGPT based on the "Tongyi" large model. Besides text generation, it can also generate images. Its ChatGPT-like products are reportedly going to be deeply integrated with DingTalk.

JD Yanxi Large Model

JD.com began researching AI text generation in 2020 to generate product-page descriptions. Reportedly, 20% of the product descriptions on JD.com's store pages are generated by its AI team using NLP. R&D stalled for a time amid a general slump in NLP, but JD.com later renewed its focus and launched the Yanxi large model.

He Xiaodong, who left Microsoft for JD.com in 2018, is currently vice president of JD.com Group, executive director of the JD.com AI Research Institute, and leader of JD.com's large-model research team.


In 2021, under the leadership of Zhou Bowen, former head of JD AI (who left in November 2021), he developed the domain model K-PLUG on JD's Yanxi platform. The model is closely tied to JD.com's e-commerce scenarios, learns domain-specific e-commerce knowledge, and can automatically generate product copy.

Huawei Pangu Large Model

Huawei's computing resources give it a natural advantage in large-model research. Huawei began positioning itself in large models in 2020, with Tian Qi (IEEE Fellow, Academician of the International Eurasian Academy of Sciences) in the lead.

In 2020, he joined Huawei Cloud as chief scientist for artificial intelligence. That summer, right after GPT-3 came out, he formed a team and began developing the Pangu model, making Huawei one of the first major companies to enter the large-model race in 2020.

Built on Ascend (Shengteng) AI and developed in cooperation with Peng Cheng Laboratory and Circular Intelligence, Huawei released the "Pangu" series of large models in April 2021, comprising four major models: Chinese language, vision, multimodal, and scientific computing.

The Pangu NLP large model was the industry's first Chinese generation-and-understanding model with hundreds of billions of parameters. During pre-training, it learned from 40 TB of Chinese text, including few-shot data from specific industry segments. Architecturally, Pangu uses an encoder-decoder design to ensure good performance on both generation and understanding.

Huawei has experience developing models with hundreds of billions of parameters and powerful computing resources. This time, however, it has kept a low profile and has not yet said whether it will develop ChatGPT-like products.

3 Academic Start-ups

The AI commercialization boom triggered by ChatGPT has extremely high technical barriers, which also gives scientists huge opportunities to start their own businesses.

Zhou Ming, founder of Lanzhou Technology; Zhang Jiaxing, chair scientist at the IDEA Research Institute; Lan Zhenzhong, founder of Xihu Xinchen; and Zhou Bowen, founder of Xianyuan Technology, are all representative academic entrepreneurs.

To win the coming ChatGPT business war, they also need to strengthen their engineering and market operations. Zhang Jiaxing and Lan Zhenzhong, for example, are already looking for CEOs for their R&D teams.

We can also observe two ways of entering the race: one starts from strong technical capabilities and then makes up for weaknesses in commercialization; the other has a boss with commercialization experience take the lead and recruit technical talent to form the team.

We will wait and see which model will be more successful in the end.

Lanzhou Technology Zhou Ming

When the previous round's trend of piling parameters onto large models was in full swing, Zhou Ming took a different approach, choosing a lightweight large-model route for his startup, Lanzhou Technology.

In June 2021, Zhou Ming founded Lanzhou Technology. The following month, his team released "Mencius", a model with one billion parameters whose performance was comparable to hundred-billion-parameter models.

This result rests on Zhou Ming's experience in both academia and industry.


Zhou Ming is one of the most influential Chinese scientists in NLP. He received his PhD from Harbin Institute of Technology in 1991; like Baidu CTO Wang Haifeng, he studied under Li Sheng, an HIT professor and leading figure in NLP. During his PhD, Zhou Ming developed China's first Chinese-English translation system.

After graduating, Zhou Ming first taught at Tsinghua University. In 1999, he was recruited by Kai-Fu Lee, the founding director of Microsoft Research Asia (MSRA); he became head of the NLP group in 2001 and was later promoted to vice president of MSRA.

At MSRA, Zhou Ming achieved a great deal in bringing NLP technology into industry. He authored or led more than 100 papers at ACL, the field's top conference, and the related technologies were applied in world-class products such as Windows, Office, Azure, and Microsoft XiaoIce.

In 2020, determined to find a new way of combining academia and industry, Zhou Ming ended his 21-year career at Microsoft and joined the Artificial Intelligence Engineering Institute of Innovation Works, founded by Kai-Fu Lee, as chief scientist. There he began incubating a startup team, which eventually became Lanzhou Technology.

According to official announcements, Lanzhou Technology will use its large language model, whose underlying technology resembles ChatGPT's, and partner with Chinese Online, which holds massive amounts of data, to build a domestic ChatGPT. It is not yet known whether Zhou Ming's team will stay on the lightweight route or turn to models with more than 100 billion parameters as its technical foundation.

Xianyuan Technology Zhou Bowen

In 2022, Zhou Bowen returned to academia, joining Tsinghua University as a tenured professor in the Department of Electronic Engineering, Huiyan Chair Professor, and director of the department's Collaborative Interactive Intelligence Research Center.

Zhou Bowen is also a new entrepreneur: at the end of 2021, he resigned as senior vice president of JD.com to found Xianyuan Technology.


Zhou Bowen graduated from the Special Class for the Gifted Young at the University of Science and Technology of China and received his PhD from the University of Colorado Boulder. He then worked at IBM, where he served as head of AI Foundations at IBM Research, chief scientist of the IBM Watson Group, and IBM Distinguished Engineer. In 2017, he joined JD.com as Group Vice President, responsible for JD.com's AI research.

Zhou Bowen has worked for many years on NLP, multimodality, human-computer dialogue, and related fields. The natural language representation mechanism he proposed, which combines self-attention with multiple heads, later became one of the core ideas of the Transformer architecture. In AIGC, he also proposed two natural language generation model architectures and algorithms. In 2020, he was elected an IEEE Fellow.

In Zhou Bowen's view, ChatGPT's core advance lies in better collaboration with humans and interactive learning, rather than in a larger model. He also believes ChatGPT will inevitably become multimodal, which is a key research direction of the Collaborative Interactive Intelligence Research Center he leads.

At Xianyuan Technology, Zhou Bowen has also deployed several AIGC applications, such as using generative AI to accelerate real-time, consumer- and market-centered innovation and to raise the success rate of new SKUs.

IDEA Research Institute Zhang Jiaxing

In the Guangdong-Hong Kong-Macao Greater Bay Area, besides Peng Cheng Laboratory and Tencent, there is another large-model player: the IDEA Research Institute (full name: "Guangdong-Hong Kong-Macao Greater Bay Area Digital Economy Research Institute"), founded in 2020 by well-known AI scientists including Shen Xiangyang (Harry Shum).

Large-model work at the IDEA Research Institute is led by Zhang Jiaxing, another AI scientist who came out of MSRA.


Zhang Jiaxing received his PhD from Peking University's Department of Electronics in 2006, studying under Hou Shimin (now a professor in that department). After graduating, he spent some time at Baidu and then joined Microsoft's Bing search team, where he worked with Zhou Jingren. Later, at MSRA, he did systems-oriented research before turning to deep learning in 2012.

In 2014, Zhang Jiaxing joined Alibaba's iDST team (the predecessor of DAMO Academy), and a year later moved to Ant Financial to lead its NLP team in applying chatbots to financial scenarios. In 2020, at the invitation of 360 Digital CEO Wu Haisheng, he joined 360 Digital as chief scientist, leaving after a year and a half.

On the recommendation of MSRA director Zhou Lidong, Zhang Jiaxing joined the IDEA Research Institute as chair scientist in charge of the Cognitive Computing and Natural Language Research Center.

Zhang Jiaxing's team has been at the forefront of the AIGC wave. He led the development of the open-source "Fengshen Bang" model series, and last year launched "Taiyi", China's first Chinese-language Stable Diffusion model.

After ChatGPT appeared, Zhang Jiaxing quickly redirected the team's large-model work toward ChatGPT-style dialogue tasks at the end of last year. According to him, the ChatGPT-like model his team developed performs on par with ChatGPT with only 5 billion parameters, and it generates text quickly. It is currently in internal testing and will open for public testing soon.

Although the current 5-billion-parameter model already works very well, Zhang Jiaxing next plans to develop a ChatGPT-like product backed by a 100-billion-parameter model and to commercialize it. This is why he has recently been preparing to raise funds and find a CEO.

Xihu Xinchen Lan Zhenzhong

Also looking for a CEO for the team is Lan Zhenzhong, the founder of Xihu Xinchen.


"Dream Stealer" (since renamed "Dream Diary"), which stood out among the many domestic AI painting products last year, came from Lan Zhenzhong's team.

Lan Zhenzhong received his bachelor's degree from Sun Yat-sen University and studied computer vision for his PhD at Carnegie Mellon University. After joining Google, he switched to natural language processing and, using Google's TPU resources, developed the well-known lightweight large model ALBERT.

In June 2020, Lan Zhenzhong returned to China to join Westlake University, where he founded a deep learning laboratory and began multimodal research combining language and vision.

Drawing on the research resources of Westlake University and of the Zhiyuan "Qingyuan Club", which he had joined earlier, Lan Zhenzhong adopted an industry-academia-research model and founded Xihu Xinchen. The company first used large models to build the psychological-counseling chatbot "Xiaotian", then launched the domestic AI painting product "Dream Stealer" during the Stable Diffusion wave in August 2022.

Not long after ChatGPT came out, Xihu Xinchen launched a similar text generation product, "Xinchen Chat". Unlike ChatGPT, it can access the Internet and supports multimodal interaction, outputting images as well as text.

Like many scholars turned entrepreneurs, Lan Zhenzhong keenly feels that to build a Chinese OpenAI, the team needs a CEO with management experience and strong resource-integration skills.

Stay tuned for tomorrow's AI Technology Review article: "Lan Zhenzhong also posts a 'hero post': Chinese ChatGPT star company seeks CEO".
