
A summary of open-source datasets for autonomous driving

- 王林 (reposted)

- 2023-04-12 20:07:04, 1827 views

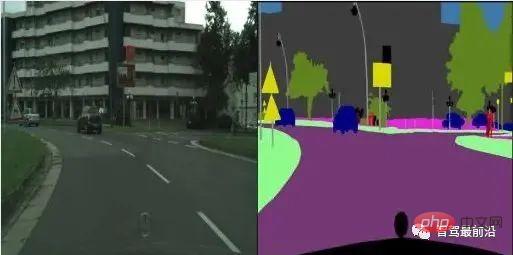

Cityscapes Image Pairs Dataset

Dataset download address: http://m6z.cn/6qBe8e

The Cityscapes data (dataset home page) contains labeled videos taken from vehicles driven in Germany. This version is a processed subsample created as part of the Pix2Pix paper. The dataset contains still images from the original videos, and the semantic segmentation labels are shown alongside the original images. It is one of the best datasets for semantic segmentation tasks.
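Because each sample shows the photo and its label map together, a loader typically has to separate the two before training. The following is a minimal sketch, assuming the two are stored side by side in a single image (photo on the left, labels on the right) that has already been decoded into rows of pixels. The function name `split_pair` and that layout are assumptions for illustration, not something the dataset page states.

```python
def split_pair(rows):
    """Split a side-by-side image (a list of pixel rows) into left and right halves."""
    if not rows:
        raise ValueError("empty image")
    width = len(rows[0])
    if width % 2 != 0 or any(len(row) != width for row in rows):
        raise ValueError("expected equal-length rows with an even width")
    half = width // 2
    left = [row[:half] for row in rows]    # assumed: the original photo
    right = [row[half:] for row in rows]   # assumed: the segmentation labels
    return left, right


# Tiny 2x4 stand-in for a decoded image; the numbers are placeholder pixels.
demo = [[1, 2, 9, 9],
        [3, 4, 8, 8]]
photo, labels = split_pair(demo)
```

If the real files use a different layout (for example, labels on the left), only the two slicing lines need to change.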

Semantic Segmentation Dataset for Self-Driving Cars

Dataset download address: http://m6z.cn/5zYdv9

The dataset provides images and labeled semantic segmentations captured with the CARLA self-driving car simulator. It can be used to train ML algorithms to identify the semantic segmentation of cars, roads, and other objects in images.

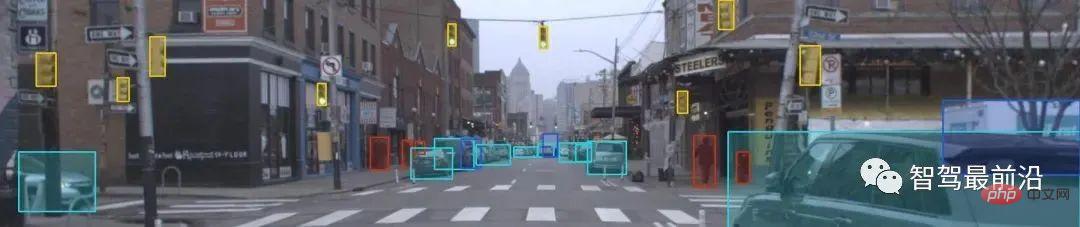

BDD100K Driving Video Dataset

Dataset download address: http://m6z.cn/6qBeaa

A large-scale dataset from UC Berkeley covering all weather and lighting conditions. It includes 1,100 hours of HD video, GPS/IMU data, timestamp information, 2D bounding box annotations for 100,000 images, semantic segmentation and instance segmentation annotations, driving decision annotations, and road condition annotations for 10,000 images. The ten autonomous driving tasks officially recommended for this dataset are: image tagging, lane detection, drivable area segmentation, road object detection, semantic segmentation, instance segmentation, multi-object detection and tracking, multi-object segmentation and tracking, domain adaptation, and imitation learning.

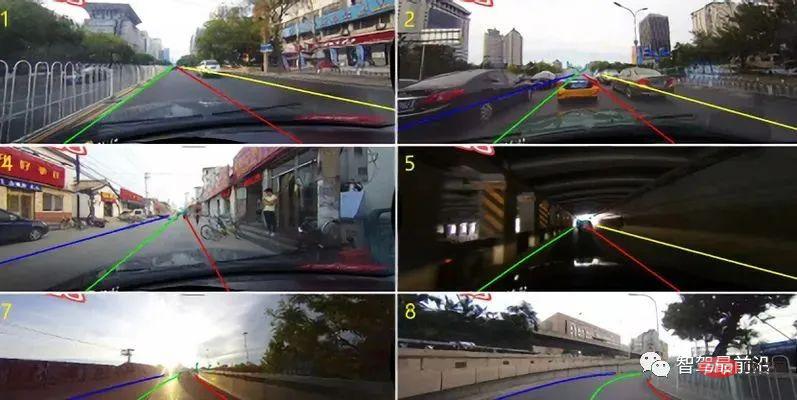

CULane Dataset

Dataset download address: http://m6z.cn/643fxb

CULane is a large-scale, challenging dataset for academic research on traffic lane detection. It was collected by cameras mounted on six different vehicles driven by different drivers in Beijing. More than 55 hours of video were collected, and 133,235 frames were extracted. In each frame, the traffic lanes are manually annotated with cubic splines. Where lane markings are occluded by vehicles or are not visible, the lanes are still annotated according to the context. Lanes on the other side of a barrier are not annotated. The dataset focuses on detecting four lane markings, which receive the most attention in practical applications. Other lane markings are not annotated.
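To make the cubic spline annotation concrete, here is a minimal sketch of how a few annotated lane points can be turned into a smooth curve. It fits a natural cubic spline that gives the horizontal position x as a function of the image row y. Treating x as a function of y, the natural boundary condition, and the sample points are all assumptions for illustration; this is not CULane's own annotation tooling.

```python
from bisect import bisect_right


def natural_cubic_spline(ts, vs):
    """Return a function evaluating the natural cubic spline through (ts[i], vs[i])."""
    n = len(ts) - 1
    if n < 1 or len(vs) != len(ts):
        raise ValueError("need at least two points and matching lengths")
    if any(ts[i + 1] <= ts[i] for i in range(n)):
        raise ValueError("ts must be strictly increasing")
    h = [ts[i + 1] - ts[i] for i in range(n)]
    alpha = [0.0] * (n + 1)
    for i in range(1, n):
        alpha[i] = 3 * (vs[i + 1] - vs[i]) / h[i] - 3 * (vs[i] - vs[i - 1]) / h[i - 1]
    # Forward sweep of the tridiagonal system for the quadratic coefficients c.
    l, mu, z = [1.0] * (n + 1), [0.0] * (n + 1), [0.0] * (n + 1)
    for i in range(1, n):
        l[i] = 2 * (ts[i + 1] - ts[i - 1]) - h[i - 1] * mu[i - 1]
        mu[i] = h[i] / l[i]
        z[i] = (alpha[i] - h[i - 1] * z[i - 1]) / l[i]
    # Back substitution; c[0] and c[n] stay 0 (zero curvature at both ends).
    b, c, d = [0.0] * n, [0.0] * (n + 1), [0.0] * n
    for j in range(n - 1, -1, -1):
        c[j] = z[j] - mu[j] * c[j + 1]
        b[j] = (vs[j + 1] - vs[j]) / h[j] - h[j] * (c[j + 1] + 2 * c[j]) / 3
        d[j] = (c[j + 1] - c[j]) / (3 * h[j])

    def evaluate(t):
        # Pick the segment containing t, clamping to the first or last segment.
        j = min(max(bisect_right(ts, t) - 1, 0), n - 1)
        dt = t - ts[j]
        return vs[j] + b[j] * dt + c[j] * dt ** 2 + d[j] * dt ** 3

    return evaluate


# Hypothetical annotated points for one lane: image rows (y) and columns (x).
rows = [300, 400, 500, 590]
cols = [820, 760, 690, 600]
lane_x = natural_cubic_spline(rows, cols)
dense_lane = [(lane_x(y), y) for y in range(300, 591, 10)]
```

The spline passes through every annotated point, so `dense_lane` can be rasterized into a lane mask or compared against predictions at a fixed set of rows.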

Africa Traffic Sign Dataset

Dataset download address: http://m6z.cn/6j5167

Two open-source datasets were used to extract only the traffic signs used in the African region. The dataset contains 76 classes covering all categories, such as regulatory, warning, guidance, and information signs. It contains a total of 19,346 images, with at least 200 instances per category.
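The claim of at least 200 instances per category is easy to check after downloading the data. A minimal sketch, assuming the annotations have already been flattened into one class label per sign instance; the function name `underrepresented` and the sample labels are hypothetical.

```python
from collections import Counter


def underrepresented(labels, min_instances=200):
    """Return the classes that have fewer than min_instances labeled instances."""
    counts = Counter(labels)
    return sorted(name for name, n in counts.items() if n < min_instances)


# Hypothetical labels: one entry per annotated sign instance.
sample_labels = ["stop"] * 200 + ["yield"] * 199
too_few = underrepresented(sample_labels)
```

An empty result means every class meets the threshold.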

Argoverse Dataset

Dataset download address: http://m6z.cn/5P0b9B

Argoverse covers two tasks: 3D tracking and motion forecasting. The datasets for the two tasks are independent of each other, but they share the same collection equipment and collection locations. Argoverse provides 360-degree video and point cloud data, with maps reconstructed from the point clouds, and covers all lighting conditions throughout the day. 3D bounding boxes are annotated in the videos and point clouds. The 3D tracking dataset contains 113 videos of 15 to 30 seconds each, and the motion forecasting dataset contains 323,557 videos of 5 seconds each (320 hours in total). The main highlight of the dataset is the linkage between the raw data and the maps.

Driving Simulator Lane Detection Dataset

Dataset download address: http://m6z.cn/5zYdzP

This dataset consists of images generated by the CARLA driving simulator. The training images are captured by a dashcam mounted in the simulated vehicle. The label images are segmentation masks that classify pixels as belonging to the left lane boundary or the right lane boundary. The challenge associated with this dataset is to train a model that can accurately predict the segmentation masks of the validation set.
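One common way to judge how accurately a model predicts such masks is per-class intersection over union (IoU). The sketch below is illustrative only: the class ids (0 for background, 1 for the left boundary, 2 for the right boundary) are assumptions and may not match the encoding used in the actual label images.

```python
def class_iou(pred, truth, class_id):
    """Intersection over union for one class; None if the class appears in neither mask."""
    inter = union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            in_pred = p == class_id
            in_truth = t == class_id
            inter += in_pred and in_truth
            union += in_pred or in_truth
    return inter / union if union else None


# Assumed encoding: 0 = background, 1 = left boundary, 2 = right boundary.
truth = [[1, 0, 0, 2],
         [1, 0, 0, 2],
         [1, 0, 2, 0]]
pred = [[1, 0, 0, 2],
        [0, 1, 0, 2],
        [1, 0, 0, 2]]
left_iou = class_iou(pred, truth, 1)
right_iou = class_iou(pred, truth, 2)
```

Reporting the two boundary classes separately avoids the score being dominated by the much larger background region.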

On-Road Autonomous Driving Vehicle Dataset

Dataset download address: http://m6z.cn/5ss0xe

This dataset provides easy-to-use training data for autonomous vehicles. It gives the steering angle, acceleration, braking, and gear position corresponding to each frame of the driving video. The video was recorded with a camera mounted on the windshield of a car driving along a road in the Indian state of Kerala.
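Per-frame control signals like these map naturally onto one record per video frame. The following sketch parses such records from CSV text; the column names, units, and sample rows are hypothetical and would need to be adapted to the dataset's actual file layout.

```python
import csv
import io
from dataclasses import dataclass


@dataclass
class FrameControl:
    frame: int
    steering_angle: float
    acceleration: float
    brake: float
    gear: int


def read_controls(text):
    """Parse CSV text with one row of control signals per video frame."""
    reader = csv.DictReader(io.StringIO(text))
    return [
        FrameControl(
            frame=int(row["frame"]),
            steering_angle=float(row["steering_angle"]),
            acceleration=float(row["acceleration"]),
            brake=float(row["brake"]),
            gear=int(row["gear"]),
        )
        for row in reader
    ]


# Hypothetical sample; real column names and value ranges may differ.
SAMPLE = """frame,steering_angle,acceleration,brake,gear
0,-1.5,0.20,0.0,2
1,-0.5,0.25,0.0,2
2,3.0,0.00,0.4,1
"""
records = read_controls(SAMPLE)
```

Each `FrameControl` can then be paired with the decoded frame of the same index to form a training example for steering or speed prediction.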

Caltech Pedestrian Dataset

Dataset download address: http://m6z.cn/5P0bdX

The Caltech Pedestrian Dataset consists of approximately 10 hours of 640x480, 30 Hz video taken from a vehicle driving through regular traffic in an urban environment. About 250,000 frames (in 137 segments of roughly one minute each) were annotated, with a total of 350,000 bounding boxes and 2,300 unique pedestrians.
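The figures above can be cross-checked with simple arithmetic. The sketch below uses only the numbers quoted in the description and treats each segment as exactly one minute long, which is an approximation.

```python
FPS = 30

# About 10 hours of video at 30 frames per second.
total_frames = 10 * 3600 * FPS

# 137 segments, each assumed to be exactly one minute long.
segment_frames = 137 * 60 * FPS

# Average number of bounding boxes per annotated frame.
boxes_per_frame = 350_000 / 250_000

# Share of all recorded frames that carry annotations.
annotated_share = 250_000 / total_frames
```

The segment count gives 246,600 frames, consistent with the quoted figure of about 250,000, and the annotated frames make up a little under a quarter of the roughly 1.08 million recorded frames.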

CamSeq 2007 Dataset

Dataset download address: http://m6z.cn/5ss0Ho

CamSeq is a ground truth dataset that can be freely used for research on object recognition in video. The dataset contains 101 image pairs of 960x720 pixels. Each mask is marked by an "_L" appended to the file name. All images (original and ground truth) are in uncompressed 24-bit color PNG format.

The dataset was originally designed for the self-driving car problem. The sequence depicts a moving driving scene in the city of Cambridge, filmed from a moving car. It is a challenging dataset because, in addition to the car's ego-motion, other cars, bicycles, and pedestrians have their own motion, and they often occlude one another.
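The "_L" naming convention makes it straightforward to match each original image with its mask. A minimal sketch, assuming the suffix is inserted just before the file extension; the helper names and the example file names are hypothetical.

```python
from pathlib import PurePosixPath


def mask_name(image_name):
    """Build the mask file name by appending "_L" to the image's base name."""
    path = PurePosixPath(image_name)
    return str(path.with_name(path.stem + "_L" + path.suffix))


def pair_files(names):
    """Return (image, mask) pairs for every image whose mask is also present."""
    available = set(names)
    pairs = []
    for name in sorted(available):
        if PurePosixPath(name).stem.endswith("_L"):
            continue  # this entry is itself a mask
        mask = mask_name(name)
        if mask in available:
            pairs.append((name, mask))
    return pairs


# Hypothetical directory listing; the last image has no mask and is skipped.
files = ["seq_0001.png", "seq_0001_L.png", "seq_0002.png"]
pairs = pair_files(files)
```

Counting the pairs returned for the full download is a quick way to confirm that all 101 image pairs are present.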

Real Industrial Scene Datasets

A large number of real-scene datasets have been collected from industry, totaling 1473 GB of high-quality internal dataset resources. The datasets come from real business scenarios and are collected and provided by industry partners and media.

Under the ranking module, developers can use the internal datasets for algorithm development free of charge. Free computing power and answers to related technical questions are also provided.

In addition, once an algorithm model's score reaches the required standard, the developer receives a fixed reward. Top-ranked developers can also sign a contract with the platform and continue to receive a long-term share of the revenue from orders for their algorithms.

The platform serves real needs in smart cities, commercial real estate, open-kitchen monitoring, and other industries, covering visual algorithm directions that include but are not limited to object detection, behavior recognition, image segmentation, video understanding, object tracking, and OCR.

Latest object detection project (mobile phone recognition): http://m6z.cn/6qBdJS

More ranking projects: http://m6z.cn/6xrthf
