
Only two days after going live, the AI paper-writing website built on a large language model was taken down at lightning speed: "irresponsible fabrication"

- Reposted by 王林

- 2023-04-12 19:19:26

A few days ago, Meta AI and Papers with Code released Galactica, a large language model. One of its headline features is that it frees your hands: acting as a ghostwriter, it can write papers for you. How complete are those papers? Abstracts, introductions, formulas, references and so on are all included.

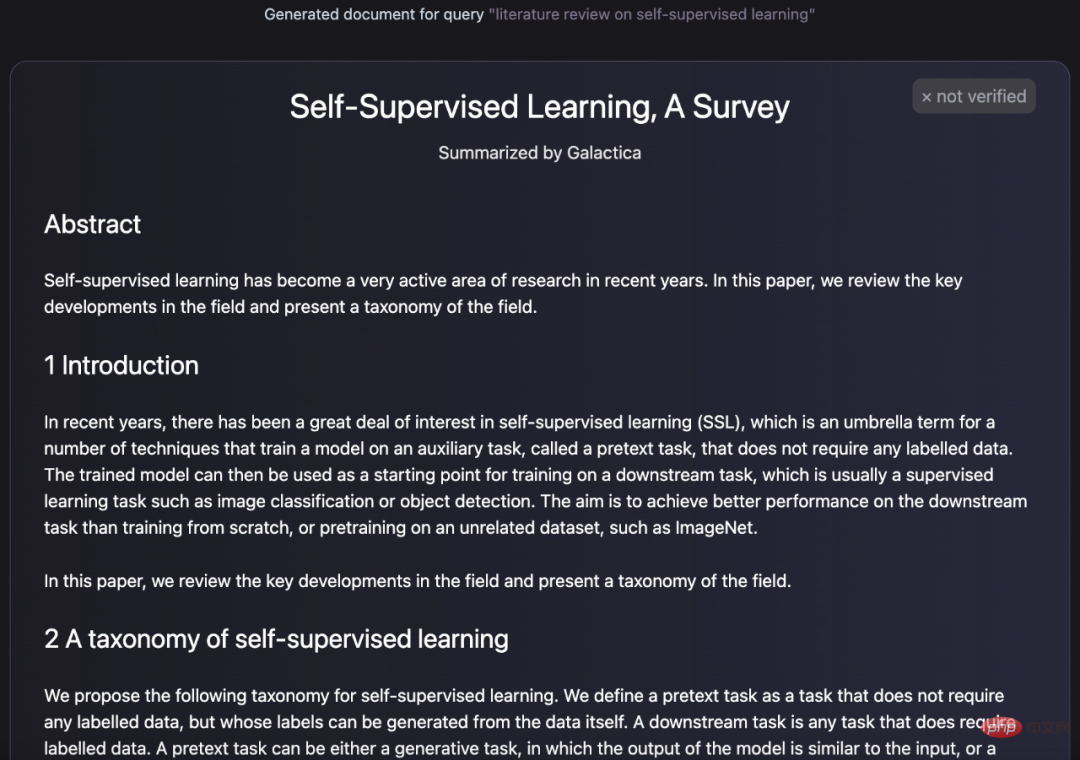

For example, the text Galactica generates below is laid out just like a paper:

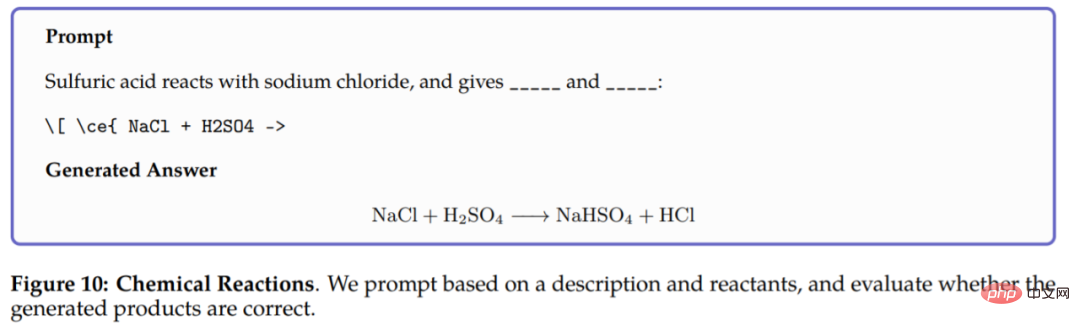

Besides generating papers, Galactica can also generate encyclopedia entries for terms and give knowledgeable answers to questions. Beyond text generation, it can perform multimodal tasks involving chemical formulas and protein sequences. For example, given a chemical equation written in LaTeX, Galactica is asked to predict the products of the reaction, and the model can reason from the reactants alone. The results are as follows:

To let users try out this research, the team also launched a trial version. As shown below, a few days ago this interface still offered input, generation and other functions.

(Previous version) Galactica trial version: https://galactica.org/

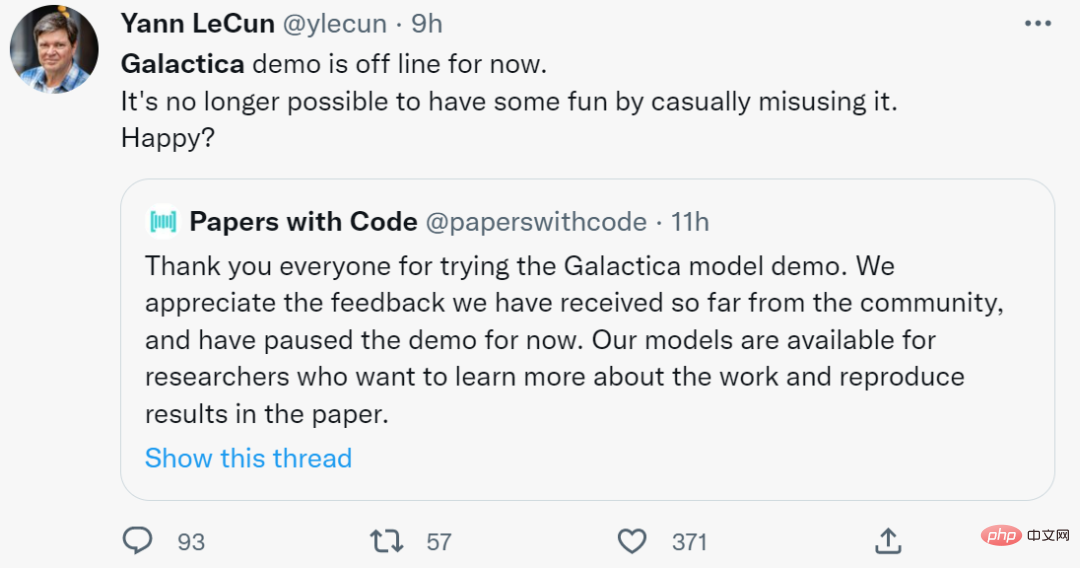

Within just a few days, the interface had changed to this, and input was no longer possible.

"I asked Galactica some questions, and its answers were all wrong or biased, yet they sounded correct and authoritative." After a series of experiments, Twitter user Michael Black said: "The text Galactica generates is grammatical and feels authentic. It can pass as a real scientific paper. Such an article may be correct, but it may also be wrong or biased, which is very likely to happen. These errors are hard to detect, and so they can influence how people think."

"It offers science that sounds authoritative but is not grounded in the scientific method. Galactica produces pseudoscience based on the statistical properties of scientific writing, and it is hard to tell real from fake. This could usher in an era of deep scientific fakes. These generated papers will be cited by others in real papers, and it will be a mess. I applaud the ambition of this project, but I want to caution everyone: this is not an accelerator for science, nor is it even a useful tool for scientific writing. It is potentially distorting and dangerous for science."

Screenshot of Michael Black's tweets. Link: https://twitter.com/Michael_J_Black/status/1593133722316189696

Michael Black is not the only one who found that Galactica suffers from a lack of rigor and produces pseudoscientific articles. Other users spotted the same flaw. Here are some of their comments.

Causing controversy

After the trial version of Galactica was launched, many scholars raised doubts about it.

AI researcher David Chapman pointed out that language models should organize and synthesize language rather than generate knowledge:

This is indeed worth thinking about. If an AI model can generate "knowledge", how do we judge whether that knowledge is correct? And how might it influence, or even mislead, people?

David Chapman used one of his own papers to show how serious the problem is. Galactica extracted the key terms from the "A logical farce" section of the paper, drew on some related Wikipedia articles, and stitched them together into an article full of errors.

Because the Galactica trial version has been taken down, we cannot check how far this article strays from the meaning of the original paper. But it is easy to imagine that a beginner reading this Galactica-synthesized article could be seriously misled.

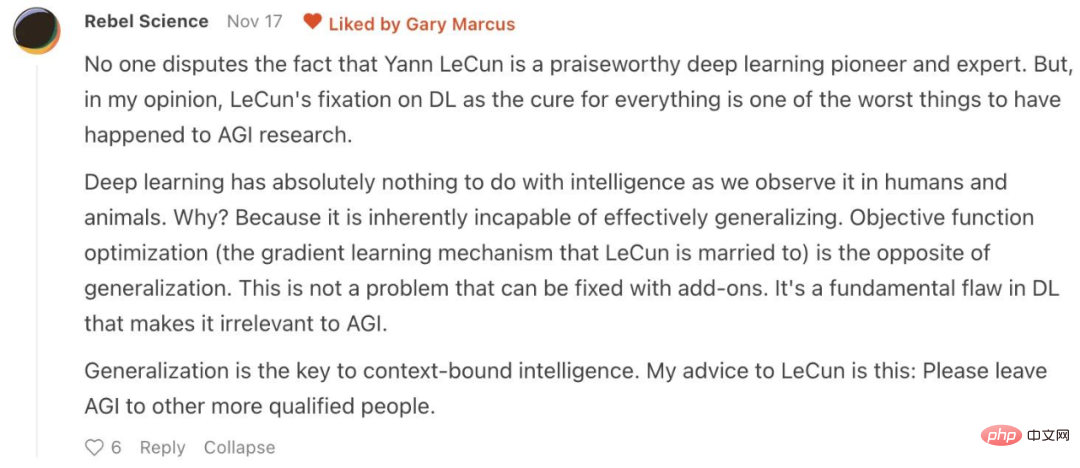

Gary Marcus, a well-known AI scholar and founder of Robust.AI, also voiced strong doubts about Galactica: "Large language models (LLMs) mixing up mathematical and scientific knowledge is a bit scary. High school students might love it and use it to fool their teachers. That should worry us."

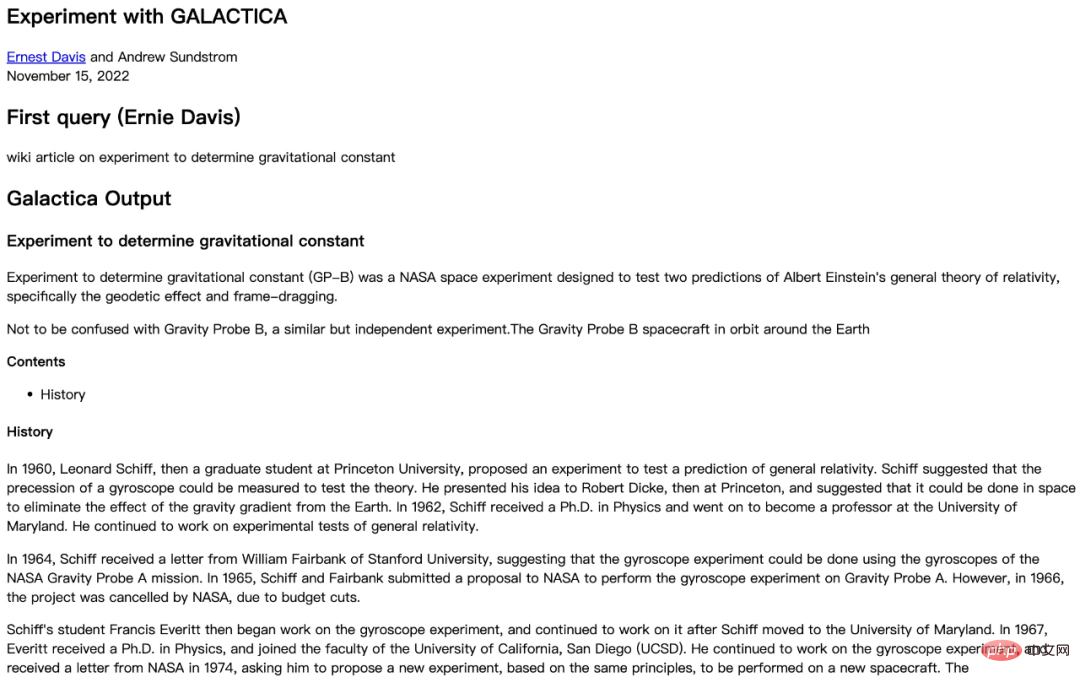

Researchers in the Department of Computer Science at New York University also tested Galactica's output and found that it answered the question incorrectly:

Picture source: https://cs.nyu.edu/~davise/papers/ExperimentWithGalactica.html

To be fair, Galactica's answer in this experiment does contain some correct information, for example:

- Gravity Probe B (GP-B) was indeed a NASA mission launched to test general relativity and the frame-dragging effect. Leonard Schiff was indeed the physicist who proposed the experiment, and Francis Everitt was the project's principal investigator (PI).

- Gravity Probe A was indeed an early test of Einstein’s theories.

However, the NYU researcher asked for a Wikipedia article on experiments to determine the gravitational constant, whereas Galactica answered with encyclopedia information on experiments testing the general theory of relativity. The answer misses the question entirely.

Beyond that, several details in Galactica's answer are factually wrong:

- Galactica's answer stressed that GP-B should not be confused with the Gravity Probe B experiment, when in fact "GP-B" is simply the abbreviation for "Gravity Probe B".

- Leonard Schiff, who appears in the answer, received his PhD from MIT in 1937. He taught at the University of Pennsylvania and later at Stanford University, but never at the University of Maryland. Francis Everitt received his PhD from Imperial College in 1959.

- Gravity Probe A was not canceled because of budget cuts in 1966, and it had nothing to do with gyroscopes. In fact, Gravity Probe A launched in 1976, and the experiment used a maser (microwave amplification by stimulated emission of radiation).

The NYU experiment shows concretely that Galactica's output contains serious errors. The study ran multiple experiments with different questions, and each time Galactica's answers were full of mistakes. This shows that Galactica's errors are no accident.

New York University experiment report: https://cs.nyu.edu/~davise/papers/ExperimentWithGalactica.html

Faced with Galactica's failure, some users blamed the limitations of deep learning itself: "The essence of deep learning is learning from data, which is fundamentally different from human intelligence, so it cannot achieve artificial general intelligence (AGI) at all."

Opinions differ on where deep learning is headed. But there is no doubt that a language model like Galactica, which generates incorrect "knowledge", is not the way to go.

