Open-source model, single-GPU training: understanding AudioLDM, the popular text-guided audio generation technology

Given a piece of text, artificial intelligence can now generate music, speech, sound effects, and even imagined sounds such as black holes and laser guns. AudioLDM, recently released jointly by the University of Surrey and Imperial College London, quickly became popular abroad, receiving nearly 300 retweets and 1,500 likes on Twitter within a week. On the second day after the model was open-sourced, AudioLDM reached the top of the Hugging Face trending list, and within a week it entered Hugging Face's list of the 40 most-liked applications (out of about 25,000 in total). Many derivative works based on AudioLDM quickly followed.

The AudioLDM model has the following highlights:

- It is the first open-source model that can generate music, speech, and sound effects from text within a single model.

- Developed in academia, it achieves the best results to date with less data, a single GPU, and a smaller model.

- It proposes training the generative model in a self-supervised manner, so that text-guided audio generation is no longer limited by the scarcity of paired text-audio data.

- Without any additional training (zero-shot), the model can perform audio style transfer, audio inpainting, and audio super-resolution.

- Project homepage: https://audioldm.github.io/

- Paper: https://arxiv.org/abs/2301.12503

- Open source code and model: https://github.com/haoheliu/AudioLDM

- Hugging Face Space: https://huggingface.co/spaces/haoheliu/audioldm-text-to-audio-generation

The authors first released a preview of the model on January 27, using a very simple text prompt, "A music made by []", to generate different sounds. The video, which features music made with different instruments and even a mosquito, quickly gained traction on Twitter, with more than 35.4K views and over 130 retweets.

The authors then released the paper and a new video. In this video, they demonstrate most of the model's capabilities, as well as the results of combining it with ChatGPT to generate sounds. AudioLDM can even generate sounds from outer space.

The authors then released the pre-trained model and an interactive demo alongside the paper, which sparked the enthusiasm of Twitter users. The next day, the demo reached first place on the Hugging Face trending list:

The work received widespread attention on Twitter, where researchers in the field shared and commented on it:

Netizens used AudioLDM to generate a variety of sounds.

For example, the sound of an anime cat girl snoring:

And the voice of a ghost:

Some netizens synthesized: "The sound of a mummy, low frequency, with some painful moans."

Some netizens even synthesized a "melodic fart sound".

One has to marvel at the rich imagination of netizens.

Some netizens directly used AudioLDM to generate a series of music albums in various styles, including jazz, funk, electronic and classical. Some of the music is quite inventive.

For example, "Create an ambient music with the theme of the universe and the moon":

And "Create a music using the sounds of the future":

Interested readers can visit the music album website: https://www.latent.store/albums

Some netizens also got creative and combined an image-to-text (captioning) model with AudioLDM to build an application in which a picture guides sound effect generation.

For example, given the text "A dog running in the water with a frisbee", AudioLDM can generate the following sound of a dog splashing through the water.

You can even restore the sounds in old photos, such as the picture below:

Some netizens used AudioLDM to generate the sound of a flaming dog, which is very interesting.

The authors also produced a video demonstrating the model's ability to generate sound effects, showing that samples generated by AudioLDM come close to the quality of a sound effects library.

In fact, text-to-audio generation is only part of what AudioLDM can do. It can also perform timbre conversion (style transfer), audio inpainting, and super-resolution.

The two pictures below show timbre conversion from (1) percussion to ambient music and (2) trumpet to children's singing.

The sound of the trumpet is transformed into the sound of a child singing (with gradually increasing conversion strength).

Below we show the model's results on audio super-resolution, audio inpainting, and control over sound materials. Because of the limited length of this article, audio is shown mainly as spectrograms. Interested readers can visit the AudioLDM project homepage: https://audioldm.github.io/

AudioLDM also performs very well on audio super-resolution. Unlike previous super-resolution models, AudioLDM is a general-purpose super-resolution model and is not limited to music and speech.

For audio inpainting, AudioLDM can fill in different audio content according to the given text, and the transitions at the boundaries are relatively natural.

In addition, AudioLDM shows strong control capabilities, for example over the acoustic environment, the mood and tempo of music, object materials, and pitch and event order. Readers interested in these control capabilities can consult the AudioLDM paper or project homepage.

In the paper, the authors evaluated AudioLDM with both subjective ratings and objective metrics. The results show that it significantly outperforms the previous best models on both:

AudioGen is a model proposed by Facebook in October 2022 that uses ten datasets, 64 GPUs, and 285M parameters. In comparison, AudioLDM-S achieves better results with a single dataset, one GPU, and 181M parameters.

The subjective ratings also show that AudioLDM is significantly better than the previous approach, DiffSound. So what improvements allow AudioLDM to perform so well?

First, to address the scarcity of paired text-audio data, the authors propose a self-supervised method for training AudioLDM.

Specifically, when training the core module, the latent diffusion model (LDM), the authors use the embedding of the audio itself as the LDM's conditioning signal, so the entire training process involves no text (as shown in the image above). This scheme relies on a pair of pre-trained contrastive audio-text encoders (CLAP), which demonstrated good generalization in the original CLAP paper. AudioLDM exploits this generalization to train on large-scale audio data without the need for text labels.
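The idea of conditioning on the audio's own embedding during training, and swapping in a text embedding at generation time, can be sketched as follows. This is a toy illustration only, not the authors' implementation: the helper `make_training_example`, the embedding size, and the random vectors standing in for CLAP audio and text embeddings are all assumptions made for the sketch.

```python
import numpy as np

def l2_normalize(v):
    """Scale a vector to unit length, as is usual for contrastive embeddings."""
    return v / np.linalg.norm(v)

def make_training_example(latent, audio_emb, alpha_bar, rng):
    """Build one denoising training example (hypothetical helper).

    The condition is the embedding of the audio itself; no caption is used.
    """
    noise = rng.standard_normal(latent.shape)
    noisy_latent = np.sqrt(alpha_bar) * latent + np.sqrt(1.0 - alpha_bar) * noise
    # (model input, conditioning signal, regression target)
    return noisy_latent, audio_emb, noise

rng = np.random.default_rng(0)

# Toy stand-ins: a latent for one audio clip and its audio embedding.
latent = rng.standard_normal((4, 16))
audio_emb = l2_normalize(rng.standard_normal(32))

# Assumption of the sketch: a matching caption's text embedding lies close to
# the audio embedding, which is what contrastive pre-training aims for.
text_emb = l2_normalize(audio_emb + 0.1 * l2_normalize(rng.standard_normal(32)))

# Training: condition on the audio embedding.
noisy_latent, train_cond, target = make_training_example(
    latent, audio_emb, alpha_bar=0.5, rng=rng
)

# Generation: condition on the text embedding instead.
sample_cond = text_emb

cosine = float(train_cond @ sample_cond)
print(f"cosine(training condition, sampling condition) = {cosine:.3f}")
```

Because both conditions live in the same embedding space, a model trained only on audio embeddings can plausibly be driven by text embeddings later; how well this works in practice depends on the quality of the contrastive encoders.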

In fact, the authors found that training with audio alone is even better than using audio-text data pairs:

The authors identify two reasons: (1) a text annotation can hardly capture all the information in the audio, such as the acoustic environment and frequency distribution, so the text embedding does not represent the audio well; (2) the quality of the text itself is imperfect. For example, given an annotation such as "Boats: Battleships-5.25 conveyor space", even a human would struggle to imagine the specific sound, which causes problems in model training. In contrast, using the audio itself as the LDM's condition ensures a strong correlation between the target audio and the condition, leading to better generation results.

In addition, the latent diffusion approach adopted by the authors lets the diffusion model operate in a smaller space, which greatly reduces the model's computational requirements.
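A rough size comparison shows why working in a compressed latent space is cheaper. The numbers below (a 10.24 s clip at 16 kHz, a 1024 x 64 mel spectrogram, 8 latent channels, and compression factors of 4, 8, and 16) are illustrative assumptions for this sketch and should not be read as the paper's exact configuration; `latent_shape` is a hypothetical helper.

```python
from math import prod

def latent_shape(frames, mel_bins, compression, channels):
    """Shape of a latent that shrinks time and frequency by `compression`."""
    return (channels, frames // compression, mel_bins // compression)

# Illustrative assumptions, not values confirmed by the article.
WAVEFORM_SAMPLES = 163_840   # 10.24 s of audio at 16 kHz
FRAMES, MEL_BINS = 1024, 64  # mel-spectrogram size for the same clip
CHANNELS = 8                 # latent channels

mel_size = FRAMES * MEL_BINS
print(f"waveform: {WAVEFORM_SAMPLES} values, mel spectrogram: {mel_size} values")

for compression in (4, 8, 16):
    shape = latent_shape(FRAMES, MEL_BINS, compression, CHANNELS)
    size = prod(shape)
    print(
        f"compression {compression:>2}: latent {shape} = {size} values "
        f"({WAVEFORM_SAMPLES / size:.0f}x fewer than the waveform)"
    )
```

Every denoising step runs on the latent rather than on the raw signal, so the saving applies to each of the many sampling steps.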

Many detailed explorations in model training and structure also help AudioLDM achieve excellent performance.

The authors also drew a simple diagram to introduce the two main downstream tasks:

The authors also ran detailed experiments on different model architectures, model sizes, numbers of DDIM sampling steps, and classifier-free guidance scales.
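For readers unfamiliar with the last two knobs, here is a minimal sketch of what they control, assuming the standard formulations of classifier-free guidance and uniform DDIM step subsampling. The function names `guided_noise` and `subsampled_timesteps` are hypothetical and not taken from the AudioLDM code base.

```python
import numpy as np

def guided_noise(eps_cond, eps_uncond, guidance_scale):
    """Classifier-free guidance: move the unconditional noise prediction
    toward (and, for scales above 1, beyond) the conditional one."""
    return eps_uncond + guidance_scale * (eps_cond - eps_uncond)

def subsampled_timesteps(train_steps, sample_steps):
    """Evenly spaced subset of the training timesteps, from noisy to clean.

    Assumes train_steps is divisible by sample_steps to keep the sketch simple.
    """
    if train_steps % sample_steps != 0:
        raise ValueError("train_steps must be divisible by sample_steps")
    stride = train_steps // sample_steps
    return list(range(0, train_steps, stride))[::-1]

# Toy noise predictions from a hypothetical denoiser.
eps_cond = np.array([1.0, 2.0])    # prediction given the text condition
eps_uncond = np.array([0.0, 1.0])  # prediction with the condition dropped

for scale in (0.0, 1.0, 2.5):
    print(scale, guided_noise(eps_cond, eps_uncond, scale))

timesteps = subsampled_timesteps(train_steps=1000, sample_steps=200)
print(len(timesteps), timesteps[:3], timesteps[-1])
```

A scale of 0 ignores the text, a scale of 1 uses the conditional prediction as is, and larger scales follow the text more strongly, usually at some cost in diversity. Fewer sampling steps make generation faster but can lower quality.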

Along with the model, the authors also released the code base of their evaluation system for generative models, in order to unify how the academic community evaluates such tasks in the future and make comparisons between papers easier:

The authors' team said it would limit the use of the model, especially commercial use, to ensure that it is used only for academic exchange, and would use an appropriate license and watermarking to prevent ethical problems.

The paper has two co-first authors: Liu Haohe (University of Surrey, UK) and Chen Zehua (Imperial College London, UK).

Liu Haohe is currently a PhD student at the University of Surrey, UK, supervised by Professor Mark D. Plumbley. His open-source projects have received thousands of stars on GitHub. He has published more than 20 papers at major academic conferences and has finished in the top three in several international machine listening competitions. In industry, he has collaborated extensively with Microsoft, ByteDance, the British Broadcasting Corporation, and others. Personal homepage: https://www.surrey.ac.uk/people/haohe-liu

Chen Zehua is a doctoral student at Imperial College London, supervised by Professor Danilo Mandic. He has interned at the Microsoft Speech Synthesis Research Group and the JD Artificial Intelligence Laboratory. His research interests include generative models, speech synthesis, and bioelectrical signal generation.

The above is the detailed content of Open source model, single card training, take you to understand the popular text-guided audio generation technology AudioLDM. For more information, please follow other related articles on the PHP Chinese website!

What is SQL DESCRIBE? - Analytics VidhyaApr 22, 2025 am 10:04 AM

What is SQL DESCRIBE? - Analytics VidhyaApr 22, 2025 am 10:04 AMSQL's DESCRIBE (or DESC) Command: Your Database Table Detective Understanding relational database table structures is crucial. SQL's DESCRIBE command acts as your database detective, providing detailed insights into table composition. At a Glance:

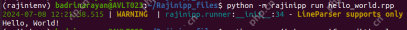

Rajini : Programming Language Inspired by Rajinikanth - Analytics VidhyaApr 22, 2025 am 09:58 AM

Rajini : Programming Language Inspired by Rajinikanth - Analytics VidhyaApr 22, 2025 am 09:58 AMRajini , a whimsical programming language born from the iconic dialogues of Rajinikanth, blends coding with pop culture. This playful esoteric language, created by Aadhithya Sankar, isn't meant for serious software development, but offers a unique

Top 40 DBMS Interview Questions and Answers (2025)Apr 22, 2025 am 09:56 AM

Top 40 DBMS Interview Questions and Answers (2025)Apr 22, 2025 am 09:56 AMThis article provides a comprehensive guide to database management system (DBMS) interview questions, designed to prepare candidates for various DBMS-related roles. It covers fundamental concepts such as DBMS and RDBMS architectures, normalization t

Excel TRANSPOSE FunctionApr 22, 2025 am 09:52 AM

Excel TRANSPOSE FunctionApr 22, 2025 am 09:52 AMPowerful tools in Excel data analysis and processing: Detailed explanation of TRANSPOSE function Excel remains a powerful tool in the field of data analysis and processing. Among its many features, the TRANSPOSE function stands out for its ability to reorganize data quickly and efficiently. This feature is especially useful for data scientists and AI professionals who often need to reconstruct data to suit specific analytics needs. In this article, we will explore the TRANSPOSE function of Excel in depth, exploring its uses, usage and its practical application in data science and artificial intelligence. Learn more: Microsoft Excel Data Analytics Table of contents In Excel

How to Install Power BI DesktopApr 22, 2025 am 09:49 AM

How to Install Power BI DesktopApr 22, 2025 am 09:49 AMGet Started with Microsoft Power BI Desktop: A Comprehensive Guide Microsoft Power BI is a powerful, free business analytics tool enabling data visualization and seamless insight sharing. Whether you're a data scientist, analyst, or business user, P

Graph RAG: Enhancing RAG with Graph Structures - Analytics VidhyaApr 22, 2025 am 09:48 AM

Graph RAG: Enhancing RAG with Graph Structures - Analytics VidhyaApr 22, 2025 am 09:48 AMIntroduction Ever wondered how some AI systems seem to effortlessly access and integrate relevant information into their responses, mimicking a conversation with an expert? This is the power of Retrieval-Augmented Generation (RAG). RAG significantly

SQL GRANT CommandApr 22, 2025 am 09:45 AM

SQL GRANT CommandApr 22, 2025 am 09:45 AMIntroduction Database security hinges on managing user permissions. SQL's GRANT command is crucial for this, enabling administrators to assign specific access rights to different users or roles. This article explains the GRANT command, its syntax, c

What is Python IDLE?Apr 22, 2025 am 09:43 AM

What is Python IDLE?Apr 22, 2025 am 09:43 AMIntroduction Python IDLE is a powerful tool that can easily develop, debug and run Python code. Its interactive shell, syntax highlighting, autocomplete and integrated debugger make it ideal for programmers of all levels of experience. This article will outline its functions, settings, and practical applications. Overview Learn about Python IDLE and its development benefits. Browse and use the main components of the IDLE interface. Write, save, and run Python scripts in IDLE. Use syntax highlighting, autocomplete and intelligent indentation. Use the IDLE integrated debugger to effectively debug Python code. Table of contents

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft

SublimeText3 Mac version

God-level code editing software (SublimeText3)

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

Dreamweaver Mac version

Visual web development tools