Demis Hassabis: AI is more powerful than we imagined

- WBOY

- 2023-04-12 18:43:09

Recently, Demis Hassabis, co-founder and CEO of DeepMind, was a guest on Lex Fridman's podcast and shared many interesting views.

At the beginning of the interview, Hassabis stated bluntly that the Turing test is outdated. It is a benchmark proposed decades ago, and it rests on human actions and reactions. That makes it prone to "farces" like the one some time ago, when a Google engineer claimed an AI system was conscious: people talk to a language model and project their own perceptions onto their judgment of the model, which is not objective.

Since its founding in 2010, DeepMind has surprised the world again and again, from the game-playing program AlphaGo to the protein structure prediction model AlphaFold. Its breakthroughs in deep learning and reinforcement learning have cracked major scientific problems that puzzled researchers for many years, and the thinking and motivation of the team behind them are fascinating.

In the interview, Hassabis also raised an interesting point: AI may go beyond the limits of human intelligence. Humans may be accustomed to a three-dimensional world plus time, but AI might come to understand the world in twelve dimensions and become more than a mere tool, because human understanding of the world still has many shortcomings.

The following is an interview with Demis Hassabis:

1. From games to AI

Lex Fridman: When did you start to like programming?

Demis Hassabis: I started playing chess when I was about 4, and when I was 8 I bought my first computer, a ZX Spectrum, with my winnings from a chess tournament. Later I bought a book about programming, and I fell in love with computers when I started using them to make games. I thought they were magical, an extension of your mind: you could set them a task, and when you woke up the next day it had been solved.

Of course, all machines do this to some extent, augmenting our natural abilities, such as cars that allow us to move faster than we can run. But artificial intelligence is the ultimate expression of all learning that machines can do, so my thoughts naturally extended to artificial intelligence as well.

Lex Fridman: When did you fall in love with artificial intelligence? When did you begin to understand that a computer can do more than run programs and calculations while you sleep, and can take on tasks far more complex than arithmetic?

Demis Hassabis: It can be roughly divided into several stages.

I was captain of junior chess teams, and at around 10 or 11 I planned to become a professional chess player; that was my first dream. At 13 I reached master level and was the second-highest-rated player in the world for my age, behind Judit Polgár. While trying to improve at chess, I started thinking about the thinking process itself. How does the brain come up with these ideas? Why does it make mistakes? How can this thought process be improved?

In the early to mid-1980s there were dedicated chess computers. I had a Kasparov-branded one; it was nowhere near as strong as today's programs, but you could practice against it and improve. At the time I thought it was amazing that someone had programmed this board to play chess. I bought a copy of "The Chess Computer Handbook" by David Levy, published in 1984. It was a fascinating book that showed me exactly how chess programs are made.

Caption: Kasparov, former Soviet and Russian professional chess player, chess grandmaster

I wrote my first artificial intelligence program on my Amiga: a program to play Othello (also known as Reversi), which is a somewhat simpler game than chess. But I used all the principles of chess programming in it, such as alpha-beta search.
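For readers unfamiliar with alpha-beta search, here is a minimal sketch of the idea. It is not Hassabis's Othello program: the game tree uses a hypothetical nested-list representation (a number is a leaf score from the first player's point of view, a list holds the positions reachable in one move), whereas a real Othello engine would generate moves and evaluate boards instead.

```python
import math
from typing import Union

# Hypothetical toy representation: a leaf score, or a list of child subtrees.
Tree = Union[float, list]


def alphabeta(node: Tree, alpha: float = -math.inf, beta: float = math.inf,
              maximizing: bool = True) -> float:
    """Minimax value of `node`, skipping branches that cannot change the result."""
    if not isinstance(node, list):
        return node  # leaf: static evaluation from the first player's view
    if maximizing:
        value = -math.inf
        for child in node:
            value = max(value, alphabeta(child, alpha, beta, False))
            alpha = max(alpha, value)
            if alpha >= beta:
                break  # cutoff: the minimizing player will never allow this line
        return value
    value = math.inf
    for child in node:
        value = min(value, alphabeta(child, alpha, beta, True))
        beta = min(beta, value)
        if alpha >= beta:
            break  # cutoff: the maximizing player already has something better
    return value


print(alphabeta([[3, 5], [6, 9], [1, 2]]))
```

On the tree `[[3, 5], [6, 9], [1, 2]]` the function returns 6 and never examines the final leaf 2: once the third branch yields 1, the opponent can hold the first player to at most 1 there, which is worse than the 6 already secured, so the rest of that branch is skipped.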

The second stage was the game "Theme Park", which I co-designed when I was about 16 or 17. It had AI simulation at its core. By today's standards the AI was very simple, but the game reacted to how you played, which is why it is called a sandbox game.

Lex Fridman: Can you tell us about some key moments in your connection with AI? What does it take to create an AI system for a game?

Demis Hassabis: I trained my mind through games as a child, and later went through a phase of designing games and writing AI for them. Every game I wrote in the 1990s had AI as a core component. I did this in the games industry because at the time I thought games were at the forefront of technology. People like John Carmack, with games like Quake, were pushing technology forward through games, and we are still reaping the benefits: GPUs, for example, were invented for computer graphics but later turned out to be crucial for AI. So at the time I felt games were where cutting-edge AI was happening.

Early on, I worked on a game called "Black & White", which is probably the most impressive application of reinforcement learning in a computer game. You train a creature in the game, and it learns from the way you treat it. If you treat it badly, it becomes cruel and mistreats the villagers of the small tribe you manage. If you treat it well, it becomes kind.

Lex Fridman: The game's mapping of good and evil made me realize that you can determine the outcome through the choices you make. Games can bring this kind of philosophical meaning.

Demis Hassabis: I think games are a unique medium: as a player you don't just passively consume entertainment, you actively participate as an agent. That's why I think games can, in some ways, have more depth than other media such as films and books.

We've thought deeply about AI from the beginning, using games as a proving ground for developing and testing AI algorithms. That is why DeepMind initially used many games as its main test platform: games are efficient to run, and they come with clear metrics that make it easy to see how an AI system is improving, how its thinking is developing, and whether it is making incremental progress.

Lex Fridman: People once assumed we couldn't build a machine that beats humans at chess, and later many thought Go was unbreakable because of its combinatorial complexity. But in the end AI researchers built such machines, and humans realized we are not as smart as we thought.

Demis Hassabis: It has been an interesting intellectual journey. Seeing it from two perspectives, as an AI creator and as a game player, makes it feel even more magical, and a bit bittersweet at the same time.

Kasparov calls chess the "fruit fly" (Drosophila) of intelligence. I quite like that description, because chess has been closely tied to AI from the start. I think every AI pioneer, including Turing and Shannon, tried writing a chess program. Shannon wrote the first paper on programming a computer to play chess in 1949. Turing also wrote a famous chess program, but computers were too slow to run it, so he executed it by hand with pencil and paper and played against friends.

Deep Blue was a huge moment, combining all the things I love: chess, computers and artificial intelligence. In 1997 it defeated Garry Kasparov. Afterwards I was more impressed by Kasparov's mind than by Deep Blue, because Kasparov could not only play chess at the level of a computer but could also do everything else a human can do: ride a bicycle, speak several languages, take part in politics, and more.

Deep Blue had its moment of glory in chess, but it essentially distilled a chess grandmaster's knowledge into a program that couldn't do anything else. I felt something about intelligence was missing from that system, and that's why we tried to do AlphaGo.

Lex Fridman: Let's talk briefly about the human side of chess. From a game design perspective, what makes chess compelling as a game? Is there a creative tension between the bishop and the knight? What makes a game compelling enough to last for centuries?

Demis Hassabis: I've thought about this too. Many excellent chess players don't necessarily think about it from a game designer's perspective.

Why is chess so compelling? I think a key reason is the dynamics of different positions: you can tell whether a position is closed or open. Think about how differently the bishop and the knight move; chess has evolved to balance the two so that each is worth roughly 3 points.

Lex Fridman: So you think the dynamic is always there and the remaining rules are trying to stabilize the game.

Demis Hassabis: Maybe it's a bit of a chicken-and-egg situation, but the two strike a beautiful balance. The bishop and the knight have different powers, but across the whole universe of positions their values are roughly equal. Humans have balanced them over the past few hundred years, and I think that gives the game its creative tension.

Lex Fridman: Do you think AI systems could design games that captivate humans?

Demis Hassabis: That's an interesting question. If creativity means coming up with something original that is useful for a purpose, then the lowest level of creativity is interpolation, and basic AI systems already have it. Show a system millions of pictures of cats and ask it for an average cat: that is interpolation.

The next level is extrapolation, as with AlphaGo. After playing millions of games against itself, AlphaGo came up with striking new ideas, such as the famous move 37, a strategy humans had never considered even though we have played Go for thousands of years.

There is a level above that: can you think outside the box and truly innovate? Could you invent chess, rather than come up with a great move? Could an AI invent chess, Go, or something comparable?

I think AI will be able to do it one day, but the question now is how to specify such a task to a program. We can't yet give high-level, abstract goals to AI systems, and they still lack a real understanding of high-level or abstract concepts. For now they can combine and compose things, and they can interpolate and extrapolate, but none of that is true invention.

Lex Fridman: Coming up with rule sets and optimizing them, developing complex goals around those rule sets, is something we can't do right now. But is it possible to take a specific set of rules and run it and see how long it takes the AI system to learn from scratch?

Demis Hassabis: I've actually thought about this; it would be amazing for game designers. A system that played your game tens of millions of times might be able to balance the rules automatically overnight, adjusting units or rules through equations or parameters to make the game more balanced. It's a bit like giving it a base set of rules and exploring around it with Monte Carlo search or something similar. That would be a super powerful tool.

Today, balancing a game typically takes thousands of hours of playtesting by hundreds of testers. The balancing Blizzard has done for games like StarCraft is astounding, and it took testers years of work. So it's conceivable that once these systems become efficient enough, you could do it overnight.
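The overnight-balancing idea can be illustrated with a toy sketch: simulate many matches (Monte Carlo playouts), measure a win rate, and nudge one parameter until the matchup is even. Everything here is hypothetical: the two units, their hit points and damage ranges, the `duel`, `win_rate` and `balance` helpers, and the use of plain bisection on a single parameter. It is not how Blizzard or DeepMind actually balance games.

```python
import random


def duel(b_damage: float, seed: int) -> float:
    """One simulated fight between hypothetical unit A (fixed stats) and unit B
    (tunable damage). Returns 1.0 if B wins, 0.0 if A wins, 0.5 for a draw."""
    rng = random.Random(seed)  # per-duel seed: the same dice rolls for every b_damage
    hp_a, hp_b = 100.0, 80.0
    while hp_a > 0 and hp_b > 0:
        hp_b -= rng.uniform(8.0, 12.0)            # A strikes B
        hp_a -= b_damage * rng.uniform(0.8, 1.2)  # B strikes A in the same round
    if hp_a <= 0 and hp_b <= 0:
        return 0.5  # both units fall in the same round
    return 1.0 if hp_b > 0 else 0.0


def win_rate(b_damage: float, n_duels: int = 1000) -> float:
    """Monte Carlo estimate of unit B's win rate over n_duels playouts."""
    return sum(duel(b_damage, seed) for seed in range(n_duels)) / n_duels


def balance(lo: float = 1.0, hi: float = 30.0, target: float = 0.5,
            iters: int = 20) -> float:
    """Bisection on B's damage until its win rate crosses `target`.
    Assumes win_rate(lo) < target <= win_rate(hi)."""
    for _ in range(iters):
        mid = (lo + hi) / 2
        if win_rate(mid) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


if __name__ == "__main__":
    dmg = balance()
    print(f"balanced damage for unit B: {dmg:.2f} (win rate {win_rate(dmg):.2f})")
```

Reusing the same seed for each duel (common random numbers) makes the estimated win rate a non-decreasing function of `b_damage`, which is what lets simple bisection work. A real game has many interacting parameters and would need a proper search or optimization method instead.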

Lex Fridman: Do you think we are living in a simulation?

Demis Hassabis: In a sense, yes. Nick Bostrom famously proposed the simulation hypothesis, but I don't really believe that version of it: that we are in some kind of computer game, or that our descendants are somehow re-creating Earth in the 21st century.

I think the best way to understand physics and the universe is from a computational perspective, as a universe of information. Information is the most fundamental unit of reality, more so than matter or energy. A physicist would say E=mc², that matter and energy are the basis of the universe, but I think information is probably the most fundamental way to describe the universe, and matter and energy can themselves be specified as information. So in that sense we could be in some kind of simulation. But I don't subscribe to the idea that billions of simulations are running out there.

Lex Fridman: Given your understanding of the universal Turing machine and of computers, do you think there is anything in the universe beyond the capabilities of a computer? Do you disagree with Roger Penrose?

Demis Hassabis: Roger Penrose is very famous and has taken part in many excellent debates. I've read his classic book "The Emperor's New Mind", in which he argues that explaining consciousness in the brain requires something more, something quantum. In my own work I've thought a lot about what we are effectively doing, which is pushing Turing machines, or classical computing, to their limits. What are the limits of classical computing? I also studied neuroscience, which is why I chose that direction for my PhD: to see whether, from a neuroscience or biological perspective, there is anything quantum going on in the brain.

So far, most neuroscientists and biologists would say there is no evidence of quantum systems or effects in the brain; most of what happens can be explained with classical theory and our knowledge of biology. At the same time, we keep discovering how much Turing machines, including AI systems, can do, especially over the past decade. I wouldn't bet against how far universal Turing machines and the classical computing paradigm can go; perhaps everything that happens in the brain can be emulated on a machine without anything metaphysical or quantum.

2. AI for science

Lex Fridman: Before we get to AlphaFold: do you think the human mind really arises from this mush of biological, neural computation, rather than from something beyond it?

Demis Hassabis: In my opinion, the greatest miracle in the universe is the few pounds of mush inside our skulls: the brain, the most complex object known in the universe. I think it's an amazingly efficient machine, and that's one of the reasons I've always wanted to build AI. By building AI agents and comparing them with the human mind, we may shed light on questions people have wondered about throughout history: what makes the mind unique, and its deepest secrets, such as consciousness, dreaming, creativity and emotion.

We now have many tools for this: neuroscience tools such as fMRI machines and neural recordings, and the computing power to build intelligent systems. It's amazing what the human mind can do. The fact that humans built things like computers and think about and study these problems is a testament to the human mind, and it helps us understand both the universe and the mind more clearly. You could even say that we may be the mechanism by which the universe tries to understand itself and its own beauty.

Looking at it from another angle, the basic building blocks of biology can also help us understand the human mind and body. It's amazing to simulate and build models starting from those building blocks: you can build larger and more complex systems, perhaps eventually the whole of human biology.

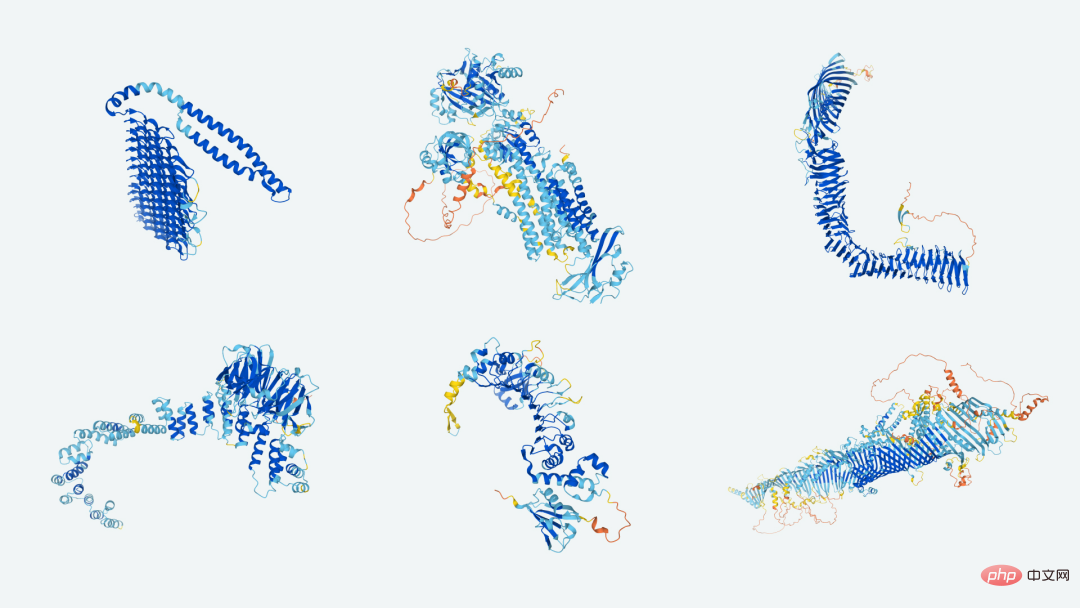

Another problem long considered almost impossible to solve was protein folding. AlphaFold solved it, which is one of the biggest breakthroughs in the history of structural biology. Proteins are essential to all life; every function in the body depends on them.

Proteins are specified by their genetic sequence, which is translated into an amino acid sequence; the amino acids can be thought of as their basic building blocks. In the body and in nature, a protein folds into a three-dimensional structure, and that structure determines its function. So if you are interested in drugs or diseases and want to use a compound to block a protein's action, you first need to know the three-dimensional structure of the binding site on the protein's surface.

Note: In July 2021, DeepMind publicly released AlphaFold's predictions for the first time through a database built in cooperation with the European Molecular Biology Laboratory (EMBL). The initial database covered 98% of all human proteins.

Lex Fridman: The essence of the protein folding problem is this: can you take the one-dimensional string of letters that makes up an amino acid sequence and computationally predict its three-dimensional structure, quickly? This has been a grand challenge in biology for more than 50 years. Nobel laureate Christian Anfinsen first conjectured, in his 1972 Nobel lecture, that it should be possible to get from the amino acid sequence to the three-dimensional structure.

Demis Hassabis: That conjecture by Christian Anfinsen launched 50 years of work in computational biology, a field that had been stuck without making much progress on the problem.

Before AlphaFold, this was all done experimentally, and it was very difficult. Proteins have to be crystallized, and some, such as membrane proteins, are extremely hard to crystallize; expensive electron microscopes or X-ray crystallography instruments are needed to obtain and visualize the three-dimensional structure. With AlphaFold, a three-dimensional structure can be predicted in seconds.

Lex Fridman: So there is a data set, AlphaFold is trained on it, and it learns how to map amino acid sequences to structures. What's incredible is that the protein itself, this little chemical computer, does that computation in a distributed way, and very fast.

Demis Hassabis: Maybe we should talk about the origin of life. A protein is itself an amazing little biological nanomachine. Cyrus Levinthal, the scientist behind Levinthal's paradox, roughly estimated that an average protein, perhaps 2,000 amino acids long, could fold in something like 10^300 different ways. Yet in nature, physics somehow solves this problem: proteins fold in your body within milliseconds, or at most a second or so.
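A little arithmetic shows why Levinthal's estimate is a paradox. The sketch uses a toy counting model that is an assumption, not Levinthal's exact calculation: each residue independently adopts one of k states, so a chain of n residues has k^n conformations, and log10(k^n) = n·log10(k). The sampling rate of 10^13 conformations per second is likewise an illustrative assumption. Working in log10 keeps the numbers manageable.

```python
import math

AGE_OF_UNIVERSE_S = 4.35e17  # about 13.8 billion years, in seconds


def log10_conformations(n_residues: int, states_per_residue: float) -> float:
    """log10 of k**n: conformations of an n-residue chain with k states per
    residue (toy Levinthal-style counting model)."""
    return n_residues * math.log10(states_per_residue)


def log10_search_seconds(log10_count: float, log10_rate: float = 13.0) -> float:
    """log10 of the time, in seconds, to visit every conformation one by one
    at 10**log10_rate conformations per second (assumed rate)."""
    return log10_count - log10_rate


t = log10_search_seconds(300.0)  # the ~10^300 figure quoted in the interview
print(f"exhaustive search: ~10^{t:.0f} s")
print(f"that is ~10^{t - math.log10(AGE_OF_UNIVERSE_S):.0f} times the age of the universe")
print(f"3 states per residue reach 10^300 at n = {300 / math.log10(3):.0f} residues")
```

With 10^300 conformations, exhaustive search would take about 10^287 seconds, roughly 10^269 times the age of the universe, yet real proteins fold in milliseconds to seconds. Under the three-states-per-residue assumption, a chain of only about 629 residues already reaches 10^300.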

Lex Fridman: A sequence has a unique way of folding itself; it finds a stable state amid vast possibilities. In some cases something may go wrong, but most of the time it's a unique mapping, and that's far from obvious.

Demis Hassabis: If in health there is usually a unique mapping, what exactly goes wrong in disease? For example, one hypothesis for Alzheimer's disease is that beta-amyloid misfolds and then becomes tangled up in neurons.

So to understand health, function and disease, you need to understand how proteins are structured; knowing what they are doing is super important. The next step is that proteins change shape when they interact with something, so in biology they are not necessarily static.

Lex Fridman: Maybe you can walk us through the solution behind AlphaFold, which, unlike a game, deals with a real physical system. What was very difficult to solve, and which techniques turned out to matter?

Demis Hassabis: AlphaFold is the most complex and probably the most meaningful system we have built to date.

We originally built AlphaGo and AlphaZero around games, but the ultimate goal was never just to crack games; it was to use them to bootstrap general learning systems that can solve real-world challenges. We wanted to work more on scientific challenges like protein folding, and AlphaFold is our first important proof point.

Cracking protein folding took around 30 different component algorithms and innovations put together. Some of the big innovations drew on physics and evolutionary biology: building in hard-coded constraints on things like bond angles in proteins, without interfering with the learning system, so that it could still learn the physics from examples.

Data was scarce: there are only about 150,000 protein structures, and even after 40 years of experiments only about 50,000 protein structures have been determined. That training set is much smaller than what is typically used, so we relied on various techniques such as self-distillation. When AlphaFold made very confident predictions, we put them back into the training set to make it larger, and that was crucial to getting AlphaFold to work.
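The self-distillation loop described above can be sketched in a few lines. This is a toy self-training loop under loudly stated assumptions, not AlphaFold's pipeline: the "model" is a hypothetical one-dimensional nearest-neighbour regressor, its confidence score is a made-up function of distance standing in for a real model's confidence estimate, and the 0.8 threshold is arbitrary.

```python
import math
from dataclasses import dataclass


@dataclass
class Example:
    x: float
    y: float  # a true label or a pseudo-label produced by the model


def predict(train, x):
    """Hypothetical toy model: 1-nearest-neighbour regression whose confidence
    decays with the distance to the nearest training example."""
    nearest = min(train, key=lambda e: abs(e.x - x))
    confidence = math.exp(-abs(nearest.x - x))
    return nearest.y, confidence


def self_distill(labelled, unlabelled, threshold=0.8, rounds=3):
    """Each round, predict on the unlabelled pool with the current training set,
    keep only confident predictions as pseudo-labels, and add them to the
    training set so the next round can build on them."""
    train = list(labelled)
    pool = list(unlabelled)
    for _ in range(rounds):
        confident, rest = [], []
        for x in pool:
            y, conf = predict(train, x)
            (confident if conf >= threshold else rest).append(Example(x, y))
        if not confident:
            break  # nothing trustworthy left to add
        train.extend(confident)  # "retraining" a 1-NN model = enlarging its data
        pool = [e.x for e in rest]
    return train


result = self_distill([Example(0.0, 1.0)], [0.2, 0.4, 0.6, 3.0])
print(sorted(e.x for e in result))
```

Starting from one labelled point at 0.0 and unlabelled points at 0.2, 0.4, 0.6 and 3.0, each round adds the next nearby point, so confident pseudo-labels spread outward step by step while the distant 3.0 is never trusted. The same dynamic is the technique's main risk: a confidently wrong prediction would also be recycled, which is why the confidence threshold matters.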

A lot of innovation was needed to solve this problem. The first version of AlphaFold produced a histogram (a "distogram"), a matrix of predicted pairwise distances between all the amino acids in the protein, and a separate optimization process then had to turn it into a three-dimensional structure. To make AlphaFold truly end-to-end, the next version went directly from the amino acid sequence to the three-dimensional structure, skipping the intermediate step.
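The intermediate step described here, turning a matrix of pairwise distances into 3D coordinates with a separate optimization, can be illustrated with plain gradient descent. This is a simplified sketch, not AlphaFold's code: it assumes exact target distances and minimizes a simple "stress" objective (squared error between current and target distances), whereas, as we understand it, the first AlphaFold optimized a richer potential derived from its predicted distance distributions. The function names and the helix-shaped test chain are hypothetical.

```python
import math
import random


def stress(coords, target):
    """Sum over pairs of (current distance - target distance)^2."""
    n = len(coords)
    return sum(
        (math.dist(coords[i], coords[j]) - target[i][j]) ** 2
        for i in range(n)
        for j in range(i + 1, n)
    )


def fit_coordinates(target, steps=2000, lr=0.01, seed=0):
    """Gradient descent for 3D points whose pairwise distances match the
    n x n matrix `target`, starting from random coordinates."""
    rng = random.Random(seed)
    n = len(target)
    coords = [[rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(n)]
    for _ in range(steps):
        grad = [[0.0, 0.0, 0.0] for _ in range(n)]
        for i in range(n):
            for j in range(i + 1, n):
                diff = [a - b for a, b in zip(coords[i], coords[j])]
                d = math.sqrt(sum(c * c for c in diff)) or 1e-9  # avoid dividing by 0
                # d/dx_i of (d - t)^2 is 2 (d - t) (x_i - x_j) / d; x_j gets the negative
                scale = 2.0 * (d - target[i][j]) / d
                for k in range(3):
                    grad[i][k] += scale * diff[k]
                    grad[j][k] -= scale * diff[k]
        for i in range(n):
            for k in range(3):
                coords[i][k] -= lr * grad[i][k]
    return coords


# Example: recover a small helix-like chain from its distance matrix alone.
true_chain = [[math.cos(t), math.sin(t), 0.5 * t] for t in range(6)]
target = [[math.dist(a, b) for b in true_chain] for a in true_chain]
fitted = fit_coordinates(target)
print(f"stress before: {stress(fit_coordinates(target, steps=0), target):.3f}")
print(f"stress after:  {stress(fitted, target):.4f}")
```

Distances pin a structure down only up to rotation, translation and mirror reflection, so the result should be judged by its distances rather than its raw coordinates. The mirror ambiguity matters for proteins, whose handedness a pure distance objective cannot see; folding that step into one differentiable system is part of the appeal of the end-to-end approach.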

One lesson from machine learning is that the more end-to-end a system is, the better it tends to be, because the system is better at learning constraints than human designers are at specifying them. In this case, predicting the 3D structure directly works better than having an intermediate step that has to be carried forward by hand. The best approach is to let gradients and learning flow all the way through the system, from the desired final output back to the input.

Lex Fridman: AlphaFold may be an early step in a long journey in biology. Do you think the same approach can predict the structure and function of more complex biological systems, such as multi-protein interactions? And, taking that as a starting point, could it simulate larger and larger systems, eventually things like the human brain and body? Is that your long-term vision?

Demis Hassabis: Of course. Treating disease and understanding biology are at the top of my to-do list, and that's one of the reasons I personally pushed for AlphaFold. AlphaFold is just the beginning.

AlphaFold solves the huge problem of protein structure, but biology is dynamic. What we really need to study is protein-ligand binding, reactions with other molecules and the building of pathways, and ultimately a virtual cell, which is my dream. I've talked about this with many biologist friends, including Paul Nurse of the Crick Institute. For biology and disease research, a virtual cell would be incredible, because you could run many experiments on it and go into the lab only at the final stage to verify the results.

In drug discovery, it takes about 10 years to go from identifying a target to having a drug candidate. If most of that work could be done in a virtual cell, the time might be cut by an order of magnitude. Building a virtual cell requires understanding how the different parts of biology interact. Paul and I talk about this every few years, and after AlphaFold last year I told him that the time had finally come when we could do it. Paul was very excited, and we now have some collaborations with his lab. Building on AlphaFold, I believe we will see amazing progress in biology, and since AlphaFold was open-sourced, communities are already building on it.

I think that one day AI systems may be able to come up with something like general relativity, and not just by processing content on the Internet or in the existing literature. It will be fascinating to see what they can come up with. It's a bit like our earlier discussion of creativity: inventing Go, rather than just finding a good Go move. For an AI to earn something like a Nobel Prize, it would need to do the equivalent of inventing Go, rather than having the problem specified by a human scientist or creator.

Lex Fridman: A lot of people do think of science as standing on the shoulders of giants, and the question is how far are you really on the shoulders of giants? Maybe it's just absorbing a different type of past result and ultimately providing a breakthrough idea with a new perspective.

Demis Hassabis: This is a big mystery. I believe that over the past decade and the next few decades, many major breakthroughs will come at the intersections of different disciplines, where new connections will be found between seemingly unrelated fields. You could even argue that DeepMind itself sits at the intersection of neuroscience thinking and AI engineering thinking.

Lex Fridman: You have a paper, "Magnetic control of tokamak plasmas through deep reinforcement learning", in which you use deep reinforcement learning to tackle nuclear fusion by controlling high-temperature plasma. Can you explain why AI can finally help solve this?

Demis Hassabis: Over the past year or two our work here has been very interesting and fruitful. We have launched many of my dream projects, ones I have collected over the years across different scientific fields. If we can help push fusion forward, it could have a transformative impact, and the scientific challenge itself is fascinating.

Nuclear fusion currently faces many challenges, mainly in physics, materials science and engineering: how to build these large fusion reactors and how to contain the plasma.

We are working with the Swiss Plasma Center at EPFL (École Polytechnique Fédérale de Lausanne, one of the Swiss Federal Institutes of Technology), which has a test reactor they were willing to let us use. It's an amazing test reactor on which they try all kinds of pretty crazy experiments. When we enter a new field such as fusion, we ask: what are the bottlenecks? Thinking from first principles, what are the underlying problems holding fusion back?

In this case, plasma control was a perfect fit. The plasma is over 100 million degrees Celsius, hotter than the sun, and obviously no material can contain it. So very strong superconducting magnetic fields are needed, but the problem is that the plasma is quite unstable; it's a bit like holding a star inside a reactor. You have to predict in advance what the plasma will do and move the magnetic fields within milliseconds to control what it does next.

Framed as a reinforcement learning prediction problem, it seems perfect. There are controllers that can move the magnetic fields, but previously they were traditional controllers driven by hand-coded rules, which couldn't react to the plasma in the moment.

Lex Fridman: So AI could finally help solve nuclear fusion.

Demis Hassabis: Last year we published a paper in Nature on this problem: holding the plasma in specific shapes. It's almost like sculpting the plasma into different shapes, controlling it, and holding it there for a record amount of time. This had been an unsolved problem in fusion.

It's important to contain the plasma in a given structure and hold it there, and some shapes, such as the so-called droplets, are more conducive to energy production. We are talking to many fusion startups to see what the next problems are that could be solved in fusion.

Lex Fridman: Another fascinating paper is titled "Pushing the frontiers of density functionals by solving the fractional electron problem". Can you explain this work? Will AI be able to model and simulate arbitrary quantum mechanical systems in the future?

Demis Hassabis: People have tried to write approximations to the density functional, a description of the electron cloud, to see how two elements interact when put together. What we're trying to do is learn such a simulation: learn a functional that can describe more kinds of chemistry.

So far, we can run expensive, exact simulations only for very small and simple molecules; we haven't been able to simulate large materials. So the idea is to build an approximation of the functional, an equation describing what the electrons are doing, because all of materials science and material properties are governed by how electrons interact with one another.

Lex Fridman: So you summarize the simulation with a learned function that gets close to the actual simulation results. The difficulty lies in running complex simulations and then learning the mapping from initial conditions and simulation parameters to the outcome: what will that learned function look like?

Demis Hassabis: It's tricky, but the good news is that we've done it. We can run a large number of simulations, molecular dynamics simulations, on a computing cluster, and that generates a lot of data. In this case the data is generated, just as we used game simulators to generate data, because you can create as much as you want. If there are spare computers in the cloud, we can run these calculations.
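The "generate data with a simulator, then learn the mapping" loop from the last two answers can be shown in miniature. This toy is an assumption-laden stand-in, not DeepMind's density-functional work: the "expensive simulator" is a hypothetical drag-free projectile integrated step by step, and the "learned function" is a one-parameter least-squares fit of range ≈ c · speed², chosen because physics gives the answer (c = 1/g for a 45° launch), so the fit can be checked.

```python
import math
import random

G = 9.81  # gravitational acceleration, m/s^2


def simulate_range(speed: float, angle_deg: float = 45.0, dt: float = 1e-3) -> float:
    """Hypothetical 'expensive' simulator: step a drag-free projectile forward
    with semi-implicit Euler until it comes back down to the ground."""
    theta = math.radians(angle_deg)
    x, y = 0.0, 0.0
    vx, vy = speed * math.cos(theta), speed * math.sin(theta)
    while True:
        vy -= G * dt
        x += vx * dt
        y += vy * dt
        if y <= 0.0:
            return x


def fit_surrogate(samples):
    """Least-squares fit of the one-parameter model range ≈ c * speed**2.
    Minimizing sum (r - c v^2)^2 gives c = sum(r v^2) / sum(v^4)."""
    num = sum(r * v ** 2 for v, r in samples)
    den = sum(v ** 4 for v, _ in samples)
    return num / den


# Generate training data by running the simulator many times, then learn c.
rng = random.Random(0)
speeds = [rng.uniform(5.0, 20.0) for _ in range(20)]
samples = [(v, simulate_range(v)) for v in speeds]
c = fit_surrogate(samples)
print(f"learned c = {c:.4f} (drag-free theory: 1/g = {1 / G:.4f})")
print(f"surrogate range at 12 m/s: {c * 12 ** 2:.2f} m")
```

Once fitted, the surrogate answers in one multiplication what the simulator needs thousands of steps for. With more inputs (angle, drag, mass) the surrogate would need a richer model, such as a neural network, and that is where being able to generate as much simulated data as you want starts to matter.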

3. AI and humanity

Lex Fridman: How do you understand the origin of life?

Demis Hassabis: I think the ultimate use of AI is to accelerate science to the extreme. Think of a tree of knowledge, and imagine it represents all the knowledge there is to gain in the universe. So far we have barely scratched the surface, and AI will speed up the process of exploring as much of that tree as possible.

Lex Fridman: Intuition tells me that the human tree of knowledge is very small, given our cognitive limitations. Even with the tools, there are still many things we don’t understand. This may be why non-human systems can go further.

Demis Hassabis: Yes, very likely.

But there are actually different things here: what we understand today, what the human mind could ever understand, and the totality of what there is to understand. You can think of them as three concentric circles, or three ever-larger trees, or as exploring more and more branches of one tree. With AI, we'll explore more of it.

The question then is: what is the totality of things we could ever understand? There may be some things that cannot be understood at all, such as things outside the simulation, or outside the universe.

Lex Fridman: Because the human brain is accustomed to a three-dimensional world, plus time.

Demis Hassabis: But our tools can go beyond that. They can work in 11 or 12 dimensions.

The example I often give comes from chess and Garry Kasparov. Even if you are very good at chess, you couldn't come up with Garry's moves, but he can explain them to you. You can think of this as post hoc reasoning, and it is a further level of understanding: maybe you can't invent the thing, but you can understand and appreciate it, just as you can appreciate the beauty of Vivaldi or Mozart.

Lex Fridman: I want to ask some crazier questions. For example, do you think there are alien civilizations beyond the earth?

Demis Hassabis: My personal view is that, for now, we are alone. We already have all kinds of telescopes and other detection technologies searching for signals from other civilizations. If many alien civilizations were doing the same thing, the sky should be noisy with their signals. But in fact, we haven't received any.

Many people argue that alien civilizations do exist but that we haven't searched properly: perhaps we searched the wrong wavebands or used the wrong equipment, or aliens exist in forms so different that we wouldn't recognize them. I don't agree with these views. We have actually done a lot of exploration, and if there really were so many extraterrestrial civilizations, we should have found them long ago.

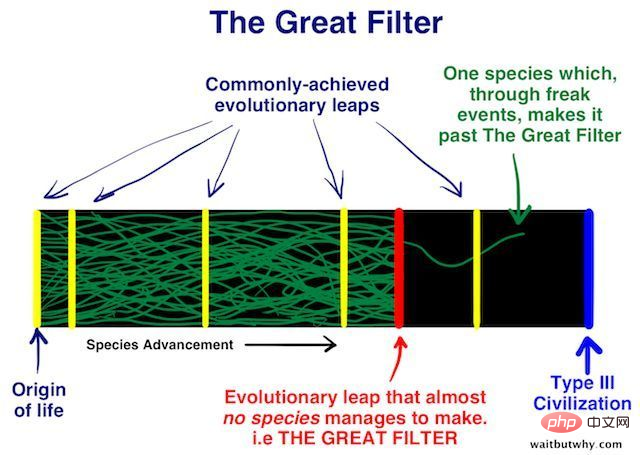

Interestingly, if ours is the only civilization, that is rather comforting from the perspective of the Great Filter, because it suggests we have already passed through it.

Going back to the question you just asked about the origin of life, life originated from some incredible things, and no one knows how these things happened. I wouldn't be surprised to see some form of single-celled life, like bacteria, somewhere other than Earth. But based on its ability to capture mitochondria and use them for our own purposes, the emergence of multicellular life is unprecedentedly difficult.

Illustration: The Great Filter Theory mentioned by Demis Hassabis

Lex Fridman: Do you think consciousness is required for true intelligence?

Demis Hassabis: I personally believe that consciousness and intelligence are doubly dissociable, so we can have consciousness without intelligence, and vice versa.

For example, many animals are self-aware; they socialize and even dream. They can be said to have a degree of self-awareness, yet they are not particularly intelligent. At the same time, AI systems that are very smart at a specific task, such as playing chess, can perform that task extremely well without having any self-awareness at all.

Lex Fridman: Some time ago, a Google engineer claimed that a language model was sentient. Have you ever encountered a sentient language model? When a system appears to be sentient, how do you interpret that?

Demis Hassabis: I don't think any AI system in the world today is conscious or sentient. That is my honest impression from interacting with AI every day. The so-called sentience is more a projection of our own brains. Because language is so closely tied to intelligence, it is easy for people to anthropomorphize a language model. This is why I think the Turing test is flawed: it is based on human reactions and judgments.

We should discuss consciousness with leading philosophers such as Daniel Dennett and David Chalmers, and with others who have thought deeply about it. There is currently no generally accepted definition of consciousness. If I had to offer one, I would define consciousness as the feeling that arises when information is processed.

Lex Fridman: Let me ask a dark, personal question. You are working on creating the most powerful superintelligent AI system in the world. As the old saying goes, absolute power corrupts absolutely, and you may be especially at risk, because you are one of the people most likely to control such a system. Do you think about this?

Demis Hassabis: I think about how to guard against this kind of corruption all the time.

Tools and technologies that serve humanity's best interests can carry us into a radically different world, one where we face many difficult challenges. AI can help us solve these problems, ultimately leading humanity to great prosperity and perhaps even to finding aliens. Who creates AI, the culture it comes from, the values it embodies, and who builds the system will all shape its development. Even if an AI system learns on its own, much of its knowledge will carry traces of the pre-existing culture and values of its creators.

Cultural differences have left us more divided than ever before. Perhaps when we enter an era of radical abundance, in which resources are less scarce, we will no longer need fierce competition and can shift toward greater cooperation.

Lex Fridman: When resources are severely constrained, atrocities happen.

Demis Hassabis: Resource scarcity is one of the causes of competition and destruction. All human beings want to live in a kind and safe world, so we must solve the problem of scarcity.

But that alone is not enough to achieve peace, because other things also cause corruption. AI should not be controlled by a single person or organization. I think AI should belong to the world, to all of humanity, and everyone should have a say in it.

Lex Fridman: Do you have any advice for high school and college students? If young people want to work in AI, or want to make an impact on the world through their own efforts, how can they find work they truly love, a career they can be proud of? How can they find the life they want?

Demis Hassabis: I always give young people two pieces of advice. The first is to find out where your true passion lies. Young people should explore the world as much as possible; when we are young, we have plenty of time and can afford the risks of exploring. Finding connections between things in your own unique way is, I think, a great way to discover your passion.

The second is to know yourself. It takes a lot of time to learn how you work best, when you do your best work, how you learn best, and how you handle stress. Young people should test themselves in different environments, work on their weaknesses, identify their unique skills and strengths, and then hone them. These are what you will offer the world in the future.

If you can combine these two things, finding your passion and developing your own unique and powerful skills, you will gain incredible energy and can make a huge difference in the world.
