
Only 1% of the embedding parameters are needed, hardware costs are cut tenfold, and the open-source solution trains a large recommendation model on a single GPU

- 王林 (repost)

- 2023-04-12 15:46:18

Deep learning recommendation models (DLRMs) have become one of the most important applications of deep learning at Internet companies. They power traffic-monetization services such as video recommendation, shopping search, and ad delivery, and have greatly improved both user experience and commercial value. However, massive user and business data, frequent model updates, and high training costs all pose severe challenges for DLRM training.

In a DLRM, a lookup in the embedding tables (EmbeddingBags) is performed first, followed by the downstream dense computation. Embedding tables often account for more than 99% of a DLRM's memory footprint but only about 1% of its computation. Thanks to High Bandwidth Memory (HBM) and strong compute, GPUs have become the mainstream hardware for DLRM training. However, as recommendation-system research advances, ever-larger embedding tables are increasingly at odds with limited GPU memory. How to train very large DLRMs efficiently on GPUs while breaking through the GPU memory wall has become a key problem in the field.

Colossal-AI previously used heterogeneous strategies to increase the parameter capacity of NLP models trained on the same hardware by hundreds of times, and has recently extended this approach to recommendation systems. The embedding table is stored dynamically across CPU and GPU memory through a software cache (Cache). On top of the software Cache, Colossal-AI adds pipeline prefetching, which looks ahead at upcoming training data to reduce Cache lookup and data movement overhead. At the same time, it trains the entire DLRM on the GPU with synchronous updates, and it can be scaled to multiple GPUs in combination with the widely used hybrid parallel training method.

Experiments show that Colossal-AI only needs to keep 1% of the embedding parameters on the GPU and still maintains excellent end-to-end training speed. Compared with other PyTorch solutions, the GPU memory requirement is reduced by an order of magnitude, and a single GPU can train a terabyte-scale recommendation model. The cost advantage is significant. For example, only 5 GB of GPU memory is needed to train a DLRM whose EmbeddingBag occupies 91 GB. The training hardware cost drops by more than tenfold, from two A100s at about 200,000 yuan to an entry-level graphics card such as the RTX 3050 at only about 2,000 yuan.

Open source address: https://github.com/hpcaitech/ColossalAI

## Existing embedding table expansion techniques

The embedding table maps discrete integer features to continuous floating-point feature vectors. The following figure shows the embedding table training process in a DLRM. First, for each feature, the corresponding rows are looked up in the embedding table. A reduction such as max, mean, or sum then combines them into a single feature vector, which is passed to the downstream dense neural network. The embedding table training process is therefore dominated by irregular memory accesses, so it is severely limited by the hardware's memory access speed.

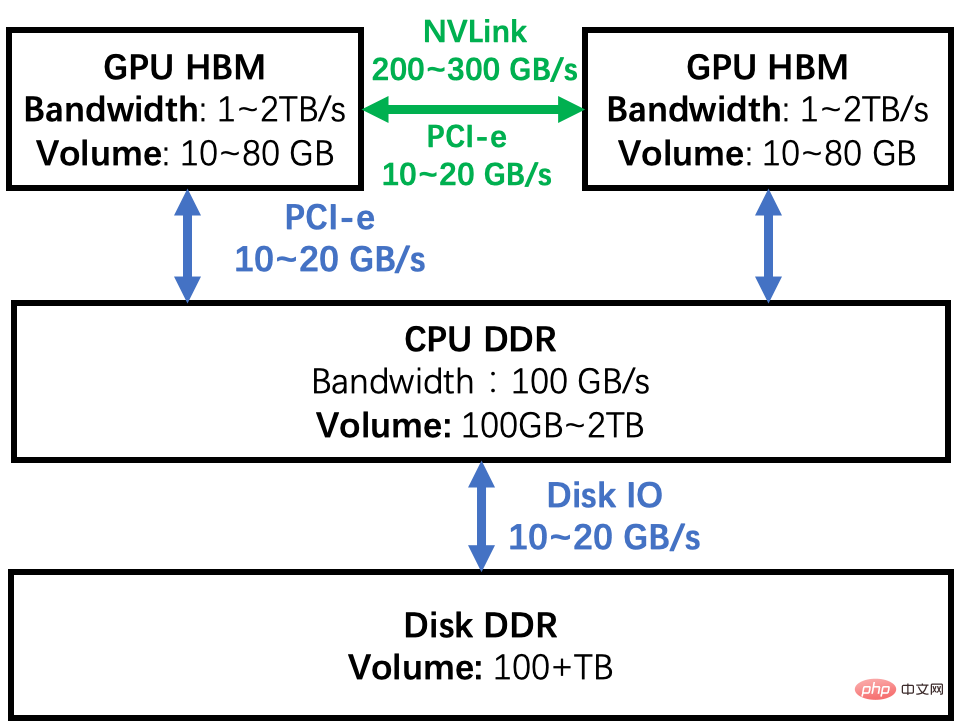
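The lookup-then-reduce step described above can be sketched in a few lines of plain Python. This is only an illustration, not Colossal-AI's or PyTorch's implementation: the function name `embedding_bag` and the toy table are made up here, and the `offsets` list is assumed to carry one extra trailing entry, in the spirit of the `include_last_offset=True` option mentioned later in the article.

```python
def embedding_bag(table, indices, offsets, mode="sum"):
    """Gather rows from `table` and reduce each bag to one vector.

    `offsets` has one more entry than there are bags: bag b covers
    indices[offsets[b]:offsets[b + 1]] (assumed convention).
    """
    reducers = {
        "sum": lambda col: sum(col),
        "mean": lambda col: sum(col) / len(col),
        "max": lambda col: max(col),
    }
    reduce_fn = reducers[mode]
    pooled = []
    for start, end in zip(offsets[:-1], offsets[1:]):
        # Irregular memory access: rows are fetched from arbitrary positions.
        rows = [table[i] for i in indices[start:end]]
        # Reduce column by column so each bag yields one feature vector.
        pooled.append([reduce_fn(col) for col in zip(*rows)])
    return pooled


# Toy table with 4 rows and embedding_dim = 2.
table = [[0.0, 1.0], [2.0, 3.0], [4.0, 5.0], [6.0, 7.0]]
indices = [0, 2, 3, 1]   # sparse feature ids for two samples
offsets = [0, 2, 4]      # sample 0 uses ids [0, 2], sample 1 uses ids [3, 1]
pooled = embedding_bag(table, indices, offsets, mode="sum")
```

The arithmetic per row is trivial; the cost is in the scattered row fetches, which is why memory bandwidth rather than compute bounds this stage.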

The embedding tables of industrial-grade DLRMs can reach hundreds of GB or even several TB, far exceeding the tens of GB of memory available on a single GPU. There are several ways to break through the single-GPU memory wall and increase the size of a DLRM's embedding table. Taking the memory hierarchy of the GPU cluster shown in the figure below as an example, let us analyze the advantages and disadvantages of several common solutions.

GPU model parallelism: The embedding table is partitioned and distributed across the memory of multiple GPUs, and intermediate results are synchronized over the GPU interconnect during training. One disadvantage is that the partitioned embedding table produces an uneven load, which makes scalability hard to achieve. Another is that the upfront hardware cost of adding GPUs is high, while DLRM training mainly exploits the GPUs' HBM bandwidth rather than their compute, resulting in low GPU utilization.

CPU partial training: The embedding table is split into two parts, one trained on the GPU and the other on the CPU. By exploiting the long-tail distribution of the data, the share of CPU computation can be made as small as possible and the share of GPU computation as large as possible. However, as the batch size grows, it becomes unlikely that every lookup in a mini-batch hits only the GPU part, and a mini-batch that touches both the CPU and GPU parts at once is hard for this method to handle. In addition, because DDR bandwidth is roughly an order of magnitude lower than HBM bandwidth, even if only 10% of the input data is trained on the CPU, the whole system is slowed down by at least half. The CPU and GPU also need to exchange intermediate results, which adds considerable communication overhead and slows training further. Researchers have therefore designed methods such as asynchronous updates to avoid these performance problems. However, asynchronous methods make training results non-deterministic and are not the first choice of algorithm engineers in practice.
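The "slowed down by at least half" claim can be checked with a back-of-the-envelope model. The sketch below assumes that lookup time scales inversely with memory bandwidth, that the CPU and GPU portions run back to back rather than overlapping, and that the bandwidth gap is exactly 10x; the function name is hypothetical and communication cost is ignored.

```python
def relative_step_time(cpu_fraction: float, bandwidth_ratio: float) -> float:
    """Step time relative to an all-GPU run (1.0 means no slowdown).

    cpu_fraction:    share of lookups served from CPU memory
    bandwidth_ratio: how many times slower CPU memory is than GPU HBM
    """
    gpu_time = 1.0 - cpu_fraction             # GPU share runs at full speed
    cpu_time = cpu_fraction * bandwidth_ratio  # CPU share is stretched by the gap
    return gpu_time + cpu_time


slowdown = relative_step_time(cpu_fraction=0.10, bandwidth_ratio=10.0)
throughput = 1.0 / slowdown
```

Under these assumptions the step takes 1.9 times as long, so throughput falls to roughly 53% of the all-GPU case, before any CPU-GPU communication overhead is added.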

Software Cache: All training is performed on the GPU, while the embedding table lives in a heterogeneous memory space made up of CPU and GPU memory. On each iteration, the software Cache swaps the required rows into the GPU. This approach expands storage capacity cheaply to keep up with growing embedding tables. Moreover, compared with computing on the CPU, the entire training process runs on the GPU and takes full advantage of HBM bandwidth. However, Cache lookups and data movement introduce additional performance overhead.

There are already some excellent software Cache solutions for embedding tables, but they often rely on custom EmbeddingBags kernel implementations, such as fbgemm, or on third-party deep learning frameworks. Colossal-AI builds on native PyTorch without any kernel-level changes and provides an out-of-the-box software Cache implementation of EmbeddingBags. It also further optimizes the DLRM training process and proposes prefetch pipelining to further reduce Cache overhead.

Memory Hierarchy

Colossal-AI's embedding table software Cache

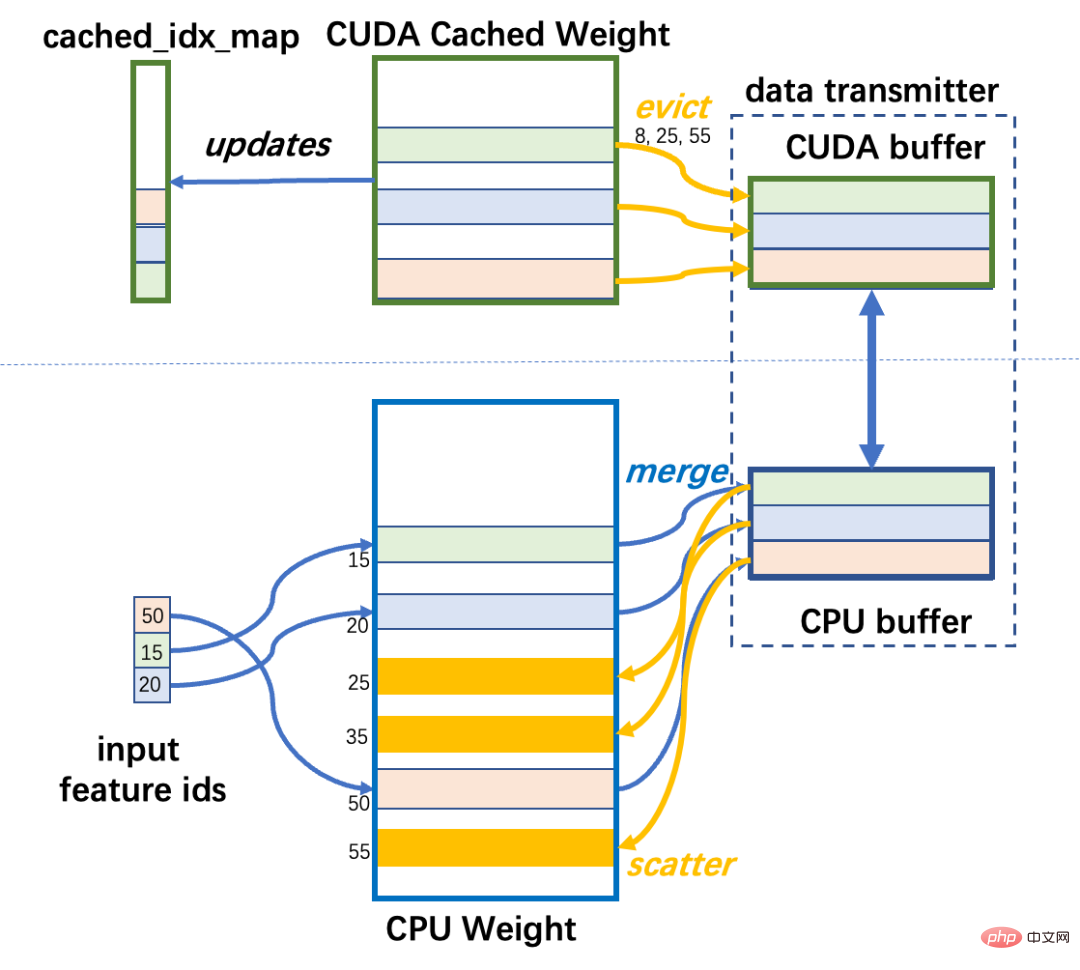

Colossal-AI implements a software Cache and wraps it in an nn.Module that users can drop into their own models. The embedding table of a DLRM is generally an EmbeddingBags made up of multiple Embeddings, and it resides in CPU memory. This region of memory is called CPU Weight. A small part of the EmbeddingBags data is stored in GPU memory, including the data about to be used for training. This region of memory is called CUDA Cached Weight. During DLRM training, the first step is to determine which rows of the embedding table correspond to the mini-batch fed into the current iteration. Rows that are not yet on the GPU must be transferred from CPU Weight to CUDA Cached Weight. If there is not enough space on the GPU, the LFU algorithm evicts the least frequently used rows based on the cache's historical access frequency.

Cache lookup relies on some auxiliary data structures. cached_idx_map is a one-dimensional array that stores the correspondence between row numbers in CPU Weight and rows in CUDA Cached Weight, together with how frequently each of those rows has been accessed on the GPU. The ratio of the size of CUDA Cached Weight to the size of CPU Weight is called cache_ratio, and it defaults to 1.0%.

The Cache runs before the forward pass of each iteration to adjust the data in CUDA Cached Weight, in three steps.

Step 1: CPU index: find the row numbers in CPU Weight that need to be cached

Take the intersection of the mini-batch's input_ids and cached_idx_map to find the row numbers in CPU Weight that need to be moved from the CPU to the GPU.

Step 2: GPU index: find the rows in CUDA Cached Weight that can be evicted, according to usage frequency

This requires a top-k operation (taking the k largest values) over the set difference of cached_idx_map and input_ids, ordered from low to high frequency.

Step 3: Data transfer

Move the rows to be evicted from CUDA Cached Weight to CPU Weight, and then move the required rows from CPU Weight to CUDA Cached Weight.
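The three steps can be illustrated with a toy, CPU-only sketch. It is not Colossal-AI's code: the class `SoftwareCache`, its `prepare` method, and the dict standing in for CUDA Cached Weight are all hypothetical, a plain sort replaces the GPU top-k, and it assumes access counts are kept for a row even after it is evicted.

```python
from collections import Counter


class SoftwareCache:
    """Toy LFU software cache; a dict stands in for CUDA Cached Weight."""

    def __init__(self, cpu_weight, capacity):
        self.cpu_weight = cpu_weight   # full table ("CPU Weight")
        self.capacity = capacity       # max rows the cache may hold
        self.cached = {}               # row id -> row data held "on the GPU"
        self.freq = Counter()          # row id -> historical access count (assumption)

    def prepare(self, input_ids):
        """Adjust the cache so every row in `input_ids` is resident."""
        wanted = set(input_ids)
        # Step 1: rows the mini-batch needs that are not cached yet.
        missing = [r for r in sorted(wanted) if r not in self.cached]
        # Step 2: among cached rows the batch does not need, pick the
        # least frequently used ones as eviction victims.
        overflow = max(len(self.cached) + len(missing) - self.capacity, 0)
        candidates = sorted(
            (r for r in self.cached if r not in wanted),
            key=lambda r: (self.freq[r], r),
        )
        if overflow > len(candidates):
            raise ValueError("mini-batch needs more rows than the cache can hold")
        victims = candidates[:overflow]
        # Step 3: write victims back to CPU Weight, then load missing rows.
        for r in victims:
            self.cpu_weight[r] = self.cached.pop(r)
        for r in missing:
            self.cached[r] = self.cpu_weight[r]
        self.freq.update(input_ids)    # record accesses for future LFU decisions
        return missing, victims


cpu_weight = [[float(i)] * 2 for i in range(6)]   # 6 rows, embedding_dim = 2
cache = SoftwareCache(cpu_weight, capacity=3)
cache.prepare([0, 1, 1])
cache.prepare([2, 0])
missing, victims = cache.prepare([3, 1])
```

In the last call the cache is full, row 3 is missing, and row 2 is evicted because it has the lowest access count among the rows the batch does not need.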

The data transfer module handles bidirectional transfers between CUDA Cached Weight and CPU Weight. Instead of inefficient row-by-row transfers, it buffers rows first and then transfers them in bulk to improve PCIe bandwidth utilization. Embedding rows scattered in memory are gathered into a contiguous block in the source device's local memory; the block is then transferred between the CPU and GPU and scattered to the corresponding locations in the target memory. Moving data in blocks improves PCIe bandwidth utilization, and the gather and scatter operations only involve each device's own local memory, so their overhead is small.

Colossal-AI uses a size-limited buffer to transfer data between the CPU and GPU. In the worst case, all input ids miss the cache and a large number of rows must be transferred. To prevent the buffer from taking up too much memory, its size is strictly bounded. If the data to transfer is larger than the buffer, the transfer is completed in multiple passes.
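A minimal sketch of the gather, bulk-copy, scatter pattern with a bounded staging buffer might look like the following. The function `transfer_rows` and its parameters are hypothetical; Python lists stand in for device memory, and the point where a real implementation would issue a single PCIe copy is only marked with a comment.

```python
def transfer_rows(src, dst, src_rows, dst_rows, buffer_rows):
    """Copy src[src_rows[i]] into dst[dst_rows[i]] through a bounded buffer.

    At most `buffer_rows` rows are staged at a time, so a large transfer
    is split into several passes. Returns the number of passes used.
    """
    if buffer_rows <= 0:
        raise ValueError("buffer_rows must be positive")
    passes = 0
    for start in range(0, len(src_rows), buffer_rows):
        chunk_src = src_rows[start:start + buffer_rows]
        chunk_dst = dst_rows[start:start + buffer_rows]
        # Gather: pack scattered source rows into one contiguous block.
        staging = [list(src[r]) for r in chunk_src]
        # (A real implementation would move `staging` across PCIe in one copy here.)
        # Scatter: unpack the block into the target slots.
        for slot, row in zip(chunk_dst, staging):
            dst[slot] = row
        passes += 1
    return passes


src = [[i, i] for i in range(10)]   # stands in for CPU Weight
dst = [None] * 4                    # stands in for CUDA Cached Weight
passes = transfer_rows(src, dst, src_rows=[7, 2, 9], dst_rows=[0, 1, 2], buffer_rows=2)
```

With three rows and a two-row buffer the transfer takes two passes, which is the trade-off the paragraph describes: bounded memory use in exchange for extra passes when many ids miss the cache.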

## Cached EmbeddingBag Workflow

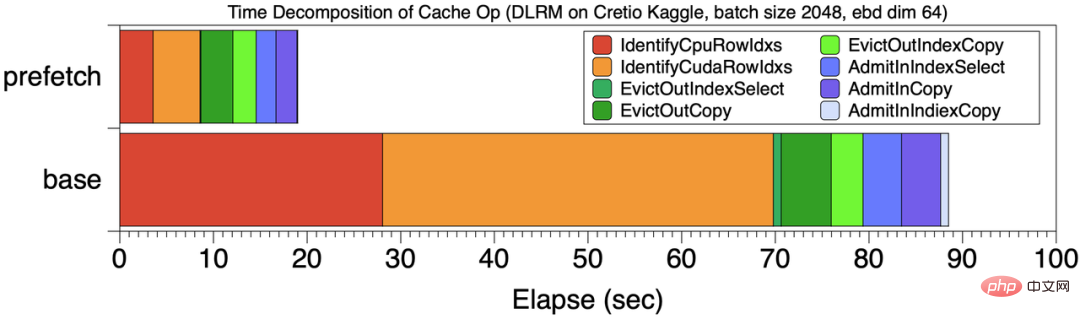

Software Cache Performance Analysis

Cache Step 1 and Step 2 above are both memory-access intensive. To take advantage of GPU HBM bandwidth, they run on the GPU and are implemented with APIs provided by the deep learning framework. Even so, the overhead of the Cache operations is significant compared with the embedding table training operations on the GPU.

For example, in a training task that takes 199 seconds in total, Cache operations take 99 seconds, nearly 50% of the total time. Analysis shows that most of the Cache overhead comes from Step 1 and Step 2. The baseline bar in the figure below shows the time breakdown of the Cache overhead in this case: the red and orange segments, Cache Step 1 and Step 2, account for 70% of the total Cache overhead.

Time breakdown of Cache operations

The reason for the above problem is that the traditional Cache strategy is somewhat "short-sighted" and can only adjust the Cache according to the current mini-batch situation, so most of the time is wasted on query operations.

Cache Pipeline Prefetch

To reduce Cache overhead, Colossal-AI designed a "far-sighted" Cache mechanism. Instead of performing Cache operations only for the current mini-batch, Colossal-AI prefetches several mini-batches that will be used later and performs their Cache lookups together.

As shown in the figure below, Colossal-AI uses prefetching to merge the data of multiple mini-batches into a single Cache operation, and uses pipelining to overlap data loading with computation. In the example, the number of prefetched mini-batches is 2. Before training starts, the data for mini-batches 0 and 1 is read from disk into GPU memory. The Cache operation then runs, followed by the forward pass, backward pass, and parameter update for both mini-batches. Meanwhile, the data for mini-batches 2 and 3 can already be loaded, so this overhead overlaps with computation.
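The merging half of this idea can be sketched as follows. The helper `plan_cache_updates` is hypothetical and only shows how grouping mini-batches turns several Cache lookups into one; it does not model the overlap between data loading and computation, and it assumes the cache is large enough to hold every row a group needs.

```python
def plan_cache_updates(batches, prefetch):
    """Group consecutive mini-batches and merge their ids per group.

    Returns a list of (merged_ids, group) pairs: one Cache operation on
    `merged_ids` serves every mini-batch in `group`.
    """
    if prefetch <= 0:
        raise ValueError("prefetch must be positive")
    plans = []
    for start in range(0, len(batches), prefetch):
        group = batches[start:start + prefetch]
        # Deduplicate ids across the whole group so each row is looked up once.
        merged_ids = sorted({i for batch in group for i in batch})
        plans.append((merged_ids, group))
    return plans


batches = [[1, 2, 2], [2, 3], [3, 4], [1, 4]]
no_prefetch = plan_cache_updates(batches, prefetch=1)    # one Cache operation per batch
with_prefetch = plan_cache_updates(batches, prefetch=2)  # one Cache operation per two batches
```

Here prefetching halves the number of Cache operations, and ids shared between neighbouring mini-batches are indexed only once per group.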

The figure [Time breakdown of Cache operations] compares the Cache time breakdown when prefetching 8 mini-batches against the baseline. The total training time drops from 201 seconds to 120 seconds, and the share of time spent in the Cache phases shown in the figure also drops significantly. Compared with performing Cache operations independently for each mini-batch, every part takes less time, especially the first two Cache steps.

To sum up, Cache pipeline prefetching brings two benefits.

a. Amortized Cache index overhead

The most obvious benefit of prefetching is that it reduces the overhead of Step 1 and Step 2, so that these two steps account for less than 5% of the total training time. As shown in [Time breakdown of Cache operations], prefetching 8 mini-batches significantly reduces the Cache lookup overhead compared with the baseline without prefetching.

b. Higher CPU-GPU data movement bandwidth

Batching more data together increases the transfer granularity and makes fuller use of the CPU-GPU transfer bandwidth. In the example above, CUDA->CPU bandwidth increases from 860 MB/s to 1477 MB/s, and CPU->CUDA bandwidth increases from 1257 MB/s to 2415 MB/s, nearly doubling the bandwidth.

Easy to use

Usage is consistent with PyTorch's EmbeddingBag. When building a recommendation model, only the following few lines are needed for initialization. This greatly increases embedding table capacity and enables terabyte-scale recommendation model training at low cost.

```python
import torch
from colossalai.nn.parallel.layers.cache_embedding import CachedEmbeddingBag

# num_embeddings and embedding_dim are defined by your own model.
emb_module = CachedEmbeddingBag(
    num_embeddings=num_embeddings,
    embedding_dim=embedding_dim,
    mode="sum",
    include_last_offset=True,
    sparse=True,
    _weight=torch.randn(num_embeddings, embedding_dim),
    warmup_ratio=0.7,
    cache_ratio=0.01,
)
```

Performance test

The tests run on a hardware platform with an NVIDIA A100 GPU (80GB) and an AMD EPYC 7543 32-Core Processor (512GB). Colossal-AI uses Meta's DLRM model as the test target, with the very large Criteo 1TB dataset and a dataset generated from Meta's dlrm_datasets as test workloads. The training speed of PyTorch with all embedding tables stored on the GPU is used as the baseline.

Criteo 1TB

The Criteo 1TB embedding table has 177944275 rows in total. With embedding dim=128, the embedding table requires 91.10 GB of memory. Even the high-end NVIDIA A100 80GB cannot hold all the EmbeddingBags in the memory of a single GPU.
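The 91 GB figure follows directly from the table shape. The short calculation below assumes 32-bit floating-point weights (4 bytes per element) and decimal gigabytes, neither of which the article states explicitly; the helper name is made up for illustration.

```python
def embedding_table_bytes(num_rows: int, embedding_dim: int, bytes_per_element: int = 4) -> int:
    """Raw storage for a dense embedding table (fp32 assumed by default)."""
    return num_rows * embedding_dim * bytes_per_element


table_bytes = embedding_table_bytes(177_944_275, 128)
table_gb = table_bytes / 1e9   # decimal GB; roughly 91.1
```

Under these assumptions the result is about 91.1 GB, consistent with the requirement quoted above and larger than the 80 GB available on a single A100.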

With Colossal-AI, however, training still completes on a single GPU. With cache ratio=0.05, GPU memory consumption is only 5.01 GB, a reduction of about 18 times, and the approach can be extended further to train terabyte-scale recommendation models on a single GPU. For training speed, the figure below shows the latency of training 100M samples at different batch sizes. The green Prefetch1 bars show the latency without prefetching, and the blue Prefetch8 bars show the latency with prefetching (prefetch mini-batch=8). Prefetch pipelining clearly plays an important role in improving overall performance. The dark part of each bar is the Cache overhead. With prefetching, the Cache overhead stays within 15% of the total training time.

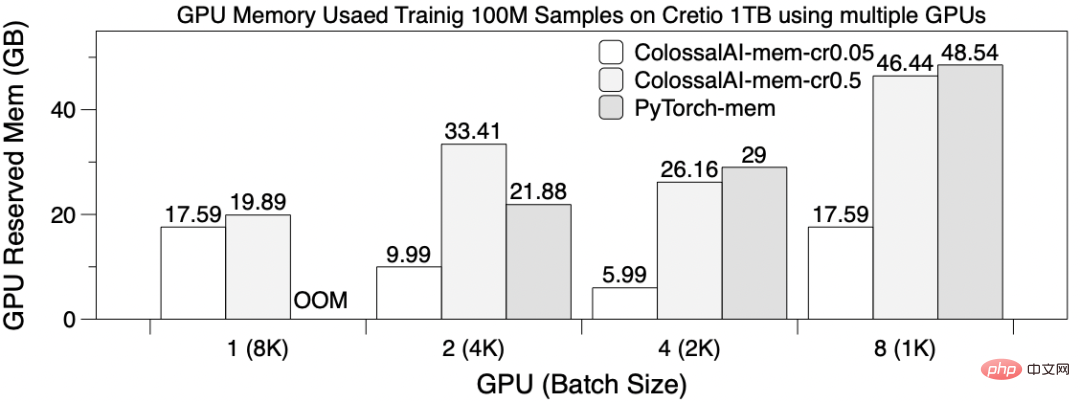

Multi-GPU scalability

With a global batch size of 8192, DLRM is trained in parallel on 8 GPUs using table-wise sharding for the EmbeddingBags, over 100M samples. The prefetch size is set to 4; ColossalAI-mem-cr0.05 denotes cache ratio=0.05, and ColossalAI-mem-cr0.5 denotes cache ratio=0.5. The figure below shows the training latency for different numbers of GPUs. Apart from the 1-GPU case, where PyTorch runs out of memory (OOM) and cannot train, the training times of PyTorch and Colossal-AI are similar. Using 4 and 8 GPUs does not bring a significant performance improvement, for two reasons: (1) synchronizing results incurs heavy communication overhead, and (2) table-wise sharding leads to load imbalance across shards. This also shows that embedding table training does not scale well across multiple GPUs.

The following figure shows GPU memory usage. Usage differs from card to card, so the maximum value is shown. With only one GPU, only Colossal-AI's software Cache method can train, and with multiple cards in parallel it also reduces memory usage severalfold.

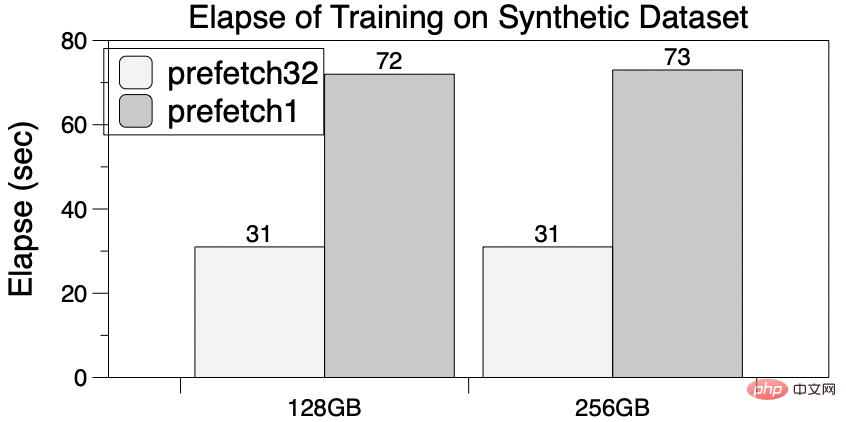

Meta Research's synthetic dataset dlrm_datasets imitates the training access behavior of embedding tables in industry, so it is often used in research as a test reference for recommendation-system software and hardware design. From it, 500 million embedding rows are selected as a sub-dataset, and two EmbeddingBags of 256 GB and 128 GB are constructed for testing.

PyTorch cannot train on a single A100 because of insufficient GPU memory. In contrast, Colossal-AI's software Cache significantly reduces GPU memory requirements, enough to train embedding tables as large as 256 GB, and can be extended further to the TB level. Pipeline prefetching also provides a speedup: with a prefetch number of 32, the total time is 60% lower than without prefetching, and GPU memory demand does not increase.

One More Thing

Colossal-AI, a general deep learning system for the era of large models, uses a number of self-developed technologies, such as efficient multi-dimensional automatic parallelism, heterogeneous memory management, large-scale optimization libraries, and adaptive task scheduling, to deploy large AI model training and inference efficiently and quickly and to reduce the cost of applying large AI models.

Colossal-AI related solutions have been successfully implemented by well-known manufacturers in autonomous driving, cloud computing, retail, medicine, chips and other industries, and have been widely praised.

Colossal-AI focuses on building its open-source community: it provides Chinese tutorials, runs user communities and forums, iterates quickly on user feedback, and continuously adds cutting-edge applications such as PaLM, AlphaFold, and OPT.

Since being open-sourced, Colossal-AI has repeatedly ranked first worldwide on the GitHub and Papers With Code trending lists and, alongside many star open-source projects with tens of thousands of stars, has attracted attention both in China and abroad.

Project open source address: https://github.com/hpcaitech/ColossalAI
