
With just 3 samples and a sentence, AI can customize photo-realistic images. Google is playing with a very new diffusion model.

- WBOY (reposted)

- 2023-04-12 15:46:07

Text-to-image models have recently become a popular research direction. Whether the target is a sweeping natural landscape or a novel scene, an image can now be generated automatically from a simple text description.

Rendering freely imagined scenes remains a challenging task: it requires compositing instances of a specific subject (an object, an animal, and so on) into new scenes so that they look natural and blend seamlessly into their surroundings.

Some large-scale text-to-image models achieve high-quality and diverse image synthesis based on text prompts written in natural language. The main advantage of these models is the strong semantic priors learned from a large number of image-text description pairs, such as associating the word "dog" with various instances of dogs that can appear in different poses in the image.

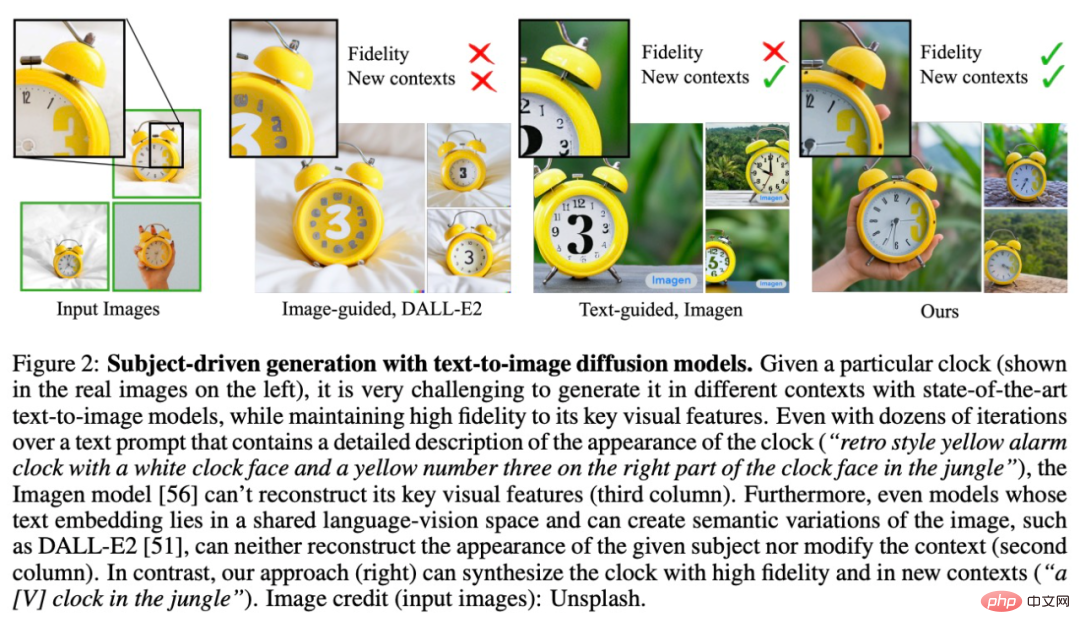

While the synthesis capabilities of these models are unprecedented, they cannot imitate a given reference subject and synthesize new images of that same subject in different scenes. In other words, the expressiveness of the output domain of existing models is limited.

To solve this problem, researchers from Google and Boston University proposed DreamBooth, a "personalized" text-to-image diffusion model that can adapt to a user's specific image generation needs.

Paper address: https://arxiv.org/pdf/2208.12242.pdf

Project Address: https://github.com/XavierXiao/Dreambooth-Stable-Diffusion

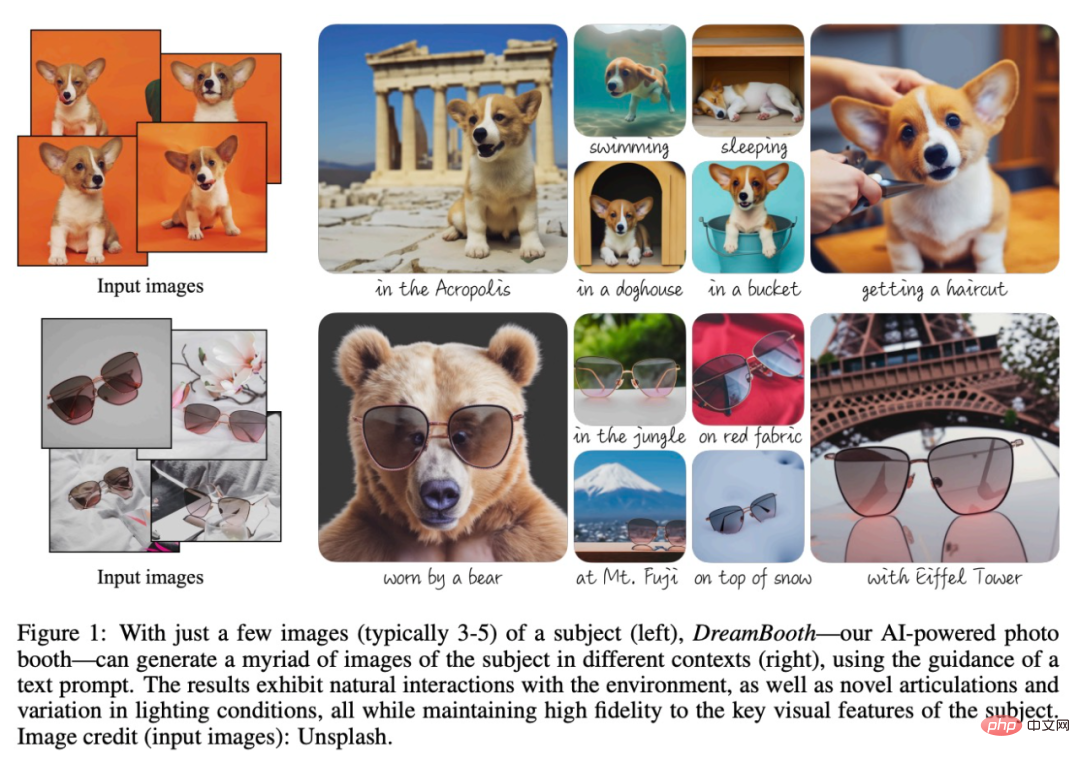

The goal of this research is to extend the model's language-vision dictionary so that it binds new words to the specific subjects the user wants to generate. Once the new dictionary is embedded in the model, the model can use these words to synthesize novel, realistic images of the subject, placing it in different scenes while preserving its key identifying features, as shown in Figure 1 below.

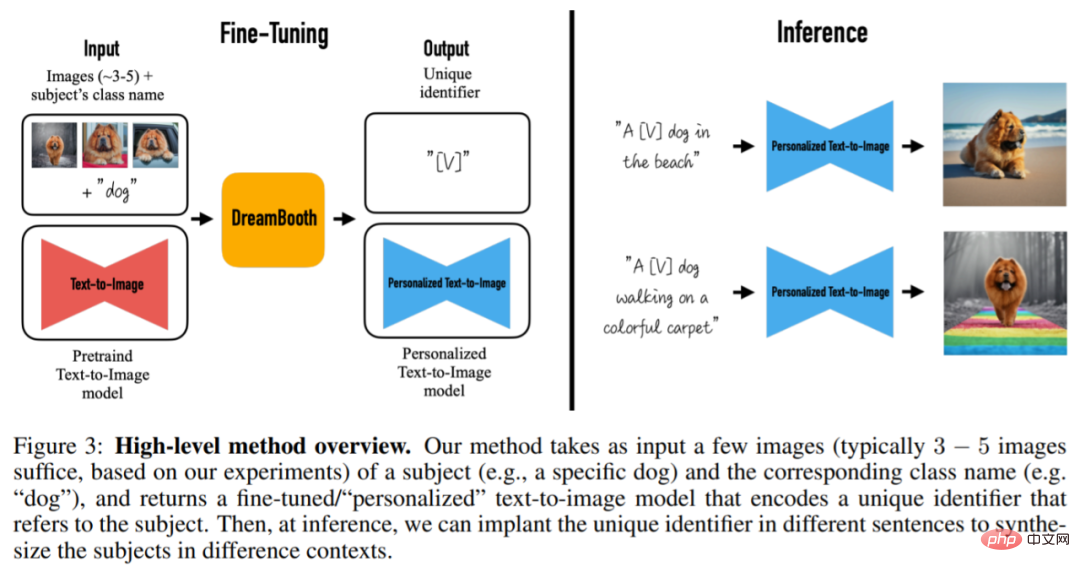

Specifically, the study implants images of a given subject into the model's output domain so that they can be synthesized using a unique identifier. To this end, it proposes representing the subject with a rare-token identifier and fine-tuning a pre-trained, diffusion-based text-to-image framework. That framework operates in two steps: it first generates a low-resolution image from text and then applies a super-resolution (SR) diffusion model.

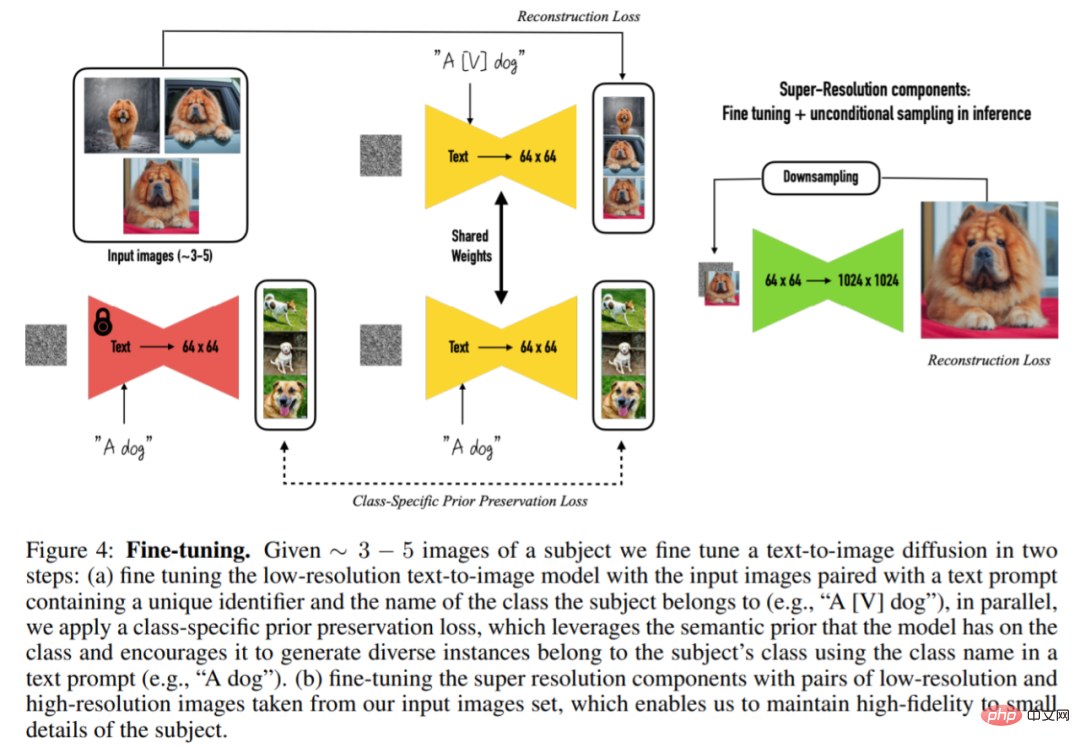

In the first step, the study fine-tunes the low-resolution text-to-image model using the input images and text prompts that contain the unique identifier followed by the subject's class name (for example, "A [V] dog"). To prevent the model from overfitting the class name to the specific instance, and to prevent semantic drift, the study proposes a self-generated, class-specific prior preservation loss. This loss exploits the semantic prior for the class that is already embedded in the model and encourages the model to keep generating diverse instances of the subject's class.

In the second step, the study fine-tunes the super-resolution component using pairs of low-resolution and high-resolution versions of the input images. This allows the model to maintain high fidelity to small but important details of the subject.
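The pairing of low-resolution and high-resolution versions described above can be sketched as follows. This is a minimal illustration, not the authors' pipeline: the article does not say how the low-resolution versions are produced, so the box-filter downsampling, the factor of 2, and the function names `downsample` and `make_sr_pairs` are all assumptions. Images are represented as plain lists of grayscale rows to keep the example dependency-free.

```python
def downsample(image, factor):
    """Box-filter downsample of a 2D grayscale image given as a list of rows.

    Assumption: simple block averaging stands in for whatever resizing
    the real super-resolution training pipeline uses.
    """
    height, width = len(image), len(image[0])
    if height % factor or width % factor:
        raise ValueError("image size must be divisible by factor")
    low = []
    for top in range(0, height, factor):
        row = []
        for left in range(0, width, factor):
            # Collect every pixel in the factor x factor block and average it.
            block = [image[y][x]
                     for y in range(top, top + factor)
                     for x in range(left, left + factor)]
            row.append(sum(block) / len(block))
        low.append(row)
    return low


def make_sr_pairs(images, factor=2):
    """Return (low_res, high_res) pairs, one per subject image."""
    return [(downsample(img, factor), img) for img in images]


high_res = [
    [0, 2, 4, 6],
    [2, 4, 6, 8],
    [1, 1, 3, 3],
    [1, 1, 3, 3],
]
pairs = make_sr_pairs([high_res])
low_res, target = pairs[0]
```

Each pair gives the super-resolution model a low-resolution input and the original subject image as its target, which is what lets fine-tuning recover subject-specific detail.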

Let’s take a look at the specific methods proposed in this study.

Method Introduction

Given only 3-5 captured images of a subject, with no text descriptions, the goal is to generate new images of that subject with high detail fidelity and with variations guided by text prompts. The study places no restrictions on the input images, and the subject can appear in different contexts. The method is shown in Figure 3. Output images can change the subject's location, change properties such as its color or shape, and modify the subject's pose, expression, material, and other semantic attributes.

More specifically, the method takes as input a few images (usually 3-5) of a subject (for example, a specific dog) and the corresponding class name (for example, "dog"), and returns a fine-tuned, personalized text-to-image model that encodes a unique identifier referring to the subject. At inference time, the unique identifier can then be embedded in different sentences to synthesize the subject in different contexts.

The first task is to implant the subject instance into the model's output domain and bind the subject to a unique identifier. The study proposes a way to design the identifier, as well as a new way to supervise the fine-tuning process.

To address overfitting to the input images and language drift, the study also proposes a prior-preservation loss, which encourages the diffusion model to keep generating diverse instances of the same class as the subject.
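The idea behind the prior-preservation loss can be sketched as the sum of two reconstruction terms: one on the subject images and one on class images that the pre-trained model generated itself. The snippet below is a simplified illustration under stated assumptions, not the paper's exact formulation: it uses a plain mean squared error on flat lists of numbers, omits any noise-level weighting, and the names `mse`, `prior_preservation_loss`, and `prior_weight` are hypothetical.

```python
def mse(pred, target):
    """Mean squared error between two equal-length lists of floats."""
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def prior_preservation_loss(subject_pred, subject_target,
                            prior_pred, prior_target,
                            prior_weight=1.0):
    """Subject reconstruction term plus a weighted class-prior term.

    subject_*: model output and target for a prompt with the identifier.
    prior_*:   model output and target for a class-only prompt, where the
               target was generated by the model before fine-tuning.
    prior_weight: hypothetical knob balancing the two terms.
    """
    subject_term = mse(subject_pred, subject_target)
    prior_term = mse(prior_pred, prior_target)
    return subject_term + prior_weight * prior_term


loss = prior_preservation_loss(
    subject_pred=[1.0, 2.0], subject_target=[0.0, 2.0],
    prior_pred=[0.0, 0.0], prior_target=[2.0, 0.0],
    prior_weight=0.5,
)
```

Setting `prior_weight` to zero reduces this to ordinary fine-tuning on the subject images alone, which is the setting in which overfitting and language drift would be expected to appear.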

To preserve image details, the study found that the super-resolution (SR) component of the model should also be fine-tuned. The work builds on the pre-trained Imagen model, and the overall process is shown in Figure 4. Given 3-5 images of the same subject, the text-to-image diffusion model is fine-tuned in two steps: first the low-resolution model, then the super-resolution component.

Representing the subject with a rare-token identifier

The study labels all input images of the subject as "a [identifier] [class noun]", where [identifier] is a unique identifier linked to the subject and [class noun] is a coarse class descriptor of the subject (for example, cat, dog, or watch). The class descriptor is included in the sentence deliberately, in order to tie the model's prior for the class to the subject.
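The labeling scheme above amounts to a small string template. The sketch below builds the subject prompt in the "a [identifier] [class noun]" form; it also returns a class-only prompt "a [class noun]", which is an assumption about how class images for the prior-preservation term might be prompted. The function name `build_training_prompts` is hypothetical.

```python
def build_training_prompts(identifier, class_noun):
    """Return (subject_prompt, class_prompt) for fine-tuning.

    subject_prompt follows the "a [identifier] [class noun]" pattern.
    class_prompt drops the identifier (assumed form for prior images).
    """
    identifier = identifier.strip()
    class_noun = class_noun.strip()
    if not identifier or not class_noun:
        raise ValueError("identifier and class_noun must be non-empty")
    subject_prompt = f"a {identifier} {class_noun}"
    class_prompt = f"a {class_noun}"
    return subject_prompt, class_prompt


subject_prompt, class_prompt = build_training_prompts("[V]", "dog")
```

Every input image of the subject would share the same `subject_prompt`, so the identifier is the only thing that distinguishes the subject from other members of its class.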

Results

The qualitative results below come from a Stable Diffusion implementation of DreamBooth (see the project link above). The training images are taken from the "Textual Inversion" repository.

After training is complete, the prompt "photo of a sks container" makes the model generate photos of the container.

Adding a location to the prompt, as in "photo of a sks container on the beach", places the container on the beach.

The green container is rather plain in color. To add some red, enter the prompt "photo of a red sks container".

Enter the prompt "a dog on top of sks container" to have a puppy sit on the container:

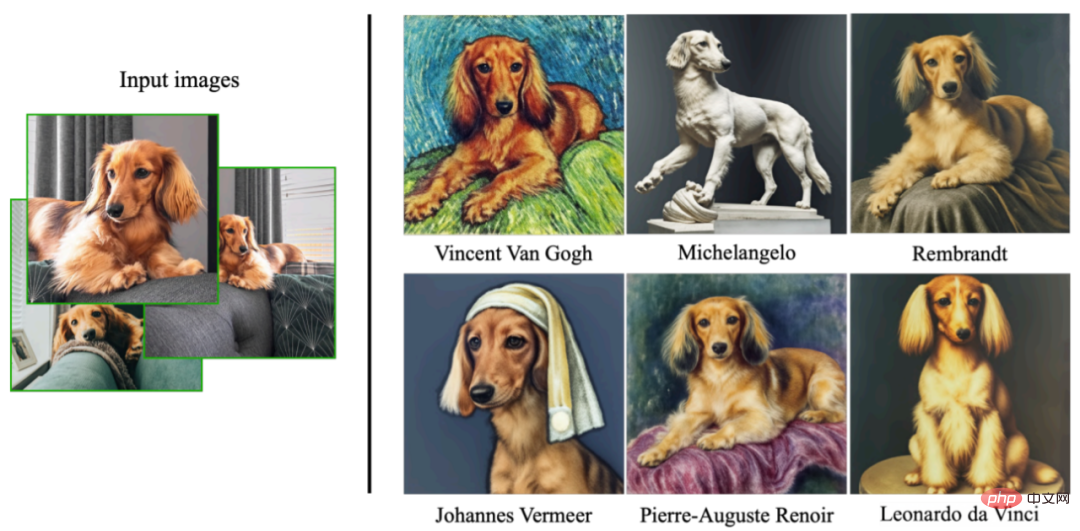
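The four prompts above all reuse the same identifier and class noun inside different sentences. A minimal sketch of that pattern follows; the `{subject}` placeholder, the `TEMPLATES` list, and the function name `render_prompts` are illustrative choices, not part of the referenced project.

```python
# Sentence templates mirroring the prompts quoted in this section.
TEMPLATES = [
    "photo of a {subject}",
    "photo of a {subject} on the beach",
    "photo of a red {subject}",
    "a dog on top of {subject}",
]


def render_prompts(templates, identifier, class_noun):
    """Fill each template with the '<identifier> <class noun>' phrase."""
    subject = f"{identifier} {class_noun}"
    return [template.format(subject=subject) for template in templates]


prompts = render_prompts(TEMPLATES, "sks", "container")
for prompt in prompts:
    print(prompt)
```

Each rendered string would then be passed to the fine-tuned model as an ordinary text prompt.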

The following are some results presented in the paper. Generate artistic pictures about dogs in different artist styles:

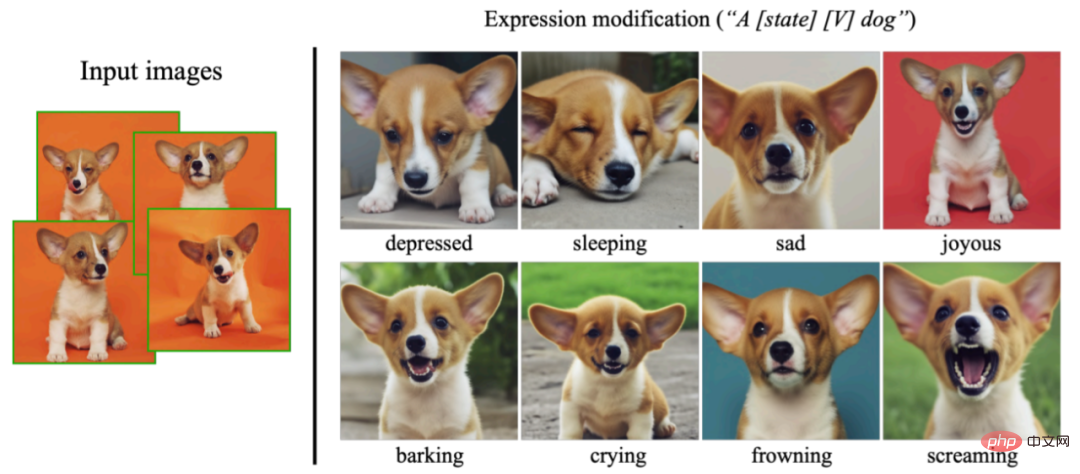

The method can also synthesize expressions that do not appear in the input images, demonstrating the model's ability to extrapolate.

For more details, please refer to the original paper.
