GAN's counterattack: Zhu Junyan's new CVPR work GigaGAN beats Stable Diffusion on image output speed

Image generation is one of the most popular directions in the current AIGC field. Recently released image generation models such as DALL・E 2, Imagen, and Stable Diffusion have ushered in a new era of image generation, achieving unprecedented image quality and model flexibility, and diffusion models have become the dominant paradigm. However, diffusion models rely on iterative inference, which is a double-edged sword: iterative methods allow stable training with simple objectives, but inference is computationally expensive.

Before diffusion models, generative adversarial networks (GANs) were the commonly used architecture for image generation. Compared to diffusion models, GANs generate an image in a single forward pass and are therefore inherently more efficient. However, because training is unstable, scaling GANs requires careful tuning of the network architecture and training factors. As a result, GANs are good at modeling single or multiple object classes but are extremely challenging to scale to complex datasets (let alone the real world), and very large models, datasets, and computational resources are now dedicated to diffusion and autoregressive models.

Still, because GANs are an efficient generation method, many researchers have not abandoned them entirely. For example, NVIDIA recently proposed the StyleGAN-T model, and the Chinese University of Hong Kong and others used GAN-based methods to generate smooth videos. These are further attempts by CV researchers to advance GANs.

Now, in a CVPR 2023 paper, researchers from POSTECH, Carnegie Mellon University, and Adobe Research jointly explored several important issues about GANs, including:

- Can GANs continue to scale and benefit from massive resources? Have GANs hit a bottleneck?

- What prevents further expansion of GANs, and can we overcome these obstacles?

- Paper link: https://arxiv.org/abs/2303.05511

- Project link: https://mingukkang.github.io/GigaGAN/

It is worth noting that Zhu Junyan (Jun-Yan Zhu), the main author of CycleGAN and winner of the 2018 ACM SIGGRAPH Outstanding Doctoral Dissertation Award, is the second author of this CVPR paper.

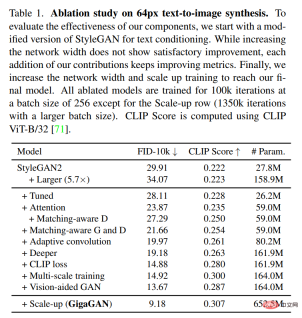

The study first ran experiments with StyleGAN2 and observed that simply scaling up the backbone network made training unstable. Based on this, the researchers identified several key issues and proposed techniques that stabilize training while increasing model capacity.

First, the study expands the capacity of the generator by keeping a bank of filters and using a sample-specific linear combination of them. The study also adopts several techniques commonly used in diffusion models and confirms that they bring similar benefits to GANs. For example, interleaving self-attention (image only) and cross-attention (image-text) with convolutional layers improves model performance.

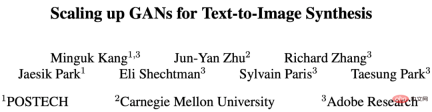

The research also reintroduces multi-scale training and proposes a new scheme that improves image-text alignment and the low-frequency details of the generated output. Multi-scale training allows GAN-based generators to use the parameters in low-resolution blocks more efficiently, resulting in better image-text alignment and image quality. After careful tuning, the study proposes a new model, GigaGAN, with one billion parameters, and achieves stable and scalable training on large datasets (such as LAION2B-en). The experimental results are shown in Figure 1 below.

In addition, the study adopts a multi-stage approach [14, 104]: an image is first generated at a low resolution of 64 × 64 and then upsampled to 512 × 512. Both networks are modular and powerful enough to be used in a plug-and-play manner.

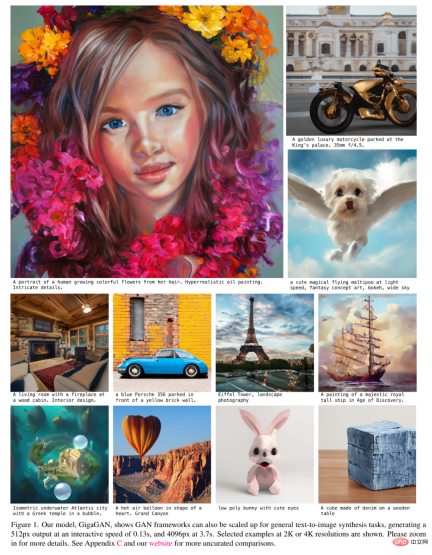

The study demonstrates that a text-conditioned GAN upsampling network can serve as an efficient, higher-quality upsampler for a base diffusion model, as shown in Figures 2 and 3 below.

These improvements put GigaGAN far beyond previous GANs: it is 36 times larger than StyleGAN2 and 6 times larger than StyleGAN-XL and XMC-GAN. While GigaGAN's parameter count of one billion (1B) is still lower than that of recent large synthesis models such as Imagen (3.0B), DALL・E 2 (5.5B), and Parti (20B), the researchers say they have not yet observed quality saturating with respect to model size.
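The size comparisons above can be turned into rough numbers with a few lines of arithmetic. The sketch below uses only the figures quoted in this article; the sizes it prints for StyleGAN2 and StyleGAN-XL / XMC-GAN are merely what the "36 times" and "6 times" ratios imply, not values reported here.

```python
# Parameter counts quoted in the article, in billions.
GIGAGAN_B = 1.0
quoted = {"Imagen": 3.0, "DALL-E 2": 5.5, "Parti": 20.0}

# Ratios quoted in the article: GigaGAN is this many times larger.
ratios = {"StyleGAN2": 36, "StyleGAN-XL / XMC-GAN": 6}


def implied_params_millions(gigagan_billion, times_larger):
    """Size implied by 'GigaGAN is N times larger', in millions of parameters."""
    return gigagan_billion * 1000.0 / times_larger


# Implied sizes of the earlier GANs (derived, not quoted).
implied = {name: implied_params_millions(GIGAGAN_B, r) for name, r in ratios.items()}
# How many times larger the quoted models are than GigaGAN.
relative = {name: size / GIGAGAN_B for name, size in quoted.items()}

for name, millions in implied.items():
    print(f"{name}: about {millions:.0f}M parameters (implied by the ratio)")
for name, factor in relative.items():
    print(f"{name}: {factor:.1f}x GigaGAN's parameter count")
```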

GigaGAN achieves a zero-shot FID of 9.09 on the COCO2014 dataset, which is lower (better) than the FID of DALL・E 2, Parti-750M, and Stable Diffusion.
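For readers unfamiliar with the metric, FID (Fréchet Inception Distance) compares the mean and covariance of feature vectors from real and generated images, and lower is better. The following is a minimal NumPy sketch of the distance itself. In practice the features come from a pretrained Inception network; here the `feature_stats` helper and the random feature matrices are stand-ins chosen for illustration only.

```python
import numpy as np


def frechet_distance(mu1, sigma1, mu2, sigma2):
    """Frechet distance between the Gaussians N(mu1, sigma1) and N(mu2, sigma2)."""
    diff = mu1 - mu2
    # Tr((sigma1 sigma2)^(1/2)) equals the sum of the square roots of the
    # eigenvalues of sigma1 @ sigma2, which are real and non-negative for
    # positive semi-definite inputs (up to numerical noise, hence the clip).
    eigvals = np.linalg.eigvals(sigma1 @ sigma2)
    tr_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * tr_sqrt)


def feature_stats(features):
    """Mean and covariance of an (n_samples, dim) feature matrix."""
    return features.mean(axis=0), np.cov(features, rowvar=False)


# Stand-in features; a real evaluation would use Inception activations.
rng = np.random.default_rng(0)
real = rng.normal(size=(500, 8))
fake = rng.normal(loc=0.5, size=(500, 8))
score = frechet_distance(*feature_stats(real), *feature_stats(fake))
print(f"Frechet distance on stand-in features: {score:.3f}")
```

"Zero-shot" here means the model was not trained on COCO2014 before being scored on it.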

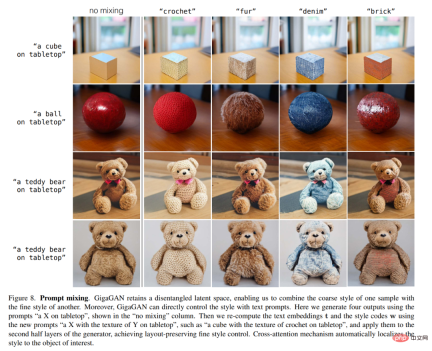

In addition, compared with diffusion and autoregressive models, GigaGAN has three major practical advantages. First, it is dozens of times faster, producing a 512-pixel image in 0.13 seconds (Figure 1). Second, it can synthesize ultra-high-resolution 4k images in just 3.66 seconds. Third, it has a controllable latent vector space that suits well-studied controllable image synthesis applications, such as style mixing (Figure 6), prompt interpolation (Figure 7), and prompt mixing (Figure 8).

The study successfully trained GigaGAN, a GAN-based model with a billion parameters, on billions of real-world images. This suggests that GANs remain a viable option for text-to-image synthesis and that researchers should consider them for aggressive scaling in the future.

Method Overview

The researchers train a generator G(z, c) that, given a latent code z ∼ N(0, 1) ∈ R^128 and a text conditioning signal c, predicts an image x ∈ R^(H×W×3). A discriminator D(x, c) judges the authenticity of the generated images against samples from a training database 𝒟 of image-text pairs.

Although GANs can generate realistic images on single- and multi-class datasets, open-ended text-conditional synthesis on Internet images remains challenging. The researchers hypothesize that the current limitation stems from the reliance on convolutional layers: the same convolutional filters must model a general image synthesis function for all text conditions at all locations in the image, which is a lot to ask. In view of this, the researchers try to make the parameterization more expressive by dynamically selecting convolution filters based on the input condition and by capturing long-range dependencies through attention mechanisms.
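To make the idea of condition-dependent filter selection concrete, here is a minimal NumPy sketch. It assumes the blending weights come from a softmax over an affine projection of a style vector; the article only states that filters are combined per sample, so the softmax, the affine layer (`w_affine`, `b_affine`), and all shapes below are assumptions of this sketch rather than the paper's exact layer.

```python
import numpy as np


def softmax(x):
    e = np.exp(x - x.max())
    return e / e.sum()


def select_kernel(filter_bank, style, w_affine, b_affine):
    """Blend a bank of N filters into one kernel using a style vector.

    filter_bank: (N, C_out, C_in, k, k)
    style:       (d,)
    w_affine:    (d, N), b_affine: (N,)  hypothetical learned affine layer
    """
    weights = softmax(style @ w_affine + b_affine)       # (N,), sums to 1
    kernel = np.tensordot(weights, filter_bank, axes=1)  # (C_out, C_in, k, k)
    return kernel, weights


# Hypothetical sizes: 8 filters, 16 output and 4 input channels, 3x3 kernels.
rng = np.random.default_rng(0)
bank = rng.normal(size=(8, 16, 4, 3, 3))
style = rng.normal(size=32)
kernel, weights = select_kernel(bank, style, rng.normal(size=(32, 8)), np.zeros(8))
print(kernel.shape, weights.round(3))
```

Because the weights depend on the style vector, two different conditions produce two different convolution kernels from the same shared bank, which is the extra expressiveness the paragraph above describes.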

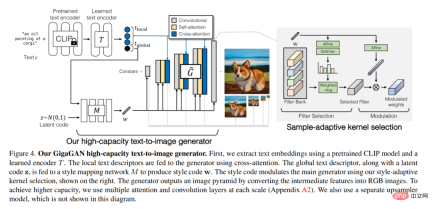

Figure 4 below shows the GigaGAN high-capacity text-to-image generator. First, a pre-trained CLIP model and a learned encoder T extract text embeddings. The local text descriptors are fed to the generator through cross-attention. The global text descriptor, together with the latent code z, is fed into the style mapping network M to produce the style code w. The style code modulates the main generator through the style-adaptive kernel selection proposed in the paper, shown on the right of the figure.
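The cross-attention step, in which image positions attend to the local text descriptors, can be sketched as standard scaled dot-product attention. This is a generic single-head version written in NumPy; the projection matrices and every dimension are hypothetical, and the actual layer in the paper may differ in normalization, head count, and other details.

```python
import numpy as np


def cross_attention(image_feats, text_feats, w_q, w_k, w_v):
    """Image positions (queries) attend to text tokens (keys and values).

    image_feats: (P, d_img)  one row per spatial position
    text_feats:  (T, d_txt)  one row per text token (local descriptors)
    """
    q = image_feats @ w_q                        # (P, d)
    k = text_feats @ w_k                         # (T, d)
    v = text_feats @ w_v                         # (T, d)
    scores = q @ k.T / np.sqrt(q.shape[-1])      # (P, T)
    scores -= scores.max(axis=-1, keepdims=True) # numerical stability
    attn = np.exp(scores)
    attn /= attn.sum(axis=-1, keepdims=True)     # each row sums to 1
    return attn @ v, attn                        # (P, d), (P, T)


# Hypothetical sizes: 16 image positions, 5 text tokens.
rng = np.random.default_rng(0)
P, T, d_img, d_txt, d = 16, 5, 8, 12, 4
out, attn = cross_attention(
    rng.normal(size=(P, d_img)),
    rng.normal(size=(T, d_txt)),
    rng.normal(size=(d_img, d)),
    rng.normal(size=(d_txt, d)),
    rng.normal(size=(d_txt, d)),
)
print(out.shape, attn.shape)
```

Each row of `attn` says how strongly one image position draws on each text token, which is how word-level information reaches specific regions of the image.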

The generator outputs an image pyramid by converting intermediate features into RGB images. To achieve higher capacity, it uses multiple attention and convolutional layers at each scale (Appendix A2 of the paper). The researchers also use a separate upsampler model, which is not shown in this figure.

The discriminator consists of two branches, one for processing the image and one for the text conditioning t_D. The text branch handles text similarly to the generator (Figure 4). The image branch receives an image pyramid and makes independent predictions for each image scale. Furthermore, predictions are made at every subsequent scale of the downsampling layers, making it a multi-scale input, multi-scale output (MS-I/O) discriminator.
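The multi-scale input, multi-scale output structure can be illustrated with a small NumPy sketch. It builds an image pyramid by repeated 2×2 average pooling and enumerates which (input scale, prediction scale) pairs a discriminator of this kind would produce. The five-level pyramid, the pooling choice, and the exact pair indexing are assumptions made for illustration; the article does not specify them.

```python
import numpy as np


def downsample2x(img):
    """Halve height and width by 2x2 average pooling; img is (H, W, C)."""
    h, w, c = img.shape
    return img.reshape(h // 2, 2, w // 2, 2, c).mean(axis=(1, 3))


def image_pyramid(img, levels):
    """Return [full, 1/2, 1/4, ...] with `levels` entries."""
    pyramid = [img]
    for _ in range(levels - 1):
        pyramid.append(downsample2x(pyramid[-1]))
    return pyramid


def ms_io_pairs(levels):
    """(input level, output level) pairs: an image entering at level i
    yields a prediction at level i and at every coarser level after it."""
    return [(i, j) for i in range(levels) for j in range(i, levels)]


# Hypothetical setup: a 64x64 RGB image and a 5-level pyramid.
rng = np.random.default_rng(0)
pyramid = image_pyramid(rng.uniform(size=(64, 64, 3)), levels=5)
sizes = [p.shape[0] for p in pyramid]
pairs = ms_io_pairs(5)
print(sizes, len(pairs))
```

Under these assumptions, a pyramid with L levels gives L(L+1)/2 prediction pairs, so the low-resolution levels receive a training signal from several inputs at once.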

Experimental results

In the paper, the authors report five different experiments.

In the first experiment, they demonstrated the effectiveness of the proposed method by incorporating each technical component one by one.

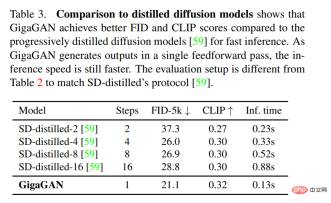

In the second experiment, they tested the model's text-to-image generation ability. The results showed that GigaGAN achieves an FID comparable to that of Stable Diffusion (SD-v1.5) while producing results much faster than diffusion or autoregressive models.

In the third experiment, they compared GigaGAN with distillation-based diffusion models. The results showed that GigaGAN synthesizes higher-quality images faster than distillation-based diffusion models.

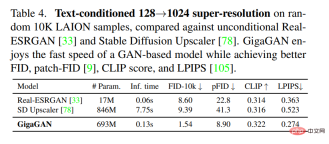

In the fourth experiment, they verified that GigaGAN's upsampler has advantages over other upsamplers on both conditional and unconditional super-resolution tasks.

Finally, they showed that their large-scale GAN model still enjoys the continuous and disentangled latent space operations of GANs, which enables new image editing modes. See Figures 6 and 8 above for illustrations.
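As a rough illustration of what a continuous latent space makes possible, the sketch below linearly interpolates between two vectors. The two 128-dimensional codes are hypothetical stand-ins; no generator is included, and whether the paper interpolates latent codes, style codes, or text embeddings is not specified in this article.

```python
import numpy as np


def lerp(a, b, t):
    """Linear interpolation: returns a at t=0 and b at t=1."""
    return (1.0 - t) * a + t * b


def interpolation_path(start, end, steps):
    """Stack `steps` evenly spaced points on the segment from start to end."""
    ts = np.linspace(0.0, 1.0, steps)
    return np.stack([lerp(start, end, t) for t in ts])


# Two hypothetical 128-dimensional codes, matching the latent size above.
rng = np.random.default_rng(0)
z_a = rng.normal(size=128)
z_b = rng.normal(size=128)
path = interpolation_path(z_a, z_b, steps=5)
print(path.shape)
```

Feeding each row of `path` to a generator would, in a smooth latent space, yield a gradual transition between the two endpoint images.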
