The confidentiality of GPT-4 technical details caused controversy, and OpenAI's chief scientist responded

- WBOY (forwarded)

- 2023-04-12 15:37:03

Early yesterday morning, OpenAI unexpectedly released GPT-4.

This announcement came as a surprise to the technology community. After all, it was generally expected that GPT-4 would be announced at Microsoft’s “The Future of Work with AI” event on Thursday.

Just four months after ChatGPT debuted, it has set a record as the “fastest-growing consumer application in history.” Now that GPT-4 is online, the product's response capabilities have reached a new level.

Once the initial shock wore off, many researchers read the GPT-4 technical report closely and came away disappointed: why are there no technical details?

A release that goes against the founding spirit

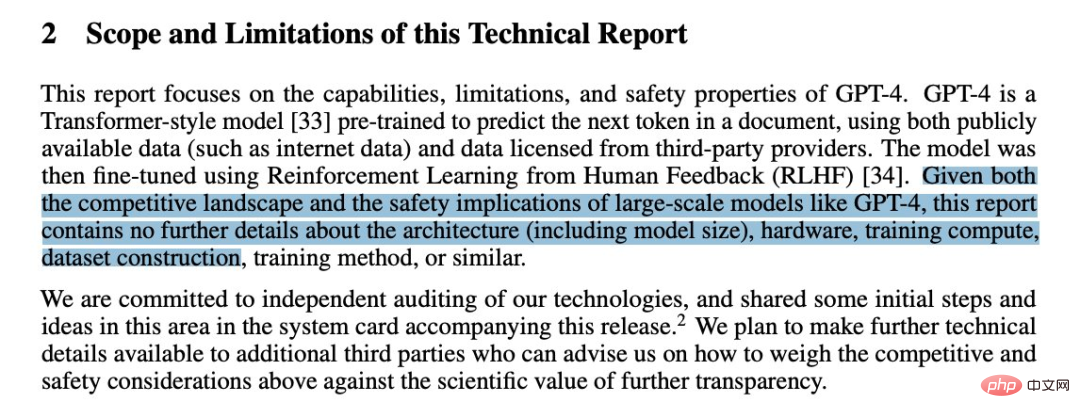

In the announcement, OpenAI shared a large number of GPT-4 benchmark and test results, along with some interesting demonstrations, but provided little information about the data used to train the system, the cost of computing power, or the hardware and methods used to create GPT-4.

For example, one tongue-in-cheek short summary of the GPT-4 paper reads: "We use Python."

Someone even joked: "I read that GPT-4 is based on the Transformer architecture."

Many members of the AI community criticized the decision, noting that it undermines OpenAI's founding ethos as a research-based organization and makes it harder for others to reproduce its work.

Most of the initial reactions to the closed GPT-4 model were negative, but anger seems unlikely to change the decision to stay "closed source":

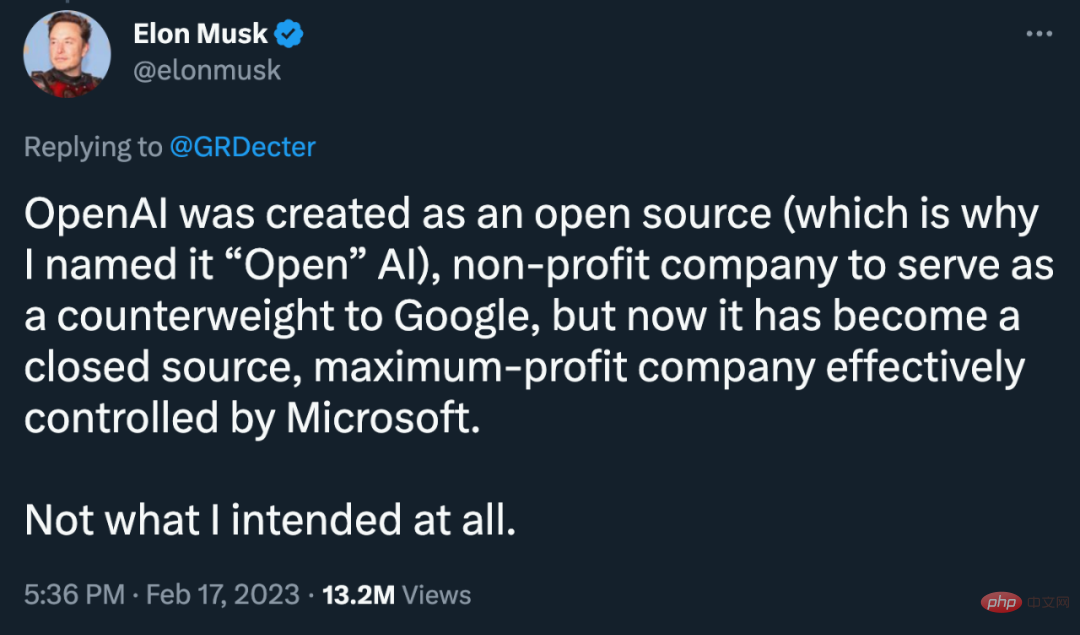

In fact, criticism of OpenAI for not being open source has been going on for some time. Even Musk, a founding team member of OpenAI, has publicly questioned its "deviation from the original intention":

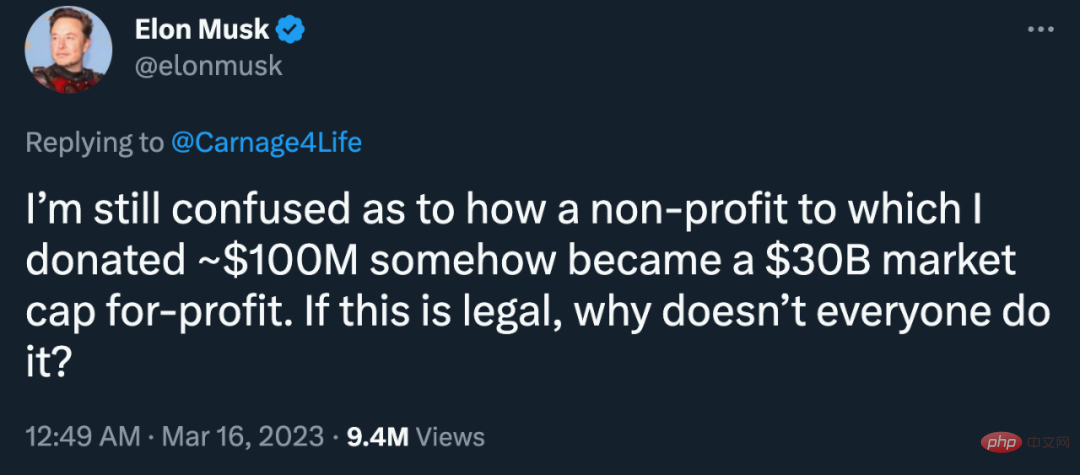

On this matter, Musk is still confused: "I don't understand how a non-profit organization with nearly US$100 million invested in it turned into a commercial company with a market value of US$30 billion."

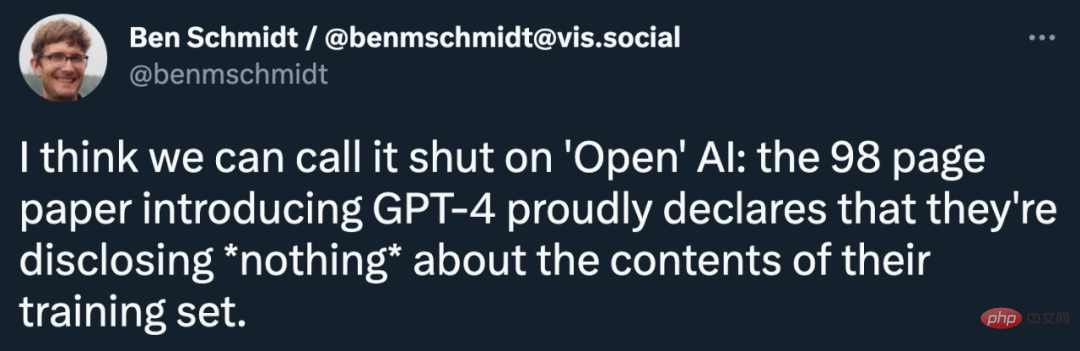

Ben Schmidt, vice president of information design at Nomic AI, said: "I think we can stop calling it 'Open' - the 98-page paper introducing GPT-4 proudly declares that they don't disclose any information about the contents of the training set."

Some people also believe that another reason OpenAI hides the details of GPT-4 is legal liability. AI language models are trained on huge text data sets, and many models (including the early GPT systems) scrape information from the web, where some sources may include copyrighted material. Several AI companies are currently being sued by independent artists and the photo site Getty Images.

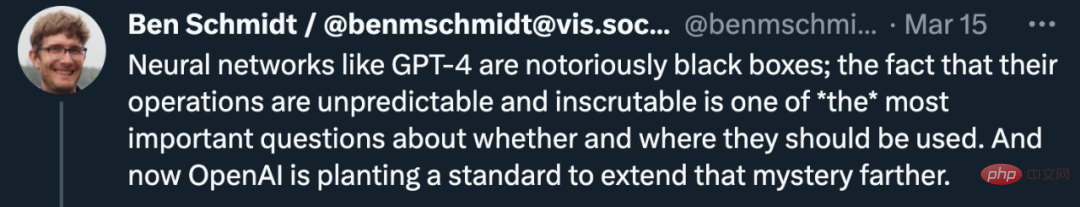

More importantly, some say, the secrecy makes it harder to develop safeguards against the threats posed by GPT-4. Ben Schmidt also believes that without being able to see the data GPT-4 was trained on, it is difficult to know where the system can be used safely or to propose fixes.

"It is well known that neural networks like GPT-4 are black boxes. Their behavior is unpredictable and hard to understand, which is one of the most important questions about whether and where they should be used. Now OpenAI is gradually establishing a norm that further deepens this mystery," Ben Schmidt said.

OpenAI Chief Scientist: It would be unwise to open source GPT-4

OpenAI's chief scientist and co-founder Ilya Sutskever responded to the controversy, saying that OpenAI does not share more detailed information on GPT-4 because of "fear of competition and concern about security":

"From the competitive landscape, the competition out there is very fierce. GPT-4 was not easy to develop. It took almost all of OpenAI working together for a long time to produce this thing, and there are many companies who want to do the same thing."

"The security aspect is not yet as prominent as the competition aspect, but that will change. These models are very powerful, and they are becoming more and more powerful. At some point, it would be fairly easy to do massive damage with these models if someone wanted to. As these abilities become more advanced, it makes sense not to reveal them."

When asked why OpenAI changed the way it shares research results, Sutskever responded: "Frankly, we were wrong. If you believe, as we do, that at some point AI, or AGI, will become extremely, unbelievably powerful, then there's no point in open source. It's a bad idea, and I fully believe that within a few years it will be clear to everyone that open source AI is unwise."

William Falcon, CEO of Lightning AI and creator of the open source tool PyTorch Lightning, told VentureBeat that he can understand this decision from a business perspective: "As a company, you have every right to do this."

But he also said that OpenAI’s move has set a “bad template” for the wider community and may have harmful effects.

As for why OpenAI does not share its training data, Sutskever's explanation is: "My view on this is that training data is technology. The reason we do not disclose training data is pretty much the same as the reason we do not disclose the number of parameters." When asked whether OpenAI could explicitly state that its training data does not contain pirated material, Sutskever did not respond.

Sutskever agrees with OpenAI’s critics that open-source models facilitate the development of safeguards. "If more people study these models, we'll know more, and that'll be even better," he said. For these reasons, OpenAI provides certain academic and research institutions with access to its systems.

What can we expect next?

The heated discussion around GPT-4 is expected to continue for a while, so people may overlook some other developments.

For example, amid the overwhelming discussion yesterday, Google’s release seemed quiet. At present, generative AI has been fully integrated into Google Workspace, and functions such as generating pictures, presentations, emails, documents, etc. have been updated. As you can imagine, this will be a huge productivity improvement.

There is also a lot to look forward to: Microsoft CEO Satya Nadella will soon give a speech in person to introduce more cooperation between Microsoft and OpenAI, such as an Office suite based on GPT-4.

Source: https://www.theinformation.com/articles/microsoft-rations-access-to-ai-hardware-for-internal-teams

Let’s wait and see.