
Study shows large language models have problems with logical reasoning

- WBOY

- 2023-04-12 15:19:03

Translator: Li Rui

Reviewer: Sun Shujuan

Even before "sentient" chatbots became a hot topic, large language models (LLMs) had been generating both excitement and concern. In recent years, LLMs, deep learning models trained on vast amounts of text, have performed well on several benchmarks designed to measure language understanding.

Large language models such as GPT-3 and LaMDA manage to maintain coherence over long stretches of text. They seem knowledgeable about many topics and stay consistent throughout lengthy conversations. LLMs have become so convincing that some people associate them with personhood and higher forms of intelligence.

But can LLMs perform logical reasoning like humans? According to a research paper by UCLA scientists, transformers, the deep learning architecture used in LLMs, do not learn to emulate reasoning functions. Instead, they find clever ways to learn the statistical features inherent in reasoning problems.

The researchers tested BERT, a popular transformer architecture, on a confined problem space. Their results show that BERT can accurately answer reasoning problems on in-distribution examples from the training space, but cannot generalize to examples drawn from other distributions over the same problem space.

These tests highlight some of the shortcomings of deep neural networks and of the benchmarks used to evaluate them.

1. How do we measure logical reasoning in artificial intelligence?

There are several benchmarks for artificial intelligence systems that target natural language processing and understanding, such as GLUE, SuperGLUE, SNLI, and SQuAD. As transformer models have grown larger and been trained on larger datasets, they have been able to improve incrementally on these benchmarks.

It’s worth noting that the performance of AI systems on these benchmarks is often compared to human intelligence. Human performance on these benchmarks is closely related to common sense and logical reasoning abilities. But it's unclear whether large language models improve because they gain logical reasoning capabilities or because they are exposed to large amounts of text.

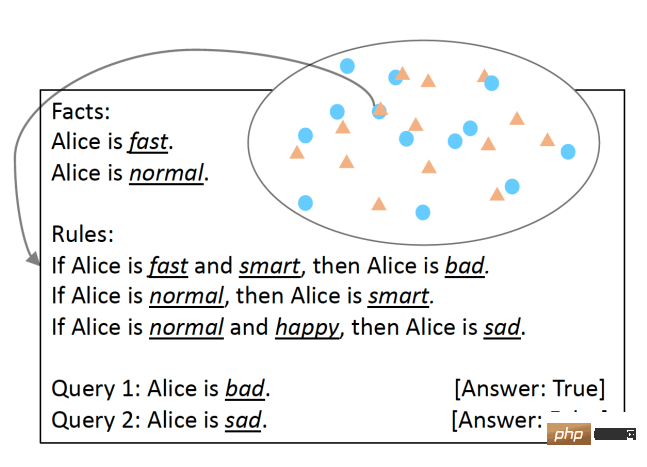

To test this, UCLA researchers developed SimpleLogic, a class of logical reasoning questions based on propositional logic. To ensure that the language model's reasoning capabilities were rigorously tested, the researchers eliminated language differences by using template language structures. SimpleLogic problems consist of a set of facts, rules, queries, and labels. Facts are predicates known to be "true". Rules are conditions, defined as terms. A query is a question that a machine learning model must respond to. The label is the answer to the query, that is, "true" or "false". SimpleLogic questions are compiled into continuous text strings containing the signals and delimiters expected by the language model during training and inference.

Questions asked in SimpleLogic format One of the characteristics of SimpleLogic is that its questions are self-contained and require no prior knowledge. This is especially important because, as many scientists say, when humans speak, they ignore shared knowledge. This is why language models often fall into a trap when asked questions about basic world knowledge that everyone knows. In contrast, SimpleLogic provides developers with everything they need to solve their problems. Therefore, any developer looking at a problem posed by the SimpleLogic format should be able to infer its rules and be able to handle new examples regardless of their background knowledge.
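To make the problem structure concrete, here is a minimal Python sketch of a SimpleLogic-style problem (facts, rules, query, label) and its compilation into a single text string. The class names `Rule` and `Problem`, the single-letter predicates, and the sentence template are hypothetical choices for illustration; the researchers' actual templates and delimiters may differ. Only `[CLS]` and `[SEP]` are real BERT special tokens.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    premises: tuple[str, ...]  # predicates that must all be true
    conclusion: str            # predicate that then becomes true


@dataclass(frozen=True)
class Problem:
    facts: tuple[str, ...]        # predicates known to be true
    rules: tuple[Rule, ...]       # conditions, written as clauses
    query: str                    # predicate the model must judge
    label: Optional[bool] = None  # the answer: True or False


def to_text(problem: Problem) -> str:
    """Compile a problem into one string using a fixed, hypothetical template."""
    facts = " ".join(f"{f} is true." for f in problem.facts)
    rules = " ".join(
        f"If {' and '.join(r.premises)}, then {r.conclusion}." for r in problem.rules
    )
    return f"[CLS] {facts} {rules} [SEP] Is {problem.query} true? [SEP]"


example = Problem(
    facts=("a",),
    rules=(Rule(("a",), "b"), Rule(("b", "c"), "d")),
    query="d",
    label=False,  # d needs c, which is never established
)
print(to_text(example))
# [CLS] a is true. If a, then b. If b and c, then d. [SEP] Is d true? [SEP]
```

Because every problem passes through the same template, the only thing that varies between examples is the logic itself, which is the point of removing language variance.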

2. Statistical features and logical inference

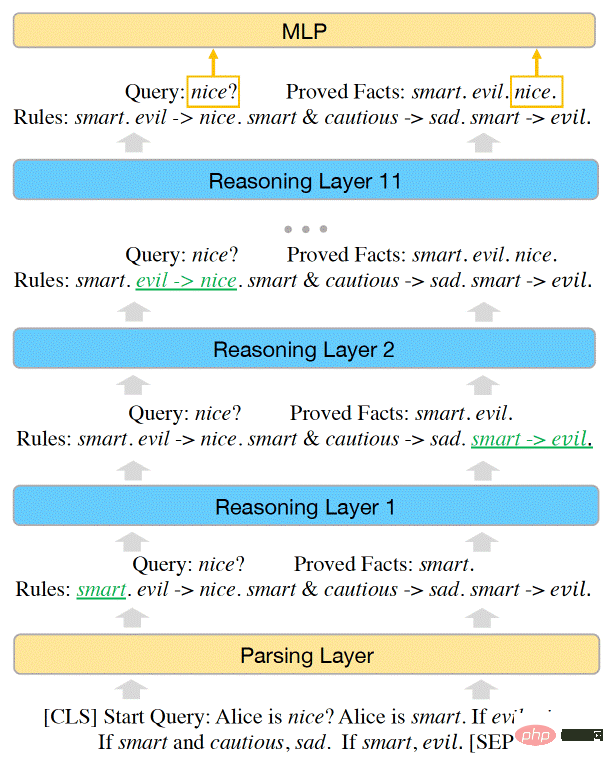

The researchers proved that the SimpleLogic problem space can be represented by an inference function. They further showed that BERT is powerful enough to solve every problem in SimpleLogic: they could manually set the model's parameters so that it represents the inference function.
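One standard way to compute the label of a propositional problem like this is forward chaining: repeatedly fire any rule whose premises are all known until nothing new can be derived, then check whether the query was derived. The sketch below assumes rules are definite clauses written as `(premises, conclusion)` pairs and that any query that cannot be derived is labeled false. It illustrates the kind of inference function discussed here; it is not code from the paper.

```python
def forward_chain(facts, rules):
    """Return every predicate derivable from `facts` using `rules`.

    `rules` is a list of (premises, conclusion) pairs, read as
    "if all premises are true, then the conclusion is true".
    """
    known = set(facts)
    changed = True
    while changed:  # keep sweeping until a full pass derives nothing new
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and all(p in known for p in premises):
                known.add(conclusion)
                changed = True
    return known


def infer(facts, rules, query):
    """Label a query: True if it is derivable, False otherwise (closed world)."""
    return query in forward_chain(facts, rules)


rules = [(("a",), "b"), (("b", "c"), "d"), (("b",), "c")]
print(infer(["a"], rules, "d"))      # True: a -> b -> c, then b and c -> d
print(infer(["a"], rules[:2], "d"))  # False: c can never be derived
```

A model that has truly learned the inference function should behave like `infer` on any problem from the space, whatever distribution the problem was sampled from.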

However, when they trained BERT on a dataset of SimpleLogic examples, the model could not learn the inference function on its own. It achieved near-perfect accuracy on one data distribution but did not generalize to other distributions within the same problem space. This was the case even though the training dataset covered the entire problem space and all distributions came from the same inference function.

The BERT transformer model has enough capacity to represent SimpleLogic's inference function

(Note: This is different from the out-of-distribution (OOD) generalization challenge, which applies to open-space problems. When a model cannot generalize to OOD data, its performance drops significantly on data that lies outside the distribution of its training set.)

The researchers wrote: "Upon further investigation, we provide an explanation for this paradox: the model attaining high accuracy only on in-distribution test examples has not learned to reason. In fact, the model has learned to use statistical features in logical reasoning problems to make predictions, rather than to emulate the correct reasoning function."

This finding highlights an important challenge in using deep learning for language tasks. Neural networks are very good at discovering and fitting statistical features. In some applications this can be very useful. For example, in sentiment analysis, there are strong correlations between certain words and sentiment categories.

However, for logical reasoning tasks, even if statistical features exist, the model should try to find and learn the underlying reasoning functions.
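The gap between a statistical shortcut and the real inference function can be shown with a toy example. The sketch below uses a hypothetical shortcut that ignores the rules and predicts "true" whenever a problem lists at least two facts. On one hand-picked distribution the shortcut matches forward chaining perfectly; on another distribution drawn from the same problem space it fails on every example, while the real inference function stays correct. The feature, threshold, and examples are invented for illustration and are not the statistical features the researchers identified.

```python
def forward_chain(facts, rules):
    """Every predicate derivable from facts via (premises, conclusion) rules."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and all(p in known for p in premises):
                known.add(conclusion)
                changed = True
    return known


def reasoner(facts, rules, query):
    # Emulates the inference function itself.
    return query in forward_chain(facts, rules)


def shortcut(facts, rules, query, threshold=2):
    # Hypothetical statistical shortcut: ignores the rules and the query
    # and guesses "true" whenever the problem lists many facts.
    return len(facts) >= threshold


def accuracy(predict, problems):
    """Fraction of problems where `predict` matches the true label."""
    hits = sum(predict(f, r, q) == reasoner(f, r, q) for f, r, q in problems)
    return hits / len(problems)


RULES = [(("a",), "b"), (("b", "c"), "d")]

# Distribution A: the number of facts happens to track the label.
dist_a = [
    (["a", "c"], RULES, "d"),  # 2 facts, provable
    (["a"], RULES, "d"),       # 1 fact, not provable
]
# Distribution B: same problem space, but the correlation is reversed.
dist_b = [
    (["a", "e"], RULES, "d"),               # 2 facts, not provable
    (["c"], [(("c",), "a")] + RULES, "d"),  # 1 fact, provable
]

for name, dist in (("A", dist_a), ("B", dist_b)):
    print(name, accuracy(shortcut, dist), accuracy(reasoner, dist))
# A 1.0 1.0
# B 0.0 1.0
```

Judged only on distribution A, the shortcut is indistinguishable from real reasoning, which is the trap the researchers describe.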

The researchers wrote: "Caution should be taken when we seek to train neural models end-to-end to solve natural language processing (NLP) tasks that involve both logical reasoning and prior knowledge and that are presented with language variance." They emphasized that the challenges posed by SimpleLogic become even more severe in the real world, where much of the information that LLMs need is simply not contained in the data.

The researchers observed that when they removed a statistical feature from the training data set, the performance of the language model improved on other distributions of the same problem space. The problem, however, is that discovering and removing multiple statistical features is easier said than done. As the researchers point out in their paper, "Such statistical features can be numerous and extremely complex, making them difficult to remove from training data."
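For a single, known feature, one simple removal strategy is to rebalance the training set so that the feature carries no information about the label: within each feature value, keep equal numbers of true and false examples. The sketch below assumes the feature is the number of rules in a problem; the function names and the downsampling approach are illustrative assumptions, not necessarily the researchers' procedure. It handles one feature at a time, which is exactly why this approach does not scale to features that are numerous and complex.

```python
import random
from collections import defaultdict


def label_rate_by_feature(examples, feature):
    """Fraction of examples labeled True for each value of `feature`."""
    counts = defaultdict(lambda: [0, 0])  # value -> [num_true, num_total]
    for problem, label in examples:
        entry = counts[feature(problem)]
        entry[0] += int(label)
        entry[1] += 1
    return {value: t / n for value, (t, n) in sorted(counts.items())}


def balance_on_feature(examples, feature, seed=0):
    """Downsample so every feature value has as many True as False labels.

    Feature values that only ever appear with one label are dropped,
    because they cannot be balanced.
    """
    groups = defaultdict(lambda: {True: [], False: []})
    for problem, label in examples:
        groups[feature(problem)][label].append((problem, label))
    rng = random.Random(seed)
    balanced = []
    for buckets in groups.values():
        n = min(len(buckets[True]), len(buckets[False]))
        for label in (True, False):
            balanced.extend(rng.sample(buckets[label], n))
    return balanced


def num_rules(problem):
    # Hypothetical feature: how many rules the problem contains.
    return len(problem["rules"])


# Hypothetical skewed training set: problems with more rules are more often True.
examples = (
    [({"rules": ["r1"]}, False)] * 3
    + [({"rules": ["r1"]}, True)]
    + [({"rules": ["r1", "r2", "r3"]}, True)] * 3
    + [({"rules": ["r1", "r2", "r3"]}, False)]
)

print(label_rate_by_feature(examples, num_rules))  # {1: 0.25, 3: 0.75}
balanced = balance_on_feature(examples, num_rules)
print(label_rate_by_feature(balanced, num_rules))  # {1: 0.5, 3: 0.5}
```

After rebalancing, rule count no longer predicts the label, but any other correlated feature in the data is left untouched.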

3. Inference in Deep Learning

Unfortunately, the logical reasoning problem does not go away as language models grow larger; it just becomes hidden in huge architectures and very large training corpora. LLMs can recite facts and stitch sentences together very well, but for logical reasoning they still rely on statistical features, which is not a solid foundation. Moreover, there is no indication that adding layers, parameters, and attention heads to transformers will close the logical reasoning gap.

This paper is consistent with other work showing the limitations of neural networks in learning logical rules, such as those of the Game of Life, or in abstract reasoning from visual data. The paper highlights one of the main challenges facing current language models. As the UCLA researchers point out, "On the one hand, when a model is trained to learn a task from data, it always tends to learn statistical patterns, which inherently exist in reasoning examples; on the other hand, however, logical rules never rely on statistical patterns to conduct reasoning. Since it is difficult to construct a logical reasoning dataset that contains no statistical features, learning to reason from data is difficult."

Original link: https://bdtechtalks.com/2022/06/27/large-language-models-logical-reasoning/
