WeChat's large-scale recommendation system training practice based on PyTorch

- Reposted by 王林

- 2023-04-12 12:13:02

This article introduces WeChat's practice of training large-scale recommendation systems with PyTorch. Unlike other deep learning fields, recommendation systems still mostly use TensorFlow as the training framework, which many developers complain about. There have been some attempts to use PyTorch for recommendation training, but they were small in scale and had not been validated in real business, which made it hard to win over early adopters.

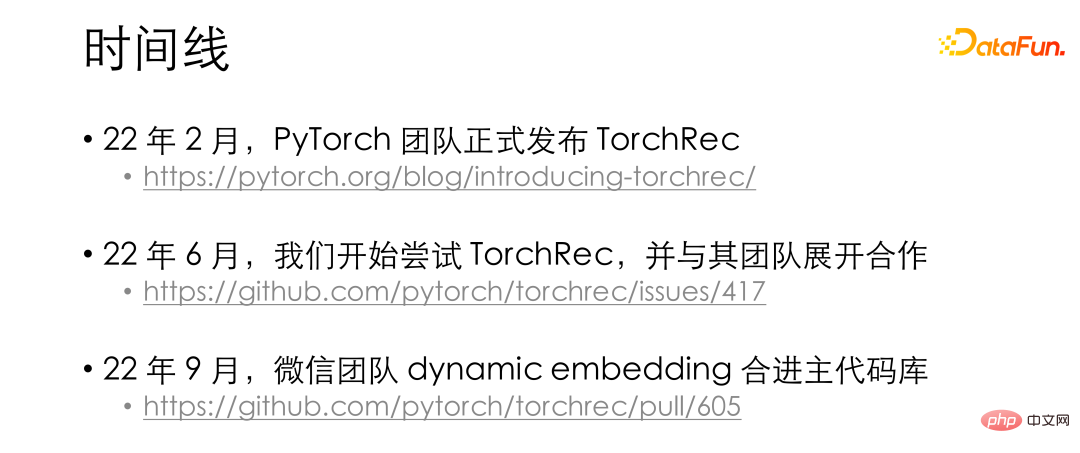

In February 2022, the PyTorch team released TorchRec, its official recommendation library. Our team began trying TorchRec in internal business in May and started working with the TorchRec team. Over several months of use, we saw many of TorchRec's strengths, but we also found that it still falls short for very large models. To address these gaps, we designed extensions. In September 2022, our extension, dynamic embedding, was officially merged into the main branch of TorchRec, and we continue to optimize it together with the official team.

## 1. What TorchRec can bring to us

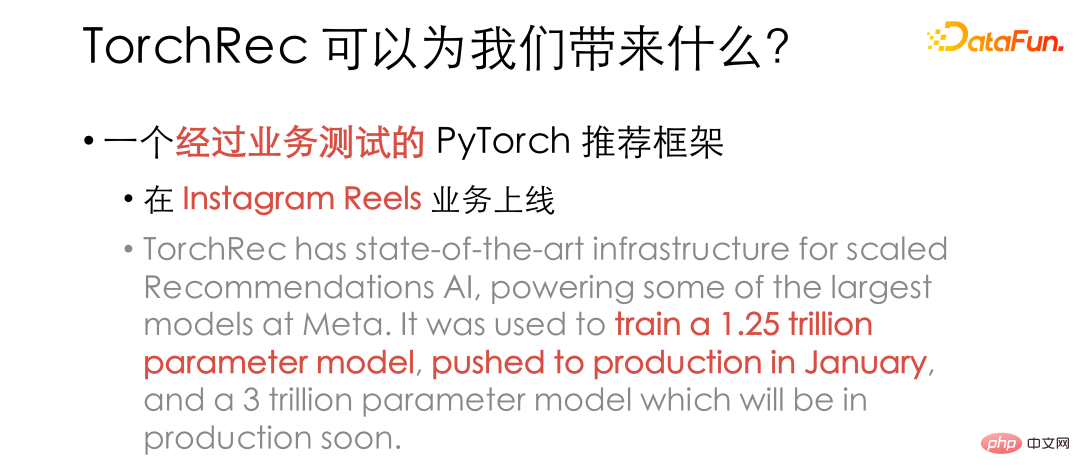

Let's first look at what TorchRec brings us. Recommendation systems are often tied directly to a company's cash flow, so trial and error is very costly, and what everyone needs is a framework that has been proven in production. This is why earlier PyTorch-based recommendation frameworks were not widely adopted. TorchRec is the official recommendation library, and by the time it launched in early 2022, Meta had already used it to train and deploy a 125-billion-parameter model for Instagram Reels, which makes it a production-tested PyTorch framework. With the backing of a business as large as Instagram, we have more confidence and can finally weigh the advantages of a PyTorch-based recommendation framework on their merits.

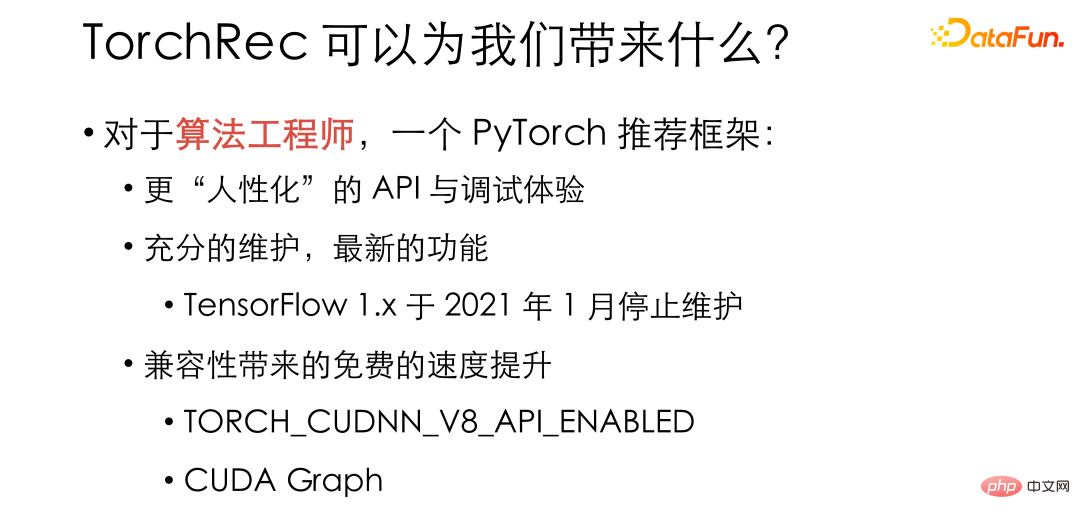

TorchRec offers different benefits to different members of the team. For algorithm engineers, who make up the vast majority of the team, a PyTorch recommendation framework finally provides the friendlier dynamic-graph and debugging experience that CV and NLP engineers already enjoy.

PyTorch also has excellent compatibility: a model written for PyTorch 1.8 can run on the latest version, 1.13, without changing a line of code. This lets algorithm engineers upgrade the framework with confidence and benefit from the latest features and better performance. By contrast, recommendation frameworks based on TensorFlow are often stuck on one particular TensorFlow version. For example, many teams may still use internal frameworks based on TensorFlow 1.x. Maintenance of TensorFlow 1.x stopped in January 2021, which means that for the past two years newly found bugs have gone unfixed and new features have not been supported. Problems encountered in use can only be fixed by the internal maintenance team, which adds cost. Timely framework upgrades also bring speed improvements for free. Newer PyTorch versions usually support newer CUDA versions and new features such as CUDA Graphs, which can further speed up training.

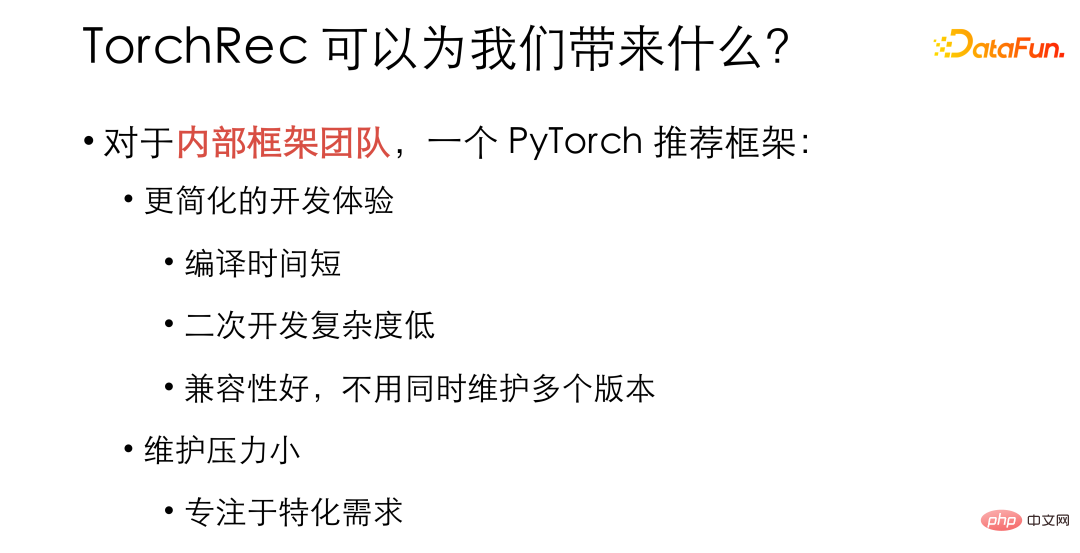

Besides algorithm engineers, the framework team is also an important part of a recommendation team. After choosing an open-source framework, a company's framework team does secondary development on top of it to meet internal needs. For them, a PyTorch recommendation framework means a much simpler development experience. Many traditional TensorFlow recommendation frameworks imitate TF Serving and build an extension on top of the C++ session. This was considered a very advanced design at the time, but it means the whole of TensorFlow has to be recompiled just to change one line of code, which takes a very long time. The team also has to deal with chores such as downloading external dependencies from inside the intranet, so the development experience is poor.

You do not run into these problems with PyTorch, because PyTorch is built around the Python philosophy and wants everyone to be able to extend it freely. For secondary development, we only need to wrap our code with a mature binding library such as pybind11, package it as a shared library, and load it. Compilation is naturally much faster, and the learning cost is much lower.

As mentioned earlier, PyTorch has very good backward compatibility, so the maintenance team does not have to maintain multiple versions. Once common problems have official solutions, everyone can focus on specialized needs, and team efficiency improves significantly.

These are all advantages TorchRec has simply by being a PyTorch recommendation framework. What pleases us most is that the TorchRec team did not stop there. They studied the characteristics of existing recommendation models and hardware and added many new features to the framework, which gives TorchRec a clear performance advantage over traditional recommendation frameworks. Below I introduce a few of them: GPU embedding, TorchRec's highly optimized GPU kernels, and TorchRec's ability to shard embeddings according to network communication.

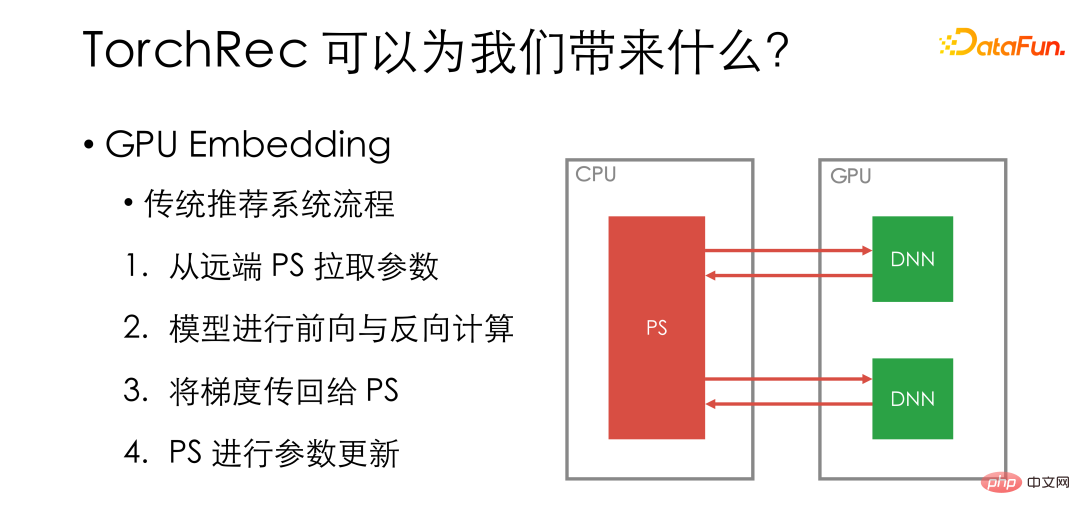

The first is GPU embedding. Let's first review how a traditional recommendation system trains on GPUs. The dense model sits on the GPU worker, and the embeddings are stored on a remote parameter server (PS). Each iteration step first pulls parameters from the remote PS, then runs the model's forward and backward passes on the GPU, sends the gradients back to the PS, and updates the parameters on the PS.

In the figure, green marks operations performed on the GPU, and red marks operations performed over the network or on the CPU. Although the GPU is the most expensive part of the system, many operations do not run on it.

The traditional process does not make full use of the GPU. At the same time, from a hardware perspective, the memory of a single GPU keeps growing, and dense models alone come nowhere near filling it. With NVIDIA's continued optimization, NVLink and GPUDirect RDMA have also made communication between cards faster and faster.
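The iteration described above can be sketched in plain Python. This is a minimal illustration, not code from any real framework: `ParameterServer`, `train_step`, the toy squared-error loss, and the plain SGD update are all assumptions made for the example.

```python
class ParameterServer:
    """Stand-in for a remote CPU parameter server holding the embedding table."""

    def __init__(self, dim, lr):
        self.dim = dim
        self.lr = lr
        self.table = {}  # feature ID -> embedding row

    def pull(self, ids):
        # Network + CPU: send copies of the requested rows to the worker.
        return {i: list(self.table.setdefault(i, [0.0] * self.dim)) for i in ids}

    def push(self, grads):
        # Network + CPU: apply a plain SGD update on the server side.
        for i, grad in grads.items():
            row = self.table[i]
            for k in range(self.dim):
                row[k] -= self.lr * grad[k]


def train_step(ps, ids, target):
    """One iteration; `ids` must contain unique feature IDs."""
    rows = ps.pull(ids)                        # 1. pull parameters from the PS
    pred = sum(sum(rows[i]) for i in ids)      # 2. forward pass (on the GPU)
    err = pred - target
    grads = {i: [err] * ps.dim for i in ids}   # 3. backward pass (on the GPU)
    ps.push(grads)                             # 4. send gradients, update on the PS
    return 0.5 * err * err


ps = ParameterServer(dim=2, lr=0.1)
losses = [train_step(ps, ids=[3, 7], target=1.0) for _ in range(5)]
```

Only steps 2 and 3 would run on the GPU; steps 1 and 4 cross the network and run on the CPU in every single iteration, which is the cost GPU embedding removes.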

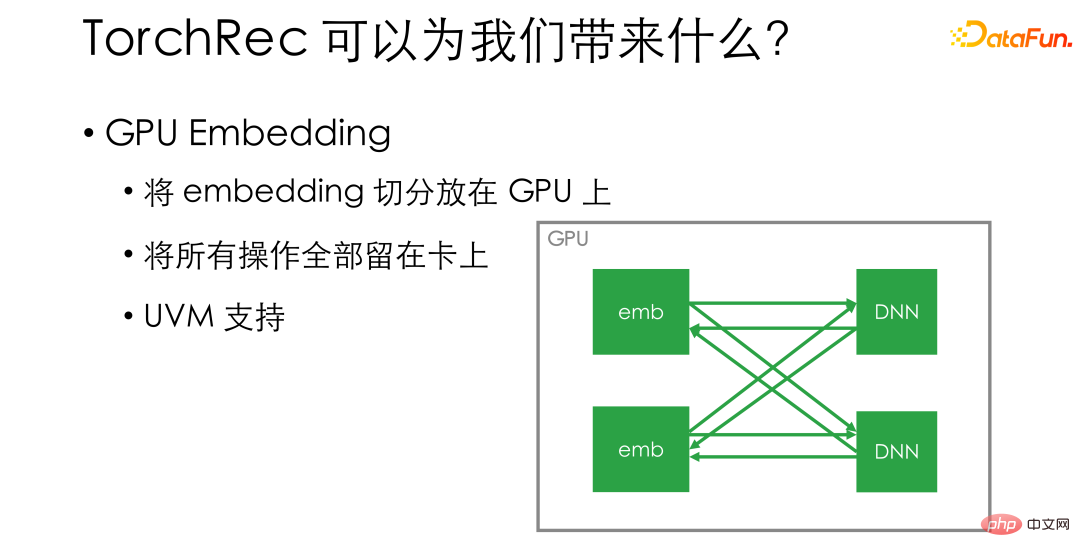

GPU embedding is a very simple solution. It splits the embedding and places the pieces directly on the GPUs. For example, with 8 cards in a single machine, the embedding is split into 8 parts and each part is placed on one card, so that all operations stay on the cards. GPU utilization improves significantly, and training becomes dramatically faster. If you are worried about running out of GPU memory, TorchRec also supports UVM, which reserves part of host memory in advance as a supplement to GPU memory and so increases the embedding size that fits in a single machine.

Besides GPU embedding, TorchRec also implements highly optimized GPU kernels that take full advantage of the latest hardware and CUDA features.

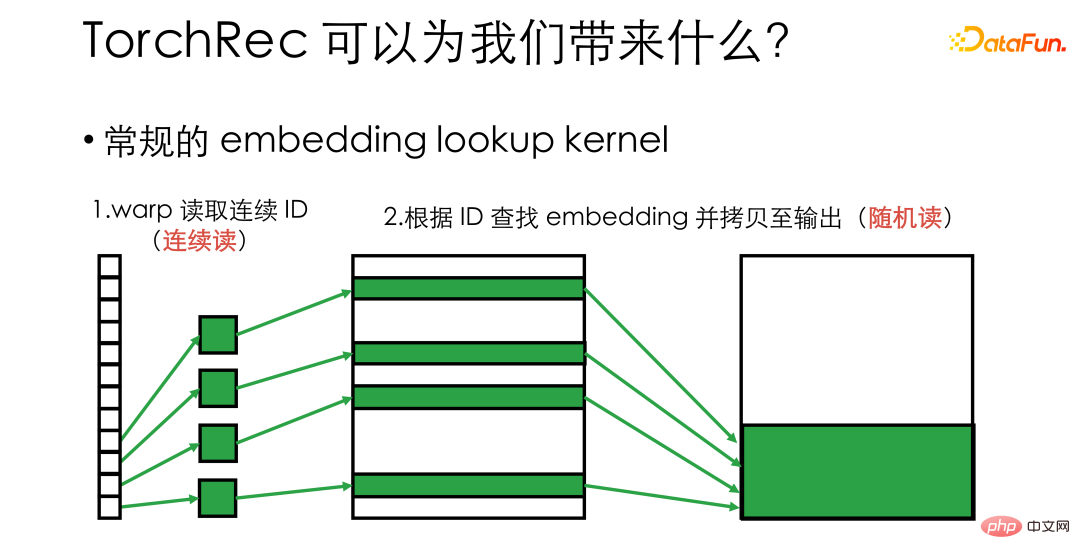

For example, suppose you want to implement an embedding lookup kernel, which fetches the embedding vectors corresponding to a batch of IDs from a large embedding table. In a common implementation, each GPU thread is assigned one ID and looks up the corresponding embedding on its own. But the GPU schedules threads in warps, and the 32 threads in a warp access memory together. So although the IDs themselves are read from contiguous memory, the subsequent copies turn into random reads and writes. Random access cannot make full use of memory bandwidth, so the kernel does not run efficiently enough.

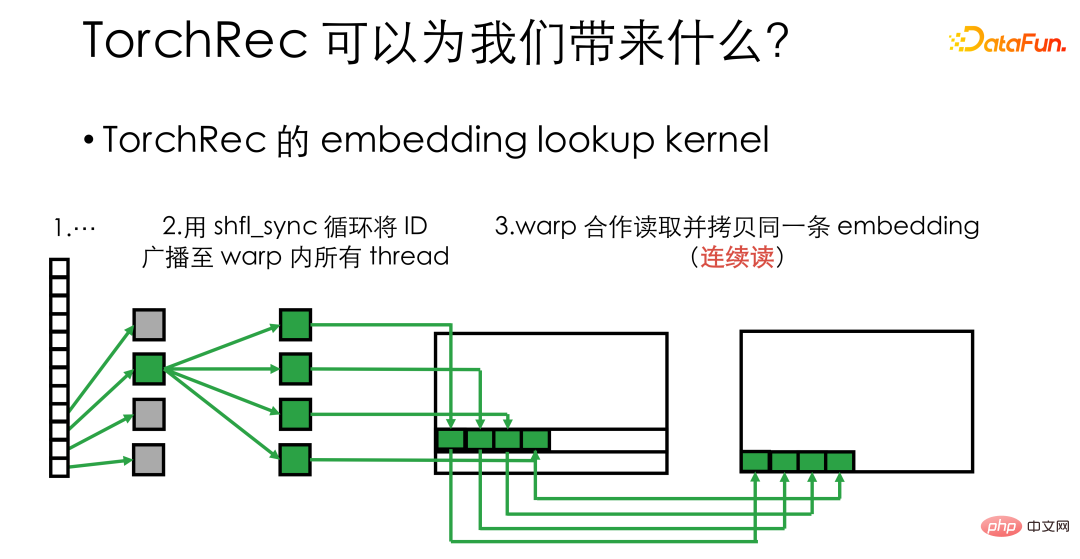

TorchRec uses warp primitives such as shuffle_sync to broadcast an ID to all threads in the warp, so that the 32 threads of a warp process the same embedding at the same time. Memory reads and writes become contiguous, which significantly improves memory bandwidth utilization and makes the kernel several times faster.
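To see why the access pattern matters, the two schemes can be compared with a simplified model in plain Python. This is not the FBGEMM kernel: it only counts how many 128-byte memory segments each warp-wide load touches, and the segment size, the float32 rows, and the function names are assumptions made for illustration. Caches and vectorized loads are ignored.

```python
WARP_SIZE = 32       # threads per warp
SEGMENT_FLOATS = 32  # assumed: one 128-byte memory segment holds 32 float32 values


def segments_one_thread_per_id(ids, dim):
    """Thread t copies row ids[t] by itself, one element per step."""
    touched = 0
    for j in range(dim):
        # At step j, every thread reads element j of a different row.
        addresses = [row * dim + j for row in ids]
        touched += len({a // SEGMENT_FLOATS for a in addresses})
    return touched


def segments_one_warp_per_id(ids, dim):
    """The ID is broadcast to the warp; lane t reads element base + t of that row."""
    touched = 0
    for row in ids:
        for base in range(0, dim, WARP_SIZE):
            lanes = min(WARP_SIZE, dim - base)
            addresses = [row * dim + base + lane for lane in range(lanes)]
            touched += len({a // SEGMENT_FLOATS for a in addresses})
    return touched


ids = [7 * t + 3 for t in range(WARP_SIZE)]  # 32 scattered, distinct row IDs
naive = segments_one_thread_per_id(ids, dim=128)
broadcast = segments_one_warp_per_id(ids, dim=128)
```

For this input the per-thread scheme touches 4096 segments while the broadcast scheme touches 128, because each warp-wide load in the second scheme falls inside a single contiguous segment.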

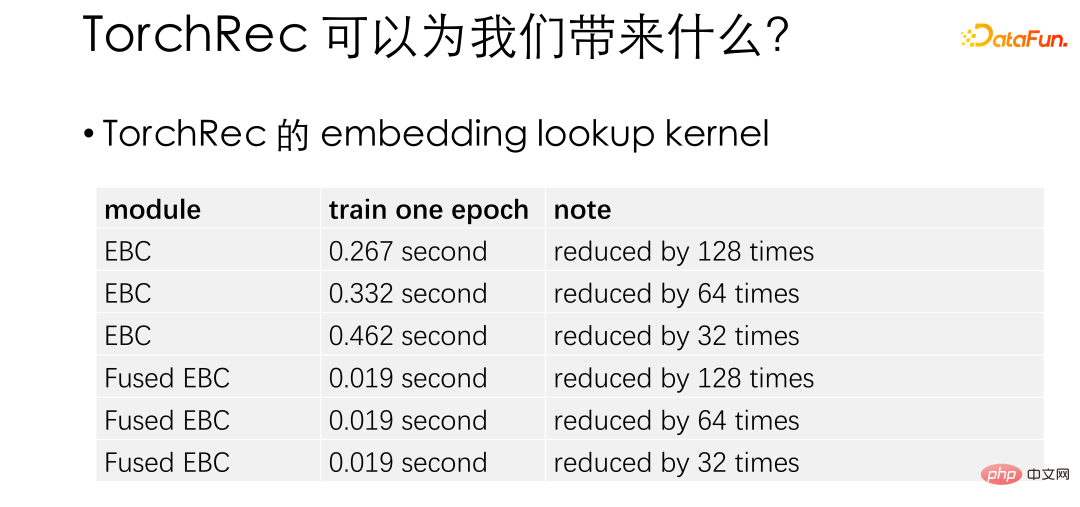

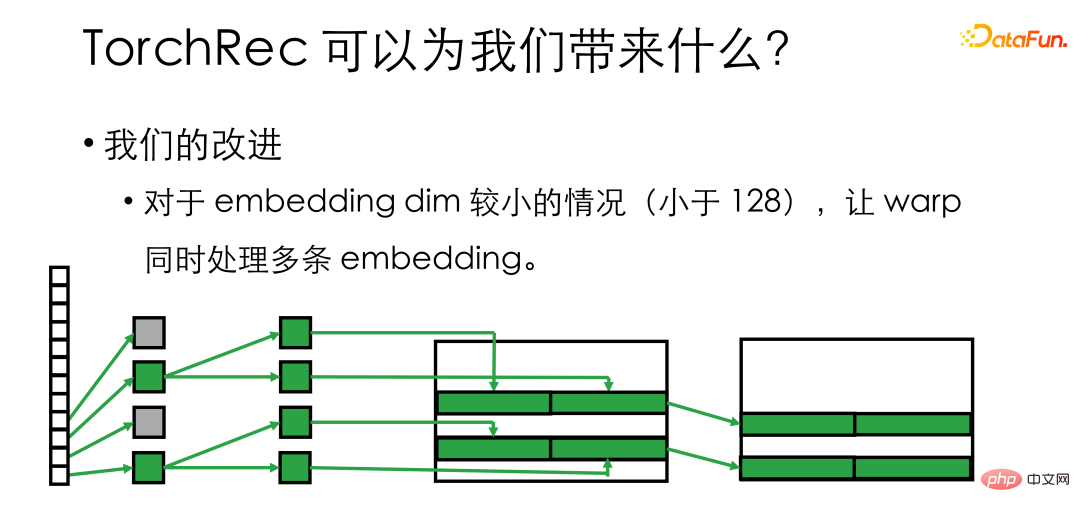

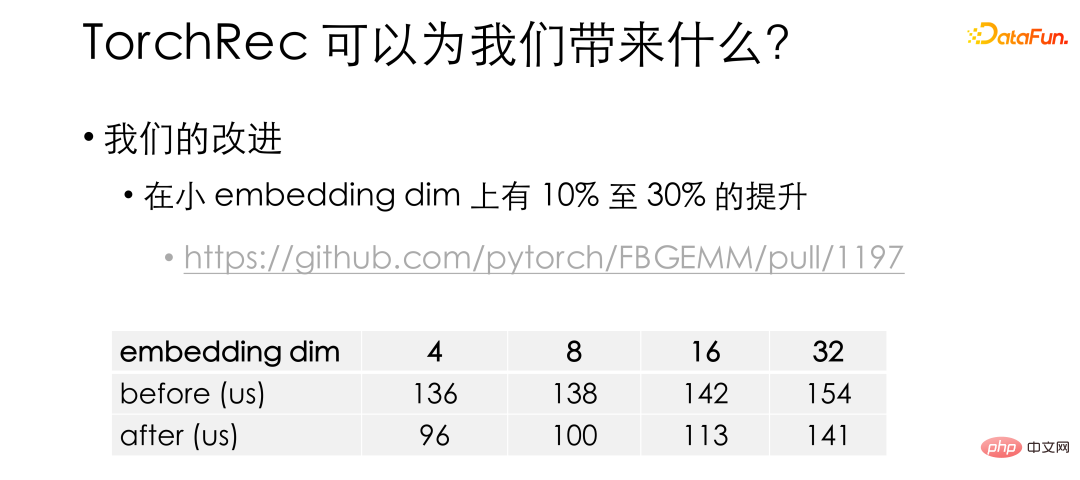

This table shows the official benchmark of embedding lookup performance, where Fused EBC is the optimized kernel. Under the different settings, TorchRec is dozens of times faster than native PyTorch. Building on TorchRec, we found that when the embedding dimension is relatively small (less than 128), half or more of the threads in a warp may sit idle, so we further grouped the threads within a warp and let the groups process multiple embeddings at the same time.

With our improvement, the kernels for small embedding dimensions became another 10% to 30% faster. This optimization has also been merged into the official repo. Note that TorchRec's kernels live in the FBGEMM library; interested readers can take a look.

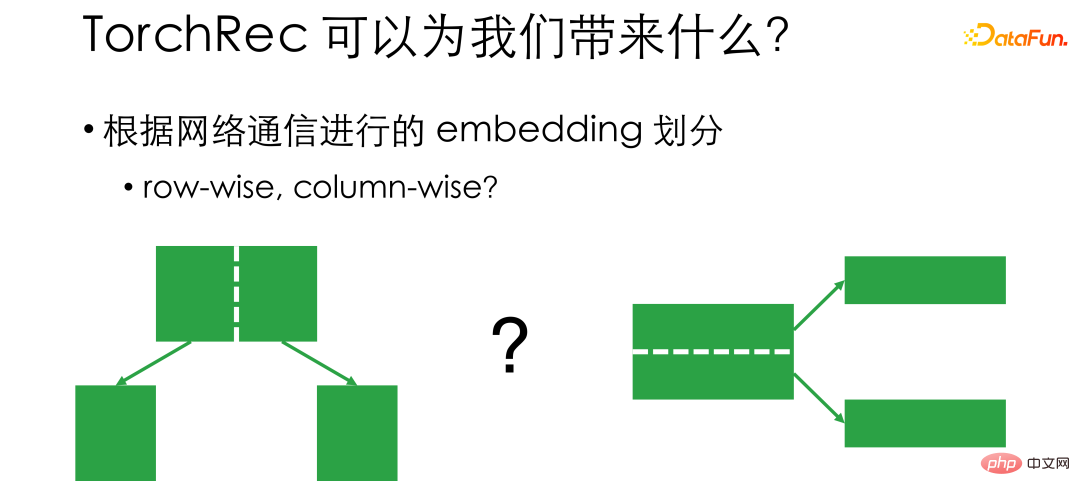

Finally, let me introduce TorchRec's embedding sharding mechanism. As mentioned earlier, GPU embedding splits the embedding and places the pieces on the cards, so how to split it becomes a question. Traditionally there are two approaches, row-wise and column-wise. Row-wise means that with 20,000 features, for example, IDs 0 to 9,999 go on card 1 and IDs 10,000 to 19,999 go on card 2. During training, an ID that belongs to card 1 is fetched from card 1, and an ID that belongs to card 2 is fetched from card 2. The problem with row-wise sharding is that we do not know in advance whether the first 10,000 IDs receive far more or far less traffic than the last 10,000, so communication can be unbalanced and the network hardware cannot be fully utilized.

Column-wise sharding splits along the embedding dimension. For example, if the embedding has 128 dimensions in total, the first 64 and the last 64 can be placed on different devices. Communication is more balanced this way, but every lookup has to communicate with all cards or PSs.
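The trade-off between the two schemes can be illustrated with a small sketch in plain Python. The helper names and the sample batch are hypothetical, cards are numbered from 0 here, and this is not TorchRec's sharding code.

```python
from collections import Counter


def row_wise_card(feature_id, num_rows, num_cards):
    """Row-wise: each card owns a contiguous range of feature IDs."""
    rows_per_card = -(-num_rows // num_cards)  # ceiling division
    return feature_id // rows_per_card


def column_wise_slices(dim, num_cards):
    """Column-wise: each card owns a slice of every row (assumes dim % num_cards == 0)."""
    cols = dim // num_cards
    return [(c * cols, (c + 1) * cols) for c in range(num_cards)]


# A skewed batch: most of the traffic hits small IDs.
batch = [5, 17, 42, 9000, 12000]

# Row-wise: number of lookups each card has to serve.
row_load = Counter(row_wise_card(i, num_rows=20000, num_cards=2) for i in batch)

# Column-wise: every card serves every lookup, but only its own slice of the row.
col_slices = column_wise_slices(dim=128, num_cards=2)
col_load = {card: len(batch) for card in range(len(col_slices))}
```

With this batch, row-wise sharding sends four lookups to card 0 and one to card 1, while column-wise sharding sends all five lookups to both cards, each returning 64 of the 128 dimensions.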

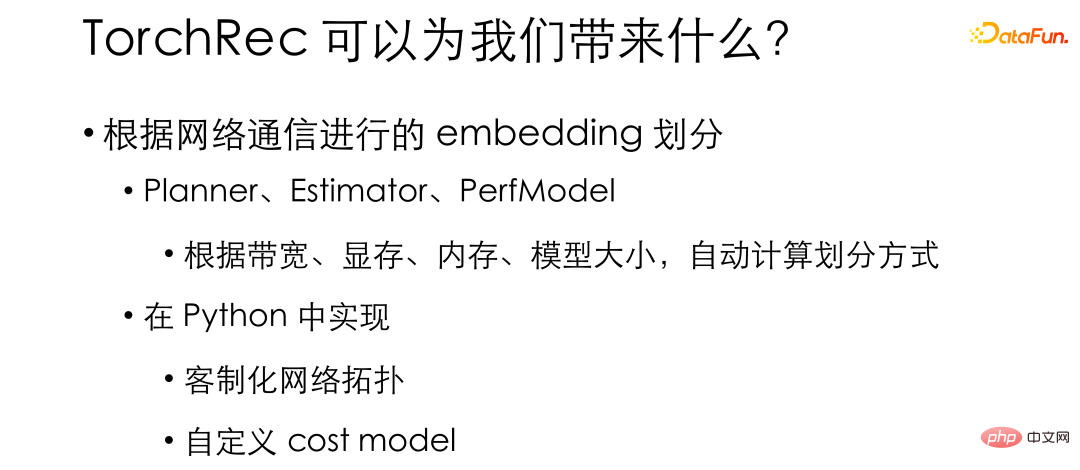

Each sharding scheme comes with its own trade-offs. Traditional recommendation frameworks fix the sharding scheme at design time, whereas TorchRec supports multiple schemes, such as row-wise, column-wise, table-wise, and data parallel, and provides modules such as Planner, Estimator, and PerfModel that automatically compute a sharding plan from parameters of the deployment such as bandwidth, GPU memory, host memory, and model size. This way the embedding is sharded in the way that best fits the actual hardware, and the hardware is used as efficiently as possible. Most of this functionality is implemented in Python, which lets us customize it for our internal environment and easily build the recommendation system that suits it best.

## 2. Experimental results on models with tens of billions of parameters

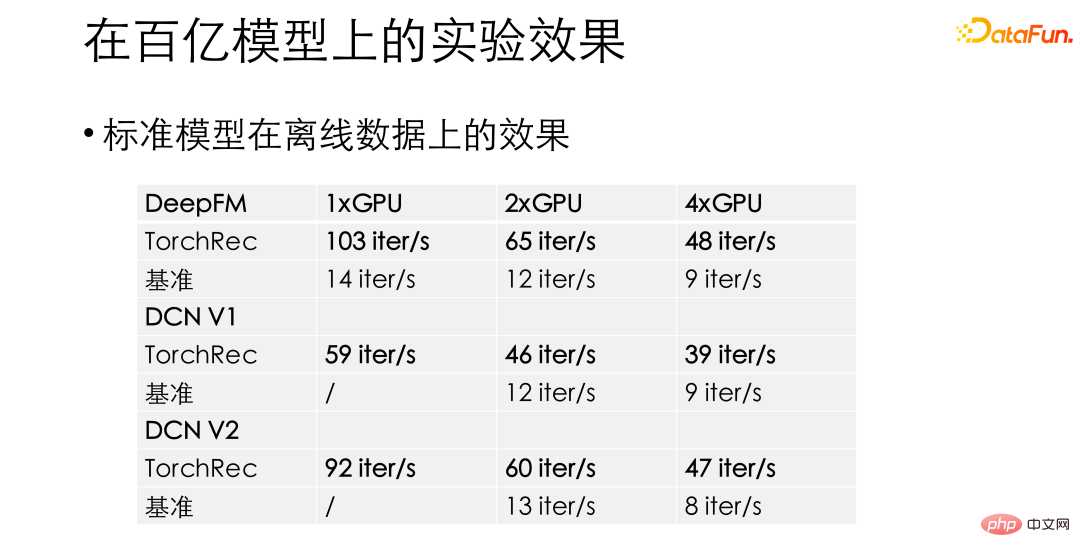

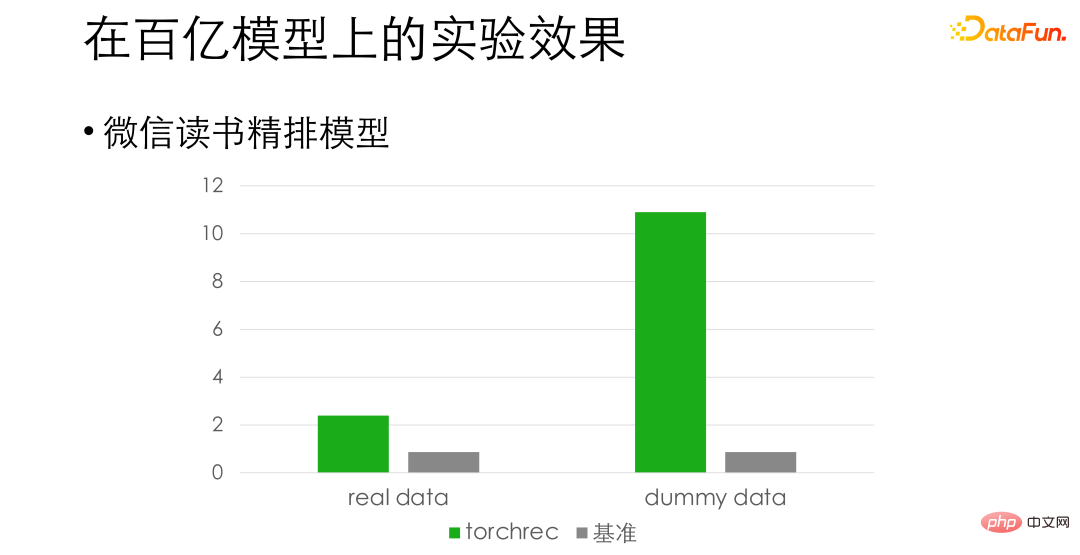

In our experiments, for standard models such as DeepFM and DCN, TorchRec is an astonishing 10 to 15 times faster than our previous baseline recommendation framework. Gains of this size gave us the confidence to roll TorchRec out to production.

For the WeChat Reading fine-ranking model, with accuracy aligned, we measured a speedup of about 3 times on real data and about 10 times on synthetic data. The gap arises because reading the training data has become the bottleneck, and we are still optimizing this part.

## 3. Shortcomings of the original design for models with 100 billion parameters or more

The models introduced so far have tens of billions of parameters or fewer, that is, they fit on a single machine. While pushing TorchRec to larger models, we ran into some issues with TorchRec's native design. For large models, TorchRec's pure GPU embedding solution needs more cards: 8 cards might train fast enough to consume all the data, but we have to use 16 cards just to hold the embedding, so the GPU utilization that we worked hard to raise is dragged down again.

In addition, for large-model scenarios, algorithm teams often ask for dynamic addition and deletion of embeddings, for example deleting IDs that have not been accessed for a week. TorchRec's design does not support this. Furthermore, very large model businesses usually involve many teams, so migrating the underlying framework meets strong resistance. What we need is support for gradual migration; we cannot ask everyone to drop the work at hand, which would be too costly and too risky.

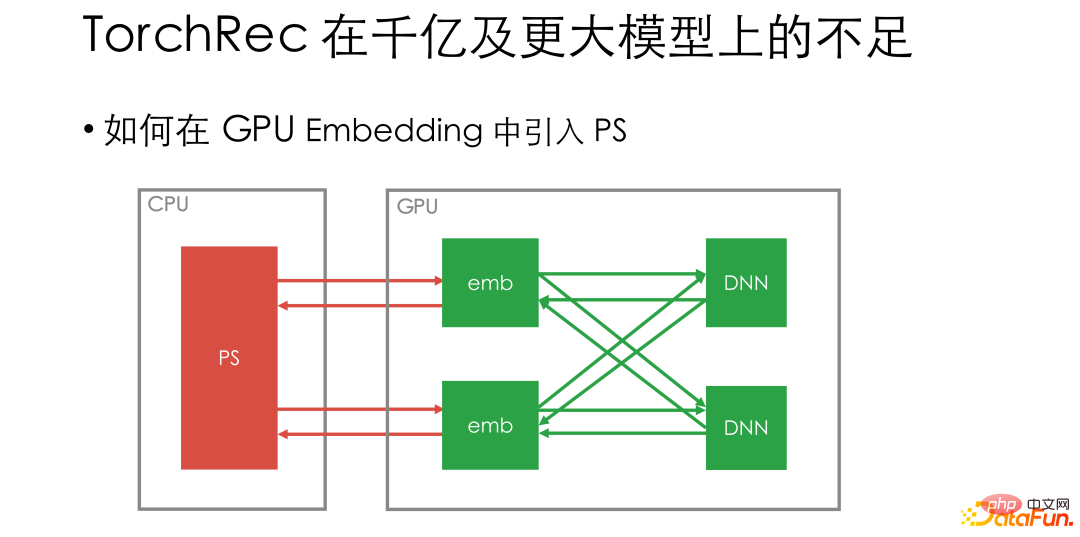

Based on these requirements, we considered how to modify TorchRec so that it fits very large models. We believe that ultra-large-scale training still needs to support connecting to a remote PS, because remote CPU PSs are very mature and easily support dynamically adding embeddings. For cross-team collaboration, a PS can also isolate training from inference, which makes gradual migration possible.

The next question is how to introduce the PS. If the PS is connected directly to the GPU embedding, every iteration step still has to access the remote PS, which raises the share of network and CPU operations again and drags GPU utilization down.

## 4. How the WeChat team solves these problems with dynamic embedding

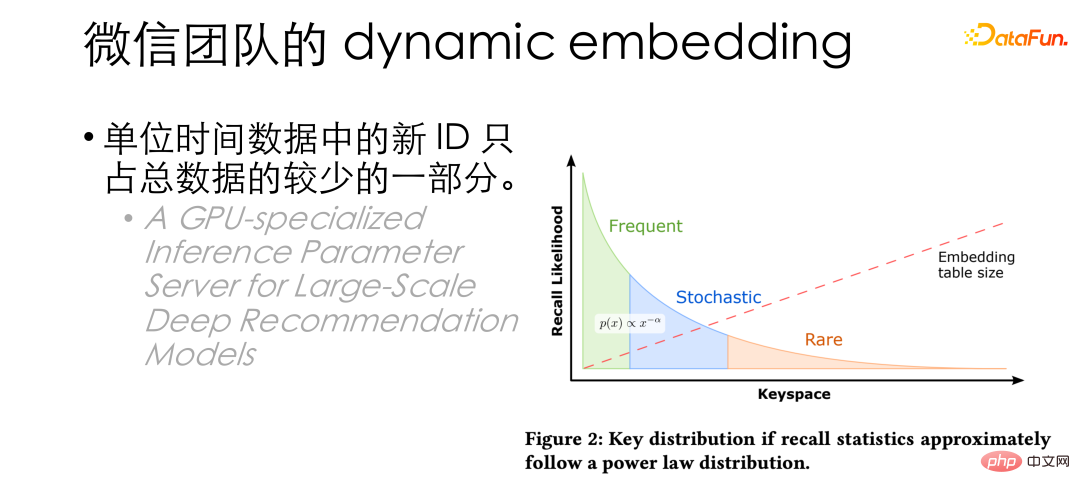

At this point we noticed that the new IDs appearing in the data per unit of time account for only a small fraction of the total. A paper published by HugeCTR reaches a similar conclusion: only a small portion of IDs are accessed frequently. This led us to the idea of training with GPU embedding as usual and, when GPU memory is full, evicting IDs to the PS in batches.

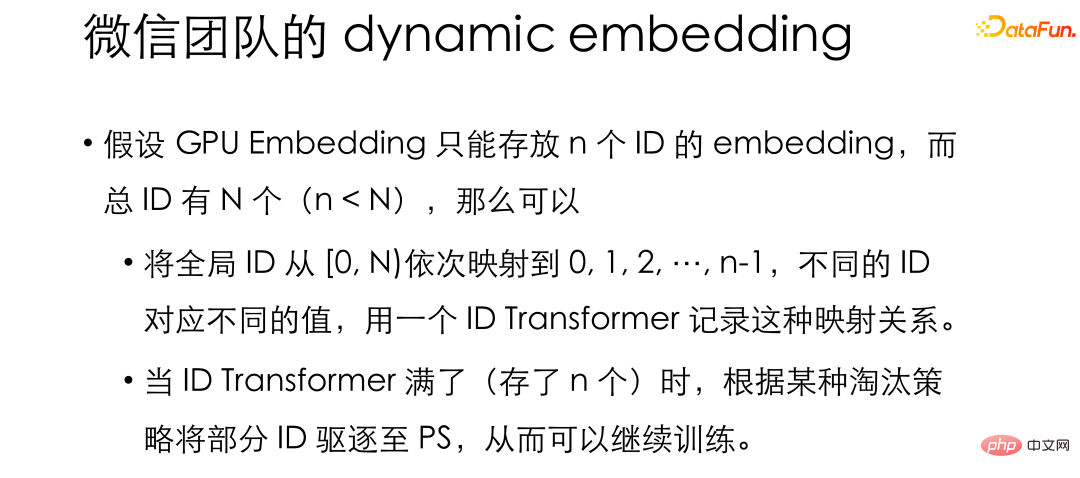

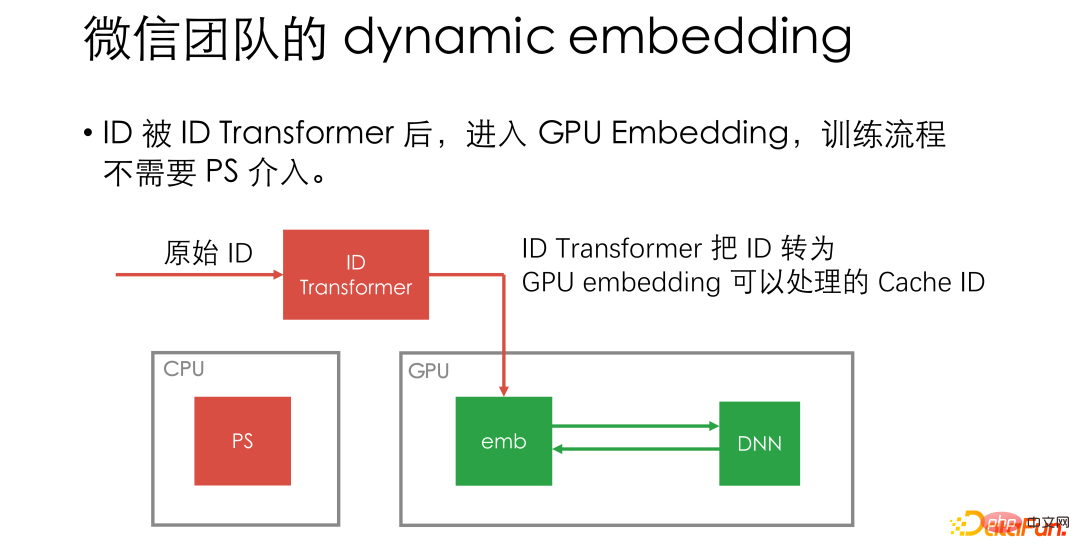

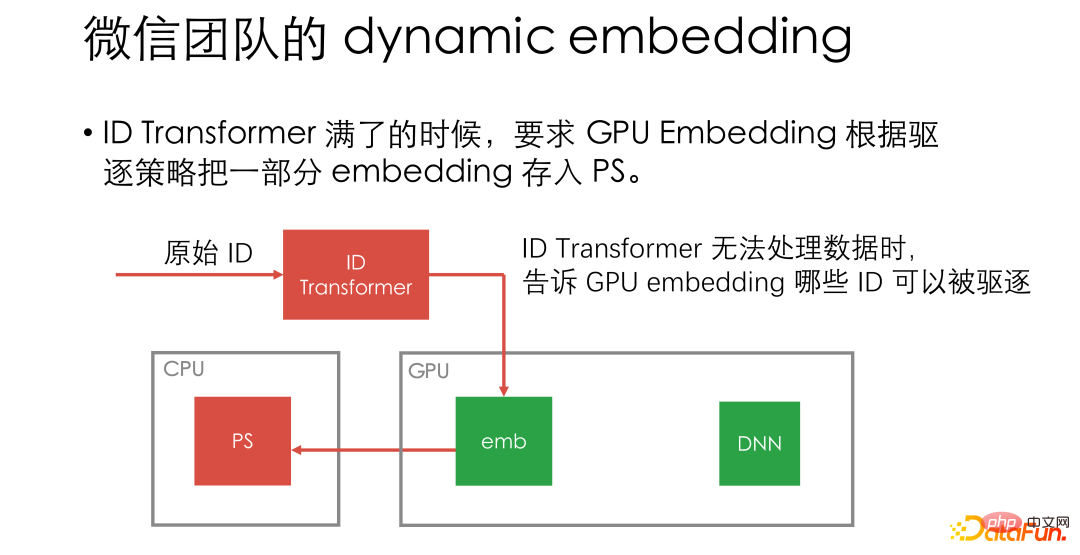

Following this idea, suppose the GPU embedding can hold only n IDs, while there are N IDs in total, or even infinitely many. Global IDs are mapped to 0, 1, 2, 3, ... in order, and the mapping is stored in a structure called the ID Transformer, so that the GPU embedding can train normally on the mapped results. When the GPU embedding is full, that is, when the ID Transformer holds n mappings, IDs are evicted to the PS in batches.

Under this design, the PS rarely needs to be involved: the GPU worker and the PS communicate only during eviction.

Moreover, in this design the PS only needs to act as a KV store. It does not need to support parameter updates or implement any optimizer-related operations, which lets the PS team focus on storage. We also support plugins for arbitrary KV stores, and the open-source version ships with a Redis plugin, so Redis can also serve as a PS.
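The mapping and batched eviction can be sketched in plain Python as follows. This is a simplified illustration under several assumptions, not TorchRec's implementation: the class name `DynamicEmbedding` is hypothetical, a list of rows stands in for the GPU embedding, a dict stands in for the remote PS, eviction is first-in-first-out rather than the LRU/LFU mix described later, a previously evicted ID is assumed to be fetched back from the PS when it reappears, and a batch is assumed never to contain more unique IDs than the capacity.

```python
class DynamicEmbedding:
    def __init__(self, capacity, dim, evict_batch):
        self.dim = dim
        self.evict_batch = evict_batch
        self.rows = [[0.0] * dim for _ in range(capacity)]  # stand-in for the GPU embedding
        self.slot_of = {}                                   # ID Transformer: global ID -> slot
        self.free = list(range(capacity - 1, -1, -1))       # pop() hands out 0, 1, 2, ...
        self.ps = {}                                        # stand-in for the remote KV PS

    def _evict(self, protected):
        # Evict the oldest mappings in one batch, skipping IDs of the current batch.
        victims = [g for g in self.slot_of if g not in protected][: self.evict_batch]
        for g in victims:
            slot = self.slot_of.pop(g)
            self.ps[g] = list(self.rows[slot])  # write the row back to the PS
            self.free.append(slot)

    def lookup(self, global_ids):
        """Map global IDs to slots of the fixed-size embedding, evicting when full."""
        protected = set(global_ids)
        slots = []
        for g in global_ids:
            if g not in self.slot_of:
                if not self.free:
                    self._evict(protected)
                slot = self.free.pop()
                # Assumed: restore the row from the PS if this ID was evicted before.
                self.rows[slot] = self.ps.pop(g, [0.0] * self.dim)
                self.slot_of[g] = slot
            slots.append(self.slot_of[g])
        return slots


emb = DynamicEmbedding(capacity=4, dim=2, evict_batch=2)
first = emb.lookup([100, 200, 300])   # new IDs take slots 0, 1, 2
emb.rows[first[0]][0] = 1.5           # pretend training updated ID 100
second = emb.lookup([400, 500])       # table fills up; IDs 100 and 200 are evicted
evicted = sorted(emb.ps)
third = emb.lookup([100])             # ID 100 comes back with its trained row
```

The PS is touched only inside `_evict` and when an evicted ID returns, so steps whose IDs are already resident involve no PS traffic at all.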

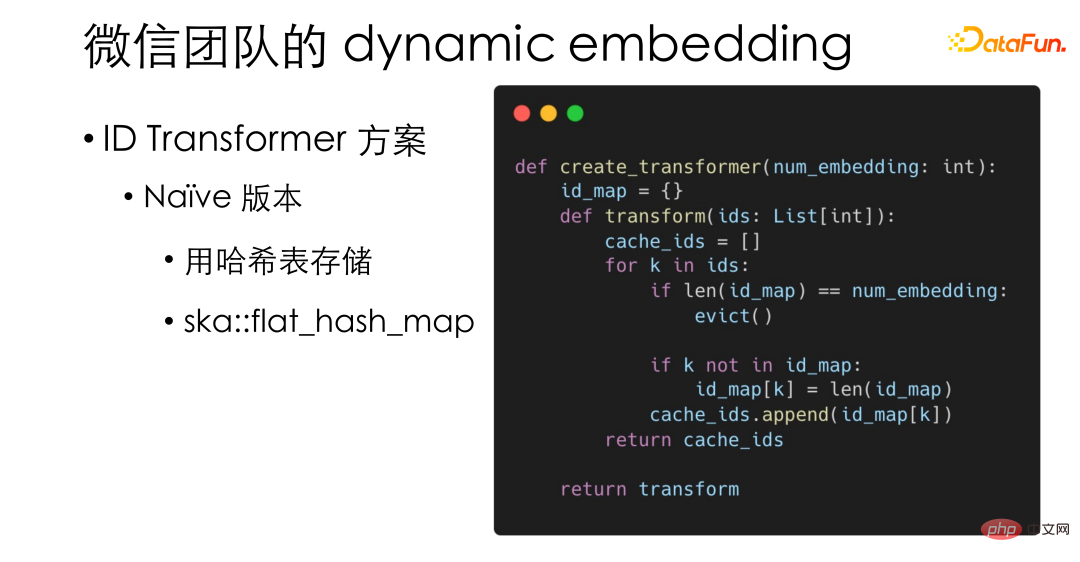

The following describes some design details of dynamic embedding. The simplest, most basic ID Transformer we implemented is just a hash table, using the high-performance ska::flat_hash_map that ships with PyTorch.

Because the ID Transformer is the only CPU operation in the pipeline, its performance requirements are fairly high, so we also implemented a high-performance version that stores data in units of L1 cache lines to further improve memory access efficiency.

For the eviction policy, we wanted to combine LRU and LFU efficiently. Inspired by Redis's LFU implementation, we designed a probabilistic algorithm that stores only the exponent of an ID's access frequency. For example, an ID that has been accessed 32 times stores 5. When this ID is accessed again, a 5-bit random number is generated; if all 5 bits are 0, an event with probability 1/32, the stored exponent is increased to 6. With this probabilistic algorithm, the LRU and LFU information fit together in a single uint32, so LRU and LFU are combined without adding memory access pressure.
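The probabilistic counter can be sketched in a few lines of Python. The increment rule follows the description above; the bit layout (5 low bits for the exponent, 27 high bits for a truncated last-access timestamp) and the function names are assumptions chosen for illustration, not the layout used in TorchRec.

```python
import random

EXP_BITS = 5                             # assumed: low 5 bits hold the frequency exponent
MAX_EXP = (1 << EXP_BITS) - 1
TIME_MASK = (1 << (32 - EXP_BITS)) - 1   # assumed: remaining 27 bits hold the access time


def record_access(exponent, rng):
    """Raise the stored exponent with probability 2**-exponent."""
    if exponent >= MAX_EXP:
        return exponent  # saturate so the value still fits in EXP_BITS
    # Draw `exponent` random bits; all of them are zero with probability 2**-exponent.
    if exponent == 0 or rng.getrandbits(exponent) == 0:
        return exponent + 1
    return exponent


def pack(timestamp, exponent):
    """Pack the last-access time (LRU) and the frequency exponent (LFU) into 32 bits."""
    return ((timestamp & TIME_MASK) << EXP_BITS) | exponent


def unpack(word):
    return word >> EXP_BITS, word & MAX_EXP


rng = random.Random(0)
exponent = 0
for _ in range(10_000):
    exponent = record_access(exponent, rng)
word = pack(timestamp=123_456, exponent=exponent)
```

After 10,000 accesses the exponent ends up near log2(10000), roughly 13, so a frequency that would otherwise need a full integer fits in 5 bits and leaves the rest of the word for the timestamp.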

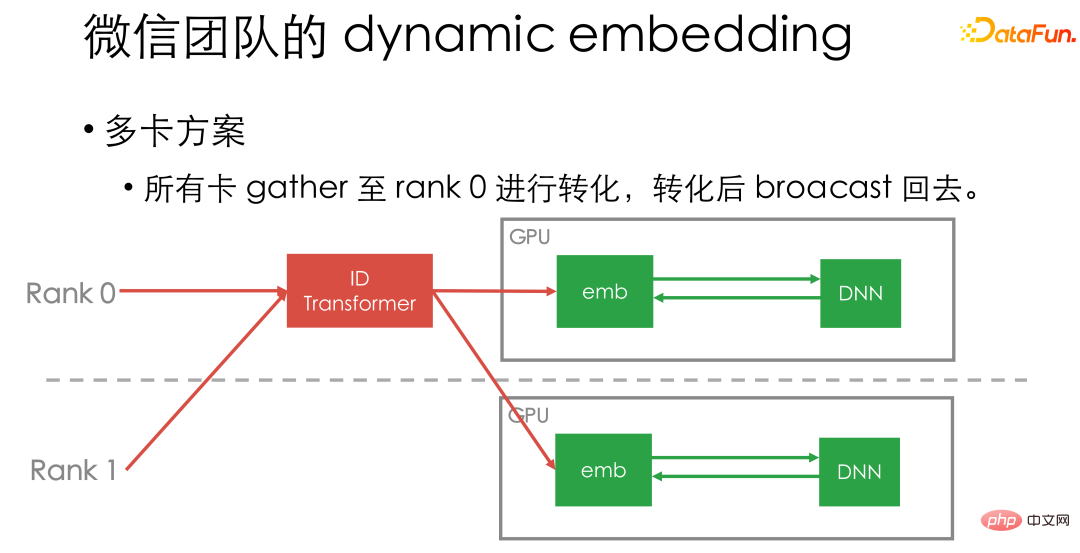

Finally, a brief introduction to our multi-card scheme. We currently gather the data from all cards to the ID Transformer on card 1 and then broadcast the results back. Because our ID Transformer implementation is very fast and can be pipelined with GPU computation, it does not become a performance bottleneck.

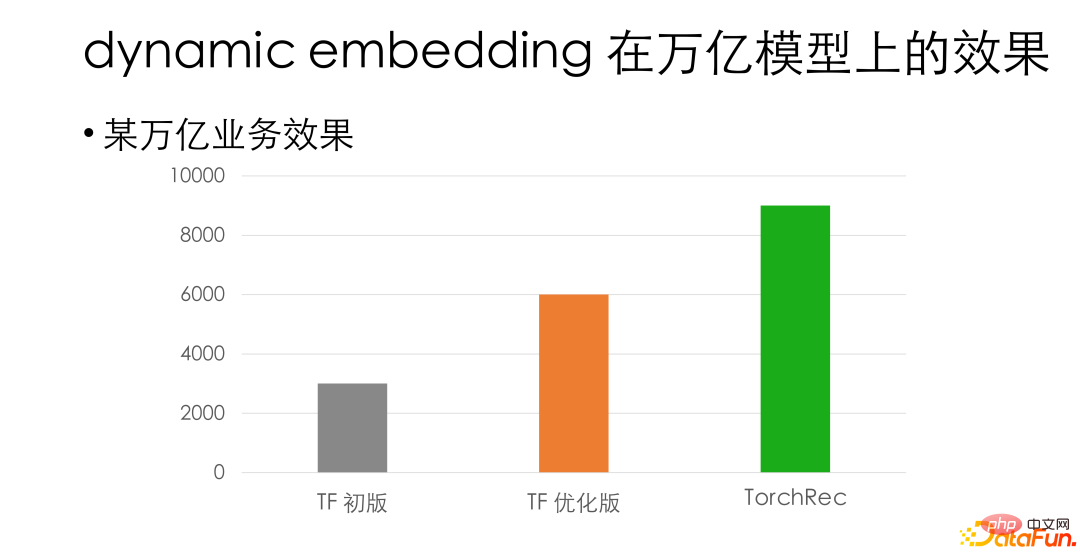

The above covers the design ideas behind dynamic embedding. In our internal trillion-scale business, with accuracy aligned, the dynamic embedding solution is about 3 times faster than our original internal GPU TensorFlow framework, and it is still more than 50% faster than the optimized TF version.

Finally, I recommend that everyone try TorchRec. For relatively small businesses, such as models with tens of billions of parameters, we recommend using native TorchRec directly: it is plug-and-play, needs no secondary development, and can improve performance severalfold. For extremely large businesses, we recommend trying TorchRec together with our dynamic embedding. It makes connecting to an internal PS convenient, it supports embedding expansion and gradual migration, and it still delivers a performance improvement.

Here are some models on which we have aligned accuracy and some existing application scenarios; interested readers are welcome to try them.
