
Year-end review: 6 major breakthroughs in computer science in 2022! Cracking quantum encryption, fastest matrix multiplication, etc. are on the list

- PHPz (reposted)

- 2023-04-12 11:49:03

In 2022, many epoch-making events happened in computer science.

This year, computer scientists learned the secret of perfect transmission, Transformers made rapid progress, and, with the help of AI, decades-old algorithms were greatly improved...

Major Computer Science Events of 2022

Now, the range of problems that computer scientists can solve is getting wider and wider, so their work is also increasingly interdisciplinary.

This year, many achievements in the field of computer science have also helped other scientists and mathematicians.

Cryptography, for example, underpins the security of the entire Internet.

Behind cryptography there are often complex mathematical problems. One very promising new cryptographic scheme was considered strong enough to resist attacks from quantum computers, but it was broken using mathematics concerning the product of two elliptic curves and its relationship to abelian surfaces.

A different set of mathematical relationships, in the form of one-way functions, will tell cryptographers whether they have a truly secure code.

Computer science, especially quantum computing, also has significant overlap with physics.

One of the major events in theoretical computer science this year is that scientists proved the NLTS conjecture.

This conjecture tells us that the ghostly entanglement between quantum particles is not as fragile as physicists once imagined.

This not only affects our understanding of the physical world, but also affects the countless cryptographic possibilities brought about by entanglement.

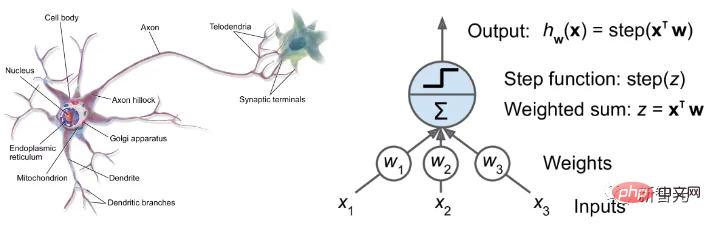

Artificial intelligence has also always gone hand in hand with biology; in fact, AI draws inspiration from the human brain, which may be the ultimate computer.

For a long time, computer scientists and neuroscientists have hoped to understand how the brain works and create brain-like artificial intelligence, but these have always seemed to be pipe dreams.

But what’s incredible is that the Transformer neural network seems to be able to process information like a brain. Whenever we understand more about how Transformers work, we understand more about the brain, and vice versa.

Perhaps this is why Transformer is so good at language processing and image classification.

AI can even help us create better AI. New hypernetworks can help researchers train neural networks faster and at lower cost, and can also help scientists in other fields.

Top1: The answer to quantum entanglement

Quantum entanglement is a property that closely links distant particles. What is certain is that a completely entangled system cannot be fully described.

Physicists believed that systems close to complete entanglement would be easier to describe. Computer scientists, however, believed these systems would be just as impossible to calculate, and this belief is formalized as the quantum PCP (Probabilistically Checkable Proof) conjecture.

To help prove the quantum PCP conjecture, scientists proposed a simpler hypothesis called the "no low-energy trivial state" (NLTS) conjecture.

In June this year, three computer scientists from Harvard University, University College London and the University of California, Berkeley, achieved the first proof of the NLTS conjecture in a paper.

Paper address: https://arxiv.org/abs/2206.13228

This means that there are quantum systems that can maintain entanglement at higher temperatures. It also shows that, even away from extreme conditions such as very low temperatures, entangled particle systems remain difficult to analyze, and their ground-state energy remains difficult to calculate.

Physicists were surprised because the result means entanglement is not necessarily as fragile as they thought, while computer scientists were delighted because it brings them one step closer to proving the quantum PCP (Probabilistically Checkable Proof) conjecture.
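To get a feel for why ground-state energies are hard to calculate, here is a minimal brute-force sketch. It assumes a toy model of our own choosing, an open chain of n spins with nearest-neighbour Heisenberg couplings, which is not the Hamiltonian used in the NLTS proof. The state vector holds 2**n amplitudes, so every extra particle doubles the work. The helper names `heisenberg_apply` and `ground_energy` are hypothetical.

```python
import random


def heisenberg_apply(state, n):
    """Return H|state> for H = sum_i (X_i X_{i+1} + Y_i Y_{i+1} + Z_i Z_{i+1}).

    Hypothetical toy model: an open chain of n spin-1/2 particles. `state` is a
    list of 2**n real amplitudes, indexed by the bit pattern of the n spins.
    """
    out = [0.0] * len(state)
    for basis, amp in enumerate(state):
        if amp == 0.0:
            continue
        for i in range(n - 1):
            same = ((basis >> i) & 1) == ((basis >> (i + 1)) & 1)
            if same:
                # Parallel pair: ZZ contributes +1 and XX + YY cancels out.
                out[basis] += amp
            else:
                # Antiparallel pair: ZZ contributes -1 and XX + YY swaps the spins with weight 2.
                out[basis] -= amp
                out[basis ^ (0b11 << i)] += 2.0 * amp
    return out


def ground_energy(n, iters=2000, seed=0):
    """Estimate the lowest eigenvalue of H (n >= 2) by power iteration on c*I - H."""
    dim = 2 ** n  # the cost that explodes: one amplitude per spin configuration
    # Each bond term has eigenvalues in [-3, 1], so c*I - H has no negative eigenvalues
    # and its largest eigenvalue is c - E0.
    shift = 3.0 * (n - 1)
    rng = random.Random(seed)
    v = [rng.uniform(-1.0, 1.0) for _ in range(dim)]
    for _ in range(iters):
        hv = heisenberg_apply(v, n)
        w = [shift * a - b for a, b in zip(v, hv)]
        norm = sum(x * x for x in w) ** 0.5
        v = [x / norm for x in w]
    hv = heisenberg_apply(v, n)
    # Rayleigh quotient <v|H|v>; v is already normalized.
    return sum(a * b for a, b in zip(v, hv))


for n in (2, 4, 6):
    print(n, "spins ->", 2 ** n, "amplitudes, E0 estimate:", round(ground_energy(n), 4))
```

For n = 2 the exact ground energy is -3, reached by the maximally entangled singlet state, so the estimate can be checked by hand. Brute force like this stops being feasible after a few dozen particles, which is why structural results such as NLTS matter.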

In October, researchers successfully entangled three particles over a considerable distance, strengthening the prospects for quantum encryption.

Top2: Changing the way AI understands

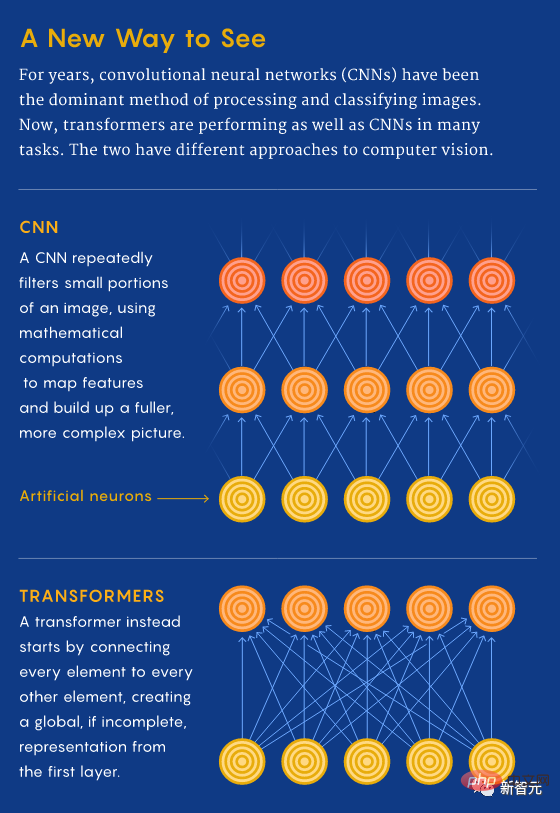

Over the past five years, Transformers have revolutionized the way AI processes information.

The Transformer first appeared in a 2017 paper, "Attention Is All You Need."

Transformers were developed to understand and generate language. They process every element of the input data simultaneously, which gives them a "big picture" view.

Compared with other language networks that take a piecemeal approach, this "big picture" view greatly improves Transformers' speed and accuracy.
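The "big picture" view comes from the attention mechanism, in which every position in the input looks at every other position at once. Below is a simplified sketch of scaled dot-product self-attention in plain Python. It assumes a single attention head and skips the learned query/key/value projections, positional encodings, and everything else a real Transformer layer contains; the function names are hypothetical.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention: softmax(q . k / sqrt(d)) weights over ALL positions."""
    d = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        weights.append(w)
        # Each output is a weighted mix of every value vector, not just nearby ones.
        outputs.append(
            [sum(wj * v[c] for wj, v in zip(w, values)) for c in range(len(values[0]))]
        )
    return outputs, weights


# Toy 3-token sequence with 2-dimensional embeddings. Here Q = K = V = x;
# a real Transformer would first apply learned linear projections.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, attn = self_attention(x, x, x)
for row in attn:
    print([round(a, 3) for a in row])
```

Because all the scores for one query are computed independently, the whole sequence can be processed in parallel, in contrast to networks that read the input one piece at a time.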

This approach also makes Transformers incredibly versatile, and AI researchers in other areas have applied them to their own fields.

They have found that the same principles can be used to upgrade tools for image classification and for processing multiple types of data at once.

Paper address: https://arxiv.org/abs/2010.11929

Transformers quickly became leaders in applications such as word recognition that focus on analyzing and predicting text. They sparked a wave of tools such as OpenAI's GPT-3, which was trained on hundreds of billions of words and generates coherent new text to an unsettling degree. However, compared with non-Transformer models, these benefits come at the cost of more training.

These faces were created by a Transformer-based network trained on a dataset of over 200,000 celebrity faces

In March, researchers studying how Transformers work found that part of what makes them so powerful is their ability to attach greater meaning to words, rather than simply memorizing patterns.

In fact, Transformers are so adaptable that neuroscientists have begun using Transformer-based networks to model human brain function.

This suggests that artificial intelligence and human intelligence may be one and the same.

Top3: Cracking a Post-Quantum Encryption Algorithm

The emergence of quantum computing means that many problems once considered computationally infeasible could be solved, which threatens the security of classical encryption algorithms. In response, researchers proposed post-quantum cryptography, designed to resist attacks from quantum computers.

SIKE (Supersingular Isogeny Key Encapsulation), a highly anticipated post-quantum candidate, is an encryption algorithm built on elliptic curves, specifically on isogenies between supersingular elliptic curves.

However, in July, two researchers from KU Leuven in Belgium showed that the algorithm could be broken in just one hour on a 10-year-old desktop computer.

It’s worth noting that the researchers approached the problem from a purely mathematical perspective, attacking the core of the algorithm design rather than any potential code vulnerabilities.

Paper address: https://eprint.iacr.org/2022/975

In this context, researchers say that a provably secure code, one that can never be broken, is possible only if one can prove that "one-way functions" exist.

Whether such functions exist is still unknown, but researchers believe the question is equivalent to another problem involving Kolmogorov complexity: one-way functions, and therefore truly secure cryptography, are possible only if some version of Kolmogorov complexity is hard to compute.
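Both ideas in this paragraph can be illustrated, though not proven, in a few lines. The sketch below assumes modular exponentiation as the candidate one-way function (a standard example whose one-wayness is unproven), with a deliberately small prime so the brute-force inversion finishes. It then uses zlib compression as a stand-in for Kolmogorov complexity: compressed length is only a computable upper bound, because exact Kolmogorov complexity is uncomputable in general. All function names are hypothetical.

```python
import random
import zlib

# --- A candidate one-way function (NOT proven to be one-way) ---
P = 2_147_483_647  # the prime 2**31 - 1; far too small for real security
G = 7              # a primitive root modulo P, so G**x mod P is distinct for 0 <= x < P - 1


def forward(x):
    """Easy direction: modular exponentiation needs only O(log x) multiplications."""
    return pow(G, x, P)


def invert_by_search(y, limit):
    """Hard direction, done naively: try exponents one by one.

    Smarter attacks (e.g. baby-step giant-step) exist, but for well-chosen large
    parameters no efficient inversion method is known.
    """
    acc = 1
    for x in range(limit):
        if acc == y:
            return x
        acc = acc * G % P
    return None


# --- A computable stand-in for Kolmogorov complexity ---
def compressed_bits(data: bytes) -> int:
    """Upper bound (up to an additive constant) on the Kolmogorov complexity of data."""
    return 8 * len(zlib.compress(data, 9))


secret = 123456
y = forward(secret)
print(y, invert_by_search(y, 10**6))

regular = b"ab" * 5000                        # highly patterned: short description exists
noisy = random.Random(0).randbytes(10_000)    # pseudo-random: compresses poorly
print(compressed_bits(regular), compressed_bits(noisy))
```

The asymmetry is the point: `forward` stays fast as the exponent grows, while the naive search grows with the size of the answer. Likewise, zlib can certify that patterned data is simple, but no program can certify that data is truly incompressible.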

Top4: Training AI with AI

In recent years, the pattern-recognition skills of artificial neural networks have injected new vitality into the field of artificial intelligence.

But before a network can start working, researchers must first train it.

This training process can last for months and requires large amounts of data, during which potentially billions of parameters need to be fine-tuned.

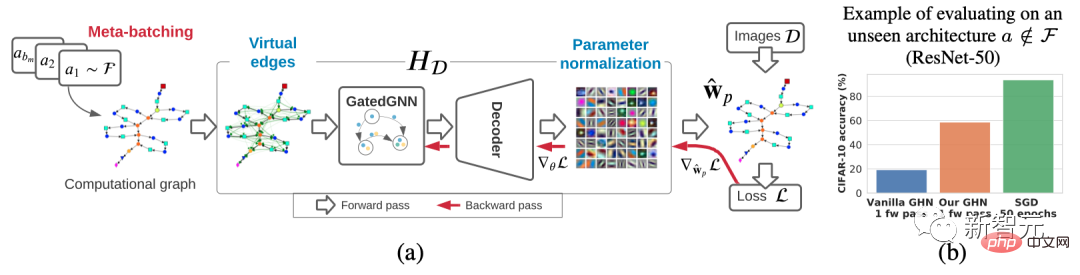

Now, researchers have a new idea—let machines do it for them.

This new "hypernetwork," called GHN-2, can take in other networks and output parameters for them.

Paper link: https://arxiv.org/abs/2110.13100

It is fast: given any network, it can quickly produce a set of parameter values that, in some cases, are as effective as those of a network trained in the traditional way.

Although the parameters GHN-2 predicts may not be optimal, they still provide a better starting point, reducing the time and data required for full training.

Backpropagation training with parameters predicted on the given image dataset and our DEEPNETS-1M architecture dataset

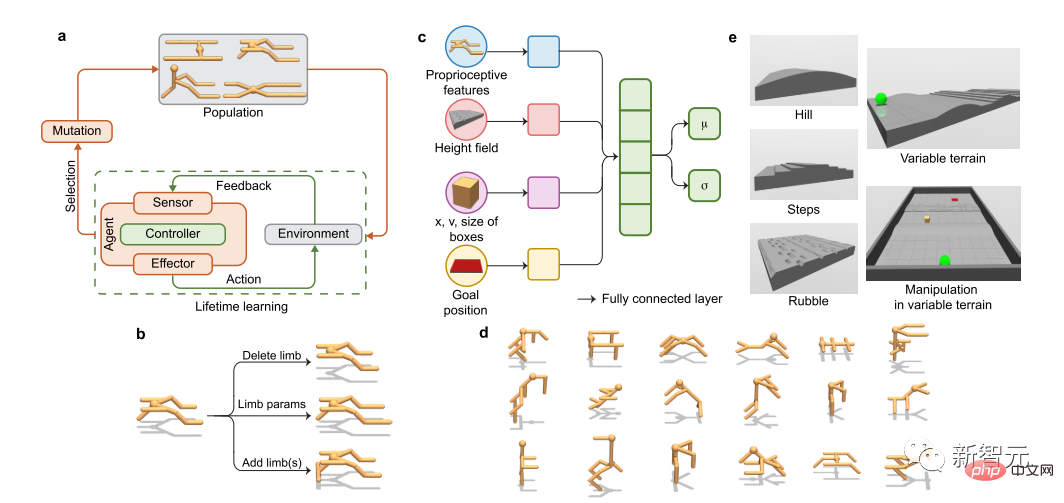

This summer, Quanta Magazine also looked at another new way to help machine learning: embodied artificial intelligence.

It allows algorithms to learn from responsive three-dimensional environments rather than static images or abstract data.

Whether they are agents exploring a simulated world or robots in the real world, these systems learn in fundamentally different ways and, in many cases, outperform systems trained with traditional methods.

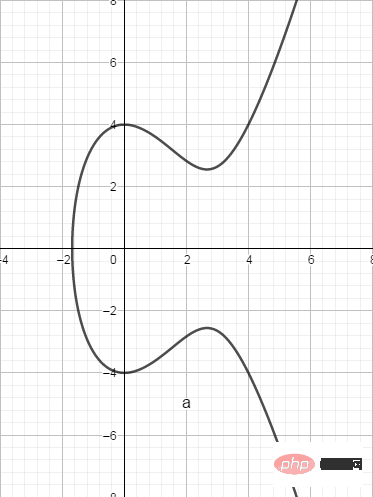

Top5: Algorithm improvements

Improving the efficiency of basic computing algorithms has long been a hot topic in academia, because it affects the overall speed of a huge number of computations and can set off a domino effect across the field of intelligent computing.

In October, in a paper published in Nature, the DeepMind team introduced AlphaTensor, the first AI system for discovering novel, efficient, and correct algorithms for basic computing tasks such as matrix multiplication.

It found new answers to a mathematical problem that had been open for 50 years: finding the fastest way to multiply two matrices.

Matrix multiplication, one of the most basic operations in linear algebra, is a core component of many computing tasks, including computer graphics, digital communications, neural network training, and scientific computing. The algorithms discovered by AlphaTensor could substantially improve computing efficiency in these fields.

Paper address: https://www.nature.com/articles/s41586-022-05172-4
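To see what "a faster way to multiply matrices" means, the classic example predates AlphaTensor: Volker Strassen's 1969 scheme multiplies 2×2 matrices with 7 scalar multiplications instead of 8, and applied recursively to matrix blocks it beats the schoolbook O(n^3) cost. AlphaTensor searches for decompositions of this kind (the Nature paper reports, for example, a 47-multiplication scheme for 4×4 matrices in arithmetic modulo 2). The sketch below is Strassen's scheme, not one of AlphaTensor's algorithms; the function names are hypothetical.

```python
def naive_2x2(a, b):
    """Schoolbook 2x2 product: 8 multiplications."""
    return [
        [a[0][0] * b[0][0] + a[0][1] * b[1][0], a[0][0] * b[0][1] + a[0][1] * b[1][1]],
        [a[1][0] * b[0][0] + a[1][1] * b[1][0], a[1][0] * b[0][1] + a[1][1] * b[1][1]],
    ]


def strassen_2x2(a, b):
    """Strassen (1969): the same product with only 7 multiplications (m1..m7)."""
    (a11, a12), (a21, a22) = a
    (b11, b12), (b21, b22) = b
    m1 = (a11 + a22) * (b11 + b22)
    m2 = (a21 + a22) * b11
    m3 = a11 * (b12 - b22)
    m4 = a22 * (b21 - b11)
    m5 = (a11 + a12) * b22
    m6 = (a21 - a11) * (b11 + b12)
    m7 = (a12 - a22) * (b21 + b22)
    # Only additions and subtractions from here on.
    return [
        [m1 + m4 - m5 + m7, m3 + m5],
        [m2 + m4, m1 - m2 + m3 + m6],
    ]


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
print(naive_2x2(a, b), strassen_2x2(a, b))  # both: [[19, 22], [43, 50]]
```

Saving one multiplication looks minor, but because the entries can themselves be matrix blocks, the saving compounds at every level of recursion.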

In March, a team of six computer scientists proposed a "ridiculously fast" algorithm that made breakthrough progress on the maximum flow problem, one of the oldest problems in the field.

The new algorithm can solve this problem in "almost linear" time, that is, its running time is basically proportional to the time required to record the details of the network.

Paper address: https://arxiv.org/abs/2203.00671v2

The maximum flow problem is a combinatorial optimization problem: it asks how to send as much flow as possible through a network from a source to a destination without exceeding the capacity of any link. It has many everyday applications, such as routing Internet data, scheduling airlines, and even matching job seekers with open positions.

Daniel Spielman of Yale University said, "I originally firmly believed that such an efficient algorithm could not exist for this problem."
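For readers new to the problem, here is the textbook Edmonds-Karp method (augmenting paths found by breadth-first search), sketched in Python. It is emphatically not the almost-linear-time algorithm from the paper: its O(V·E²) running time is far slower, and it only shows what "maximum flow" computes. The dict-of-dicts graph format and the function name are assumptions for the example.

```python
from collections import deque


def max_flow(capacity, source, sink):
    """Edmonds-Karp: repeatedly push flow along a shortest augmenting path.

    `capacity` maps u -> {v: capacity of edge u->v} (hypothetical input format).
    """
    # Residual graph: forward capacities plus reverse edges that start at 0.
    residual = {}
    for u, edges in capacity.items():
        for v, c in edges.items():
            residual.setdefault(u, {})
            residual.setdefault(v, {})
            residual[u][v] = residual[u].get(v, 0) + c
            residual[v].setdefault(u, 0)
    total = 0
    while True:
        # Breadth-first search for a path with spare capacity.
        parent = {source: None}
        queue = deque([source])
        while queue and sink not in parent:
            u = queue.popleft()
            for v, c in residual[u].items():
                if c > 0 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if sink not in parent:
            return total  # no augmenting path left: the flow is maximum
        # The path can carry only as much as its tightest edge.
        bottleneck = float("inf")
        v = sink
        while parent[v] is not None:
            bottleneck = min(bottleneck, residual[parent[v]][v])
            v = parent[v]
        # Push the flow and open reverse edges so later paths can undo it.
        v = sink
        while parent[v] is not None:
            u = parent[v]
            residual[u][v] -= bottleneck
            residual[v][u] += bottleneck
            v = u
        total += bottleneck


network = {
    "s": {"a": 10, "b": 5},
    "a": {"b": 15, "t": 5},
    "b": {"t": 10},
}
print(max_flow(network, "s", "t"))
```

In this small network the source can emit at most 10 + 5 = 15 units, and the algorithm finds a flow that reaches that bound, so the answer is 15.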

Top6: New ways to share information

Mark Braverman, a theoretical computer scientist at Princeton University, has spent more than a quarter of his life developing a new theory of interactive communication. His work has allowed researchers to quantify concepts such as "information" and "knowledge," which not only provides a theoretical understanding of interaction but also yields new techniques that make communication more efficient and accurate.

For this and other achievements, the International Mathematical Union awarded Braverman the IMU Abacus Medal this July, one of the highest honors in theoretical computer science.

The IMU's citation noted that Braverman's work on information complexity has led to a deeper understanding of the different measures of information cost when two parties communicate with each other. His work paved the way for new coding strategies that are less susceptible to transmission errors, as well as new methods of compressing data during transmission and manipulation.

The problem of information complexity grows out of the pioneering work of Claude Shannon, who in 1948 developed a mathematical framework for one person sending a message to another over a channel. Braverman's greatest contribution was to establish a broad framework that clarified the general rules describing the limits of interactive communication. These rules suggest new strategies for compressing and protecting data when algorithms exchange it online.

Paper address: https://arxiv.org/abs/1106.3595

The "interactive compression" problem can be understood this way: if two people exchange a million text messages but learn only 1,000 bits of information, can the exchange be compressed to a 1,000-bit conversation? Braverman and Rao's research shows that the answer is no.

Braverman did not just crack these questions; he introduced a new perspective that allowed researchers to first articulate them and then translate them into the formal language of mathematics. His theories laid the foundation for exploring these issues and for identifying new communication protocols that may appear in future technologies.
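Shannon's framework, mentioned above, measures information in bits. As a minimal sketch of the one-way case only: the empirical entropy of a message gives the bits per symbol an ideal code would need if symbols were independent draws with the observed frequencies. Information complexity extends this kind of accounting to two-way conversations, which this sketch does not attempt; the function name is hypothetical.

```python
import math
from collections import Counter


def entropy_bits_per_symbol(message):
    """Empirical Shannon entropy: H = -sum(p * log2(p)) over symbol frequencies."""
    counts = Counter(message)
    n = len(message)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


for msg in ["aaaaaaaa", "abababab", "abcdabcd", "the quick brown fox"]:
    h = entropy_bits_per_symbol(msg)
    # h * len(msg) estimates the bits an ideal i.i.d. code would spend on the message.
    print(f"{msg!r}: {h:.3f} bits/symbol, about {h * len(msg):.1f} bits total")
```

Note that this simple model ignores order, so "abababab" still costs 1 bit per symbol even though its pattern is obvious; capturing such structure, and the back-and-forth of a conversation, is where richer measures come in.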
