Why did ChatGPT suddenly become so powerful? A Chinese doctor's long article of 10,000 words deeply dissects the origin of GPT-3.5 capabilities

- WBOY (reposted)

- 2023-04-11 21:37:04

OpenAI's recently released ChatGPT has given the field of artificial intelligence a shot in the arm. Its capabilities go far beyond what language processing researchers expected.

Users who have tried ChatGPT naturally ask: how did the original GPT-3 evolve into ChatGPT? Where do GPT-3.5's remarkable language capabilities come from?

Recently, researchers from the Allen Institute for Artificial Intelligence wrote an article that analyzes the emergent abilities of ChatGPT and traces their origins. It gives a comprehensive technical roadmap showing how the GPT-3.5 model series and related large language models evolved step by step into their current powerful form.

Original link: https://yaofu.notion.site/GPT-3-5-360081d91ec245f29029d37b54573756

The author Fu Yao is a doctoral student at the University of Edinburgh who enrolled in 2020. He holds a master's degree from Columbia University and a bachelor's degree from Peking University, and is currently a research intern at the Allen Institute for Artificial Intelligence. His main research direction is large-scale probabilistic generative models of human language.

The author Peng Hao holds a bachelor's degree from Peking University and a Ph.D. from the University of Washington. He is currently a Young Investigator at the Allen Institute for Artificial Intelligence and will join the Department of Computer Science at the University of Illinois at Urbana-Champaign as an assistant professor in August 2023. His main research interests include making language AI more efficient and understandable, and building large-scale language models.

The author Tushar Khot holds a Ph.D. from the University of Wisconsin-Madison and is currently a research scientist at the Allen Institute for Artificial Intelligence. His main research direction is structured machine reasoning.

1. The 2020 version of the first generation GPT-3 and large-scale pre-training

The first generation GPT-3 demonstrated three important capabilities:

- Language generation: follow a prompt and generate text that completes it. This is still the most common way humans interact with language models today.

- In-context learning: follow a few examples of a given task and then generate a solution for a new test case. Importantly, although GPT-3 is a language model, its paper hardly talks about "language modeling": the authors devoted their writing almost entirely to the vision of in-context learning. This is the real focus of GPT-3.

- World knowledge: includes factual knowledge and commonsense.
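To make in-context learning concrete, here is a minimal sketch of how a few-shot prompt might be assembled, next to its zero-shot counterpart. The `build_prompt` helper, the `Input:`/`Output:` template, and the translation demos are our own illustrative assumptions, not a format prescribed by the GPT-3 paper or the OpenAI API.

```python
def build_prompt(instruction, examples, query):
    """Assemble a plain-text prompt; with an empty example list it is zero-shot."""
    blocks = [instruction]
    # Each in-context example is shown as a solved input/output pair.
    for source, target in examples:
        blocks.append(f"Input: {source}\nOutput: {target}")
    # The new test case is left open for the model to complete.
    blocks.append(f"Input: {query}\nOutput:")
    return "\n\n".join(blocks)


# Hypothetical demonstrations for a toy translation task.
demos = [("cheese", "fromage"), ("house", "maison")]

few_shot = build_prompt("Translate English to French.", demos, "cat")
zero_shot = build_prompt("Translate English to French.", [], "cat")

print(few_shot)
```

The model's weights are never updated here: the "learning" consists only of conditioning on the examples placed in the prompt.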

So where do these abilities come from?

Basically, all three capabilities come from large-scale pre-training: a model with 175 billion parameters pre-trained on a corpus of 300 billion tokens (60% of the training corpus comes from the 2016-2019 C4, 22% from WebText2, 16% from Books, and 3% from Wikipedia). Specifically:

- The language generation ability comes from the language modeling training objective.

- World knowledge comes from the 300-billion-token training corpus (where else could it come from?).

- The model's 175 billion parameters serve to store knowledge, which is further supported by Liang et al. (2022). They concluded that performance on knowledge-intensive tasks is closely related to model size.

- The source of the in-context learning ability, and why in-context learning generalizes, remain difficult to trace. Intuitively, this ability may come from data points of the same task being arranged sequentially in the same batch during training. However, little research has examined why language model pre-training gives rise to in-context learning, or why in-context learning behaves so differently from fine-tuning.
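The language modeling objective mentioned above amounts to predicting the next token and penalizing the model by the negative log-likelihood of the token that actually appears. A minimal sketch, with made-up probabilities standing in for a real model's outputs (the function name `next_token_nll` is hypothetical):

```python
import math


def next_token_nll(token_probs):
    """Average negative log-likelihood of the observed next tokens.

    token_probs holds, for each position, the probability the model
    assigned to the token that actually came next.
    """
    return -sum(math.log(p) for p in token_probs) / len(token_probs)


# Made-up probabilities: a model that predicts well vs. one that does not.
confident = next_token_nll([0.9, 0.8, 0.95])
uncertain = next_token_nll([0.2, 0.1, 0.3])

print(confident < uncertain)  # lower loss means better next-token prediction
```

Pre-training minimizes this quantity over the whole corpus, which is why fluent generation falls out of the objective directly.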

A natural question is how strong the first-generation GPT-3 actually was.

In fact, it is difficult to determine whether the original GPT-3 (called davinci in the OpenAI API) is "strong" or "weak".

On the one hand, it responds reasonably to certain specific queries and achieves decent performance in many data sets;

On the other hand, it performs worse than smaller models like T5 on many tasks (see its original paper).

By today's (December 2022) ChatGPT standards, it is hard to call the first-generation GPT-3 "intelligent". Meta's open-source OPT model attempts to replicate the original GPT-3, and its capabilities also fall well short of today's standards. Many people who have tested OPT agree that, compared with the current text-davinci-002, the model is indeed "not that good".

Nonetheless, OPT may be a good enough open source approximation of the original GPT-3 (according to the OPT paper and Stanford University's HELM evaluation).

Although the first-generation GPT-3 may appear weak on the surface, subsequent experiments showed that it has very strong potential. This potential was later unlocked by code training, instruction tuning, and reinforcement learning from human feedback (RLHF), and the final form demonstrates extremely powerful emergent capabilities.

2. From the 2020 version of GPT-3 to the 2022 version of ChatGPT

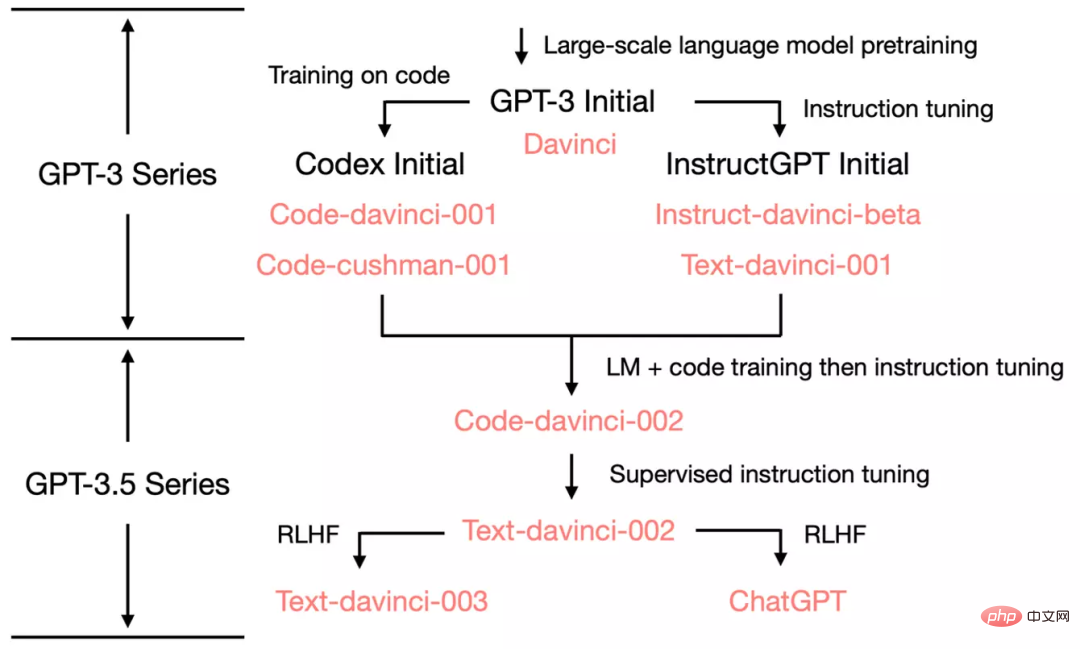

To show how OpenAI got from the original GPT-3 to ChatGPT, let's look at the evolutionary tree of GPT-3.5:

In July 2020, OpenAI released the first-generation GPT-3 paper, with the model index davinci. It has continued to evolve ever since.

In July 2021, the Codex paper was released, in which the initial Codex was fine-tuned based on a (possibly internal) 12 billion parameter GPT-3 variant. This 12 billion parameter model later evolved into code-cushman-001 in the OpenAI API.

In March 2022, OpenAI released its paper on instruction tuning; the supervised instruction tuning part corresponds to davinci-instruct-beta and text-davinci-001.

From April to July 2022, OpenAI began beta testing the code-davinci-002 model, also called Codex. text-davinci-002, text-davinci-003, and ChatGPT were then all obtained by instruction tuning from code-davinci-002. See OpenAI's model index documentation for details.

Although Codex sounds like a code-only model, code-davinci-002 is probably the most powerful GPT-3.5 variant for natural language (better than text-davinci-002 and -003). It is likely that code-davinci-002 was trained on both text and code and then instruction-tuned (explained below).

text-davinci-002, released in May-June 2022, is a supervised instruction-tuned model based on code-davinci-002. The instruction tuning behind text-davinci-002 likely reduced the model's in-context learning ability but enhanced its zero-shot ability (explained below).

Then come text-davinci-003 and ChatGPT, both released in November 2022. They are two different variants of instruction tuning with reinforcement learning from human feedback (RLHF).

text-davinci-003 recovers some of the in-context learning ability that was lost in text-davinci-002 (though it is still worse than code-davinci-002), presumably because language modeling was mixed in during fine-tuning, and it further improves zero-shot ability (thanks to RLHF). ChatGPT, on the other hand, seems to have sacrificed almost all of its in-context learning ability in exchange for the ability to model conversation history.

Overall, during 2020-2021, before code-davinci-002, OpenAI invested a lot of effort in enhancing GPT-3 through code training and instruction tuning. By the time they completed code-davinci-002, all the capabilities were already there. Subsequent instruction tuning, whether supervised or via reinforcement learning, likely does the following (more on this later):

- Instruction tuning does not inject new capabilities into the model; all the capabilities already exist. Its role is to unlock/activate them. This is mainly because the amount of instruction tuning data is several orders of magnitude smaller than the amount of pre-training data (the basic capabilities are injected through pre-training).

- Instruction tuning differentiates GPT-3.5 into different skill trees. Some are better at in-context learning, like text-davinci-003, and some are better at conversation, like ChatGPT.

- Instruction tuning sacrifices performance for alignment with humans. The OpenAI authors call this an "alignment tax" in their instruction tuning paper.

Many papers report that code-davinci-002 achieves the best performance on benchmarks (but the model does not necessarily match human expectations). After instruction tuning on top of code-davinci-002, the model generates responses more in line with human expectations (that is, the model is aligned with humans), such as zero-shot question answering, safe and fair dialogue responses, and rejecting questions beyond the scope of the model's knowledge.

3. code-davinci-002 and text-davinci-002: training on code, tuning on instructions

Before code-davinci-002 and text-davinci-002, there were two intermediate models, namely davinci-instruct-beta and text-davinci-001. Both are worse than the two -002 models in many respects (for example, text-davinci-001's chain-of-thought reasoning ability is weak).

So we focus on the -002 models in this section.

3.1 The source of complex reasoning ability and the ability to generalize to new tasks

We focus on code-davinci-002 and text-davinci-002. These two sibling models are the first versions of the GPT-3.5 models, one for code and the other for text. They exhibit four important capabilities that differ from the original GPT-3:

- Respond to human instructions: previously, GPT-3's outputs were mainly sentences common in the training set. The model now generates more reasonable answers to instructions/prompts (rather than relevant but useless sentences).

- Generalize to unseen tasks: when the number of instructions used to tune the model exceeds a certain scale, the model can automatically generate valid answers to new instructions it has never seen. This ability is crucial for online deployment, because users will always ask new questions and the model must be able to answer them.

- Code generation and code understanding: this ability is obvious because the model is trained on code.

- Use chain-of-thought for complex reasoning: the first-generation GPT-3 had little or no chain-of-thought reasoning ability. code-davinci-002 and text-davinci-002 are the two models with sufficiently strong chain-of-thought reasoning.

- Chain-of-thought reasoning is important because chain-of-thought may be the key to unlocking emergent capabilities and transcending scaling laws.

Where do these abilities come from?

Compared with the previous models, the two main differences are instruction tuning and code training. Specifically:

- The ability to respond to human instructions is a direct product of instruction tuning.

- The ability to generalize to unseen instructions appears automatically once the number of instructions exceeds a certain level; the T0, Flan, and FlanPaLM papers further support this.

- The ability to use chain-of-thought for complex reasoning may well be a magical byproduct of training on code. We have the following facts as partial support:

- The original GPT-3 was not trained on code, and it cannot do chain-of-thought.

- The text-davinci-001 model has been instruction-tuned, yet the first version of the chain-of-thought paper reported that its chain-of-thought reasoning ability is very weak. So instruction tuning may not be the reason chain-of-thought exists; code training is the most likely reason the model can do chain-of-thought reasoning.

- PaLM has 5% code training data and can do chain-of-thought.

- The code data in the Codex paper amounts to 159G, approximately 28% of the 570G of training data of the first-generation GPT-3. code-davinci-002 and its subsequent variants can do chain-of-thought reasoning.

- In the HELM evaluation, Liang et al. (2022) conducted a large-scale evaluation of different models. They found that models trained on code have strong language reasoning capabilities, including the 12-billion-parameter code-cushman-001.

- Our work at AI2 also shows that, when equipped with complex chains of thought, code-davinci-002 is currently the best-performing model on important mathematical benchmarks such as GSM8K.

- Intuitively, procedure-oriented programming is similar to the process by which humans solve tasks step by step, and object-oriented programming is similar to the process by which humans break complex tasks down into simpler ones.

- All of the above observations are correlations between code and reasoning ability/chain-of-thought, not necessarily causation. This correlation is interesting, but it is currently an open question to be studied. At present, we do not have conclusive evidence that code is the cause of chain-of-thought and complex reasoning.

- In addition, another possible byproduct of code training is long-distance dependencies, as Peter Liu pointed out: "Next word prediction in a language is usually very local, and code usually requires longer dependencies to do things like match opening and closing brackets or refer to distant function definitions." We would further add that, because of class inheritance in object-oriented programming, code may also contribute to the model's ability to encode hierarchies. We leave the testing of this hypothesis to future work.
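As a quick check of the "approximately 28%" figure quoted in the list above, the ratio can be computed directly. We assume here that both quantities are in gigabytes (159 GB of code against 570 GB of text); the variable names are ours.

```python
# Sizes as quoted in the text; treating both as gigabytes is an assumption.
codex_code_gb = 159  # code data reported in the Codex paper
gpt3_text_gb = 570   # training data of the first-generation GPT-3

ratio = codex_code_gb / gpt3_text_gb
print(f"{ratio:.1%}")  # 27.9%
```

So the code corpus is a bit over a quarter the size of the original text corpus, which is large compared with the instruction tuning data discussed later.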

Also note some differences in details:

- text-davinci-002 vs. code-davinci-002

- code-davinci-002 is the base model, and text-davinci-002 is the product of instruction tuning code-davinci-002 (see OpenAI documentation). It is fine-tuned on the following data: (1) human-annotated instructions and expected outputs; (2) model outputs selected by human annotators.

- When there are in-context examples, code-davinci-002 is better at in-context learning; when there are none (zero-shot), text-davinci-002 performs better at zero-shot task completion. In this sense, text-davinci-002 is more in line with human expectations (since writing in-context examples for a task can be cumbersome).

- It is unlikely that OpenAI deliberately sacrificed in-context learning ability in exchange for zero-shot ability; the reduction in in-context learning ability is more of a side effect of instruction tuning. OpenAI calls this an alignment tax.

- 001 models (code-cushman-001 and text-davinci-001) vs. 002 models (code-davinci-002 and text-davinci-002)

- The 001 models mainly target pure code or pure text tasks; the 002 models deeply integrate code training and instruction tuning, and work for both code and text.

- code-davinci-002 may be the first model that deeply integrates code training and instruction tuning. The evidence: code-cushman-001 can reason but does not perform well on plain text, while text-davinci-001 performs well on plain text but is not good at reasoning. code-davinci-002 can do both.

At this stage, we have identified the critical roles of instruction tuning and code training. An important question is how to further disentangle their effects.

Specifically: do the abilities above already exist in the original GPT-3, only to be triggered/unlocked by instruction tuning and code training? Or do they not exist in the original GPT-3 and were injected by instruction tuning and code training?

If these abilities already exist in the original GPT-3, then they should also exist in OPT. To reproduce them, it may therefore be possible to apply instruction tuning and code training directly to OPT.

However, it is possible that code-davinci-002 is not based on the original GPT-3 davinci, but on a larger model than the original GPT-3. If this is the case, there may be no way to reproduce it by tuning OPT.

The research community needs to further clarify what kind of model OpenAI trained as the base model for code-davinci-002.

We have the following hypotheses and evidence:

- The base model of code-davinci-002 may not be the first generation GPT-3 davinci model.

- The original GPT-3 was trained on the C4 2016-2019 dataset, while the code-davinci-002 training set extends to the end of 2021. So it is possible that code-davinci-002 was trained on the 2019-2021 version of C4.

- The original GPT-3 has a context window of 2048 tokens. The context window of code-davinci-002 is 8192. The GPT series uses absolute positional embeddings. Directly extrapolating absolute positional embeddings without training is difficult and seriously hurts model performance (see Press et al., 2022). If code-davinci-002 is based on the original GPT-3, how did OpenAI extend the context window?

- On the other hand, whether the base model is the original GPT-3 or a model trained later, the abilities to follow instructions and to generalize zero-shot may already exist in the base model and be unlocked later via instruction tuning (rather than injected).

- This is mainly because the size of the instruction data reported in the OpenAI paper is only 77K, which is several orders of magnitude less than the pre-training data.

- Other instruction tuning papers further support this contrast in dataset size. For example, in the work of Chung et al. (2022), the instruction tuning of Flan-PaLM uses only 0.4% of the pre-training compute. Generally speaking, instruction data is significantly smaller than pre-training data.

- However, the model's complex reasoning ability may have been injected through code data during the pre-training stage.

- The scale of the code dataset differs from the instruction tuning case above. Here the amount of code data is large enough to occupy a significant part of the training data (for example, PaLM has 5% code training data).

- As mentioned above, text-davinci-001, the model before code-davinci-002, has probably not been fine-tuned on code data, so its reasoning/chain-of-thought ability is very poor, as reported in the first version of the chain-of-thought paper, sometimes even worse than code-cushman-001, which has fewer parameters.

- Perhaps the best way to distinguish the effects of code training and instruction tuning is to compare code-cushman-001, T5, and FlanT5.

- Because they have similar model sizes (11 billion and 12 billion) and similar training datasets (C4), their biggest differences are whether they were trained on code and whether they were instruction-tuned.

- There is currently no such comparison. We leave this to future research.
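The context window argument in the list above can be illustrated with a toy sketch. We assume a learned lookup table with one vector per position, which is one common way to implement absolute positional embeddings; the tiny dimension, random values, and function name are ours for illustration only.

```python
import random

MAX_POSITIONS = 2048  # context window of the original GPT-3
EMBED_DIM = 4         # toy dimension, far smaller than any real model

random.seed(0)
# One vector per position; in a real model these values are learned.
position_table = [
    [random.gauss(0.0, 0.02) for _ in range(EMBED_DIM)]
    for _ in range(MAX_POSITIONS)
]


def position_embedding(position):
    """Look up the embedding of an absolute position."""
    if not 0 <= position < len(position_table):
        raise IndexError(f"no learned embedding for position {position}")
    return position_table[position]


position_embedding(2047)  # last position seen during training: fine

try:
    position_embedding(8191)  # last position of an 8192-token window
    extrapolated = True
except IndexError:
    extrapolated = False

print(extrapolated)  # False
```

Positions beyond the table simply have no trained vector, so extending the window means adding and training new rows, which is the difficulty the text points to.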

4. text-davinci-003 and ChatGPT, the power of Reinforcement Learning from Human Feedback (RLHF)

At the current stage (December 2022), there are almost no rigorous statistical comparisons between text-davinci-002, text-davinci-003 and ChatGPT, mainly because:

- text-davinci-003 and ChatGPT are less than a month old at the time of writing.

- ChatGPT cannot be called through the OpenAI API, so trying to test it on standard benchmarks is cumbersome.

So the comparison between these models is more based on the collective experience of the research community (not very statistically rigorous). However, we believe that preliminary descriptive comparisons can still shed light on the mechanisms of the model.

We first notice the following comparison between text-davinci-002, text-davinci-003 and ChatGPT:

- All three models have been instruction-tuned.

- text-davinci-002 is a model tuned with supervised instruction tuning.

- text-davinci-003 and ChatGPT are instruction-tuned with reinforcement learning from human feedback (RLHF). This is the most significant difference between them.

This means that most of the new models' behaviors are products of RLHF.

So let's look at the abilities RLHF triggers:

- Informative responses: text-davinci-003's generations are usually longer than text-davinci-002's. ChatGPT's responses are even longer, to the point that users must explicitly ask "Answer me in one sentence" to get a more concise answer. This is a direct product of RLHF.

- Fair responses: ChatGPT usually gives very balanced answers to events involving the interests of multiple entities, such as political events. This is also a product of RLHF.

- Rejecting inappropriate questions: this is a combination of the content filter and the model's own ability triggered by RLHF; the filter filters out a portion, and the model then rejects another portion.

- Rejecting questions outside the scope of its knowledge: for example, rejecting questions about new events that occurred after June 2021 (because it was not trained on data after that). This is the most amazing part of RLHF, as it enables the model to implicitly distinguish which questions are within its knowledge and which are not.

There are two things worth noting:

- All capabilities are inherent in the model rather than injected via RLHF. What RLHF does is trigger/unlock emergent abilities. This argument mainly comes from a comparison of data size: compared with pre-training, RLHF uses far less compute and data.

- The model knows what it does not know not because rules were written, but because RLHF unlocked this ability. This is a very surprising finding, because the original goal of RLHF was to have the model generate responses that meet human expectations, which is more about generating safe sentences than about knowing what it does not know.

What’s going on behind the scenes is probably:

- ChatGPT: sacrifices in-context learning ability in exchange for the ability to model conversation history. This is an empirical observation, as ChatGPT does not appear to be as strongly affected by in-context demonstrations as text-davinci-003.

- text-davinci-003: recovers the in-context learning ability sacrificed by text-davinci-002 and improves zero-shot ability. According to the InstructGPT paper, this comes from mixing the language modeling objective into the reinforcement learning tuning stage (rather than from RLHF itself).
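The idea of mixing a language modeling objective into the reinforcement learning stage can be sketched as a per-sample objective: a reward term, a penalty for drifting from the supervised model, and a pre-training log-likelihood term. This is only a sketch in the spirit of what the InstructGPT paper describes; the function name, argument names, and the coefficient values `beta` and `gamma` are illustrative assumptions.

```python
def mixed_objective(reward, logp_rl, logp_sft, pretrain_logp, beta, gamma):
    """Per-sample objective to maximize (illustrative sketch).

    reward        : reward model score for the sampled response
    logp_rl       : log-probability of the response under the RL policy
    logp_sft      : log-probability of the response under the supervised model
    pretrain_logp : log-likelihood of a pre-training sample under the RL policy
    beta, gamma   : hypothetical weights for the penalty and the LM term
    """
    kl_penalty = beta * (logp_rl - logp_sft)  # discourages drifting from the supervised model
    rl_term = reward - kl_penalty
    lm_term = gamma * pretrain_logp           # the mixed-in language modeling objective
    return rl_term + lm_term


# Made-up numbers, only to show how the terms combine.
with_lm = mixed_objective(1.0, -2.0, -2.5, -3.0, beta=0.1, gamma=0.5)
rl_only = mixed_objective(1.0, -2.0, -2.5, -3.0, beta=0.1, gamma=0.0)
print(with_lm, rl_only)
```

With `gamma` set to zero the language modeling term vanishes and only the reward and penalty remain, which is the setting the text associates with losing in-context learning ability.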

5. Summary of the evolutionary process of GPT-3.5 at the current stage

So far, we have carefully examined all the capabilities that have appeared along the evolutionary tree. The following table summarizes the evolutionary path:

We can conclude:

- Language generation ability, basic world knowledge, and in-context learning all come from pre-training (davinci).

- The ability to store large amounts of knowledge comes from the amount of 175 billion parameters.

- The ability to follow instructions and generalize to new tasks comes from scaling up the number of instructions in instruction tuning (davinci-instruct-beta).

- The ability to perform complex reasoning likely comes from code training (code-davinci-002).

- The ability to generate neutral, objective, safe and informative answers comes from alignment with humans. Specifically:

- If it is the supervised learning version, the resulting model is text-davinci-002.

- If it is the reinforcement learning version (RLHF), the obtained model is text-davinci-003.

- Whether it is supervised or RLHF, the performance of the model cannot exceed code-davinci-002 in many tasks. This phenomenon of performance degradation due to alignment is called alignment tax.

- The dialogue ability also comes from RLHF (ChatGPT). Specifically, it sacrifices in-context learning ability in exchange for the ability to:

- Model conversation history.

- Give more informative dialogue responses.

- Reject questions that are outside the scope of the model's knowledge.

6. What GPT-3.5 cannot currently do

Although GPT-3.5 is an important step in natural language processing research, it does not have all the desirable properties envisioned by many researchers (including AI2). The following are some important properties that GPT-3.5 does not have:

- Rewrite the model's beliefs in real time: When a model expresses a belief about something, if that belief is wrong, we may have a hard time correcting it:

- An example I encountered recently is: ChatGPT insists that 3599 is a prime number, even though it admits that 3599 = 59 * 61. Also, see this Reddit example of the fastest swimming marine mammal.

- However, model beliefs appear to come in different strengths. One example is that even if I tell it that Darth Vader (the character from the Star Wars movies) won the 2020 election, the model will still think that the current president of the United States is Biden. But if I change the election year to 2024, it thinks that Darth Vader is the president in 2026.

- Formal Reasoning: The GPT-3.5 series cannot reason in strictly formal systems such as mathematics or first-order logic:

- In the natural language processing literature, the definition of the word "reasoning" is often unclear. But if we look at it through the lens of ambiguity, questions can be (a) very ambiguous, requiring no reasoning; (b) somewhat logical, but tolerant of vagueness in places; or (c) very rigorous, allowing no ambiguity.

- The model handles type (b) reasoning, which tolerates ambiguity, quite well. Examples are:

- Generating a recipe for tofu pudding. When making tofu pudding, it is acceptable to be a little vague in many of the steps, such as whether to make it salty or sweet. As long as the overall steps are roughly correct, the tofu pudding will be edible.

- Ideas for proving mathematical theorems. A proof idea is an informal step-by-step solution expressed in language, and the rigorous derivation of each step does not need to be spelled out. Proof ideas are often used in mathematics teaching: as long as the teacher gives a roughly correct overall outline, students can roughly understand it. The teacher then assigns the specific proof details to the students as homework, with the answers omitted.

- GPT-3.5 cannot do type (c) reasoning (reasoning that tolerates no ambiguity).

- An example is a strict mathematical proof, in which intermediate steps cannot be skipped, blurred, or wrong.

- But whether this kind of strict reasoning should be done by language models or symbolic systems remains to be discussed. One example is that instead of trying to get GPT to do three-digit addition, just call Python.

- Search from the Internet: The GPT-3.5 series (temporarily) cannot directly search the Internet.

- However, the WebGPT paper published in December 2021 allows GPT to call a search engine, so retrieval capabilities have already been tested within OpenAI.

- The point to distinguish here is that GPT-3.5 has two important but different capabilities: knowledge and reasoning. Generally speaking, it would be nice if we could offload the knowledge part to an external retrieval system and let the language model focus only on reasoning. Because:

- The internal knowledge of the model is always cut off at some point in time, while the model always needs the latest knowledge to answer the latest questions.

- Recall that we have discussed that 175 billion parameters are heavily used to store knowledge. If we could offload the knowledge outside of the model, the model parameters could be reduced so much that eventually it could even run on mobile phones (crazy idea, but ChatGPT is sci-fi enough, who knows what the future holds).
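The "just call Python" point and the 3599 example from the list above can be made concrete with a few lines of exact computation. This is a sketch of what a symbolic tool does trivially; the helper names are ours.

```python
def smallest_factor(n):
    """Return the smallest factor of n greater than 1 (n itself if n is prime)."""
    d = 2
    while d * d <= n:
        if n % d == 0:
            return d
        d += 1
    return n


def is_prime(n):
    """Exact primality check by trial division."""
    return n >= 2 and smallest_factor(n) == n


print(is_prime(3599))         # False
print(smallest_factor(3599))  # 59, and 3599 // 59 == 61
print(123 + 456)              # 579, three-digit addition done exactly
```

A deterministic check like this cannot "insist" on a wrong belief, which is the argument for routing strictly formal steps to an external tool rather than to the language model.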

7. Conclusion

In this blog post, we carefully examined the range of capabilities of the GPT-3.5 series and traced the origins of all their emergent capabilities.

The original GPT-3 model gained generation ability, world knowledge, and in-context learning through pre-training. The instruction tuning branch then gained the ability to follow instructions and generalize to unseen tasks. The branch trained on code gained the ability to understand code and, as a byproduct of code training, potentially the ability to perform complex reasoning.

Combining these two branches, code-davinci-002 appears to be the strongest GPT-3.5 model, with all the powerful capabilities. Subsequently, supervised instruction tuning and RLHF trade model capability for alignment with humans, that is, the alignment tax. RLHF enables the model to generate more informative and unbiased answers while rejecting questions outside its knowledge range.

We hope this article can help provide a clear picture of GPT evaluation and trigger some discussions about language models, instruction tuning, and code tuning. Most importantly, we hope this article can serve as a roadmap for reproducing GPT-3.5 within the open source community.

FAQ

- Are the statements in this article more like a hypothesis or a conclusion?

- That complex reasoning ability comes from code training is a hypothesis we tend to believe.

- That the ability to generalize to unseen tasks comes from large-scale instruction tuning is a conclusion supported by at least 4 papers.

- That GPT-3.5 comes from another large base model rather than the 175-billion-parameter GPT-3 is an educated guess.

- That all these capabilities already exist and are unlocked, rather than injected, by instruction tuning (whether supervised or reinforcement learning) is a strong hypothesis, strong enough that it is hard not to believe. This is mainly because the amount of instruction tuning data is several orders of magnitude less than the amount of pre-training data.

- Conclusion = lots of evidence to support the validity of these claims; hypothesis = positive evidence but not strong enough; educated guess = no hard evidence, but some factors point in that direction

- Why are other models like OPT and BLOOM not as powerful?

- For OPT, it is probably because the training process was too unstable.

- The situation of BLOOM is unknown.
