Author | Mush Honda

Planner | Yun Zhao

At the end of last year, as if overnight, artificial intelligence became popular again. First came the lifelike images generated by DALL-E 2, including the unforgettable "astronaut riding a horse." Next came Stable Diffusion, an openly released text-to-image model. Last came the trump card: ChatGPT, which was a sensation from the day it debuted and earned OpenAI a great deal of prestige. Even during the Spring Festival, many people online lamented that their jobs were "in danger," fearing that this wave of artificial intelligence really would take their work away. But will reality turn out that way? Will AI usher in a renaissance for computer application software? This article uses one specific application area to explain the three stages of AI becoming a practical tool, and gives several reasons why AI should not and cannot become completely autonomous. The author also describes what a realistic role for AI looks like. We need to be alert, but not panic.

"With artificial intelligence, delivery time will be reduced from minutes to seconds!" "Suppliers and customers will return to the era of cheap and user-friendly experiences, and computer application software will usher in a moment of renaissance!"

Unfortunately, cheap storage, fast processing, readily available AI training equipment, and the Internet have turned this promise into exaggerated hype.

Take software testing as an example. If you search for "application of artificial intelligence in software testing", the results are a series of "magical" solutions promised to potential buyers. Many of them offer ways to reduce manual labor, improve quality, and reduce costs. Strangely, some vendors promise that their AI solutions can solve software testing problems perfectly, and the wording is even more startling: the "holy grail" of software testing. The idea is to free people from the difficulties and worries of the software development cycle and to make the testing cycle shorter, more effective, and simpler.

Can AI really become the all-powerful "Holy Grail"? And should we let AI completely replace humans? It is time to put a stop to this almost absurd hype.

1. The Real Truth

In the real world, removing humans from the software development process is far more complex and daunting than the hype suggests. Whether a team uses waterfall, rapid application development, DevOps, agile, or another methodology, people remain at the center of software development because they define the boundaries and potential of the software they create. In software testing, business requirements are often unclear and constantly changing, so the "goals" keep moving. Users' demands for usability change, and even developers' expectations of what is possible with software change.

The original standards and methods for software testing (including the term quality assurance) came from product testing in manufacturing. In manufacturing, the product is well defined and the testing is more mechanical, whereas software is malleable and changes frequently. Software testing does not lend itself to this uniform, machine-like approach to quality assurance.

In modern software development, there are many things that developers cannot predict or know in advance. For example, user experience (UX) expectations may have changed after the first iteration of the software. People come to expect faster screen load times or faster scrolling, and users no longer want to scroll down long screens because that design has fallen out of favor.

For various reasons, artificial intelligence can never predict or test on its own what even its creator cannot predict. Therefore, in the field of software testing, it is impossible to have truly autonomous artificial intelligence. Creating a software testing “terminator” may pique the interest of marketers and potential buyers, but such deployments are destined to be a mirage. Instead, software testing autonomy makes more sense in the context of AI and humans working together.

2. AI needs to go through three maturity stages

AI development in software testing can be divided into three maturity stages:

- Operational stage

- Process stage

- Systemic stage

Currently, the vast majority of AI-based software testing is at the operational stage. At its most basic, operational testing involves creating scripts that mimic routines testers perform hundreds of times. The “AI” in this example is far from smart, but it can help speed up script creation, repetitive execution, and the storage of results.
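To make the operational stage concrete, here is a minimal sketch of a scripted routine that is executed repeatedly and whose results are stored. The two check functions and the `run_script` helper are hypothetical names invented for this illustration; they stand in for whatever real UI or API interactions a tester would automate.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class StepResult:
    run: int       # which repetition of the script produced this result
    step: str      # name of the scripted check
    passed: bool   # outcome of the check


def login_accepts_known_user() -> bool:
    # Hypothetical stand-in for a real login interaction.
    return "alice" in {"alice", "bob"}


def cart_total_is_correct() -> bool:
    # Hypothetical stand-in for a real shopping-cart check.
    return sum([5, 10]) == 15


def run_script(steps: List[Callable[[], bool]], repeats: int) -> List[StepResult]:
    """Execute every scripted step `repeats` times and store each outcome."""
    results = []
    for run in range(1, repeats + 1):
        for step in steps:
            results.append(StepResult(run, step.__name__, step()))
    return results


results = run_script([login_accepts_known_user, cart_total_is_correct], repeats=3)
failures = [r for r in results if not r.passed]
print(f"{len(results)} checks executed, {len(failures)} failed")
```

Nothing here is intelligent: the script only replays the same routine and records what happened, which is the kind of work the operational stage takes off a tester's hands.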

Process AI is a more mature version of operational AI and is used by testers for test generation. Other uses may include test coverage analysis and recommendations, defect root cause analysis, effort estimation, and test environment optimization. Process AI can also help create synthetic data based on patterns and usage.

The benefits of process AI are obvious: it provides an extra pair of “eyes” that offsets some of the risk testers take on when they set their test execution strategies. In practice, process AI can make it easier to test modified code.

- With manual testing, it’s common to see testers retest the entire application, looking for unintended consequences when the code is changed.

- Process AI, on the other hand, can recommend testing a single unit (or a limited area of impact) rather than a wholesale retest of the entire application.

At this level of AI, we see clear advantages in development time and cost.
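The contrast between retesting everything and testing only a limited area of impact can be sketched as a simple change-impact selection. This is an illustration under stated assumptions, not how any particular tool works: the module names, the `DEPENDS_ON` and `TESTS_FOR` maps, and both helper functions are hypothetical.

```python
from typing import Dict, Set

# Hypothetical map: which modules each module depends on directly.
DEPENDS_ON: Dict[str, Set[str]] = {
    "checkout": {"cart", "payment"},
    "cart": {"catalog"},
    "payment": set(),
    "catalog": set(),
    "profile": set(),
}

# Hypothetical map: which tests exercise each module.
TESTS_FOR: Dict[str, Set[str]] = {
    "checkout": {"test_checkout_flow"},
    "cart": {"test_add_to_cart"},
    "payment": {"test_card_charge"},
    "catalog": {"test_search"},
    "profile": {"test_edit_profile"},
}


def impacted_modules(changed: Set[str]) -> Set[str]:
    """Return the changed modules plus every module that depends on them,
    directly or transitively."""
    impacted = set(changed)
    grew = True
    while grew:
        grew = False
        for module, deps in DEPENDS_ON.items():
            if module not in impacted and deps & impacted:
                impacted.add(module)
                grew = True
    return impacted


def select_tests(changed: Set[str]) -> Set[str]:
    """Recommend only the tests that cover the area of impact."""
    selected: Set[str] = set()
    for module in impacted_modules(changed):
        selected |= TESTS_FOR.get(module, set())
    return selected


recommended = select_tests({"payment"})
print(sorted(recommended))  # ['test_card_charge', 'test_checkout_flow']
```

A change to `payment` triggers two of the five tests instead of all of them. The recommendation is only as good as the dependency map behind it, so a tester would still review the selection before trusting it.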

However, when the focus shifts to the third stage, systemic AI, the picture becomes hazy, because the future may turn out to be a slippery slope and an unfulfilled promise.

3. Unreliable systemic AI

One reason systemic (or completely autonomous) AI testing is impossible, at least for now, is that the AI would require extensive training. Testers can be confident that process AI will suggest a unit test that adequately safeguards software quality. With systemic AI, however, testers cannot know with a high degree of confidence that the software will meet all requirements.

If this level of artificial intelligence were truly autonomous, it would have to test every requirement imaginable, even those humans cannot imagine. Humans would then need to review the assumptions and conclusions of the autonomous AI. Confirming that these assumptions are correct takes a great deal of time and effort, because it requires highly credible evidence.

Autonomous software testing can never be fully realized, because people will not trust it, and without that trust the goal and premise of complete autonomy are lost.

4. Artificial intelligence needs training

Although fully autonomous artificial intelligence is a myth, artificial intelligence that supports and extends human efforts at software quality is a worthy goal. In this case, humans support the AI: testers still need to be patient in supervising, correcting, and teaching the AI and the ever-evolving training sets it relies on. The challenge is how to train the AI while assigning risk to the various bugs in the software under test. This training must be ongoing and is not limited to the testing field. Self-driving car makers, for example, train artificial intelligence to distinguish a person crossing the street from a person riding a bicycle.

Testers must train and test AI software on past data to build their confidence in the AI’s capabilities. At the same time, truly autonomous AI would need to predict future conditions in its testing, whether driven by developers or by users, which is something it cannot do from historical data. Instead, trainers tend to train AI on data sets that reflect their own biases. These biases limit what the AI can explore, just as blinders keep a horse from straying from its established path for the sake of certainty. The more biased the AI appears, the less credible it becomes. The best training an AI can receive is to process risk probabilities and derive risk mitigation strategies that are ultimately evaluated by humans.

5. Risk Mitigation Measures

In the final analysis, software testing is about the tester’s sense of confidence. Testers measure and evaluate the possible consequences of the initial implementation, as well as of code changes that may cause problems for developers and users. But it is undeniable that even if testing has explored every possible way an application might crash, reliability cannot reach 100%. There is an element of risk in all software testing, whether it is performed manually or automatically.

Testers must decide test coverage based on the likelihood that the code will cause problems. They must also use risk analysis to decide which areas outside that coverage deserve attention. Even if AI determines and displays the relative probability of software failure at any point in the chain of user activity, testers still need to confirm the calculations manually.
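One common way to turn "likelihood that the code will cause problems" into a coverage decision is to score each area as probability times impact and spend the testing budget on the highest scores. The sketch below assumes that scoring rule; the `Area` class, the `plan_coverage` helper, and all the numbers are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Area:
    name: str
    failure_probability: float  # estimated likelihood of a defect, 0.0 to 1.0
    impact: int                 # consequence if it fails, 1 (minor) to 5 (severe)

    @property
    def risk(self) -> float:
        # Assumed scoring rule: risk = probability x impact.
        return self.failure_probability * self.impact


def plan_coverage(areas: List[Area], budget: int) -> List[str]:
    """Return the names of the `budget` highest-risk areas, riskiest first."""
    ranked = sorted(areas, key=lambda a: a.risk, reverse=True)
    return [a.name for a in ranked[:budget]]


# Hypothetical estimates, for example produced by a model and reviewed by a tester.
areas = [
    Area("payment", 0.10, 5),
    Area("search", 0.30, 2),
    Area("profile", 0.05, 1),
    Area("checkout", 0.20, 4),
]

plan = plan_coverage(areas, budget=2)
print(plan)  # ['checkout', 'search']
```

The ranking is only a proposal. As the text notes, the probabilities feeding it still have to be confirmed by a person, and areas left outside the budget remain a risk the tester has knowingly accepted.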

Artificial intelligence opens up possibilities for software continuity, but those possibilities are shaped by historical bias. There is still no high level of confidence in AI-generated risk assessments or in its prescriptions for reducing risk.

AI-enabled software testing tools should be practical and effective in order to help testers produce realistic results while relieving testers of manual labor.

The most exciting (and potentially disruptive) deployment of AI in software testing is at the second level of AI maturity: process AI. As one researcher at Katalon noted, “The biggest practical use of AI applied to software testing will be at the process level, the first stage of autonomous test creation. This will be when I am able to create automated tests that are created by me and can also serve me.”

Claims that autonomous, self-directed artificial intelligence will replace all human participation in the software testing process are hype. It is more realistic and more desirable to expect AI to augment and complement human efforts and to shorten testing times.

Reference link: https://dzone.com/articles/ai-in-software-testing-the-hype-the-facts-the-pote

The above is the detailed content of The AI that is so hyped is so exciting!. For more information, please follow other related articles on the PHP Chinese website!

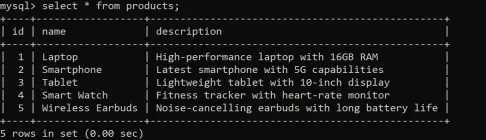

How to Use Aliases in SQL? - Analytics VidhyaApr 21, 2025 am 10:30 AM

How to Use Aliases in SQL? - Analytics VidhyaApr 21, 2025 am 10:30 AMSQL alias: A tool to improve the readability of SQL queries Do you think there is still room for improvement in the readability of your SQL queries? Then try the SQL alias! Alias This convenient tool allows you to give temporary nicknames to tables and columns, making your queries clearer and easier to process. This article discusses all use cases for aliases clauses, such as renaming columns and tables, and combining multiple columns or subqueries. Overview SQL alias provides temporary nicknames for tables and columns to enhance the readability and manageability of queries. SQL aliases created with AS keywords simplify complex queries by allowing more intuitive table and column references. Examples include renaming columns in the result set, simplifying table names in the join, and combining multiple columns into one

Code Execution with Google Gemini FlashApr 21, 2025 am 10:14 AM

Code Execution with Google Gemini FlashApr 21, 2025 am 10:14 AMGoogle's Gemini: Code Execution Capabilities of Large Language Models Large Language Models (LLMs), successors to Transformers, have revolutionized Natural Language Processing (NLP) and Natural Language Understanding (NLU). Initially replacing rule-

Tree of Thoughts Method in AI - Analytics VidhyaApr 21, 2025 am 10:11 AM

Tree of Thoughts Method in AI - Analytics VidhyaApr 21, 2025 am 10:11 AMUnlocking AI's Potential: A Deep Dive into the Tree of Thoughts Technique Imagine navigating a dense forest, each path promising a different outcome, your goal: discovering hidden treasure. This analogy perfectly captures the essence of the Tree of

How to Implement Normalization with SQL?Apr 21, 2025 am 10:05 AM

How to Implement Normalization with SQL?Apr 21, 2025 am 10:05 AMIntroduction Imagine transforming a cluttered garage into a well-organized, brightly lit space where everything is easily accessible and neatly arranged. In the world of databases, this process is called normalization. Just as a tidy garage improve

Delimiters in Prompt EngineeringApr 21, 2025 am 10:04 AM

Delimiters in Prompt EngineeringApr 21, 2025 am 10:04 AMPrompt Engineering: Mastering Delimiters for Superior AI Results Imagine crafting a gourmet meal: each ingredient measured precisely, each step timed perfectly. Prompt engineering for AI is similar; delimiters are your essential tools. Just as pre

6 Ways to Clean Up Your Database Using SQL REPLACE()Apr 21, 2025 am 09:57 AM

6 Ways to Clean Up Your Database Using SQL REPLACE()Apr 21, 2025 am 09:57 AMSQL REPLACE Functions: Efficient Data Cleaning and Text Operation Guide Have you ever needed to quickly fix large amounts of text in your database? SQL REPLACE functions can help a lot! It allows you to replace all instances of a specific substring with a new substring, making it easy to clean up data. Imagine that your data is scattered with typos—REPLACE can solve this problem immediately. Read on and I'll show you the syntax and some cool examples to get you started. Overview The SQL REPLACE function can efficiently clean up data by replacing specific substrings in text with other substrings. Use REPLACE(string, old

R-CNN vs R-CNN Fast vs R-CNN Faster vs YOLO - Analytics VidhyaApr 21, 2025 am 09:52 AM

R-CNN vs R-CNN Fast vs R-CNN Faster vs YOLO - Analytics VidhyaApr 21, 2025 am 09:52 AMObject Detection: From R-CNN to YOLO – A Journey Through Computer Vision Imagine a computer not just seeing, but understanding images. This is the essence of object detection, a pivotal area in computer vision revolutionizing machine-world interactio

What is KL Divergence that Revolutionized Machine Learning? - Analytics VidhyaApr 21, 2025 am 09:49 AM

What is KL Divergence that Revolutionized Machine Learning? - Analytics VidhyaApr 21, 2025 am 09:49 AMKullback-Leibler (KL) Divergence: A Deep Dive into Relative Entropy Few mathematical concepts have as profoundly impacted modern machine learning and artificial intelligence as Kullback-Leibler (KL) divergence. This powerful metric, also known as re

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

PhpStorm Mac version

The latest (2018.2.1) professional PHP integrated development tool

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft