
Is it really that silky smooth? Hinton's group proposes a panoptic segmentation framework that generates large panoptic masks and switches smoothly between image and video scenes

- Forwarded by 王林 (Wang Lin)

- 2023-04-11 17:16:03

Panoptic segmentation is a fundamental vision task that aims to assign a semantic label and an instance label to every pixel of an image. Semantic labels describe the category of each pixel (e.g., sky, vertical object), and instance labels give each instance in the image a unique ID (to distinguish different instances of the same category). The task combines semantic segmentation and instance segmentation, providing rich semantic information about the scene.
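To make the two kinds of labels concrete, a panoptic mask can be stored as a semantic map and an instance map of the same size. A common convention in panoptic tooling (assumed here, not taken from the paper) packs both into one integer per pixel as `semantic * label_divisor + instance`. The `LABEL_DIVISOR` value and class IDs below are hypothetical.

```python
# Minimal sketch: pack per-pixel (semantic, instance) labels into one panoptic ID.
# LABEL_DIVISOR and the class IDs are illustrative assumptions.
LABEL_DIVISOR = 1000

def encode_panoptic(semantic, instance):
    """Combine two equally sized 2D label maps into one panoptic ID map."""
    return [
        [s * LABEL_DIVISOR + i for s, i in zip(sem_row, inst_row)]
        for sem_row, inst_row in zip(semantic, instance)
    ]

def decode_panoptic(panoptic):
    """Split a panoptic ID map back into semantic and instance maps."""
    semantic = [[p // LABEL_DIVISOR for p in row] for row in panoptic]
    instance = [[p % LABEL_DIVISOR for p in row] for row in panoptic]
    return semantic, instance

# Toy 2x3 image: class 1 = "sky" (no instances, ID 0), class 2 = "car" (two instances).
SEMANTIC = [[1, 1, 1],
            [2, 2, 2]]
INSTANCE = [[0, 0, 0],
            [1, 1, 2]]

panoptic = encode_panoptic(SEMANTIC, INSTANCE)
print(panoptic)  # [[1000, 1000, 1000], [2001, 2001, 2002]]
```

The two cars share semantic class 2 but keep distinct panoptic IDs (2001 and 2002), which is exactly the distinction instance labels add on top of semantic segmentation.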

Although the semantic label categories are fixed a priori, the instance IDs assigned to objects in an image can be permuted without affecting identification: swapping the instance IDs of two vehicles, for example, does not change the result. A neural network trained to predict instance IDs must therefore learn a one-to-many mapping, from a single image to multiple equivalent instance ID assignments. Learning one-to-many mappings is challenging, and traditional methods often rely on multi-stage pipelines that involve object detection, segmentation, and merging multiple predictions. More recently, end-to-end methods based on bipartite graph matching have been proposed; they effectively convert the one-to-many mapping into a one-to-one mapping through identity matching. However, these methods still require customized architectures, specialized loss functions, and inductive biases built in specifically for panoptic segmentation.
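The point about interchangeable IDs can be checked directly: two instance maps that differ only by a relabeling describe the same segmentation, so any loss or metric has to compare them through a matching rather than by raw ID. The sketch below finds the best one-to-one assignment by brute force over permutations (a stand-in for the Hungarian algorithm that bipartite-matching methods typically use) and scores it with mean IoU. All function names are hypothetical.

```python
from itertools import permutations

def masks_by_id(instance_map):
    """Map each instance ID to the set of (row, col) pixels it covers."""
    masks = {}
    for r, row in enumerate(instance_map):
        for c, inst_id in enumerate(row):
            masks.setdefault(inst_id, set()).add((r, c))
    return masks

def iou(a, b):
    """Intersection over union of two pixel sets."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0

def best_matching_iou(pred_map, gt_map):
    """Mean IoU under the best one-to-one assignment of predicted IDs to GT IDs.

    Brute force over permutations, fine for a handful of instances; real systems
    use the Hungarian algorithm. Assumes both maps contain the same number of IDs.
    """
    pred, gt = masks_by_id(pred_map), masks_by_id(gt_map)
    gt_ids = sorted(gt)
    best = 0.0
    for perm in permutations(sorted(pred)):
        score = sum(iou(pred[p], gt[g]) for p, g in zip(perm, gt_ids)) / len(gt_ids)
        best = max(best, score)
    return best

GT = [[1, 1, 2, 2]]
SWAPPED = [[2, 2, 1, 1]]  # same segmentation, IDs of the two "vehicles" swapped

print(GT == SWAPPED)                   # False: raw IDs differ
print(best_matching_iou(SWAPPED, GT))  # 1.0: identical after matching
```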

Recent generalist vision models such as Pix2Seq, OFA, UViM, and Unified I/O advocate general, task-agnostic frameworks that handle many tasks while being much simpler than earlier models. For example, Pix2Seq accomplishes several core vision tasks by generating a sequence of semantically meaningful tokens conditioned on an image; these models are built on Transformers trained autoregressively.
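As an illustration of what "generating a sequence of tokens" means for a vision task, here is a sketch in the spirit of Pix2Seq's object-detection encoding, where each box becomes `[ymin, xmin, ymax, xmax, class]` tokens. The bin count `NUM_BINS`, the class-token offset, and normalized coordinates in [0, 1] are assumptions chosen for illustration, not settings from this article.

```python
NUM_BINS = 100            # hypothetical number of coordinate bins
CLASS_OFFSET = NUM_BINS   # assumed: class tokens start after the coordinate tokens

def quantize(coord):
    """Map a normalized coordinate in [0, 1] to an integer bin index."""
    return min(int(coord * NUM_BINS), NUM_BINS - 1)

def boxes_to_tokens(objects):
    """Flatten [(ymin, xmin, ymax, xmax, class_id), ...] into one token sequence."""
    tokens = []
    for ymin, xmin, ymax, xmax, class_id in objects:
        tokens += [quantize(v) for v in (ymin, xmin, ymax, xmax)]
        tokens.append(CLASS_OFFSET + class_id)
    return tokens

def tokens_to_boxes(tokens):
    """Inverse mapping: bin-centre coordinates plus the class ID for each object."""
    objects = []
    for i in range(0, len(tokens), 5):
        *coords, cls = tokens[i:i + 5]
        objects.append(tuple((t + 0.5) / NUM_BINS for t in coords) + (cls - CLASS_OFFSET,))
    return objects

seq = boxes_to_tokens([(0.10, 0.20, 0.55, 0.80, 3)])
print(seq)  # [10, 20, 55, 80, 103]
```

An autoregressive Transformer is then trained to emit such sequences token by token; decoding loses at most one bin width of precision per coordinate.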

In a new paper, Google Brain researchers including Ting Chen and Geoffrey Hinton follow the same idea and formulate panoptic segmentation as a conditional discrete data generation problem.

Paper link: https://arxiv.org/pdf/2210.06366.pdf

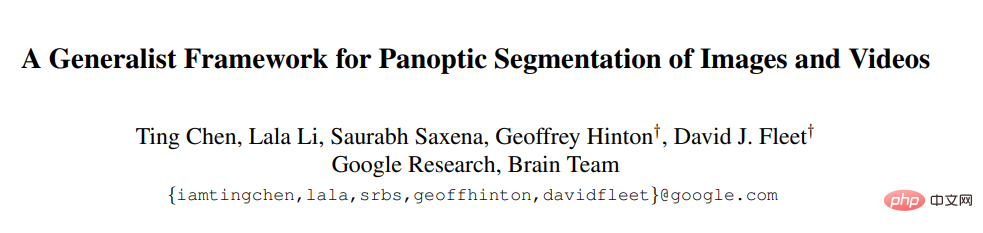

As shown in Figure 1, the researchers design a generative model for panoptic masks that produces a set of discrete tokens for each image fed into the model. The model can be applied to video data (online data/streaming media) simply by using predictions from past frames as an additional conditioning signal. In this way, the model automatically learns to track and segment objects.

Generative modeling of panoptic segmentation is very challenging because panoptic masks are discrete (categorical) and can be very large. For example, to generate a 512×1024 panoptic mask, the model must produce more than 1M discrete tokens: a semantic label and an instance label for each of the 512 × 1024 = 524,288 pixels, i.e. 1,048,576 labels in total. This is expensive for autoregressive models, because they generate tokens sequentially, and the cost scales poorly as the input size grows. Diffusion models are better at handling high-dimensional data, but they are most often applied to continuous rather than discrete domains. By representing discrete data with analog bits, the authors show that diffusion models can be trained directly on large panoptic masks, without the need to learn a latent space.
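The analog-bits idea can be sketched in a few lines: write each integer label in binary, map bits {0, 1} to reals {-1, +1} (optionally multiplied by a scale factor), let the diffusion model operate on these real values, and recover the label by thresholding at zero. The bit width and `scale` below are illustrative assumptions; the paper's exact settings are not given in this article.

```python
def int_to_analog_bits(label, num_bits, scale=1.0):
    """Encode a non-negative integer as num_bits reals in {-scale, +scale}, MSB first."""
    if not 0 <= label < 2 ** num_bits:
        raise ValueError("label does not fit in num_bits")
    bits = [(label >> k) & 1 for k in reversed(range(num_bits))]
    return [scale * (2 * b - 1) for b in bits]

def analog_bits_to_int(values):
    """Decode (possibly noisy) real values by thresholding each one at zero."""
    label = 0
    for v in values:
        label = (label << 1) | (1 if v > 0 else 0)
    return label

# Hypothetical example: 8 bits cover up to 256 distinct IDs. In a panoptic mask,
# the semantic label and the instance label of each pixel would each be encoded this way.
enc = int_to_analog_bits(5, num_bits=8)
print(enc)  # [-1.0, -1.0, -1.0, -1.0, -1.0, 1.0, -1.0, 1.0]

# Residual noise left after denoising does not change the decoded label
# as long as no value crosses zero.
noisy = [v + 0.3 * (-1) ** i for i, v in enumerate(enc)]
print(analog_bits_to_int(noisy))  # 5
```

Because each label costs only about log2(number of labels) real-valued channels rather than one channel per class, the representation stays compact enough to diffuse at full mask resolution.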

Through extensive experiments, the researchers show that their generalist method is competitive with state-of-the-art specialist methods in comparable settings.

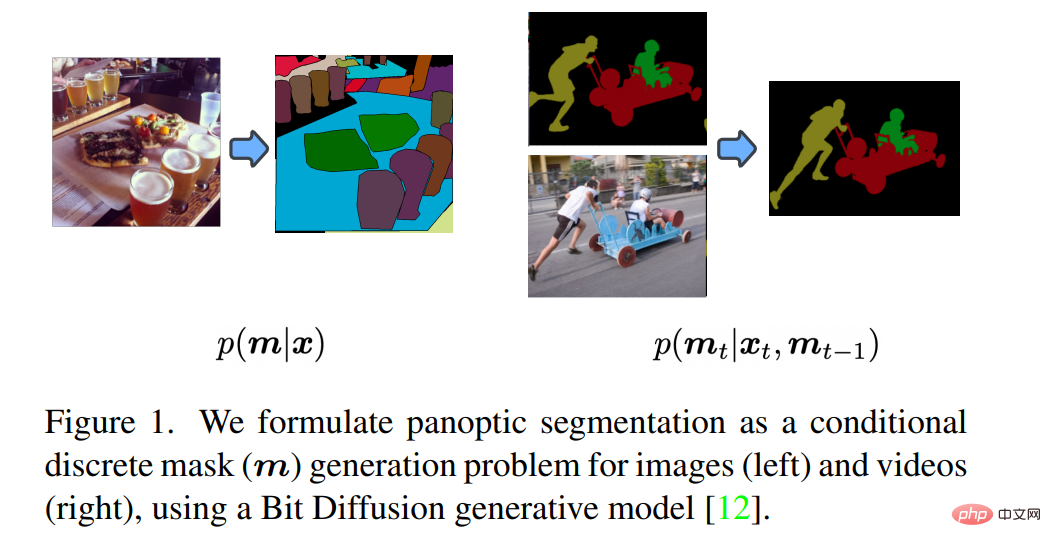

Model Architecture

Diffusion model sampling is iterative, so the network's forward pass must be run many times during inference. Therefore, as shown in Figure 2, the researchers deliberately split the network into two components: 1) an image encoder; 2) a mask decoder. The former maps raw pixel data to high-level representation vectors, and the mask decoder then iteratively reads out the panoptic mask.
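The benefit of the split is easiest to see by counting network calls. In the skeleton below, `encode_image` and `decode_step` are hypothetical stand-ins for the two components and perform no real computation; the point is only that the expensive encoder runs once per image while the decoder runs once per sampling step.

```python
class CallCounter:
    """Wraps a function and counts how many times it is called."""

    def __init__(self, fn):
        self.fn, self.calls = fn, 0

    def __call__(self, *args):
        self.calls += 1
        return self.fn(*args)

# Hypothetical stand-ins for the image encoder and the mask decoder.
encode_image = CallCounter(lambda image: {"features": image})
decode_step = CallCounter(lambda features, noisy_mask, t: noisy_mask)

def sample_panoptic_mask(image, initial_mask, num_steps):
    features = encode_image(image)         # computed once per image
    mask = initial_mask
    for step in range(num_steps):          # one decoder pass per diffusion step
        t = 1.0 - step / num_steps
        mask = decode_step(features, mask, t)
    return mask

sample_panoptic_mask(image="img", initial_mask=[0.0], num_steps=20)
print(encode_image.calls, decode_step.calls)  # 1 20
```

With a monolithic network, all 20 passes would include the backbone; splitting it means only the decoder's cost is multiplied by the number of steps.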

Pixel/Image Encoder

The encoder is a network that maps the raw image $x \in \mathbb{R}^{H \times W \times 3}$ to a feature map in $\mathbb{R}^{H' \times W' \times d}$, where $H'$ and $W'$ are the height and width of the panoptic mask. The panoptic mask can be the same size as the original image or smaller. In this work, the researchers use ResNet as the backbone, followed by Transformer encoder layers as the feature extractor. To ensure that the output feature map has sufficient resolution and contains features at different scales, and inspired by U-Net and feature pyramid networks, the researchers use convolutions with bilateral connections and upsampling operations to merge features from different resolutions. More sophisticated encoders that draw on recent advances in architecture design could be used, but this is not the main focus of the work, so the researchers use a simple feature extractor to illustrate its role in the model.
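Multi-resolution merging of this kind can be sketched as an FPN-style top-down pass: start from the coarsest map, upsample it, add it to the next finer map, and repeat. This is a simplified illustration on single-channel toy grids, assuming nearest-neighbour 2x upsampling and omitting the learned convolutions; it is not the paper's exact encoder.

```python
def upsample2x(grid):
    """Nearest-neighbour 2x upsampling of a 2D grid (list of lists)."""
    out = []
    for row in grid:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out

def add(a, b):
    """Element-wise sum of two equally sized 2D grids."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def top_down_merge(pyramid):
    """Merge a feature pyramid ordered fine -> coarse into one fine-resolution map.

    Each coarser level is upsampled and added to the next finer level, as in an
    FPN top-down path; the learned lateral and smoothing convolutions are omitted.
    """
    merged = pyramid[-1]
    for finer in reversed(pyramid[:-1]):
        merged = add(finer, upsample2x(merged))
    return merged

# Toy single-channel pyramid: 4x4 (fine), 2x2, 1x1 (coarse).
fine = [[1] * 4 for _ in range(4)]
mid = [[10, 20], [30, 40]]
coarse = [[100]]
result = top_down_merge([fine, mid, coarse])
print(result[0])  # [111, 111, 121, 121]
```

Every pixel of the output carries a contribution from each scale, which is what lets a high-resolution mask decoder see both fine detail and global context.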

Mask decoder

During inference, the decoder iteratively refines the panoptic mask conditioned on the image features. Specifically, the researchers use TransUNet as the mask decoder. The network takes as input the concatenation of the image feature map from the encoder and a noisy mask (either randomly initialized or produced by the previous decoding iteration), and outputs a refined prediction of the mask. One difference from the standard U-Net architecture used for image generation and image-to-image translation is that the U-Net in this paper places transformer decoder layers with cross-attention on top, before upsampling, to incorporate the encoded image features.
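The decoding loop can be sketched under loudly stated assumptions: a cosine noise schedule `gamma(t)`, a deterministic DDIM-style update in which the network predicts the clean mask, and a hypothetical `toy_decoder` that simply reads its answer from the features. None of these details are taken from this article; the sketch only shows the two ideas described above: the decoder input is the image features concatenated with the current noisy mask, and each step's output becomes the next step's input.

```python
import math
import random

def gamma(t):
    """Assumed cosine schedule: fraction of signal variance kept at time t in [0, 1]."""
    return math.cos(0.5 * math.pi * t) ** 2

def toy_decoder(inputs, t, mask_len):
    """Hypothetical stand-in for the mask decoder.

    Receives image features concatenated with the noisy mask and returns a
    prediction of the clean analog-bit mask. This toy ignores t and the noisy
    part and just reads the answer from the features.
    """
    features = inputs[:-mask_len]
    return [max(-1.0, min(1.0, f)) for f in features]

def sample_mask(features, mask_len, num_steps, seed=0):
    """Deterministic DDIM-style sampling with clean-mask prediction (assumed update)."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(mask_len)]  # start from pure noise
    for step in range(num_steps):
        t_now = 1.0 - step / num_steps
        t_next = 1.0 - (step + 1) / num_steps
        # Concatenate features and the current noisy mask, then predict the clean mask.
        x0_hat = toy_decoder(features + x, t_now, mask_len)
        g_now, g_next = gamma(t_now), gamma(t_next)
        # Noise implied by the current sample and the prediction.
        eps_hat = [(xi - math.sqrt(g_now) * x0) / math.sqrt(1.0 - g_now)
                   for xi, x0 in zip(x, x0_hat)]
        # Re-noise the prediction to the next, less noisy time step.
        x = [math.sqrt(g_next) * x0 + math.sqrt(1.0 - g_next) * e
             for x0, e in zip(x0_hat, eps_hat)]
    return x

# Toy features that already encode the target bits (+1, -1, +1).
out = sample_mask([1.0, -1.0, 1.0], mask_len=3, num_steps=10)
print(out)                               # [1.0, -1.0, 1.0]
print([1 if v > 0 else 0 for v in out])  # [1, 0, 1]
```

At the final step `gamma(0) = 1`, so the sample equals the decoder's last clean-mask prediction, which is then thresholded back to bits.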

Application to video

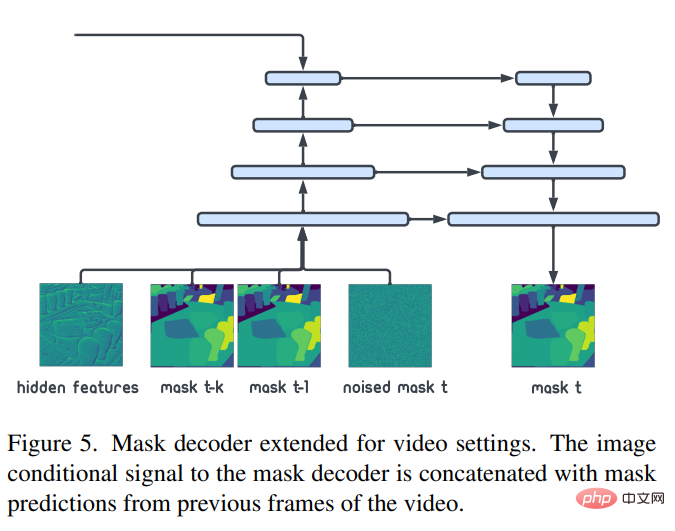

The researchers model the image-conditioned panoptic mask as $p(m \mid x)$. Given the three-dimensional mask of a video (which has an additional temporal dimension), the model can be applied directly to video panoptic segmentation. To accommodate online/streaming video settings, one can instead model $p(m_t \mid x_t, m_{t-1}, m_{t-k})$, generating the new panoptic mask from the current image and the masks at previous time steps. As shown in Figure 5, this change is achieved by concatenating the past panoptic masks ($m_{t-1}$, $m_{t-k}$) with the existing noisy mask. Apart from this minor change, everything else is the same as in the base model $p(m \mid x)$. The approach is therefore very simple: the image panoptic model can be adapted to video by fine-tuning.
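The streaming variant only changes which masks are fed in as extra conditioning. The sketch below keeps a buffer of the last `k` predictions and passes $m_{t-1}$ and $m_{t-k}$ to a hypothetical `sample_mask(frame, cond_masks)` callable, where the real model would concatenate them with the noisy mask. Using a placeholder `empty_mask` before enough history exists is an assumption for illustration.

```python
from collections import deque

def segment_stream(frames, sample_mask, k, empty_mask):
    """Online video segmentation sketch: condition frame t on m_{t-1} and m_{t-k}.

    `sample_mask(frame, cond_masks)` is a hypothetical stand-in for the image
    model's sampler. Until enough history exists, `empty_mask` fills the gaps.
    """
    history = deque(maxlen=k)  # holds the last k predicted masks, oldest first
    outputs = []
    for frame in frames:
        m_prev = history[-1] if history else empty_mask
        m_prev_k = history[0] if len(history) == k else empty_mask
        mask = sample_mask(frame, (m_prev, m_prev_k))
        history.append(mask)
        outputs.append(mask)
    return outputs

# Toy sampler that records which past masks it was conditioned on.
log = []

def toy_sampler(frame, cond_masks):
    log.append(cond_masks)
    return f"m{frame}"

masks = segment_stream(range(5), toy_sampler, k=3, empty_mask=None)
print(masks)   # ['m0', 'm1', 'm2', 'm3', 'm4']
print(log[4])  # ('m3', 'm1')
```

Because past predictions are fed back in, instance IDs chosen in earlier frames can be carried forward, which is how tracking emerges without a dedicated tracking module.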

Experimental results

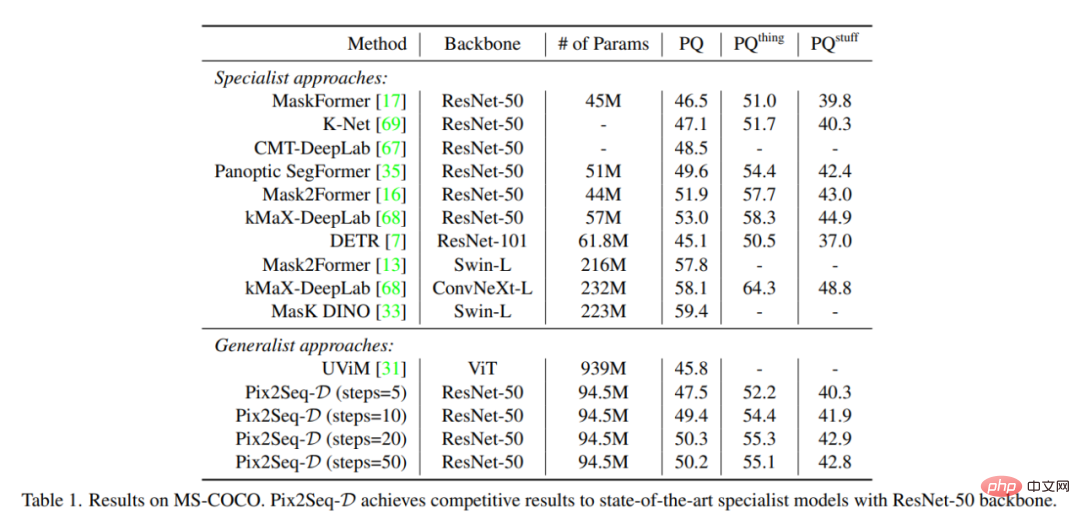

The paper compares Pix2Seq-D with two families of state-of-the-art methods: specialist methods and generalist methods. Table 1 summarizes the results on the MS-COCO dataset. With a ResNet-50 backbone, the panoptic quality (PQ) of Pix2Seq-D is competitive with state-of-the-art methods. Compared with other recent generalist models such as UViM, it performs significantly better while being more efficient.
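For reference, panoptic quality is conventionally defined per category as the sum of IoUs over matched segment pairs divided by $|TP| + \tfrac{1}{2}|FP| + \tfrac{1}{2}|FN|$, where a prediction matches a ground-truth segment when their IoU exceeds 0.5, and the final score averages over categories. The sketch below computes it for one category, representing segments as sets of hypothetical pixel indices; it omits the void and crowd handling of official evaluators.

```python
def iou(a, b):
    """Intersection over union of two non-empty pixel sets."""
    return len(a & b) / len(a | b)

def panoptic_quality(pred_segments, gt_segments):
    """PQ for a single category; each segment is a set of pixel indices.

    A predicted and a ground-truth segment match when IoU > 0.5. For
    non-overlapping segments this threshold makes the matching unique,
    so a greedy scan finds it.
    """
    matched_ious, used_gt = [], set()
    for p in pred_segments:
        for j, g in enumerate(gt_segments):
            if j not in used_gt and iou(p, g) > 0.5:
                matched_ious.append(iou(p, g))
                used_gt.add(j)
                break
    tp = len(matched_ious)          # true positives
    fp = len(pred_segments) - tp    # unmatched predictions
    fn = len(gt_segments) - tp      # unmatched ground-truth segments
    denom = tp + 0.5 * fp + 0.5 * fn
    return sum(matched_ious) / denom if denom else 0.0

gt = [{0, 1, 2, 3}, {10, 11}]
pred = [{0, 1, 2}, {20, 21}]        # one good match (IoU 0.75) and one spurious segment
print(panoptic_quality(pred, gt))   # 0.375
```

Here one true positive (IoU 0.75), one false positive and one false negative give 0.75 / (1 + 0.5 + 0.5) = 0.375, showing how PQ penalizes both missed and hallucinated segments.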

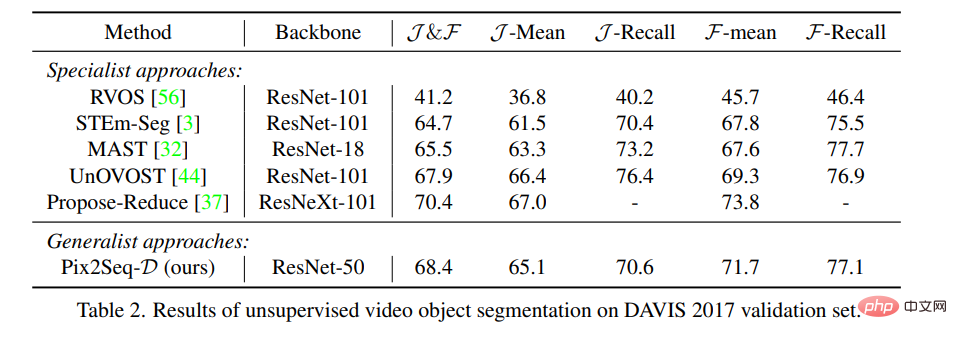

Table 2 compares Pix2Seq-D with state-of-the-art methods for unsupervised video object segmentation on the DAVIS dataset, using the standard J&F metric. Note that the baselines do not include other generalist models, since those are not directly applicable to this task. The method achieves results on par with state-of-the-art methods without any task-specific design.
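J&F averages region similarity J (mask IoU) and contour accuracy F (an F-measure between predicted and ground-truth boundaries). The sketch below computes both for a single pair of binary masks, assuming a simplified boundary (foreground pixels with a background 4-neighbour) and a fixed pixel tolerance `tol`. The official DAVIS evaluation uses its own boundary extraction and a tolerance tied to image size, and averages over objects and frames, so its numbers will differ.

```python
def boundary(mask):
    """Pixels of a mask (set of (row, col)) with a 4-neighbour outside the mask."""
    return {
        (r, c) for (r, c) in mask
        if any(n not in mask for n in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)))
    }

def within(p, points, tol):
    """True if p lies within Chebyshev distance tol of any point in points."""
    return any(abs(p[0] - q[0]) <= tol and abs(p[1] - q[1]) <= tol for q in points)

def j_and_f(pred, gt, tol=1):
    """Region similarity J, simplified boundary F-measure F, and their mean."""
    union = pred | gt
    j = len(pred & gt) / len(union) if union else 1.0
    bp, bg = boundary(pred), boundary(gt)
    if not bp and not bg:
        f = 1.0
    elif not bp or not bg:
        f = 0.0
    else:
        precision = sum(within(p, bg, tol) for p in bp) / len(bp)
        recall = sum(within(g, bp, tol) for g in bg) / len(bg)
        total = precision + recall
        f = 2 * precision * recall / total if total else 0.0
    return j, f, (j + f) / 2

gt = {(r, c) for r in range(4) for c in range(4)}  # 4x4 square
pred = {(r, c + 1) for (r, c) in gt}                # same square shifted right by 1
j, f, jf = j_and_f(pred, gt, tol=1)
print(f"J={j:.2f} F={f:.2f} J&F={jf:.2f}")  # J=0.60 F=1.00 J&F=0.80
```

The one-pixel shift costs 40% of J but nothing in F at this tolerance, which is why the benchmark reports both: J measures region overlap, F measures how well contours are placed.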

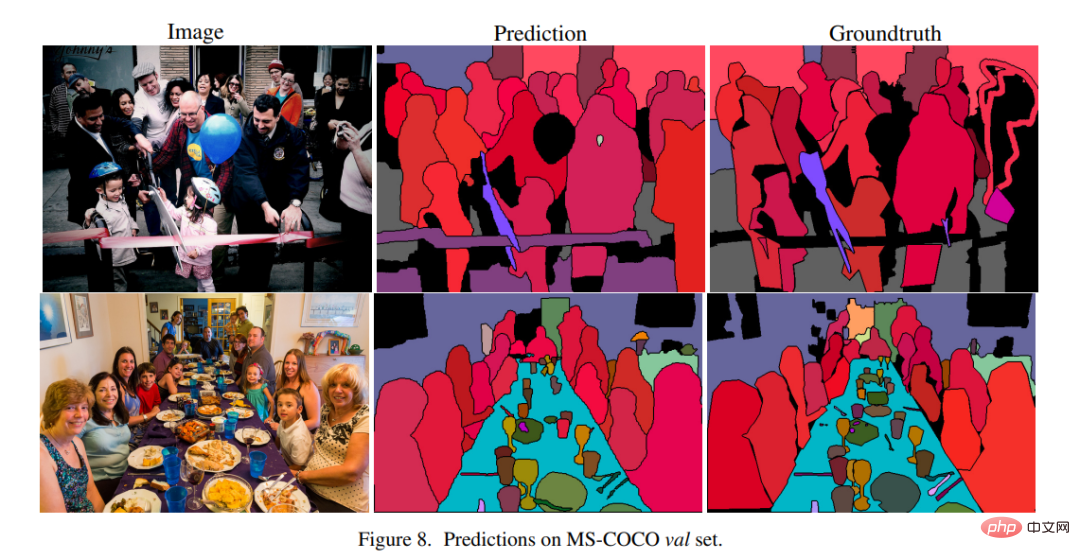

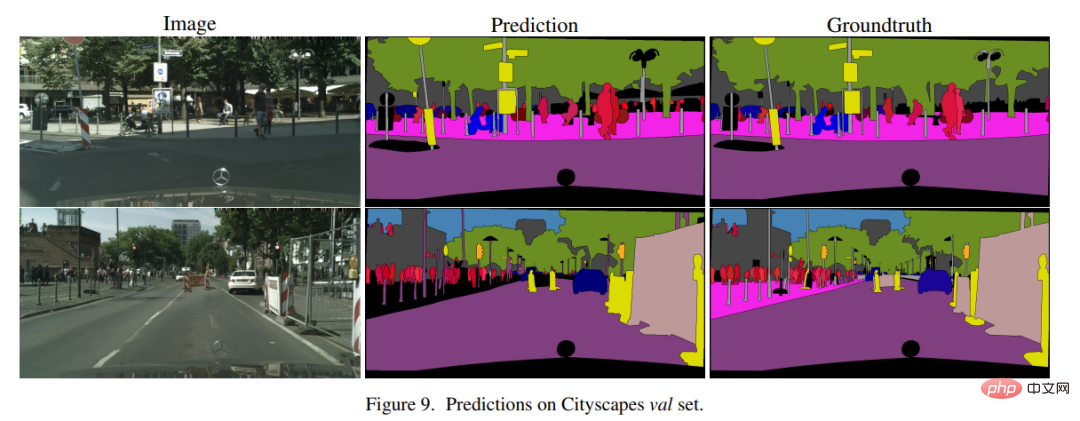

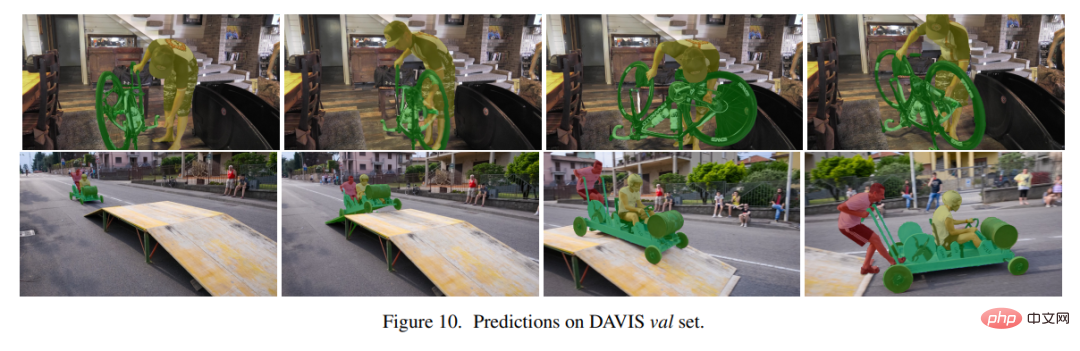

Figures 8, 9, and 10 show example results of Pix2Seq-D on MS-COCO, Cityscapes, and DAVIS.
