Home >Technology peripherals >AI >DeepMind is back on Science! AI 'Wall Breaker' plays tricks to defeat human masters

DeepMind is back on Science! AI 'Wall Breaker' plays tricks to defeat human masters

- WBOYWBOYWBOYWBOYWBOYWBOYWBOYWBOYWBOYWBOYWBOYWBOYWBforward

- 2023-04-10 22:53:11873browse

Recently, DeepMind’s AI agent DeepNash successfully defeated professional human players in Stratego and successfully ranked among the Top 3.

On December 1, the paper was officially published in Science.

Paper address: http://www.science.org/doi/10.1126/science.add4679

Use this AI to pay tribute to John Nash

In today’s era, game-playing AI has developed to a whole new stage.

In the past, many scientists used chess and Go to train AI, but DeepMind used Stratego this time, which is a classic board game that is more complex than chess and Go, and more clever than poker.

And this AI agent named DeepNash learned Stratego from scratch by playing against itself.

Now, DeepNash ranks among the top three in history among human experts on Gravon, the world’s largest online Stratego platform.

#DeepNash adopts a brand-new playing method, based on game theory and model-free deep reinforcement learning.

It can be seen that this name is also intended to pay tribute to the famous American mathematician John Nash.

The Nash equilibrium he proposed, also known as non-cooperative game equilibrium, is a very important part of game theory.

What’s the secret of Stratego?

Board games have historically been a benchmark for progress in AI because they allow us to study how humans and machines formulate and execute strategies in controlled environments.

And what is the mystery of this Stratego?

The difference from chess and Go is that Stratego is an incomplete information game: players cannot directly observe the identity of their opponent's pieces.

Because of this complexity, the AI-based Stratego system is often at the amateur level, no matter how hard it works, and cannot reach the level of "experts".

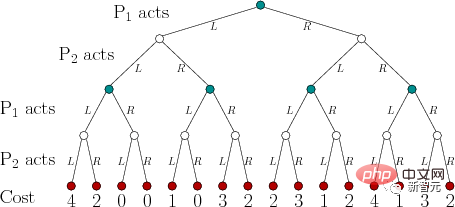

In the past, the reason why various AIs have achieved great success in games and completely defeated humans is because of an AI technology called "Game Tree Search".

Although "Game Tree Search" can kill all parties in various games where the information is fully grasped, it is somewhat helpless for games like Stratego because of its Not scalable enough.

At this point, DeepNash has completely defeated game tree search.

In fact, DeepNash has mastered the value of Stratego, which has far exceeded the game itself.

The real world is often very complex and information is limited. Truly advanced AI systems face environments like Stratego.

DeepNash successfully showed us how AI can successfully balance results and solve complex problems under uncertainty.

How to play Stratego

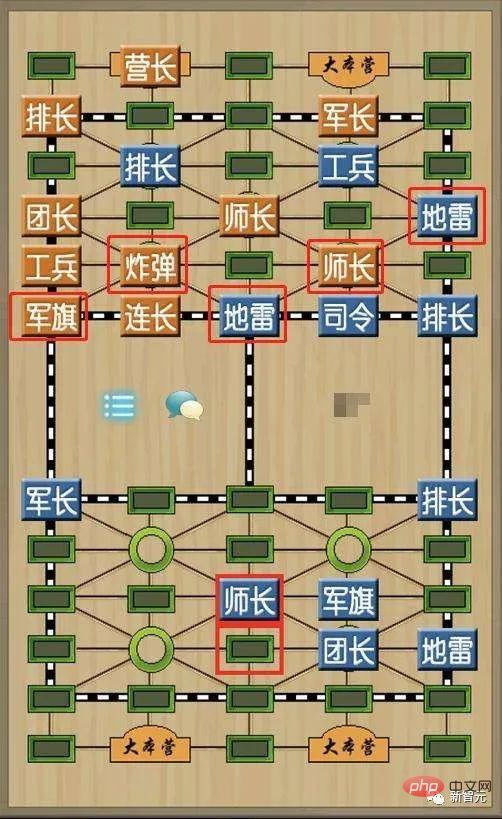

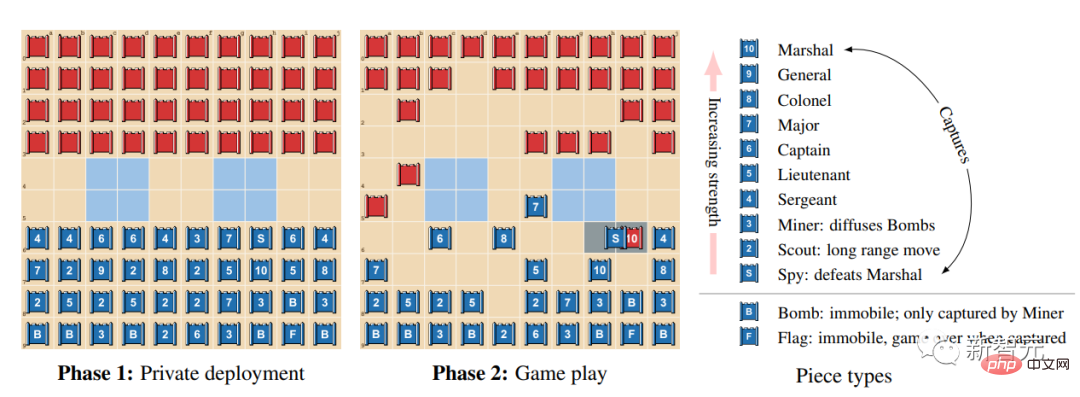

Stratego is a turn-based capture the flag game. In the game, players need to bluff, use roundabout tactics, collect information, and operate skillfully.

It is a zero-sum game, so any gain for one player represents an equal amount of loss for the opponent.

It sounds very similar to our military chess.

Stratego differs from military chess in that it has a larger number of chess pieces, more military ranks, a simpler chessboard design, and no railroads, camps, or referees.

When both sides set up a formation, all chess pieces should be upright and cannot be seen by the other side.

After the formation is completed, the red side moves first, and then takes turns to move one piece.

Among the chess pieces, the military flag and mines cannot be moved. The scouts can move any square horizontally and vertically, but cannot cross the chess pieces. The other chess pieces can only move one square horizontally or vertically.

When the chess pieces of both sides are in the same grid, they are uncovered together and judged by size. The winning chess piece is put back to its original position, facing backward, and the losing chess piece is removed.

Stratego's victory method is similar to Chinese military chess. Victory is achieved by capturing the opponent's military flag or destroying all moving chess pieces.

Why is Stratego so challenging for AI? Part of the reason is that it is a game of imperfect information.

The two players in Stratego are hidden from each other when arranging their 40 pieces into the starting formation.

Because players do not have access to the same knowledge, they need to balance all possible outcomes when making any decision.

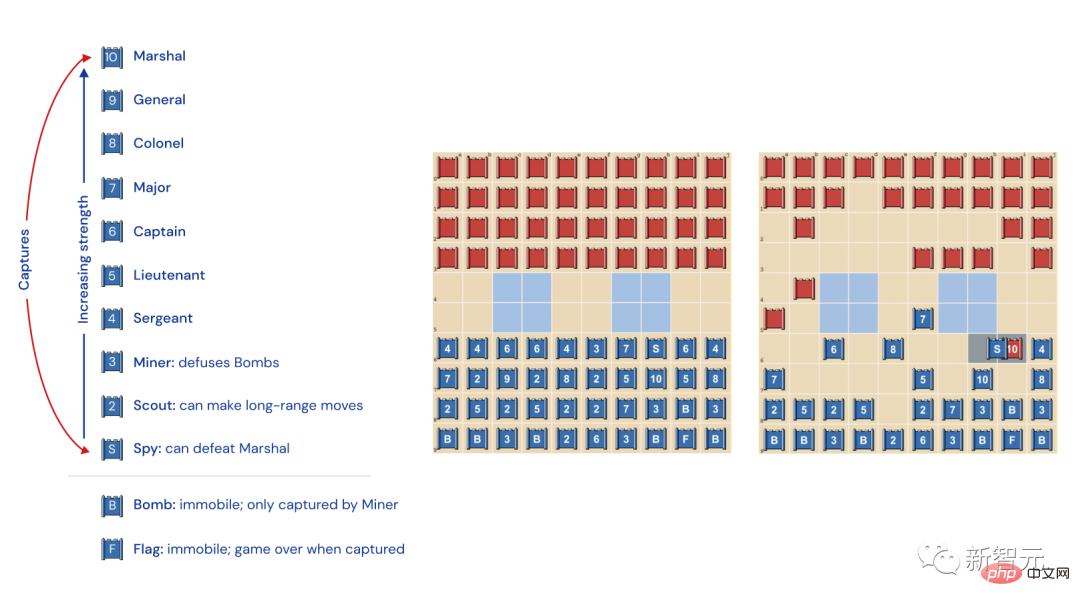

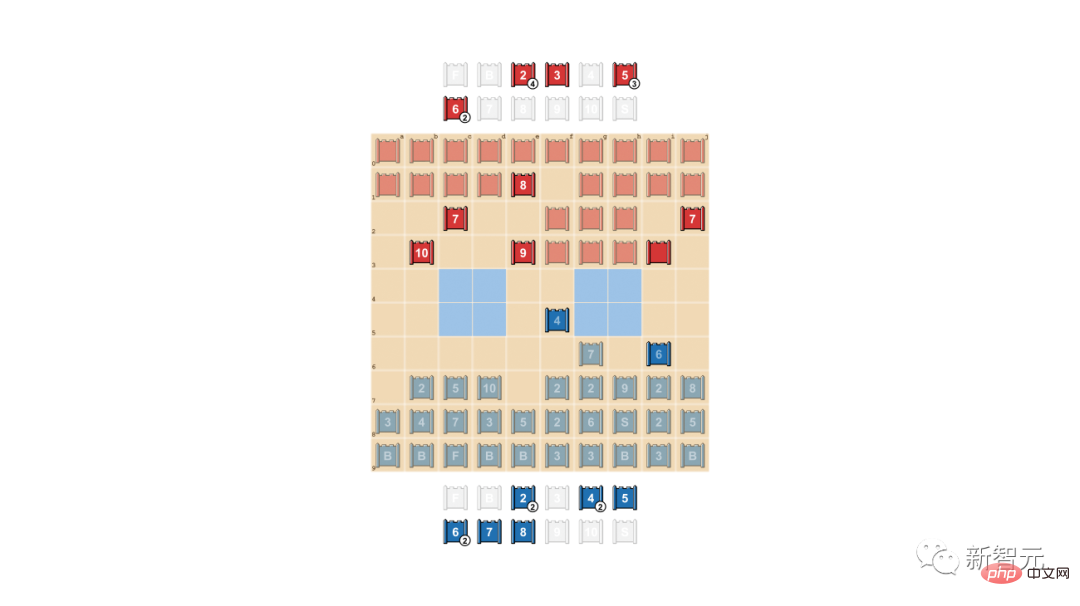

Types and rankings of Stratego chess pieces

Left: Ranking of chess pieces. In the game, the piece with higher military rank wins, with the only exception being 10 (Marshal) who is attacked by a spy; the bomb always wins, with the only exception being captured by a miner.

Middle: Possible starting formations. The flag is to be safely tucked away at the back, with bombs on the sides providing protection. The two light blue areas are "lakes" and must never be entered.

Right: A game in progress. As you can see, the blue team's spy captures the red team's marshal.

This game stumped AlphaZero

In Stratego, information is hidden.

Only when encountering other players, the identity of the opponent's pieces will be revealed.

The difference between chess and Go is that they are "perfect information games" because both players know exactly the location and identity of each chess piece.

DeepMind’s AlphaZero has always performed well in perfect information games, but in Stratego, it was stumped.

In chess, AlphaZero surpassed Stockfish after 4 hours; in shogi, AlphaZero surpassed Elmo after 2 hours; and in Go, AlphaZero surpassed Elmo in 30 AlphaGo, which surpassed AlphaGo and defeated Lee Sedol an hour later.

Stratego is more similar to Texas Hold'em and requires human-like abilities - humans need to make decisions with incomplete information and need to bluff.

American writer Jack London once pointed out: "In life, we do not always hold good cards, but sometimes, we can play well with a bad hand."

In fact, many AIs are also very good at playing poker, but when they face Stratego, they are confused - the process of this game is too long!

To win, players need to make hundreds of moves. Therefore, reasoning in the game must be based on a large number of continuous actions. In this process, it is difficult to clearly see how each action will affect the final result.

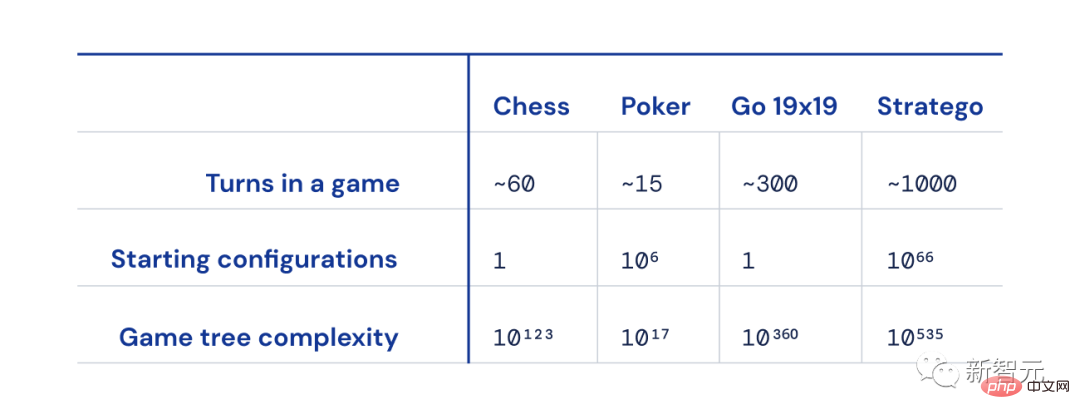

Size difference between chess, poker, go and strategy

And, compared to chess, go and poker, possible games The number of states ("game tree complexity") is off the charts and extremely difficult to solve.

And this is why Stratego is so exciting—it represents a decades-long challenge in the AI community.

Stratego: The high ground for AI to conquer

Over the years, how to make artificial intelligence stand out in the Stratego game has become the focus of AI researchers.

There are two main difficulties in defeating human players in this game.

First of all, the game tree of this game has 10 535th power states, that is, there are 10 535th power possible layouts in a game. In contrast, there are only 10 possible layouts in Go.

Secondly, in Stratego, artificial intelligence needs to reason about the opponent's deployment strategy for more than 10 to the 66th power, while poker only has a thousand possible card pairs.

Therefore, it is not easy to crack the complicated layout of Stratego. How to defeat human Stratego players is an unprecedented challenge faced by AI researchers.

How to completely defeat other AI? Relying on the core weapon of R-NaD

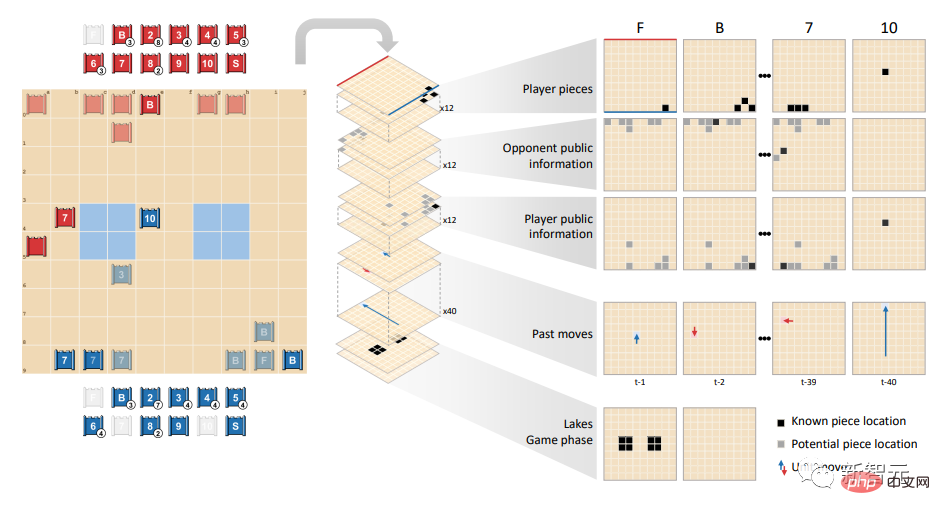

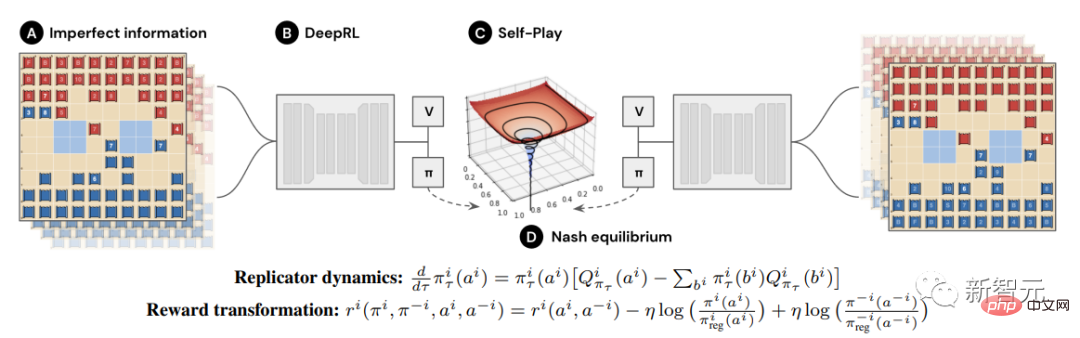

The reason why DeepNash has completely surpassed other AIs is because it adopts a novel method based on a combination of game theory and model-free deep reinforcement learning.

"No model" means that DeepNash does not try to explicitly simulate the opponent's state in the game.

Especially in the early stages of the game, when DeepNash knows little about the opponent's pieces, this modeling, even if it is possible to complete, has a high probability of being invalid.

Moreover, because Stratego’s game tree is so complex, DeepNash cannot use the Monte Carlo tree search used by other AIs when playing games. The latter is the key to AI's landmark achievements in less complex board games and poker.

It can be seen that although the equilibrium strategy can play a role in a complete information game in which both parties take turns to act, it is insufficient in an incomplete information game.

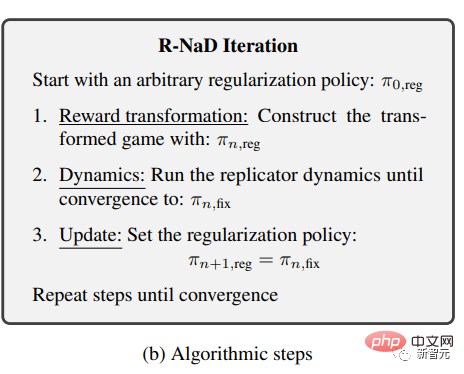

DeepNash adopts a new game theory algorithm idea - Regularized Nash Dynamic Programming (Regularized Nash Dynamic, R-NaD).

#This model-free reinforcement learning algorithm is the core of DeepNash.

It guides DeepNash and makes its learning behavior develop in the direction of Nash equilibrium.

DeepNash combines R-NaD with deep neural network architecture and converges to Nash equilibrium.

Includes three steps: reward transformation, dynamic planning (dynamics) and update iteration (udate).

The research team repeatedly applied these three steps until a series of fixed points were generated to prove that the algorithm converged to the Nash equilibrium of the original game.

When playing against the strongest Stratego robots (including several winners of the Computer Strategy World Championship), DeepNash has a win rate of 97% and often achieves a 100% win rate.

On the Gravon gaming platform, DeepNash achieved a winning rate of 84% against top human players, ranking among the top three in history.

Of course, Nash equilibrium cannot be reached through game theory without restrictions in the game, because the player's winning rate cannot be guaranteed in this way.

The equilibrium strategy is only fully applicable in games with complete information. In games with incomplete information, other strategies are needed to win unexpectedly.

In the initial formation, DeepNash adopted some extraordinary gameplay. In order to become hard to exploit, DeepNash developed an unpredictable strategy.

This means that the initial deployment must be flexible enough to prevent the opponent from discovering his own pattern in the subsequent series of matches.

In the game stage, DeepNash will also try its best to randomize (randomise) between seemingly identical actions to prevent itself from becoming exploitable.

In this process, hiding information is very important.

Hide information and confuse your opponents

In real-life scenarios, people will also use other means to win, such as Bluffing.

As "the father of game theory" von Neumann described: "Real life is full of 'bluffing', 'little tricks of deception' and 'guessing what others think I am going to do'." "

"Red Eyes and Blue Eyes Suicide Problem" by Terence Tao: I know, I know he knows, I know he knows he knows...

In this regard, DeepNash is no less impressive.

The research team demonstrated DeepNash’s two bluffing techniques: active bluffing (positive bluffing) and passive bluffing (negative bluffing).

The so-called active bluffing is to pretend that one's chess pieces are of high level to intimidate the opponent. Simply put, it's "bluffing."

In this example, DeepNash taught us a good lesson:

When playing against human players (red team), DeepNash (blue team) sacrificed 7 ( At the cost of other pieces such as Major) and 8 (Colonel), find out the opponent's 10 (Marshal), 9 (General), an 8 and two 7s.

At this point, DeepNash (blue side) has found many of the opponent's most powerful pieces, and at the same time, hidden its own key pieces.

At first glance, DeepNash seems to be at a clear disadvantage: its 7 and 8 are out, but the human opponent retains all pieces ranked 7 and above.

However, DeepNash had the last laugh - relying on the reliable information it had obtained from the opponent's top management, it estimated its winning probability to be 70%.

In the end, it did win.

The "art" of bluffing

In poker, excellent players will play psychological warfare to intimidate the other party even when we are weak. .

DeepNash also learned this bluffing strategy - negative bluffing.

This is what we often call "pretend to be a pig and eat the tiger": Disguise your high-level chess pieces as low-level chess pieces, wait until the opponent is fooled, and then win them in one fell swoop.

In the following example, DeepNash uses 2 (very weakly a scout) to chase the opponent's 8 who reveals his identity.

The human opponent determines based on this that the pursuer is likely to be 10, and therefore attempts to lure it into the spy's ambush circle.

In the end, DeepNash succeeded in using small chess piece 2 to destroy the opponent's key chess piece spy.

The human player (red side) is convinced that the unknown piece chasing his 8 must be DeepNash's 10 (because at this time DeepNash has already lost his only 9

The following are four complete game videos between DeepNash and (anonymous) human experts, Game 1, Game 2, Game 3, and Game 4. Click in and you will get more surprises. (Video Addresses are listed in the cited material)

I was surprised by DeepNash’s level of play. I’ve never heard of an artificial Stratego player approaching the level required to win against a human player.

But after playing against DeepNash personally, I am not surprised that it ranked in the top 3 on Gravon. My prediction: If it is allowed to participate in the human world championship, it will do very well.

——Vincent de Boer, co-author of the paper, former Stratego world champion

The future is immeasurable

It can be seen that this novel R-NaD method of DeepMind can be directly applied to perfect Or other two-player zero-sum games with imperfect information.

R-NaD has the potential to transcend the two-player game setting and solve large-scale real-world problems.

In addition, R-NaD is also expected to Unlock new applications of AI in other areas with different goals.

For example, in scale optimization of traffic management, where people do not know the intentions of others or environmental information, R-NaD is expected to optimize the driver's travel time.

The human world is naturally unpredictable.

Now, people have created a general AI system that is robust in the face of uncertainty, which makes us more confident about human beings. The future is full of imagination.

Reference:

http://www.science.org/doi/10.1126/science.add4679

https://www. nature.com/articles/d41586-022-04246-7

https://www.deepmind.com/blog/mastering-stratego-the-classic-game-of-imperfect-information

https://youtu.be/HaUdWoSMjSY

https://youtu.be/L-9ZXmyNKgs

https://youtu.be/EOalLpAfDSs

https ://youtu.be/MhNoYl_g8mo

The above is the detailed content of DeepMind is back on Science! AI 'Wall Breaker' plays tricks to defeat human masters. For more information, please follow other related articles on the PHP Chinese website!

Related articles

See more- Technology trends to watch in 2023

- How Artificial Intelligence is Bringing New Everyday Work to Data Center Teams

- Can artificial intelligence or automation solve the problem of low energy efficiency in buildings?

- OpenAI co-founder interviewed by Huang Renxun: GPT-4's reasoning capabilities have not yet reached expectations

- Microsoft's Bing surpasses Google in search traffic thanks to OpenAI technology