Is our society ready to let AI make decisions?

- WBOY (forwarded)

- 2023-04-09 20:41:16

With the accelerated development of technology, artificial intelligence (AI) plays an increasingly important role in the decision-making process. Humans increasingly rely on algorithms to process information, recommend certain actions, and even take action on their behalf.

But if AI really helps us make decisions, or even makes them for us, especially decisions that involve subjective, moral, and ethical judgments, would you accept it?

Recently, a research team from Hiroshima University explored human reactions to the introduction of artificial intelligence decision-making. Specifically, by studying human interactions with self-driving cars, they explored the question: "Is society ready for AI ethical decision-making?"

The team published their findings in the Journal of Behavioral and Experimental Economics on May 6, 2022.

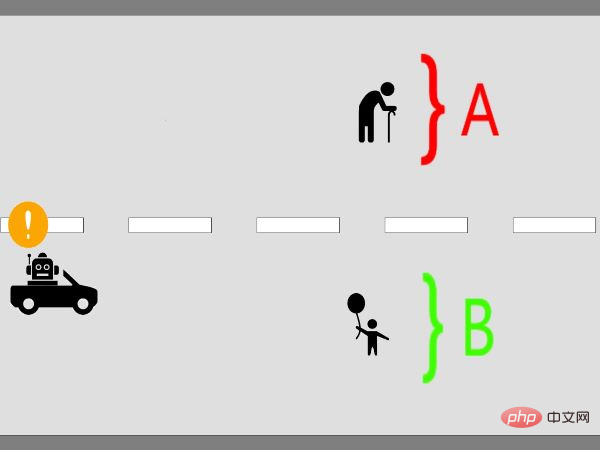

In the first experiment, researchers presented 529 human subjects with ethical dilemmas that drivers might face. In the scenario created by the researchers, a driver had to decide whether to crash the car into one group of people or another; a collision was inevitable. That is, the accident would seriously harm one group of people but save the lives of the other.

Human subjects in the experiment had to rate the decisions of a car driver, who could be a human or an artificial intelligence. With this, the researchers aimed to measure the biases people might have toward AI's ethical decision-making.

In the second experiment, 563 human subjects answered a series of questions from the researchers, designed to determine how people would react to AI ethical decision-making once it becomes part of society.

This experiment had two scenarios. One involved a hypothetical government that had already decided to allow self-driving cars to make moral decisions; the other allowed subjects to "vote" on whether to allow self-driving cars to make moral decisions. In both cases, subjects could choose to support or oppose the decisions made by the technology.

The second experiment was designed to test the effects of two alternative ways of introducing artificial intelligence into society.

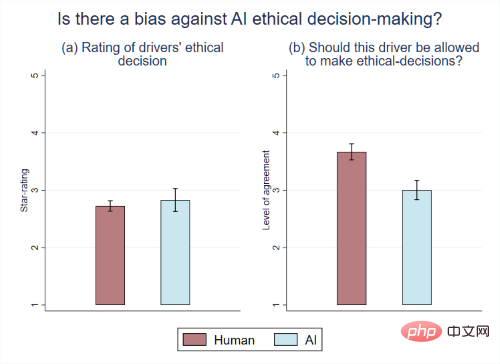

The researchers observed that when subjects were asked to evaluate the moral decisions of human or AI drivers, they had no clear preference for either. However, when subjects were asked to express their opinion on whether AI should be allowed to make ethical decisions on the road, subjects had stronger opinions about AI-driven cars.

The researchers believe the difference between the two results is due to a combination of two factors.

The first element is that many people believe society as a whole does not want artificial intelligence to make decisions related to ethics and morals, so when asked for their views on the matter, they are influenced by that belief.

"In fact, when participants were explicitly asked to distinguish their answers from society's answers, the differences between the AI and human drivers disappeared," said Johann Caro-Burnett, an assistant professor at Hiroshima University's Graduate School of Humanities and Social Sciences.

The second element is that, when this new technology is introduced into society, the consequences of allowing discussion of the topic vary from country to country. "In areas where people trust the government and have strong government institutions, information and decision-making power improve the way subjects evaluate the ethical decision-making of AI. In contrast, in areas where people distrust the government and have weak institutions, decision-making power worsens how subjects evaluate the ethical decisions of artificial intelligence," Caro-Burnett said.

"We found that society has a fear of AI ethical decision-making. However, the roots of this fear are not inherent in the individual. In fact, this rejection of AI comes from what the individual believes to be society's perception," said Shinji Kaneko, a professor at Hiroshima University's Graduate School of Humanities and Social Sciences.

Graphic | On average, people rated the ethical decisions of AI drivers no differently than those of human drivers. However, people don't want AI to make ethical decisions on the road.

So when not explicitly asked, people show no sign of bias against AI ethical decisions. Yet when asked explicitly, people expressed distaste for AI. Furthermore, with increased discussion and information on the topic, acceptance of AI improved in developed countries and worsened in developing countries.

Researchers believe that this rejection of new technology is primarily due to personal beliefs about society's opinions and is likely to apply to other machines and robots. "It is therefore important to determine how individual preferences aggregate into a social preference. Furthermore, as our results show, such conclusions must also differ across countries," Kaneko said.
