The latest deep architecture for target detection has half the parameters and is 3 times faster

- 王林 (reposted)

- 2023-04-09 11:41:03

Brief introduction

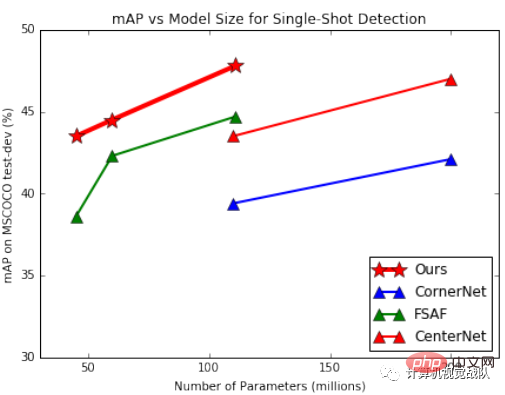

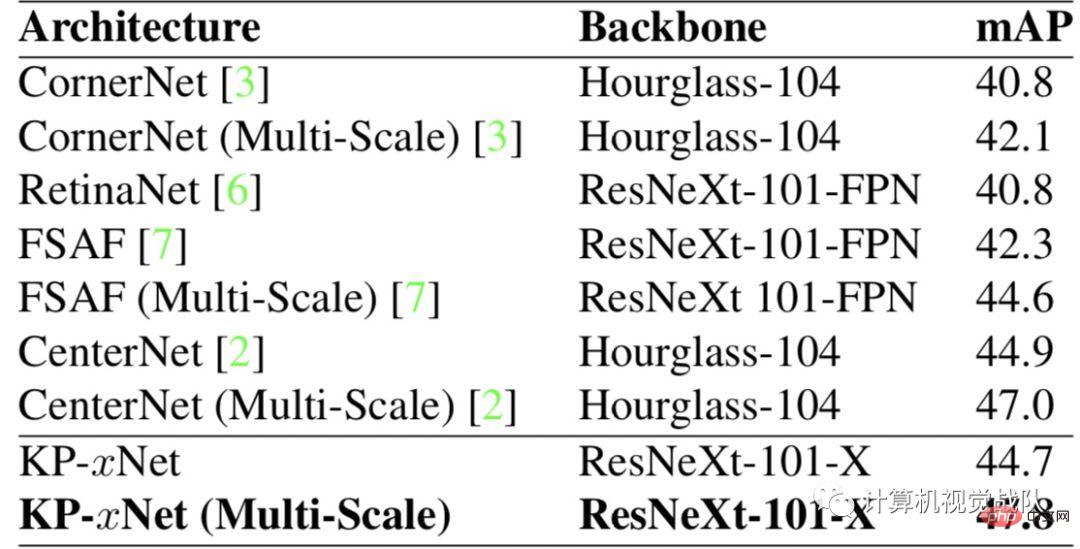

The authors propose Matrix Nets (xNets), a new deep architecture for object detection. xNets map objects of different sizes and aspect ratios into network layers such that, within each layer, objects are nearly uniform in size and aspect ratio. xNets therefore provide a size- and aspect-ratio-aware architecture. The researchers use xNets to enhance keypoint-based object detection. The new architecture achieves 47.8 mAP on the MS COCO dataset, higher than any other single-shot detector, while using half the parameters and training 3 times faster than the next best framework.

Results at a glance

As shown in the figure above, xNet far surpasses other models in parameter efficiency. FSAF is the best-performing anchor-based detector, surpassing the classic RetinaNet. The model proposed by the researchers outperforms all other single-shot architectures with a similar number of parameters.

Background and current situation

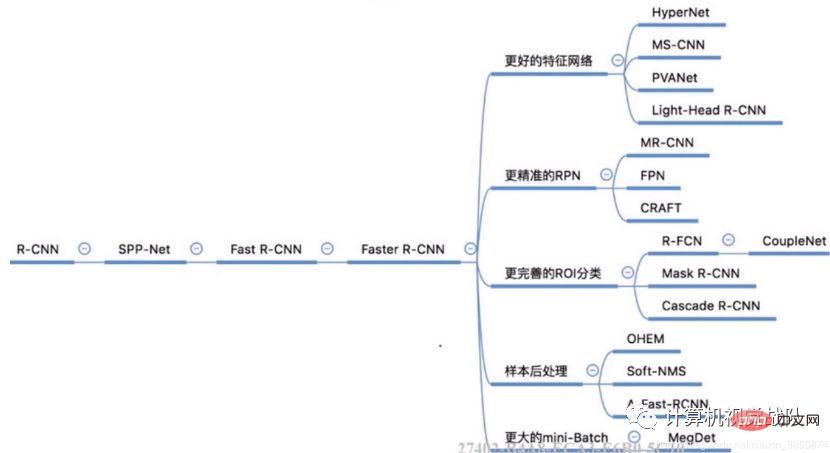

Object detection is one of the most widely studied tasks in computer vision, with many applications to other vision tasks such as object tracking, instance segmentation and image captioning. Object detection architectures can be divided into two categories: single-shot detectors and two-stage detectors. Two-stage detectors use a region proposal network to find a fixed number of object candidates, and then use a second network to predict the score of each candidate and refine its bounding box.

Common Two-stage algorithm

Single-shot detectors can in turn be divided into two categories: anchor-based detectors and keypoint-based detectors. Anchor-based detectors define many anchor bounding boxes and predict the offset and class of each anchor. The best-known anchor-based architecture is RetinaNet, which introduced the focal loss function to help correct the class imbalance among anchor bounding boxes. The best-performing anchor-based detector is FSAF, which combines anchor-based outputs with anchor-free output heads to further improve performance.
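To make the focal loss idea concrete, here is a minimal pure-Python sketch of the binary focal loss for a single prediction. The function name and argument layout are illustrative, and the defaults `alpha=0.25` and `gamma=2.0` are the commonly cited RetinaNet settings rather than values stated in this article.

```python
import math


def focal_loss(p, y, alpha=0.25, gamma=2.0):
    """Binary focal loss for one prediction (illustrative sketch).

    p: predicted probability of the positive class, assumed 0 < p < 1.
    y: ground-truth label, 0 or 1.
    """
    # p_t is the probability the model assigns to the true class.
    p_t = p if y == 1 else 1.0 - p
    # alpha_t re-weights positives versus negatives.
    alpha_t = alpha if y == 1 else 1.0 - alpha
    # (1 - p_t) ** gamma shrinks the loss of well-classified examples.
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)
```

With `gamma=0` the modulating factor disappears and the expression reduces to an alpha-weighted cross-entropy, which is why easy examples (mostly background anchors) dominate less as `gamma` grows.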

Keypoint-based detectors, on the other hand, predict top-left and bottom-right corner heatmaps and match the corners using feature embeddings. The original keypoint-based detector is CornerNet, which uses a special corner pooling layer to accurately detect objects of different sizes. Since then, CenterNet has greatly improved on the CornerNet architecture by predicting object centers as well as corners.

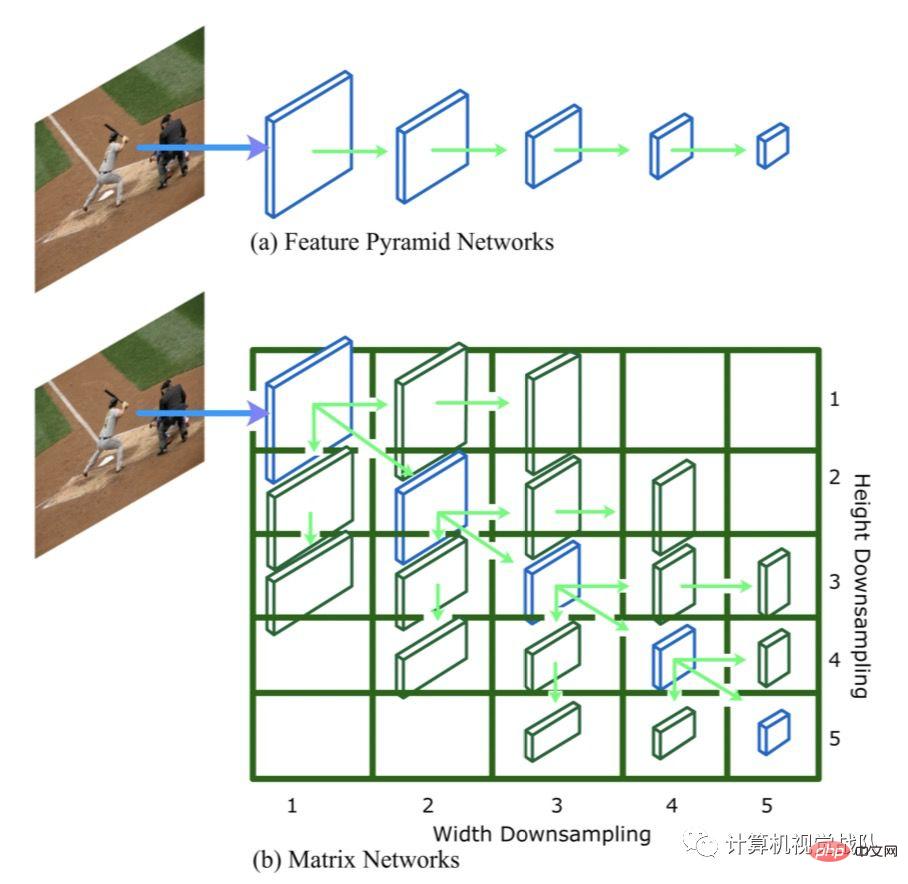

Matrix Nets

The figure above shows Matrix Nets (xNets), which use a hierarchical matrix of layers to model objects of different sizes and aspect ratios. Each entry i, j of the matrix represents a layer li,j, whose width is downsampled by 2^(i-1) and whose height is downsampled by 2^(j-1) relative to the top-left layer l1,1. Diagonal layers are square layers of different sizes, equivalent to an FPN, while off-diagonal layers are rectangular layers (this is unique to xNets). Layer l1,1 is the largest layer. The width of the layer is halved for each step to the right, and the height is halved for each step down.

For example, layer l3,4 is half the width of layer l3,3. Diagonal layers model objects whose aspect ratio is close to square, while off-diagonal layers model objects whose aspect ratio is far from square. Layers near the upper-right or lower-left corner of the matrix model objects with extremely high or low aspect ratios. Such objects are very rare, so these layers can be pruned to improve efficiency.
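The layer layout described above can be sketched in a few lines of Python. This is only an illustration: it assumes the convention of the l3,4 example (each step right halves the width, each step down halves the height), and the function name, the 5x5 matrix size, the 128x128 base resolution and the `max_offset` pruning rule are hypothetical choices, not values given in this article.

```python
def matrix_layer_shapes(base_height, base_width, size=5, max_offset=None):
    """Return {(row, col): (height, width)} for a size x size layer matrix.

    Rows and columns are 1-indexed. Moving one column to the right halves
    the width; moving one row down halves the height (assumed convention).
    Layers with |row - col| > max_offset are pruned, since they would only
    model objects with extreme aspect ratios.
    """
    shapes = {}
    for row in range(1, size + 1):
        for col in range(1, size + 1):
            if max_offset is not None and abs(row - col) > max_offset:
                continue  # pruned corner layer
            height = base_height // 2 ** (row - 1)
            width = base_width // 2 ** (col - 1)
            shapes[(row, col)] = (height, width)
    return shapes


shapes = matrix_layer_shapes(128, 128, size=5, max_offset=2)
```

Under these assumptions, `shapes[(3, 4)]` has half the width of `shapes[(3, 3)]`, and the far corners such as `(1, 5)` are absent from the result because they were pruned.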

1. Layer Generation

Generating the matrix layers is a critical step because it determines the number of model parameters. More parameters make the model more expressive but the optimization problem harder, so the researchers chose to introduce as few new parameters as possible. Diagonal layers can be obtained from different stages of the backbone or from a feature pyramid framework. The upper triangular layers are obtained by applying a series of shared 3x3 convolutions with stride 1x2 to the diagonal layers. Similarly, the lower triangular layers are obtained using shared 3x3 convolutions with stride 2x1. Parameters are shared across all downsampling convolutions to minimize the number of new parameters.
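The effect of the strided convolutions on layer shapes, and the saving from sharing their weights, can be checked with simple arithmetic. The sketch below is a hedged illustration rather than the authors' implementation: it assumes a padding of 1, reads the stride as height x width, and uses hypothetical helper names and a hypothetical channel count.

```python
def conv_output_size(size, kernel=3, stride=1, padding=1):
    """Standard convolution output-size formula along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def generate_off_diagonal(diagonal_shape, steps, direction):
    """Shapes produced by repeatedly applying a strided 3x3 convolution.

    direction="right": stride 1x2, height kept, width halved.
    direction="down":  stride 2x1, height halved, width kept.
    """
    height, width = diagonal_shape
    shapes = []
    for _ in range(steps):
        if direction == "right":
            width = conv_output_size(width, stride=2)
        else:
            height = conv_output_size(height, stride=2)
        shapes.append((height, width))
    return shapes


def conv_params(channels, kernel=3):
    """Weights plus biases of one conv with equal input/output channels."""
    return kernel * kernel * channels * channels + channels


# Assumption: 256 channels, one shared conv per direction.
shared = 2 * conv_params(256)
# Hypothetical alternative: a separate conv for each of 14 off-diagonal layers.
unshared = 14 * conv_params(256)
```

Starting from a 64x64 diagonal layer, two steps to the right give 64x32 and 64x16, and under these assumptions sharing needs one seventh of the downsampling parameters that per-layer convolutions would.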

2. Layer range

Each layer in the matrix models objects of a certain width and height, so we need to define the range of widths and heights of the objects assigned to each layer. The range needs to reflect the receptive field of the feature vectors in that layer. Each step to the right in the matrix effectively doubles the receptive field in the horizontal dimension, and each step down doubles it in the vertical dimension. So as we move right or down in the matrix, the range of widths or heights needs to double. Once the range for the first layer l1,1 is defined, we can use these rules to generate the ranges for the remaining matrix layers.
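The doubling rule can be written down directly. In the sketch below the base range of 24 to 48 pixels for l1,1, the half-open intervals and the function names are illustrative assumptions; only the doubling per step comes from the description above.

```python
def layer_range(row, col, base_range=(24, 48)):
    """Return ((h_min, h_max), (w_min, w_max)) for layer (row, col).

    Rows and columns are 1-indexed. Each step down doubles the height
    range; each step right doubles the width range.
    """
    lo, hi = base_range
    h_scale = 2 ** (row - 1)
    w_scale = 2 ** (col - 1)
    return (lo * h_scale, hi * h_scale), (lo * w_scale, hi * w_scale)


def assign_layer(box_height, box_width, size=5, base_range=(24, 48)):
    """Find the layer whose ranges contain the box, or None if none does."""
    for row in range(1, size + 1):
        for col in range(1, size + 1):
            (h_lo, h_hi), (w_lo, w_hi) = layer_range(row, col, base_range)
            # Half-open intervals so that each box matches at most one layer.
            if h_lo <= box_height < h_hi and w_lo <= box_width < w_hi:
                return (row, col)
    return None
```

For instance, with these assumed values a box 30 pixels tall and 100 pixels wide falls into row 1 (heights 24 to 48) and column 3 (widths 96 to 192), while a 10x10 box is smaller than every range and is assigned to no layer.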

3. Advantages of Matrix Nets

The main advantage of Matrix Nets is that they allow square convolution kernels to accurately collect information about different aspect ratios. In traditional object detection models, such as RetinaNet, a single square convolution kernel is required to output bounding boxes of different aspect ratios and scales. This is counter-intuitive because bounding boxes with different aspect ratios require different context. In Matrix Nets, since the context changes from one matrix layer to the next, the same square convolution kernel can be used for bounding boxes of different scales and aspect ratios.

Because object sizes are nearly uniform within each assigned layer, the dynamic range of width and height is smaller than in other architectures (such as FPN). Regressing the height and width of an object therefore becomes an easier optimization problem. Finally, Matrix Nets can be used as the backbone of any object detection architecture, whether anchor-based or keypoint-based, single-shot or two-stage.

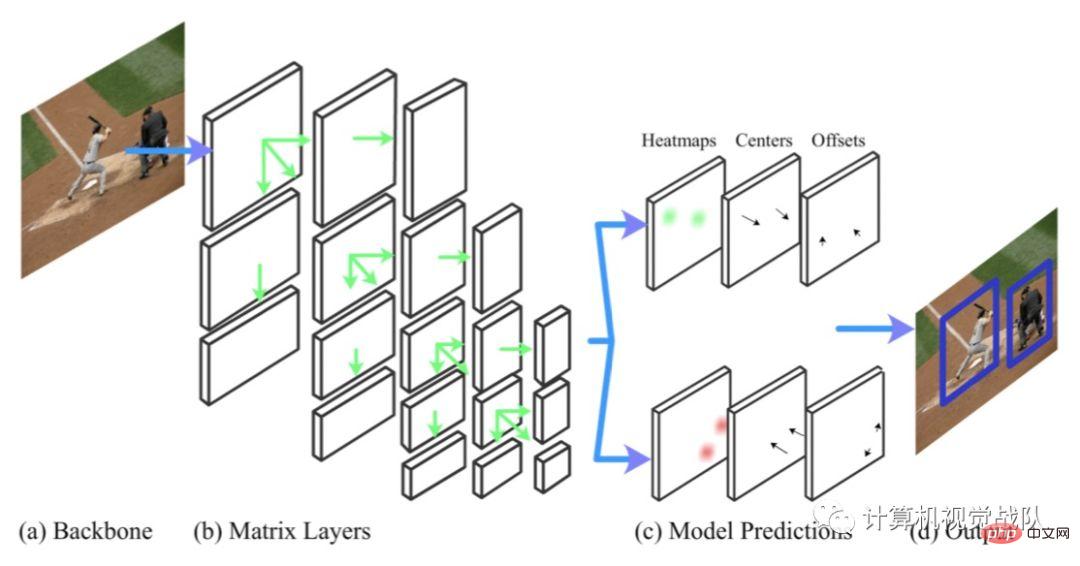

Matrix Nets for keypoint-based detection

CornerNet was proposed as an alternative to anchor-based detection: it uses a pair of corners (top left and bottom right) to predict bounding boxes. For each corner, CornerNet predicts heatmaps, offsets and embeddings.

The figure above shows KP-xNet, the keypoint-based object detection framework, which consists of four steps:

- (a-b): The xNet backbone is applied;

- (c): A shared output sub-network is applied; for each matrix layer, it predicts heatmaps and offsets for the top-left and bottom-right corners, along with center-point predictions for the objects within that layer;

- (d): The center-point predictions are used to match corners within the same layer, and the outputs of all layers are then combined with soft non-maximum suppression to produce the final output.
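Step (d) can be illustrated with a small sketch of center-based corner matching within a single layer. The data layout (dicts with `xy`, `center` and `score` keys), the distance threshold and the averaged score are all hypothetical choices made for illustration; the article does not specify them, and soft non-maximum suppression across layers is not shown.

```python
import math


def match_corners(top_lefts, bottom_rights, max_center_dist=2.0):
    """Pair corners from one layer whose predicted centers agree.

    Each corner is a dict with keys "xy" (corner position), "center"
    (the object center predicted from that corner) and "score".
    Returns a list of (x1, y1, x2, y2, score) boxes.
    """
    boxes = []
    for tl in top_lefts:
        for br in bottom_rights:
            (x1, y1), (x2, y2) = tl["xy"], br["xy"]
            if x2 <= x1 or y2 <= y1:
                continue  # bottom-right must lie below and right of top-left
            if math.dist(tl["center"], br["center"]) > max_center_dist:
                continue  # the two corners point at different objects
            # Assumed scoring rule: average of the two corner scores.
            boxes.append((x1, y1, x2, y2, (tl["score"] + br["score"]) / 2))
    return boxes


top_lefts = [
    {"xy": (10, 10), "center": (20, 20), "score": 0.9},
    {"xy": (50, 50), "center": (70, 70), "score": 0.8},
]
bottom_rights = [
    {"xy": (30, 30), "center": (20.5, 20), "score": 0.7},
    {"xy": (90, 90), "center": (70, 70), "score": 0.6},
]
boxes = match_corners(top_lefts, bottom_rights)
```

Here each top-left corner pairs only with the bottom-right corner that predicts nearly the same center, so two boxes are produced instead of the four geometrically possible pairings.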

Experimental results

The following table shows the results on the MS COCO data set:

The researchers also compared the newly proposed model with other models based on the number of parameters on different backbones. In the first figure, we find that KP-xNet outperforms all other structures at all parameter levels. The researchers believe this is because KP-xNet uses a scale- and aspect-ratio-aware architecture.

Paper address: https://arxiv.org/pdf/1908.04646.pdf
